Dr. J's Compiler and Translator Design Lecture Notes

(C) Copyright 2011-2019 by Clinton Jeffery and/or original authors where

appropriate. For use in Dr. J's Compiler classes only. Lots of material

in these notes originated with Saumya Debray's Compiler course notes from

the University of Arizona, for which I owe him a debt of thanks. Various

portions of his notes were in turn inspired by the ASU red dragon book.

lecture #1 began here

Syllabus

Yes, we went over the syllabus.

Why study compilers?

Computer scientists study compiler construction for the

following reasons:

- Experience with large-scale

applications development. Your compiler may be the largest

program you write as a student. Experience working with really big

data structures and complex interactions between algorithms will

help you out on your next big programming project.

- A shining triumph of CS theory.

It demonstrates the value of theory over the impulse to just "hack up"

a solution.

- A basic element of programming language research.

Many language researchers write compilers for the languages they design.

- Many applications have similar properties to one or more phases of

a compiler, and compiler expertise and tools can help an application

programmer working on other projects besides compilers.

CS 445 is labor intensive. This is a good thing: there is no way to

learn the skills necessary for writing big programs without this kind

of labor-intensive experience.

Some Tools we will use

Labs and lectures will discuss all of these, but if you do not know them

already, the sooner you go learn them, the better.

- C and "make".

- If you are not expert with these yet, you will be a lot closer

by the time you pass this class.

- lex and yacc

- These are compiler-writers tools, but they are useful for other

kinds of applications, almost anything with a complex file format

to read in can benefit from them.

- gdb

- If you do not know a source-level debugger well, start learning.

You will need one to survive this class.

- e-mail

- Regularly e-mailing your instructor is a crucial part of class

participation. If you aren't asking questions, you aren't doing

your job as a student.

- web

- This is where you get your lecture notes, homeworks, and labs,

and turnin all your work.

Compilers - What Are They and What Kinds of Compilers are Out There?

The purpose of a compiler is: to translate a program in some language (the

source language) into a lower-level language (the target

language). The compiler itself is written in some language, called

the implementation language. To write a compiler you have to be

very good at programming in the implementation language, and have to

think about and understand the source language and target language.

There are several major kinds of compilers:

- Native Code Compiler

- Translates source code into hardware (assembly or machine code)

instructions. Example: gcc.

- Virtual Machine Compiler

- Translates source code into an abstract machine code, for execution

by a virtual machine interpreter. Example: javac.

- JIT Compiler

- Translates virtual machine code to native code. Operates within

a virtual machine. Example: Sun's HotSpot java machine.

- Preprocessor

- Translates source code into simpler or slightly lower level source code,

for compilation by another compiler. Examples: cpp, m4.

- Pure interpreter

- Executes source code on the fly, without generating machine code.

Example: Lisp.

OK, so a pure interpreter is not really a compiler. Here are some more tools,

by way of review, that compiler people might be directly concerned with, even

if they are not themselves compilers.

You should learn any of these terms that you don't already know.

- assembler

- a translator from human readable (ASCII text) files of machine

instructions into the actual binary code (object files) of a machine.

- linker

- a program that combines (multiple) object files to make an executable.

Converts names of variables and functions to numbers (machine addresses).

- loader

- Program to load code. On some systems, different executables start at

different base addresses, so the loader must patch the executable with

the actual base address of the executable.

- preprocessor

- Program that processes the source code before the compiler sees it.

Usually, it implements macro expansion, but it can do much more.

- editor

- Editors may operate on plain text, or they may be wired into the rest

of the compiler, highlighting syntax errors as you go, or allowing

you to insert or delete entire syntax constructs at a time.

- debugger

- Program to help you see what's going on when your program runs.

Can print the values of variables, show what procedure called what

procedure to get where you are, run up to a particular line, run

until a particular variable gets a special value, etc.

- profiler

- Program to help you see where your program is spending its time, so

you can tell where you need to speed it up.

Phases of a Compiler

- Lexical Analysis:

- Converts a sequence of characters into words, or tokens

- Syntax Analysis:

- Converts a sequence of tokens into a parse tree

- Semantic Analysis:

- Manipulates parse tree to verify symbol and type information

- Intermediate Code Generation:

- Converts parse tree into a sequence of intermediate code instructions

- Optimization:

- Manipulates intermediate code to produce a more efficient program

- Final Code Generation:

- Translates intermediate code into final (machine/assembly) code

Example of the Compilation Process

Consider the example statement; its translation to machine code

illustrates some of the issues involved in compiling.

position = initial + rate * 60

|

30 or so characters, from a single line of source code, are first

transformed by lexical analysis into a sequence of 7 tokens. Those

tokens are then used to build a tree of height 4 during syntax analysis.

Semantic analysis may transform the tree into one of height 5, that

includes a type conversion necessary for real addition on an integer

operand. Intermediate code generation uses a simple traversal

algorithm to linearize the tree back into

a sequence of machine-independent three-address-code instructions.

t1 = inttoreal(60)

t2 = id3 * t1

t3 = id2 + t2

id1 = t3 |

Optimization of the intermediate code allows the four instructions to

be reduced to two machine-independent instructions. Final code generation

might implement these two instructions using 5 machine instructions, in

which the actual registers and addressing modes of the CPU are utilized.

MOVF id3, R2

MULF #60.0, R2

MOVF id2, R1

ADDF R2, R1

MOVF R1, id1

|

Reading!

-

Read the Louden text chapters 1-2. Except you may SKIP the parts that

describe the TINY project. Within the Scanning chapter, there are large

portions on the finite automata that should be CS 385 review; you may

SKIM that material, unless you don't know it or don't remember it.

-

Read Sections 3-5 of the Flex manual,

Lexical Analysis With Flex.

- Read the class lecture notes

as fast as we manage to cover topics. Please ask questions about

whatever is not totally clear. You can Ask Questions in class or

via e-mail.

Although the whole course's lecture notes are ALL available to you

up front, I generally revise each lecture's notes, making additions,

corrections and adaptations to this year's homeworks, the night before each

lecture. The best time to print hard copies of the lecture notes, if you

choose to do that, is one

day at a time, right before the lecture is given. Or just read online.

Some Resources for the class Project and a Policy Statement

Class Dev+Test Linux Machine: historically we have used a generic

Linux server like "wormulon" to do (and grade) class work. This semester

our UI CS system admin, Victor, has created a virtual machine

specifically for this class (and separate ones for many other classes).

Our machine is named "cs-445.cs.uidaho.edu". Try it out

and report any problems to me.

Unlike software engineering, the compiler class project is a solo exercise,

meant to increase your skill at programming on a larger scale than in most

classes. On the one hand it is sensible to use software engineering tools

such as revision control systems (like git) on a large project like this.

On the other hand it is not OK to share your work with your classmates,

intentionally or through stupidity. If you use a revision control system,

figure out how to make it private. Various options:

- on github you setup private repositories, either for free or cheap

- you can use revision control with a local repository. setup is easy,

but if you do this, figure out how to back up your work.

- you can figure out how to do git through ssh onto a UI CS unix

account

Initial Discussion of HW#1

This one is on a tight fuse, please hit the ground running and seek

assistance if anything hinders your progress.

lecture #2 began here

Mailbag

- The vgo.html lists += and -= on both the "in VGo" and "not in VGo"

operator lists, what up with that?

- Good catch. += and -= are pretty easy and common operators so they

are in VGo and should not be on the "not in VGo" list. Fixed.

Getting Going

Last time, we ended sort of in the middle of a description of

Homework #1.

We need to finish that, but we also need some introductory material on

lexical analysis in order to understand parts of it. So we will come

back to HW#1 before the end of class.

Overview of Lexical Analysis

A lexical analyzer, also called a scanner, typically has the

following functionality and characteristics.

What is a "token" ?

In compilers, a "token" is:

- a single word of source code input (a.k.a. "lexeme")

- an integer code that refers to a single word of input

- a set of lexical attributes computed from a single word of input

Programmers think about all this in terms of #1. Syntax checking uses

#2. Error reporting, semantic analysis, and code generation require #3. In

a compiler written in C, for each token you allocate a C struct to store (3)

for each token.

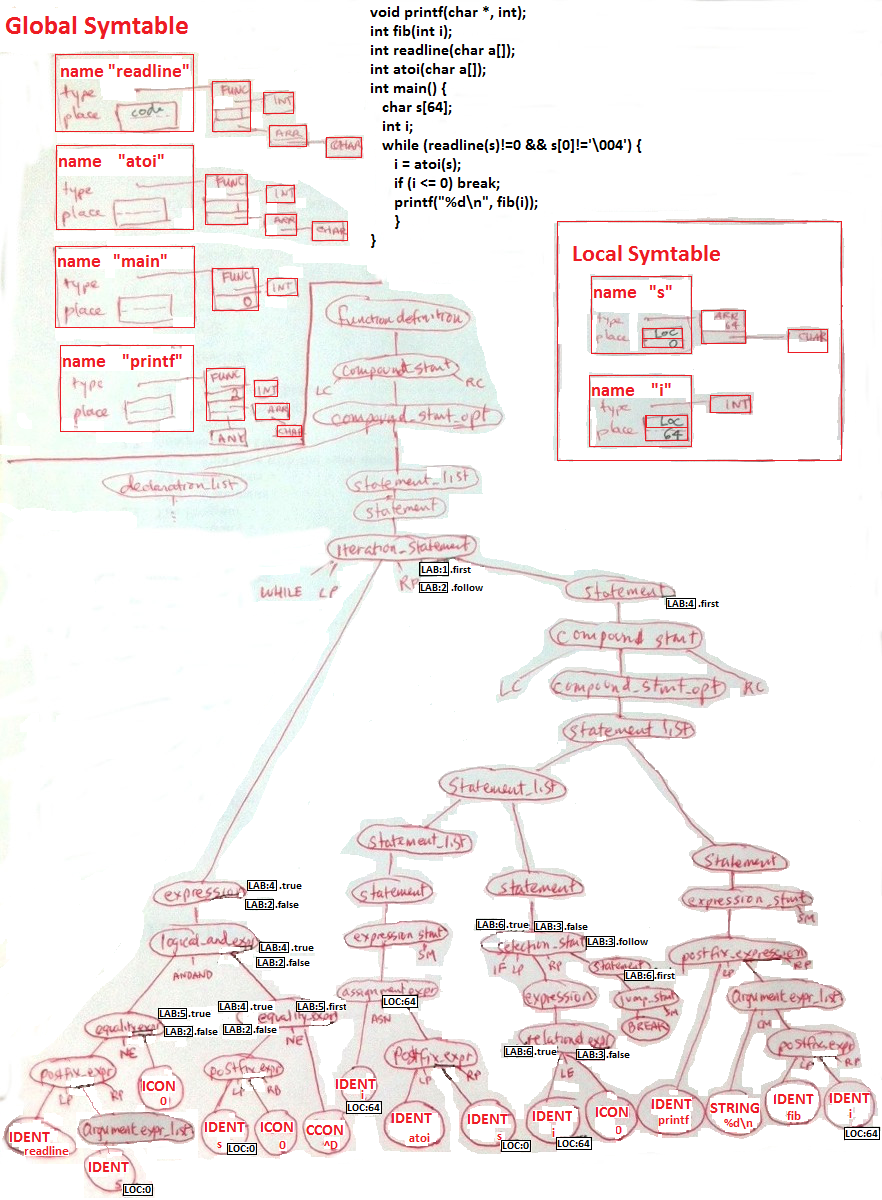

Auxiliary data structures

You were presented with the phases of the compiler, from lexical and syntax

analysis, through semantic analysis, and intermediate and final code

generation. Each phase has an input and an output to the next phase.

But there are a few data structures

we will build that survive across multiple phases: the literal table,

the symbol table, and the error handler.

- lexeme table

- a table that stores lexeme values, such as strings and variable

names, that may occur in many places. Only one copy of each

unique string and name needs to be allocated in memory.

- symbol table

- a table that stores the names defined (and visible with) each

particular scope. Scopes include: global, and procedure (local).

More advanced languages have more scopes such as class (or record)

and package.

- error handler

- errors in lexical, syntax, or semantic analysis all need a common

reporting mechanism, that shows where the error occurred (filename,

line number, and maybe column number are useful).

Reading Named Files in C using stdio

In this class you are opening and reading files. Hopefully this is review

for you; if not, you will need to learn it quickly. To do any "standard

I/O" file processing, you start by including the header:

#include <stdio.h>

This defines a data type (FILE *) and gives prototypes for

relevant functions. The following code opens a file using a string filename,

reads the first character (into an int variable, not a char, so that it can

detect end-of-file; EOF is not a legal char value).

FILE *f = fopen(filename, "r");

int i = fgetc(f);

if (i == EOF) /* empty file... */

Command line argument handling and file processing in C

The following example is from Kernighan & Ritchie's "The C Programming

Language", page 162.

#include <stdio.h>

/* cat: concatenate files, version 1 */

int main(int argc, char *argv[])

{

FILE *fp;

void filecopy(FILE *, FILE *);

if (argc == 1)

filecopy(stdin, stdout);

else

while (--argc > 0)

if ((fp = fopen(*++argv, "r")) == NULL) {

printf("cat: can't open %s\n", *argv);

return 1;

}

else {

filecopy(fp, stdout);

fclose(fp);

}

return 0;

}

void filecopy(FILE *ifp, FILE *ofp)

{

int c;

while ((c = getc(ifp)) != EOF)

putc(c, ofp);

}

Warning: while using and adapting the above code would be fair game in this

class, the yylex() function is very different than the filecopy() function!

It takes no parameters! It returns an integer every time it finds a token!

So if you "borrow" from this example, delete filecopy() and write yylex()

from scratch. Multiple students have fallen into this trap before you.

A Brief Introduction to Make

It is not a good idea to write a large program like a compiler as a single

source file. For one thing, every time you make a small change, you would

need to recompile the whole program, which will end up being many thousands

of lines. For another thing, parts of your compiler may be generated by

"compiler construction tools" which will write separate files. In any case,

this class will require you to use multiple source files, compiled

separately, and linked together to form your executable program. This

would be a pain, except we have "make" which takes care of it for us.

Make uses an input file named "makefile", which stores in ASCII text form

a collection of rules for how to build a program from its pieces. Each

rule shows how to build a file from its source files, or dependencies.

For example, to compile a file under C:

foo.o : foo.c

gcc -c foo.c

The first line says to build foo.o you need foo.c, and the second line,

which must being with a tab, gave a command-line to

execute whenever foo.o should be rebuilt, i.e. when it is missing or

when foo.c has been changed and need to be recompiled.

The first rule in the makefile is what "make" builds by default, but

note that make dependencies are recursive: before it checks whether

it needs to rebuild foo.o from foo.c it will check whether foo.c needs

to be rebuilt using some other rule. Because of this post-order

traversal of the "dependency graph", the first rule in your makefile

is usually the last one that executes when you type "make". For a

C program, the first rule in your makefile would usually be the

"link" step that assembles objects files into an executable as in:

compiler: foo.o bar.o baz.o

gcc -o compiler foo.o bar.o baz.o

There is a lot more to "make" but we will take it one step at a time.

You can read or skim the

GNU make

manual, particularly section 2, to learn more about make.

You can find useful on-line documentation

on "make" (manual page, Internet reference guides, etc) if you look.

lecture #3 began here

Mailbag

- I've heard of malloc(), but I haven't had any real experience

working with it.

- malloc(), calloc() and realloc() are a flexible memory management

API for C that corresponds roughly to

new keyword in C++.

They let you

allocate memory generically by # of bytes, independent of the type

system. This capability is powerful but dangerous.

- My experience with flex and bison was way back in the

Programming Languages course.

- Same goes for most everybody; this background is what is expected.

You are to read and learn flex and bison from scratch if you don't

remember it. Your reading assignment should be pretty well finished

by now, and you should be ready for me to lecture on flex. We will

teach what is needed of them for this course, in this course.

- What should the lexical analyzer look like? where do I start?

- Homework #1 is about learn to use a declarative language called

Flex which does almost all the work for you. The only design

issue is how does it interact with the rest of the compiler, i.e.

its public interface. This is partly hardwired/designed for you

by flex, your only customization option is how to make token

information available to the later phases of the compiler.

- How should our output be visible?

- One human readable output line, per token, as shown in hw1.html

Build the linked list first, then walk it (visit all nodes) to

print the output. Figure out how to do this so output is in the

correct order and not reversed!

- Are there any comments in VGo?

- Yes, VGo supports //. You are also required to recognize, handle,

and emit an error message if you find any /* ... */ comments.

- You mention storing the int and double as binary. That just means

storing them in int and double variables, correct?

- It means for constants you have to convert from the lexeme string

that actually appears in the source code to the value (int, double)

and then store the result in the corresponding lexical attribute

variable.

- When do you use

extern and when do you use

#include in C programming?

-

extern can be done without an #include,

to tell one module

about global variables defined in another module. But if you are

going to share that extern with multiple modules, it

is best to put it in an #include.

More generally, use #include in order to share types,

externs, function prototypes,

and symbolic #define's across multiple files. That is all.

No code, which is to say, no function bodies.

- Can I add parameters to

yylex()?

- No, you can't add your own parameters,

yylex() is a

public interface.

You might be tempted to return a token structure pointer, or

add some parameters to tell it what filename it is reading from.

But you can't. Leave yylex()'s interface alone,

the parser will call it with its current interface.

- Do you want us to have a .h file for enumerating all the different

kind of tokens for HW 1? I was looking into flex and bison and it

looks like bison creates a tab.h file that does this automatically.

- Yes. In HW1 you create a .h file for these defines; plan to throw it

away in favor of the one Bison creates for you in HW#2.

- Are you going to provide us the list of tokens required, or the .h file?

- No, I am providing a language reference, from which you are to make the

list. But by asking the right questions, you are making me add details

to the language reference. Out of mercy, I went and dug out the list

of integer codes used in an early version of the Go compiler, and put it

in a vgo.tab.h that you may use. It is not

guaranteed to be complete or correct. Specifically, it does not seem to

include built-in type names, which almost surely have to be distinguished

from identifiers (variable names)...

- Will you always call "make" on our submissions?

- Yes. I expect you to use make and provide a makefile in each

homework. Turn in the whole source, not just "changed" or

"new" files for some assignments. My script will

unpack your .zip file by saying "unzip" in some new test directory

and then run "make" and then run your executable. If

anything goes wrong (say, you unzipping into a subdirectory the script

does not know the name of) you will lose a few points.

On the other hand, I do not want the tool-generated files

(lex.yy.c, cgram.tab.c) or .o or executables. The makefile should

contain correct dependencies to rerun flex (and later, bison) and

generate these files whenever source (.l, .y , etc.) files are changed.

Regular Expressions

The notation we use to precisely capture all the variations that a given

category of token may take are called "regular expressions" (or, less

formally, "patterns". The word "pattern" is really vague and there are

lots of other notations for patterns besides regular expressions).

Regular expressions are a shorthand notation

for sets of strings. In order to even talk about "strings" you have

to first define an alphabet, the set of characters which can

appear.

- Epsilon (ε) is a regular expression denoting the set

containing the empty string

- Any letter in the alphabet is also a regular expression denoting

the set containing a one-letter string consisting of that letter.

- For regular expressions r and s,

r | s

is a regular expression denoting the union of r and s

- For regular expressions r and s,

r s

is a regular expression denoting the set of strings consisting of

a member of r followed by a member of s

- For regular expression r,

r*

is a regular expression denoting the set of strings consisting of

zero or more occurrences of r.

- You can parenthesize a regular expression to specify operator

precedence (otherwise, alternation is like plus, concatenation

is like times, and closure is like exponentiation)

Lex/Flex Extended Regular Expressions

Although the basic regular expression operators given earlier

are sufficient to describe all regular languages,

in practice everybody uses extensions:

- For regular expression r,

r+

is a regular expression denoting the set of strings consisting of

one or more occurrences of r. Equivalent to rr*

- For regular expression r,

r?

is a regular expression denoting the set of strings consisting of

zero or one occurrence of r. Equivalent to r|ε

- The notation [abc] is short for a|b|c. [a-z] is short for a|b|...|z.

[^abc] is short for: any character other than a, b, or c.

What is a "lexical attribute" ?

A lexical attribute is a piece of information about a token. These typically

include:

| category | an integer code used to check syntax

|

| lexeme | actual string contents of the token

|

| line, column, file | where the lexeme occurs in source code

|

| value | for literals, the binary data they represent

|

Avoid These Common Bugs in Your Homeworks!

- yytext or yyinput were not declared global

- main() does not have its required argc, argv parameters!

- main() does not call yylex() in a loop or check its return value

- getc() EOF handling is missing or wrong! check EVERY all to getc() for EOF!

- opened files not (all) closed! file handle leak!

- end-of-comment code doesn't check for */

- yylex() is not doing the file reading

- yylex() does not skip multiple spaces, mishandles spaces at the front

of input, or requires certain spaces in order to function OK

- extra or bogus output not in assignment spec

- = instead of ==

lecture #4 began here

Mailbag

-

When creating the linked list I see that you have a struct token and a

struct tokenlist. Should I create my linked list this way or can I eliminate

the struct tokenlist and add a next pointer inside struct token(struct token

*next) and use that to connect my linked list?

-

The organization I specified - with two separate structs - was very

intentional. Next homework, we need the struct tokens that we allocate from

inside yylex(), but not the struct tokenlist that you allocate from outside

yylex(). You can do anything you want with the linked list structure, but

the struct token must be kept more-or-less as-is, and allocated inside

yylex() before it returns each time.

- I was wondering if we should have a different code for each keyword or just have a 'validkeyword' code and an 'invalidkeyword' code.

- Generally, you need a different code for two keywords if and when they are used in different positions in the syntax. For example, int and float are type names and are used in the same situations, but the keywords func and if, denoting the beginning of a function and the beginning of a conditional expression, have different syntax rules and need different integer codes.

Before we dive back in to regular expressions, let's waltz through some VGo,

and btw, take a peek at a possibly-perfect HW#1 makefile.

Some Regular Expression Examples

Regular expressions are the preferred notation for

specifying patterns of characters that define token categories. The best

way to get a feel for regular expressions is to see examples. Note that

regular expressions form the basis for pattern matching in many UNIX tools

such as grep, awk, perl, etc.

What is the regular expression for each of the different lexical items that

appear in C programs? How does this compare with another, possibly simpler

programming language such as BASIC? What are the corresponding rules for our

language this semester, are they the same as C?

| lexical category | BASIC | C | Go |

| operators | the characters themselves | For operators that are regular expression operators we need mark them

with double quotes or backslashes to indicate you mean the character,

not the regular expression operator. Note several operators have a

common prefix. The lexical analyzer needs to look ahead to tell

whether an = is an assignment, or is followed by another = for example.

| ??

|

| reserved words | the concatenation of characters; case insensitive |

Reserved words are also matched by the regular expression for identifiers,

so a disambiguating rule is needed.

| ??

|

| identifiers | no _; $ at ends of some; 2 significant letters!?; case insensitive | [a-zA-Z_][a-zA-Z0-9_]*

| Same a C; only difference is that starting with a Capital specifies

public members of a package.

|

| numbers | ints and reals, starting with [0-9]+ | 0x[0-9a-fA-F]+ etc.

| Go has C literals, plus imaginary/complex numbers.

|

| comments | REM.* | C's comments are tricky regexp's

| ??

|

| strings | almost ".*"; no escapes | escaped quotes

| ??

|

| what else?

| ??

|

lex(1) and flex(1)

These programs generally take a lexical specification given in a .l file

and create a corresponding C language lexical analyzer in a file named

lex.yy.c. The lexical analyzer is then linked with the rest of your compiler.

The C code generated by lex has the following public interface. Note the

use of global variables instead of parameters, and the use of the prefix

yy to distinguish scanner names from your program names. This prefix is

also used in the YACC parser generator.

FILE *yyin; /* set this variable prior to calling yylex() */

int yylex(); /* call this function once for each token */

char yytext[]; /* yylex() writes the token's lexeme to an array */

/* note: with flex, I believe extern declarations must read

extern char *yytext;

*/

int yywrap(); /* called by lex when it hits end-of-file; see below */

The .l file format consists of a mixture of lex syntax and C code fragments.

The percent sign (%) is used to signify lex elements. The whole file is

divided into three sections separated by %%:

header

%%

body

%%

helper functions

The header consists of C code fragments enclosed in %{ and %} as well as

macro definitions consisting of a name and a regular expression denoted

by that name. lex macros are invoked explicitly by enclosing the

macro name in curly braces. Following are some example lex macros.

letter [a-zA-Z]

digit [0-9]

ident {letter}({letter}|{digit})*

A friendly warning: your UNIX/Linux/MacOS Flex tool is NOT

good at handling input files saved in MS-DOS/Windows format, with

carriage returns before each newline character. Some browsers,

copy/paste tools, and text editors might add these carriage returns

without you even seeing them, and then you might end up in Flex Hell

with cryptic error messages for no visible reason. Download with

care, edit with precision. If you need to get rid of carriage returns

there are lots of tools for that. You can even build them into your

makefile. The most classic UNIX tool for that task is tr(1), the

character translation utility

lecture #5 began here

- What up with Blackboard?

- I didn't make Blackboard available until recently. It and the submission

zone entry for HW#1 should be up now. Let me know if it is not.

- One of the examples uses the reserved word float, which is not in our

list. Do I need to support the reserved word float?

- No. You should only support float64, which is proper Go.

If you spot any improper Go in any example, please point me at it so I

can fix it.

- When will our Midterm be?

- The week of October 14-18. Let's vote on whether to hold the exam on

Oct 14, 15, 16, or 17 soon, like maybe tomorrow.

- In the specification for assignment 1 it says that we should accept

.go files and if there is no extension given add a .go to the filename.

Should we still accept and run our compiler on other file extensions

that could be provided or should we return an error of some sort?

- Accept no other extensions. If any file has an extension other than .go,

you can stop with a message like: "Usage: vgo [options] filename[.go] ..."

- When I add .go to filenames, my program is messing up its subsequent

command line arguments. What up with that?

- Well, I could make an educated guess, but if this machine has putty,

why don't we take a look?

- how am i supposed to import the lexor into my main.c file?

- Do not import or #include your lexer. Instead,

link your lexer into the executable, and tell

main()

how to call it, by providing a prototype for yylex().

If yylex()

sets any global variables (it does), you'd declare those as

extern. You can do prototypes and externs in main.c,

but these things are exactly what header (.h) files were invented for.

- Is the

struct token supposed to be in our

main()? Do we use yylex()

along with other variables within lex.yy.c to fill the "struct token" with

the required information?

- Rather than overwriting a global struct each time, a pointer

to a struct token should be in

main().

Function yylex() should allocate a struct token, fill it,

and make it visible to main(), probably by assigning its

address to some global pointer variable. Function main()

should build the linked list in a loop, calling yylex() each

time through the loop. It should then print the output by looping through

the linked list.

What are the Best Regular Expressions you can Write For VGo?

| Category | Regular Expression

|

| Variable names | [a-zA-Z_][a-zA-Z0-9_]*

|

| Integer constants | "-"?[0-9]+ | "0x"[0-9A-Fa-f]+

|

| Real # Constants | [0-9]*"."[0-9]+

|

| String Constants | \"([^"\n]|("\\\""))*\"

|

| Rune Constants |

|

| Complex # Constants |

|

Q: Why consider doing some of the harder ones in Go but not in VGo?

Q: What other lexical categories in VGo might give you trouble?

Flex Body Section

The body consists of of a sequence of regular expressions for different

token categories and other lexical entities. Each regular expression can

have a C code fragment enclosed in curly braces that executes when that

regular expression is matched. For most of the regular expressions this

code fragment (also called a semantic action consists of returning

an integer that identifies the token category to the rest of the compiler,

particularly for use by the parser to check syntax. Some typical regular

expressions and semantic actions might include:

" " { /* no-op, discard whitespace */ }

{ident} { return IDENTIFIER; }

"*" { return ASTERISK; }

"." { return PERIOD; }

You also need regular expressions for lexical errors such as unterminated

character constants, or illegal characters.

The helper functions in a lex file typically compute lexical attributes,

such as the actual integer or string values denoted by literals. One

helper function you have to write is yywrap(), which is called when lex

hits end of file. If you just want lex to quit, have yywrap() return 1.

If your yywrap() switches yyin to a different file and you want lex to continue

processing, have yywrap() return 0. The lex or flex library (-ll or -lfl)

have default yywrap() function which return a 1, and flex has the directive

%option noyywrap which allows you to skip writing this function.

You can avoid a similar warning for an unused unput() function by saying

%option nounput.

Note: some platforms with working Flex installs (I am told this includes

cs-445.cs.uidaho.edu, which runs CENTOS)

might not have a flex library, neither -ll or -lfl. Using %option directives

or providing your own dummy library functions are a solution to having

no flex library -lfl available.

Lexical Error Handling

- Really, two kinds of lexical errors: nonsense, and stuff in Go that's

not in VGo.

- Include file name and line number in your error messages.

- Avoid cascading error messages -- only print the first one you see

on a given line/function/source file.

- You can write regular expressions for common errors, in order

to give a better message than "lexical error" or "unrecognized character".

(This is how you should approach stuff in Go that's not in VGo.)

lecture #6 began here

Mailbag

- Are we going to talk about Multi-Line Comments, or what?

- Sure, let's talk about multi-line comments. Go recognizes C-style

/* comments */ like this. If we let you off the hook, we will surely

see .go input files with these comments where you will emit weird bogus

error messages. So we either allow these comments, or recognize and

specifically disallow them. We could take a vote. But how do you

write the regular expression for them? The regular expression for

these may be harder than you think. Many flex books will have

sneaky answers instead of just writing the regular expression.

I am a pragmatist.

- Do we really have to write our compilers in C? That's so lame!

- Despite its grievous flaws, C is by far the most powerful and

influential programming language ever invented.

Mastering C is more valuable than learning compiler construction,

otherwise I would surely be making you write your compiler in Unicon,

which would be WAYYYY easier.

That said, I have allowed students to write their compiler in

C++ or Python on the past, usually with poor results. If you have a

compelling story, I will listen to proposals for alternatives to C.

- When I compile my homework, my executable is named a.out, not vgo!

What do I do?

- Some of you who are less familiar with Linux should read the

"manual pages" for gcc, make, etc. gcc has a -o option, that would work.

Or in your makefile you could rename the file after building it.

- Can I use flex start conditions?

- Yes, if you need to, feel free.

- Can I have an extension?

- Yeah, but the further you fall behind, the more zeroes you end up with

for assignments that you don't do.

Late homeworks are accepted with a penalty per day (includes

weekend days) except in the case of a valid excused absence.

The penalty starts at 10% per day (HW#1), and reduces by 2% per

assignment (8%/day for HW#2, 6%/day for HW#3, 4%/day for HW#4,

and 2%/day for HW#5). I reserve the right to underpenalize.

- Do you accept and regrade resubmissions?

- Submissions are normally graded by a script in a batch.

Generally, if an initial submission was fail, I might accept a

resubmission for partial credit up to a passing (D) grade. If a

submission fails for a trivial reason such as a missing file, I might

ask you to resubmit with a lighter penalty.

- Just tried to download the example VGo programs and the link is broken

- Yeah, have to create example programs before the links will exist.

Working on it. If necessary, google a bunch of Go sample programs or

write your own.

- Should the extension be .g0 or .go?

- The extension is .go. ".g0" was the extension last year, for a language

that was not based on Go syntax. If you see links I need to update feel

free to provide pointers. But refresh your web browser cache first,

and make sure you are not looking at an old copy of the class page.

- I have not been able to figure out how sscanf() will help me. Could you

point me to an example or documentation.

- Yes. Note that sscanf'ing into a double calls

for a %lg.

- What is wrong with my commandline argument code.

- If it is not that you are overwriting the existing arrays with strcat()

instead of allocating new larger arrays in order to hold new longer

strings, then it is probably that you are using sizeof() instead of

strlen().

- Can you go over using the %array vs. the standard %pointer option

and if there are any potential benefits of using %array?

I was curious to see if you could use YYLMAX in junction

with %array to limit the size of identifiers, but there is

a probably a better way.

- After yylex() returns,

the actual input characters matched are available as a string named

yytext, and the number of input symbols matched are in yyleng. But is

yytext a char * or an array of char? Usually it doesn't matter in C,

but I personally have worked on a compiler where declaring an extern

for yytext in my other modules, and using the wrong one, caused a crash.

Flex has both pointer and array implementations available via %array

and %pointer declarations, so your compiler can use either. YYLMAX

is not a Flex thing, sorry, but how can we limit

the length of identifiers? Incidentally: I am astonished, to read

claims that the Flex scanner buffer doesn't automatically increase

in size as needed, and might be limited by default to 8K or so regexes.

If you write open-ended regular expressions, but might be advisable

in this day of big memory to say something like

i=stat(filename,&st);

yyin=fopen(filename,"r");

yy_current_buffer = yy_create_buffer(yyin, st.st_size);

to set flex so that it cannot experience buffer overrun. By the way,

Be sure to

check ALL your C library calls for error returns in this class!

- The O'Reilly book recommended using Flex states instead of that big

regular expression for C comments. Is that reasonable?

- Yes, you may implement the most elegant correct answer you can

devise, not just what you see in class.

- Are we free to explore non-optimal solutions?

- I do not want to read lots of extra pages of junk code, but you are free

to explore alternatives and submit the most elegant solution you come

up with, regardless of its optimality. Note that there are some parts

of the implementation that I might mandate. For example, the symbol table

is best done as a hash table. You could use some other fancy data

structure that you love, but if you give me a linked list I will be

disappointed. Then again, a working linked list implementation would get

more points than a failed complicated implementation.

- Is it OK to allocate a token structure inside main() after yylex()

returns the token?

- No. In the next phase of your compiler, you will not call

yylex(), the Bison-generated parser will call

yylex(). There is a way for

the parser to grab your token if you've stored it in a global variable,

but there is not a way for the parser to build the token structure itself.

However you are expected to allocate the linked list nodes in main(), and

in the next homework that linked list will be discarded. Don't get attached.

- My tokens' "text" field in my linked list are all messed up when I go

back through the list at the end. What do I do?

- Remember to make a physical copy of

yytext each token,

because it overwrites itself each time it matches a regular expression

in yylex(). Typically a physical copy of a C string is

made using strdup(), which is a malloc()

followed by strcpy().

- C++ concatenates adjacent string literals, e.g. "Hello" " world"

Does our lexer need to do that?

-

No, you do not have to do it.

But if you did, can you think of a way to get the job done without too

much pain?

It could be done in the lexer, in the parser, or sneakily in-between.

Be careful to consider 3+ adjacent string literals

("Hello" " world, " "how are you" and so on)

- How do I handle escapes in svals? Do I need to worry about more than

\n \t \\ and \r?

-

You replace the two-or-more characters with a single, encoded character.

'\\' followed by 'n' become a control-J character. We need

\n \t \\ and \" -- these are ubiquitous.

You can do additional ones like \r but they are not required and

will not be tested.

- What about ++ and -- ?

- Good point. I removed them from the not implemented

Go operators section, but didn't get them put in to

the table of VGo operators. Let's do increment/decrement.

On Go's standard library functions

I started with just Println, but it is pretty hard to write toy Go

programs if you can't read in input, and Go's input is almost as bad

as Java's. As you can see, it is pretty hard to get away from Go's

love of multiple assignment. What should we do?

reader = bufio.NewReader(os.Stdin)

text, _ = reader.ReadString('\n')

|

|

Lexing Reals

C float and double constants have to have at least one digit, either

before or after the required decimal. This is a pain:

([0-9]+.[0-9]* | [0-9]*.[0-9]+) ...

You might almost be happier if you wrote

([0-9]*.[0-9]*) { return (strcmp(yytext,".")) ? REAL : PERIOD; }

Starring: C's ternary operator e1 ? e2 : e3 is an if-then-else

expression, very slick. Note that if you have to support

scientific/exponential real numbers (JSON does), you'll need a bigger regex.

Lex extended regular expressions

Lex further extends the regular expressions with several helpful operators.

Lex's regular expressions include:

- c

- normal characters mean themselves

- \c

- backslash escapes remove the meaning from most operator characters.

Inside character sets and quotes, backslash performs C-style escapes.

- "s"

- Double quotes mean to match the C string given as itself.

This is particularly useful for multi-byte operators and may be

more readable than using backslash multiple times.

- [s]

- This character set operator matches any one character among those in s.

- [^s]

- A negated-set matches any one character not among those in s.

- .

- The dot operator matches any one character except newline: [^\n]

- r*

- match r 0 or more times.

- r+

- match r 1 or more times.

- r?

- match r 0 or 1 time.

- r{m,n}

- match r between m and n times.

- r1r2

- concatenation. match r1 followed by r2

- r1|r2

- alternation. match r1 or r2

- (r)

- parentheses specify precedence but do not match anything

- r1/r2

- lookahead. match r1 when r2 follows, without

consuming r2

- ^r

- match r only when it occurs at the beginning of a line

- r$

- match r only when it occurs at the end of a line

Lexical Attributes and Token Objects

Besides the token's category, the rest of the compiler may need several

pieces of information about a token in order to perform semantic analysis,

code generation, and error handling. These are stored in an object instance

of class Token, or in C, a struct. The fields are generally something like:

struct token {

int category;

char *text;

int linenumber;

int column;

char *filename;

union literal value;

}

The union literal will hold computed values of integers, real numbers, and

strings. In your homework assignment, I am requiring you to compute

column #'s; not all compilers require them, but they are easy. Also: in

our compiler project we are not worrying about optimizing our use of memory,

so am not requiring you to use a union.

lecture #7 began here

Mailbag

- Does VGo use a colon operator? The spec says no, but then it says yes.

- At present colon is not in VGo, thanks for the catch.

- Does HW#1 expect semi-colon insertion? It is not mentioned in the hw1

description but is in the VGo spec.

- We will need semi-colon insertion for HW#2 but it is not required for HW#1. Let's discuss.

- I was trying to come up with a regular expression for runes that are accepted in VGo.

I am having trouble finding what a rune looks like, and especially what the difference looks like for VGo vs Go.

Do you have any examples of Go vs VGo runes?

- The full Go literals spec is here.

VGo rune literals are basically C/C++ char literals. A VGo lexical

analyzer might want to start with a regex for a char literal, and then

add regex'es for things that are legal Go and not legal VGo. Here are

examples. There are also octal and hex escapes legal in Go but not in VGo.

| Regex | Interpretation

|

|---|

"'"[^\\\n]"'" | initial attempt at normal non-escaped runes

|

"'\\"[nt\\']"'" | a few legal VGo escaped runes

|

"'\\"[abfrv]"'" | legal in Go not in VGo escaped runes

|

"'\\u"[0-9a-fA-F]{4}"'" | legal in Go not in VGo

|

"'\\U"[0-9a-fA-F]{8}"'" | legal in Go not in VGo

|

- You mentioned that the next homework assignment, we won't be calling

yylex() from main() (which is why you previously

mentioned you cannot allocate the token structure in main()).

I have followed that rule,

but I question how will linked lists be set up in Homework #2 then?

- In HW#2 the linked list will be subsumed (that is, replaced) by you

building a tree data

structure. If you built a linked list inside

yylex(), that

would be a harmless waste of time and space and could be left in place.

If you malloc'ed the token structs inside yylex() but

built the linked list in your main(), your linked list

will just go away in HW#2 when we modify main() to call

the Bison parser function yyparse() instead of the loop

that repeatedly calls yylex().

- Can you test my scanner and see if I get an "A"?

- No.

- Can you post tests so I can see if my scanner gets an "A"?

- See these vgo sample files.

If you share additional tests that you devise, for example

when you have questions, I will add them to this collection

for use by the class.

- So if I run OK on these files, do I get an "A"?

- Maybe.

You should devise "coverage tests" to hit all described features.

-

Are we required to be using a lexical analysis error function lexerr()?

-

-

Whether you have a helper function with that particular name is up to you.

- You should report lexical errors in a manner that is helpful to the user.

Include line #, filename, and nature of the error if possible.

- Many lexical errors could consist of "Go token X is not legal in vgo".

- You are allowed to stop with an error exit status when you find an error.

-

The HW1 Specification says we are to use at least 2 separately compiled

.c files. Does Flex's generated lex.yy.c count as one of them,

or are you looking for yet another .c file, aside from lex.yy.c?

-

lex.yy.c counts. You may have more, but you should at least have a lex.yy.c

or other lex-compatible module, and a main function in a separate .c file

- For numbers, should we care about their size? What if an integer in

the .go file is greater than 2^64 ?

- We could ask: what does the Go compiler do. Or we could just say:

good catch, the VGo compiler would ideally range check and emit an error

if a value that doesn't fit into 64-bits occurs, for either the integer

or (less likely) float64 literals. Any ideas on how to detect an out

of range literal?

- I was using valgrind to test memory leaks and saw that there is a

leak-check=full option. Should I be testing that as well, or

just standard valgrind output with no options?

- You are welcome to use valgrind's memory-leak-finding capabilities, but

you are only being graded on whether your compiler performs illegal reads or

writes, including reads from uninitialized memory.

Playing with a Real Go Compiler

One way to tell what your compiler should do in VGo is to compare it with

what a real Go compiler does on any given input. I think we will have one

of these on the cs-445 machine for you to play with soon. I played with

one and immediately learned some things:

What to do about Go's standard library functions, cont'd

We will do a literal, minimalist interpretation of what is necessary

to support functions like reader.ReadString(). VGo will support two kinds of

calls: ones that return a single value, and ones that return a single value

plus a boolean flag that indicates whether the function succeeded or failed.

Flex Manpage Examplefest

To read a UNIX "man page", or manual page, you type "man command"

where command is the UNIX program or library function you need information

on. Read the man page for man to learn more advanced uses ("man man").

It turns out the flex man page is intended to be pretty complete, enough

so that we can draw some examples from it. Perhaps what you should figure

out from these examples is that flex is actually... flexible. The first

several examples use flex as a filter from standard input to standard

output.

- Line Counter/Word Counter

int num_lines = 0, num_chars = 0;

%%

\n ++num_lines; ++num_chars;

. ++num_chars;

%%

main()

{

yylex();

printf( "# of lines = %d, # of chars = %d\n",

num_lines, num_chars );

}

- Toy compiler example

/* scanner for a toy Pascal-like language */

%{

/* need this for the call to atof() below */

#include <math.h>

%}

DIGIT [0-9]

ID [a-z][a-z0-9]*

%%

{DIGIT}+ {

printf( "An integer: %s (%d)\n", yytext,

atoi( yytext ) );

}

{DIGIT}+"."{DIGIT}* {

printf( "A float: %s (%g)\n", yytext,

atof( yytext ) );

}

if|then|begin|end|procedure|function {

printf( "A keyword: %s\n", yytext );

}

{ID} printf( "An identifier: %s\n", yytext );

"+"|"-"|"*"|"/" printf( "An operator: %s\n", yytext );

"{"[^}\n]*"}" /* eat up one-line comments */

[ \t\n]+ /* eat up whitespace */

. printf( "Unrecognized character: %s\n", yytext );

%%

main( argc, argv )

int argc;

char **argv;

{

++argv, --argc; /* skip over program name */

if ( argc > 0 )

yyin = fopen( argv[0], "r" );

else

yyin = stdin;

yylex();

}

On the use of character sets (square brackets) in lex and similar tools

A student recently sent me an example regular expression for comments that read:

COMMENT [/*][[^*/]*[*]*]]*[*/]

One problem here is that square brackets are not parentheses, they do not nest,

they do not support concatenation or other regular expression operators. They

mean exactly: "match any one of these characters" or for ^: "match any one

character that is not one of these characters". Note also that you

can't use ^ as a "not" operator outside of square brackets: you

can't write the expression for "stuff that isn't */" by saying (^ "*/")

Finite Automata

Efficiency in lexical analyzers based on regular expressions is all about

how to best implement those wonders of CS 385: the finite automata. Today

we briefly review some highlights from theory of computation with an eye

towards implementation. Maybe I will accidentally describe something

differently than how you heard it before.

A finite automaton (FA) is an abstract, mathematical machine, also known as a

finite state machine, with the following components:

- A set of states S

- A set of input symbols E (the alphabet)

- A transition function move(state, symbol) : new state(s)

- A start state S0

- A set of final states F

The word finite refers to the set of states: there is a fixed size

to this machine. No "stacks", no "virtual memory", just a known number of

states. The word automaton refers to the execution mode: there is

no brain, not so much as a instruction set and sequence of instructions.

The entire logic is just a hardwired short loop that executes the same

instruction over and over:

while ((c=getchar()) != EOF) S := move(S, c);

What this "finite automaton algorithm" lacks in flexibility,

it makes up in speed.

lecture #8 began here

Mailbag

- My HW#1 grade sucked and I worked quite a bit on it.

- Yes. Let's discuss the grading scale. First, here's the distribution

of HW#1 grades:

10 10 10

9 9 9 9 9

8 8

7 7 7 7

6 6 6

5 5

4 4

1 1 1 1

- You are graded relative to your peers

- I do not use a 90/80/70/60 scale

- If your score was 6+ you are "fine"

- If your score was below 6, you could (and maybe should) fix and resubmit

for partial credit.

- Excused absences and negotiated circumstances may allow you to resubmit

or submit late, so long as that isn't abused.

- My C compiles say "implicit declaration of function"

- The C compiler requires a prototype (or actual function definition)

before it sees any calls to each function, in order to generate

correct code. On 64-bit platforms, treat this warning as an error.

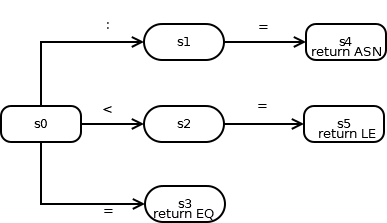

DFAs

The type of finite automata that is easiest to understand and simplest to

implement is called a deterministic finite

automaton (DFA). The word deterministic here refers to the return

value of

function move(state, symbol), which goes to at most one state.

Example:

S = {s0, s1, s2}

E = {a, b, c}

move = { (s0,a):s1; (s1,b):s2; (s2,c):s2 }

S0 = s0

F = {s2}

Finite automata correspond in a 1:1 relationship to transition diagrams;

from any transition diagram one can write down the formal automaton in

terms of items #1-#5 above, and vice versa. To draw the transition diagram

for a finite automaton:

- draw a circle for each state s in S; put a label inside the circles

to identify each state by number or name

- draw an arrow between Si and Sj, labeled with x

whenever the transition says to move(Si, x) : Sj

- draw a "wedgie" into the start state S0 to identify it

- draw a second circle inside each of the final states in F

The Automaton Game

If I give you a transition diagram of a finite automaton, you can hand-simulate

the operation of that automaton on any input I give you.

DFA Implementation

The nice part about DFA's is that they are efficiently implemented

on computers. What DFA does the following code correspond to? What

is the corresponding regular expression? You can speed this code

fragment up even further if you are willing to use goto's or write

it in assembler.

state := S0

for(;;)

switch (state) {

case 0:

switch (input) {

'a': state = 1; input = getchar(); break;

'b': input = getchar(); break;

default: printf("dfa error\n"); exit(1);

}

case 1:

switch (input) {

EOF: printf("accept\n"); exit(0);

default: printf("dfa error\n"); exit(1);

}

}

Flex has extra complications. It accepts multiple regular expressions, runs

them all in parallel in one big DFA, and adds semantics to break ties. These

extra complications might be viewed as "breaking" the strict rules of DFA's,

but they don't really mess up the fast DFA implementation.

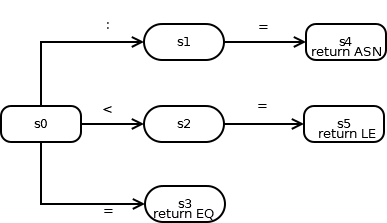

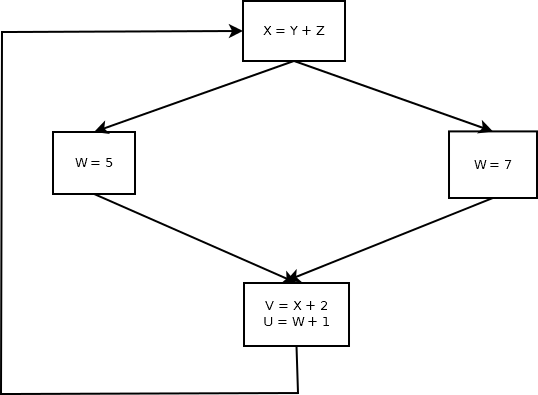

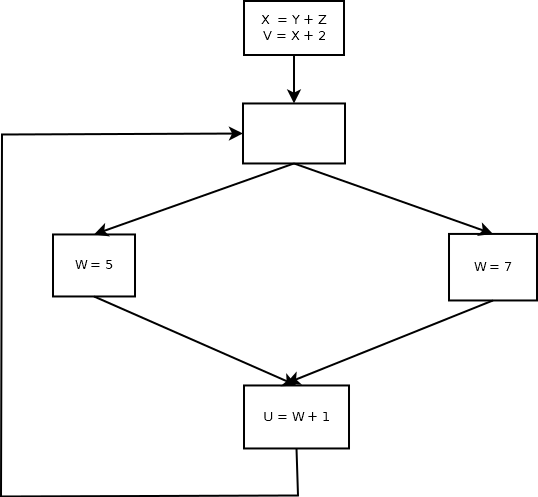

Deterministic Finite Automata Examples

A lexical analyzer might associate different final states with different

token categories. In this fragment, the final states are marked by

"return" statements that say what category to return. What is incomplete

or wrong here?

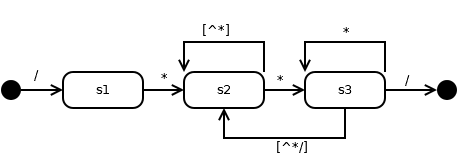

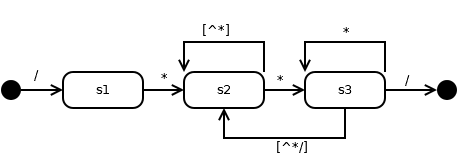

C Comments:

C Comments Redux

Nondeterministic Finite Automata (NFA's)

Notational convenience motivates more flexible machines in which function

move() can go to more than one state on a given input symbol, and some

states can move to other states even without consuming an input symbol

(ε-transitions).

Fortunately, one can prove that for any NFA, there is an equivalent DFA.

They are just a notational convenience. So, finite automata help us get

from a set of regular expressions to a computer program that recognizes

them efficiently.

NFA Examples

ε-transitions make it simpler to merge automata:

multiple transitions on the same symbol handle common prefixes:

factoring may optimize the number of states. Is this picture OK/correct?

C Pointers, malloc, and your future

For many of you success as a computer scientist may boil down to what it

will take for you to master the concept of dynamically allocated memory,

and whether you are willing to do that. In C this means pointers and the

malloc() family of functions. Here are some tips:

- Draw "memory box" pictures of your variables. Pencil and paper

understanding of memory leads to correct running programs.

- Always initialize local pointer variables. Consider this code:

void f() {

int i = 0;

struct tokenlist *current, *head;

...

foo(current)

}

Here, current is passed in as a parameter to foo, but it is a

pointer that hasn't been pointed at anything. I cannot tell you how many

times I personally have written bugs myself or fixed bugs in student code,

caused by reading or writing to pointers that weren't pointing at anything

in particular. Local variables that weren't initialized point at random

garbage. If you are lucky this is a coredump, but you might not be lucky,

you might not find out where the mistake was, you might just get a wrong answer.

This can all be fixed by

struct tokenlist *current = NULL, *head = NULL;

- Avoid this common C bug:

struct token *t = (struct token *)malloc(sizeof(struct token *)));

This compiles, but causes coredumps during program execution. Why?

- Check your

malloc() return value to be sure it is not

NULL. Sure, modern programs have big memories so you think they will "never

run out of memory". Wrong. malloc() can return NULL even on

big machines. Operating systems often place limits on memory far beneath

the hardware capabilities. wormulon (or cs-course42) is likely a conspicuous

example. Machine shared across 40 users? You may have a lower memory

limit than you think.

NFA examples - from regular expressions

Can you draw an NFA corresponding to the following?

(a|c)*b(a|c)*

(a|c)*|(a|c)*b(a|c)*

(a|c)*(b|ε)(a|c)*

Regular expressions can be converted automatically to NFA's

Each rule in the definition of regular expressions has a corresponding

NFA; NFA's are composed using ε transitions. This is called

"Thompson's construction" ).

We will work

examples such as (a|b)*abb in class and during lab.

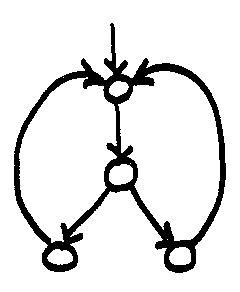

- For ε, draw two states with a single ε transition.

- For any letter in the alphabet,

draw two states with a single transition labeled with that letter.

- For regular expressions r and s, draw r | s

by adding a new start state with ε transitions to the start

states of r and s, and a new final state with ε transitions

from each final state in r and s.

- For regular expressions r and s, draw rs

by adding ε transitions from the final states of r to the

start state of s.

- For regular expression r, draw r*

by adding new start and final states, and ε transitions

- from the start state to the final state,

- from the final state back to the start state,

- from the new start to the old start and from the old final

states to the new final state.

- For parenthesized regular expression (r) you can use the NFA for r.

lecture #9 began here

Mailbag

- Go has four different kinds of literal constants, but for HW#2,

the grammar one thing, LLITERAL, what up?

- You are correct. If you return four different codes for four different

types of literals, you have to modify the grammar to replace LLLITERAL

with a grammar rule that allows your four integer codes. Alternatively,

you can have your four (or more) different flex regular expressions for

different kinds of literal constants, all return the LLITERAL integer.

- How do I deal with semi-colons?

- For HW#1, hopefully you just added an integer token category for them,

and returned that integer if you

saw one, even though explicit semi-colons are infrequent

in source code. For HW#2 your options are to write a grammar

that doesn't need semi-colons and works anyhow, or write a grammar that

needs semi-colons, and perform semi-colon insertion.

- What do you mean, for ival/dval/sval, by telling us to

"store binary value here"

- A binary value is the actual native representation that corresponds to

the string of ASCII codes that is the lexeme, for example what you get when

you call atoi("1234") for the token "1234".

- I am getting a lot of "unrecognized rule" errors in my .l file

- Look for problems with regular expressions or semantic actions prior to

the first reported error. If you need better diagnosis, find a way to

show me your code. One student saw these errors because they omitted

the required space between their regular expressions and their C semantic

actions.

- Do you have any cool tips to share regarding the un-escaping of special

characters?

- Copy character-by-character from the yytext into

a newly allocated array. Every escape sequence of multiple characters in yytext

represents a single character in

sval. Inside your loop copying characters from yytext into sval,

if you see a backslash in yytext, skip it and use a switch statement

on the next character. See below for additional discussion.

-

How can we represent and print out the binary value in ival and dval?

Wouldn't both ival and dval need to be char arrays types to actually display

a "binary representation"?

-

You do NOT have to convert to a binary string representation or output anything

in 0b010101010000 format.

-

Is a function name also an identifier?

-

Yes.

The Go designers probably had good reason to include semi-colons in the Go

grammar, to facilitate parsing, but they didn't want code to require them

99% of the time, so they introduced semi-colon insertion, an idea that they

borrowed from other languages.

- At a newline, semi-colon is inserted if the last token was

- identifier

- literal (number, rune, or string)

- break, continue, fallthrough, or return

- ++ -- ) ] or }

- "a semi-colon may be omitted before a closing ) or }"

What does (2) even mean? Automatic ; insertion before every ) or }?

Or just that the grammar handles an optional semi-colon in those spots?

Ways to Implement Semi-colon Insertion

- preprocessor

- you could write a pre-pass that does nothing but semi-colon insertion

- layered in between yylex() and yyparse()

- you could rename the yylex() generated by flex to be yylex2(), and

write a new yylex() that returns a semi-colon if conditions are right,

and otherwise just calls yylex2(). You'd have to have some global or

static memory for (1) what was the last token and (2) whether we saw

a newline

- within the regular expression for newline?

- not quite general enough, but you could return a semi-colon integer

when you see a newline whose previous token met the conditions

- layered inside yylex's C semantic actions

- a student figured out that one can do semi-colon insertion inside

a helper function called from each flex semantic action. The function,

if it substitutes a semi-colon for what is normally returned, has to

save/remember what it was going to return, and return it later. The

trick here is: if you inserted a semi-colon and saved your found token,

you return the saved token after you have scanned your next

token. In that case, you need to save thattoken somehow;

one way is to tell flex to back up via yyless(0).

NFA's can be converted automatically to DFA's

In: NFA N

Out: DFA D

Method: Construct transition table Dtran (a.k.a. the "move function").

Each DFA state is a set of

NFA states. Dtran simulates in parallel all possible moves N can make

on a given string.

Operations to keep track of sets of NFA states:

- ε_closure(s)

- set of states reachable from state s via ε

- ε_closure(T)

- set of states reachable from any state in set T via ε

- move(T,a)

- set of states to which there is an NFA transition from states in T on symbol a

NFA to DFA Algorithm:

Dstates := {ε_closure(start_state)}

while T := unmarked_member(Dstates) do {

mark(T)

for each input symbol a do {

U := ε_closure(move(T,a))

if not member(Dstates, U) then

insert(Dstates, U)

Dtran[T,a] := U

}

}

HW #1 Tips

These comments are based on historical solutions. I learned a lot from my

older siblings when I was young. Consider this your opportunity to learn

from your Vandal forebears' mistakes.

- better solutions' lexer actions looked like

...regex... { return token(TERMSYM); }

-

where token() allocates a token structure, sets a global variable to

point to it, and returns the same integer category that it is passed

from yylex(), so yylex() in turn returns this value.

- Put in enough line breaks.

-

Use <= 80 columns in your code, so that it prints readably.

- Comment non-trivial helper functions. Comment non-trivial code.

- Comment appropriate for a CS professional reader, not a newbie tutorial.

I know what i++ does, you do not have to tell me.

- Do not leave in commented-out debugging code or whatever.

- I might miss, and misgrade, your good output if I can't see it.

- Fancier formatting might calculate field widths from actual data

and use a variable to specify field widths in the printf.

- You don't

have to do this, but if you want to it is not that hard.

- Remind yourself of the difference between NULL and '\0' and 0

- NULL is used for pointers. The NUL byte '\0' terminates strings. 0

is a different size from NULL on many 64-bit compilers. Beware.

- Avoid O(n2) or worse, if at all possible

- It is possible to write bad algorithms that work, but it is better

to write good algorithms that work.

- Avoid big quantities of duplicate code

- You will have to use and possibly extend this code all semester.

- Use a switch when appropriate instead of long chain of if-statements

- Long chains of if statements are actually slow and less readable.

- On strings, allocate one byte extra for NUL.

- This common 445 problem causes valgrind trouble, memory violations etc.

- On all pointers, don't allocate and then just point the pointer

someplace else

- This common student error results in, at least, a memory leak.

- Don't allocate the same thing over and over unless copies may need

to be modified.

- This is often a performance problem.

- Check all allocations and fopen() calls for NULL return (good to have helper functions).

- C library functions can fail. Expect and check for that.

- Beware losing the base pointer that you allocated.

- You can only free()

if you still know where the start of what you allocated was.

- Avoid duplicate calls to strlen()

- especially in a loop! (Its O(n2))

- Use strcpy() instead of strncpy()

- unless you are really copying only part of a string, or

copying a string into a limited-length buffer.

- You can't

malloc() in a global initializer

-

malloc() is a runtime allocation from a memory

region that does not

exist at compile or link time. Globals can be initialized, but not to

point at memory regions that do not exist until runtime.

- Don't use raw constants like 260

- use symbolic names, like LEFTPARENTHESIS or LP

- The vertical bar (|) means nothing inside square brackets!

- Square brackets are an implicit shortcut for a whole lot of ORs

- If you don't allocate your token inside yylex() actions...

- You'll have to go back and do it, you need it for HW#2.

- If your regex's were broken

- If you know it, and were lazy, then fix it. If you don't know it,

then good luck on the midterm and/or final, you need to learn these,

and devise some (hard) tests!

On resizing arrays in C

The sval attribute in the homework is a perfect example of a problem which a

Business (MIS) major might not be expected to solve well, but a CS major

should be able to do by the time they graduate. This is not to encourage

any of you to consider MIS, but rather, to encourage you to learn how to

solve problems like these.

The problem can be summarized as: step through yytext, copying each character

out to sval,

looking for escape sequences.

Space allocated with malloc() can be increased in size by realloc().

realloc() is awesome. But, it COPIES and MOVES the old chunk of

space you had to the new, resized chunk of space, and frees the old

space, so you had better not have any other pointers pointing at

that space if you realloc(), and you have to update your pointer to

point at the new location realloc() returns.

There is one more problem: how do we allocate memory for sval, and how big

should it be?

- Solution #1: sval = malloc(strlen(yytext)+1) is very safe, but wastes

space.

- Solution #2: you could malloc a small amount and grow the array as

needed.

sval = strdup("");

...

sval = appendstring(sval, yytext[i]); /* instead of sval[j++] = yytext[i] */

where the function appendstring could be:

char *appendstring(char *s, char c)

{

i = strlen(s);

s = realloc(s, i+2);

s[i] = c;

s[i+1] = '\0';

return s;

}

Note: it is very inefficient to grow your array one character at

a time; in real life people grow arrays in large chunks at a time.

- Solution #3: use solution one and then shrink your array when you

find out how big it actually needs to be.

sval = malloc(strlen(yytext)+1);

/* ... do the code copying into sval; be sure to NUL-terminate */

sval = realloc(sval, strlen(sval)+1);

Practice converting NFA to DFA

OK, you've seen the algorithm, now can you use it?

...

...did you get:

OK, how about this one:

lecture #10 began here

Syntax Analysis

Parsing is the act of performing syntax analysis to verify an input

program's compliance with the source language. A by-product of this process

is typically a tree that represents the structure of the program.

Context Free Grammars

A context free grammar G has:

- A set of terminal symbols, T

- A set of nonterminal symbols, N

- A start symbol, s, which is a member of N

- A set of production rules of the form A -> ω,

where A is a nonterminal and ω is a string of terminal and

nonterminal symbols.

A context free grammar can be used to generate strings in the

corresponding language as follows:

let X = the start symbol s

while there is some nonterminal Y in X do

apply any one production rule using Y, e.g. Y -> ω

When X consists only of terminal symbols, it is a string of the language

denoted by the grammar. Each iteration of the loop is a

derivation step. If an iteration has several nonterminals

to choose from at some point, the rules of derviation would allow any of these

to be applied. In practice, parsing algorithms tend to always choose the

leftmost nonterminal, or the rightmost nonterminal, resulting in strings

that are leftmost derivations or rightmost derivations.

Context Free Grammar Examples

Well, OK, so how much of the C language grammar can we come up

with in class today? Start with expressions, work on up to statements, and

work there up to entire functions, and programs.

Context Free Grammar Example (from BASIC)

How many terminals and non-terminals does the grammar below use?

Compared to the little grammar we started last time, how does this rate?

What parts make sense, and what parts seem bogus?

Program : Lines

Lines : Lines Line

Lines : Line

Line : INTEGER StatementList

StatementList : Statement COLON StatementList

StatementList : Statement

Statement: AssignmentStatement

Statement: IfStatement

REMark: ... BASIC has many other statement types

AssignmentStatement : Variable ASSIGN Expression

Variable : IDENTIFIER

REMark: ... BASIC has at least one more Variable type: arrays

IfStatement: IF BooleanExpression THEN Statement

IfStatement: IF BooleanExpression THEN Statement ELSE Statement

Expression: Expression PLUS Term

Expression: Term

Term : Term TIMES Factor

Term : Factor

Factor : IDENTIFIER

Factor : LEFTPAREN Expression RIGHTPAREN

REMark: ... BASIC has more expressions

Mailbag

Really, I want to thank all of you who are sending me juicy questions

by e-mail.

- I am trying to get a more basic picture of the communication that happens between Flex and Bison. From my understanding:

- main() calls yyparse()

- yyparse() calls yylex()

- yylex() returns tokens from the input as integer values which are enumerated to text for readability to the .y file

- yyparse() tries to match these integers against rules of our grammar

(if it unsuccessful it errors (shift/reduce, reduce/reduce, unable to parse))

Is this correct?

- 1-3 are correct. 3 also includes: yylex() sets a global variable so that

yyparse() can pickup the lexical attributes, e.g. a pointer to struct token.

4 is correct except that shift/reduce and reduce/reduce conflicts are found

at bison time, not at yyparse() runtime. But yes yyparse() can find syntax

errors and we have to report them meaningfully.

- Does

yylval have any use to us? In CS 210 we did a

calculator and it was used to bring the value of the token into the parser.

- Yes,

yylval is how yyparse() picks up lexical attributes.

We will talk about it more in class.

- How would we add artificial tokens, like the semi-colon, without skipping

real tokens when we return?

- Save the real, found token in a global or static, or figure out a way to

push it back onto the input stream. Easiest is a one-token saved (pointer to

a) token struct.

- Since we are adding semi-colons without knowledge

of the grammar how do we avoid simply putting a semi-colon at the end of