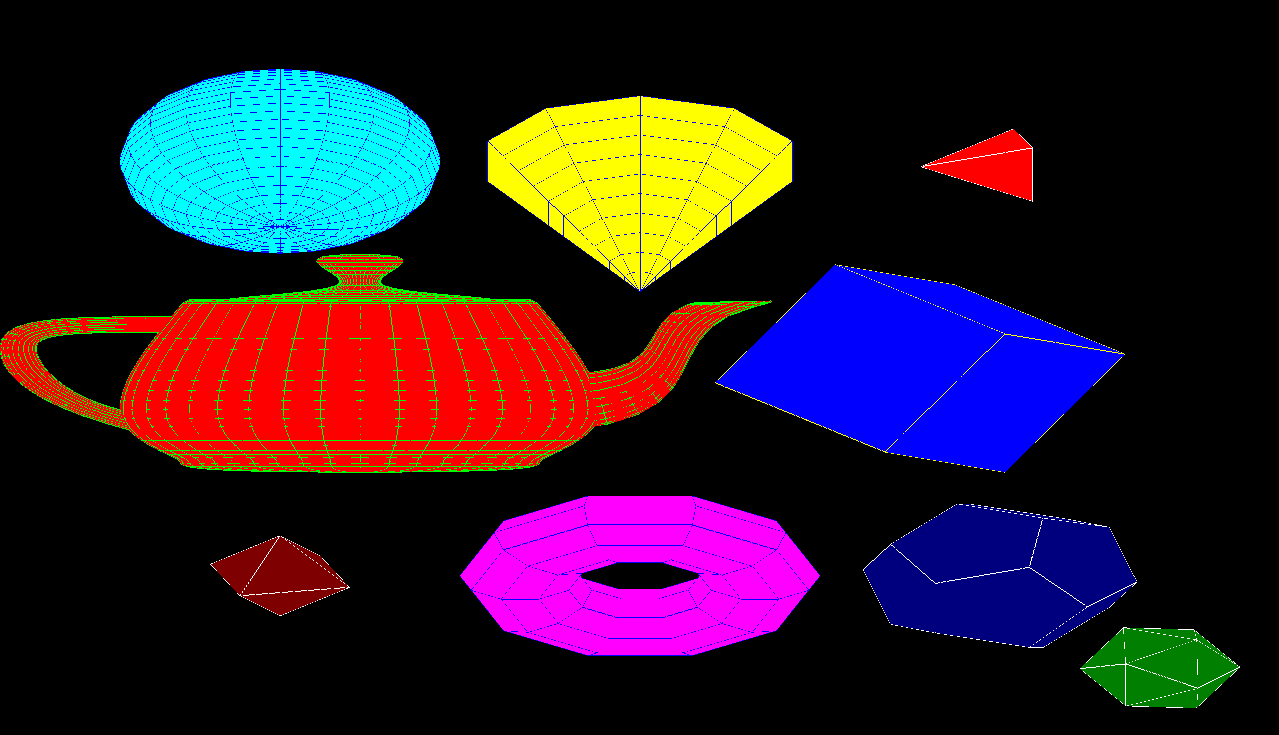

But really, complex shapes are usually composed of lots of triangles,

organized into data structures called 3D models; more on that shortly.

If you develop in C/C++, there are two libraries that are "completely

standard" OpenGL: libGL and libGLU.

Communication is asynchronous if all N machines just send their packets as fast as

they get around to it, and nothing is scheduled. Asynchronous is usually better, but

it takes extra coding to handle asynchronous communications.

Game developers tend not to know network coding, and want to outsource it

to some black-box game-network library. Just because you pick a 3rd-party

network library does not mean things will magically be easy. Such libraries depend on,

and can't do better than, the underlying OS (C) APIs and their semantics.

Plus, they tend to impose their own additional weirdnesses that tie you to

them.

Class was cancelled on Wednesday February 5. Sorry!

[Oehlke/Nair] gives a 3D example that uses 12 model instances. Will all 12

be visible? If you have a reasonable (small) number of models, brute force

is an option:

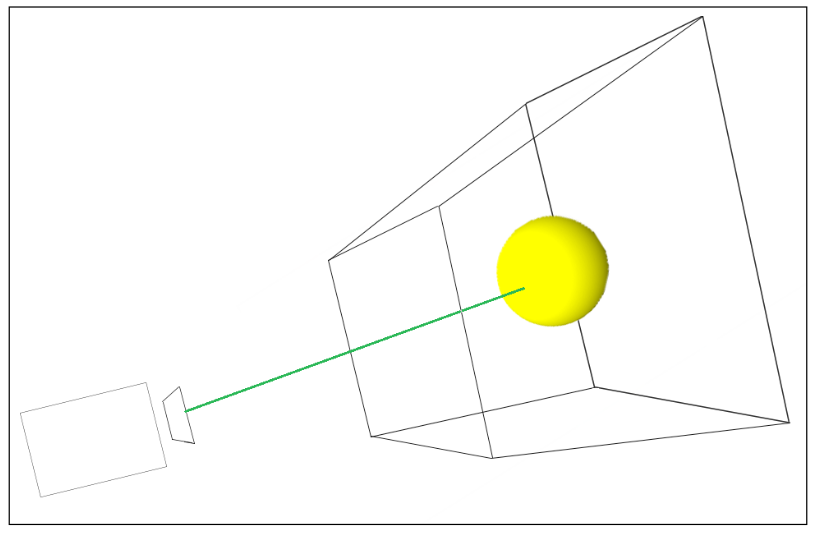

Ray picking means shooting a line (ray) from the camera through a point on the viewport

(computable from x,y screen coordinates by transforming them into world

coordinates) and on into objects in the viewing frustum to see what the user

clicked on.
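A minimal sketch of the brute-force version, assuming the ray has already been converted to world coordinates (in libGDX that would typically come from the camera's getPickRay method) and assuming each pickable object is approximated by a bounding sphere. The class and method names here (RayPick, hitDistance, pick) are made up for illustration and do not come from either book.

```java
// Hypothetical sketch: brute-force ray picking against bounding spheres.
final class RayPick {
    /**
     * Distance along the ray to the sphere, or -1 if the ray misses it.
     * (ox,oy,oz) is the ray origin; (dx,dy,dz) must be a unit vector.
     */
    static double hitDistance(double ox, double oy, double oz,
                              double dx, double dy, double dz,
                              double cx, double cy, double cz, double radius) {
        // Vector from the ray origin to the sphere center.
        double lx = cx - ox, ly = cy - oy, lz = cz - oz;
        // Length of that vector's projection onto the ray direction.
        double along = lx * dx + ly * dy + lz * dz;
        // Squared distance from the sphere center to the ray's line.
        double missSq = lx * lx + ly * ly + lz * lz - along * along;
        double rSq = radius * radius;
        if (missSq > rSq) return -1;            // the line passes outside the sphere
        double half = Math.sqrt(rSq - missSq);
        double t = along - half;                // near intersection
        if (t < 0) t = along + half;            // origin is inside the sphere
        return t < 0 ? -1 : t;                  // sphere entirely behind the origin
    }

    /** Index of the nearest sphere hit, or -1. Each sphere is {cx, cy, cz, radius}. */
    static int pick(double[] origin, double[] dir, double[][] spheres) {
        int best = -1;
        double bestT = Double.MAX_VALUE;
        for (int i = 0; i < spheres.length; i++) {
            double[] s = spheres[i];
            double t = hitDistance(origin[0], origin[1], origin[2],
                                   dir[0], dir[1], dir[2],
                                   s[0], s[1], s[2], s[3]);
            if (t >= 0 && t < bestT) {
                bestT = t;
                best = i;
            }
        }
        return best;
    }
}
```

With a dozen or so model instances, testing every sphere on every click is cheap; a spatial data structure only starts to matter with much larger scenes.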

Nair's explanations come in a later section,

after the code is presented. You might want to skip the

code section and read the explanation first.

Lecture #16 was a guest lecture overview of Blender 3D model operations.

Lecture #17 was a guest lecture overview of Blender texturing-by-painting,

UV mapping, and the like.

More broadly, I am trying to incrementally get us from Di Giuseppe's one-player

FPS to MMO-style multi-player. There are architecture questions.

Consider this a discussion of TCP to complement [S/O Ch. 4] which

only discusses UDP.

You have to ask the operating system to put a socket in non-blocking I/O mode.
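In Java, one way to make that request is java.nio's SocketChannel.configureBlocking(false). The sketch below only opens an unconnected channel and flips the mode; the wrapper class and method name are hypothetical, and connecting to a real server is left out.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SocketChannel;

// Hypothetical helper: open a TCP channel and put it in non-blocking mode.
final class NonBlockingDemo {
    static SocketChannel openNonBlocking() {
        try {
            SocketChannel ch = SocketChannel.open();
            // This is the request to the OS: reads and writes on this
            // channel now return immediately instead of waiting.
            ch.configureBlocking(false);
            return ch;
        } catch (IOException e) {
            // Rethrow unchecked so callers need no throws clause.
            throw new UncheckedIOException(e);
        }
    }
}
```

After this call, a read() on a connected channel returns 0 when no data is waiting, so the game loop has to be prepared to come back and try again later.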

Best answers on the 3D vs. 2D question managed to say something deeper

than "3D is more difficult to program than 2D", although that is certainly

true. It was good if you noted that the mechanics in 3D were often more

complicated, and that 3D often spends a much higher percentage of its budget

on assets. Several of you noted that many game genres can use either 2D or

3D, but some genres are tied to one or the other. For example, it might be

hard to imagine a 3D side-scroller, or a 2D first person shooter (although

there are lots of 2D "shooters").

Best answers on multi-user vs. single-user managed to say something deeper

than "multi-user is more difficult to program than single-user". Some folks

said, or almost said, that playing with (or against) other humans reduces the

need in multi-user games for good AI -- because the other humans constitute

nonartificial intelligence.

turn on the recording, Dr. J

Consider the ones we didn't get to last time.

Although a naive generic definition of scalability in multi-user games

might be: "handling more users", Steed thinks we can do better than that.

We are just looking for interesting stuff in the

slides that I might have skipped, or figures that

help illustrate selected topics that I covered.

After starting with all rectangular box shapes, CVE was extended with

two primitives to allow for uneven terrain: "ramps", ...

and "heightfields".

The world (models, art), as hard as it is to build, would be a hollow

shell if it were not accompanied by meaningful objects, things you can

interact with, NPCs, things to do, resources and things you can make.

- coding cost

- use the highest-level language that can deliver adequate graphics,

networking, and compute performance. But coding cost is the minority.

- modeling cost

- where can we import existing models instead of creating our own?

can we get to where this "just works" for a vast preponderance of models?

- texture-acquisition cost

- can a library of standard textures deliver 80+% of our texture needs?

- sound-acquisition

- can a library of standard sounds deliver 50+% of our audio needs?

Lecture 43

- Today is the next-to-the-last lecture.

- Is your semester project going to play nicely? What-all are you going

to have working? What all are you not sure about, or struggling with?

Selected Bits from the Remainder of Di Giuseppe

- We left off in Di Giuseppe having had several lectures on Blender.

- Jeffery has run out of time on his glorious plan of taking Josh's

rigged character and doing some animations using it.

- Di Giuseppe only has 4 pages (136-139)

consisting of a few screenshots on animation, discussing Blender's

Pose Mode. Basically, pick a number (4+ ?) of key frames, and manually

set bone positions at each one. Name the animation. Typical game

characters will have from 2-3 to many dozens of named animations.

- If any of you have any animations in your final project,

that's extra credit and not likely to be thanks to Di Giuseppe.

- I can talk about animation from Turning the Pages (did I do that

previously either in CS 328 or 428? I do not want to repeat myself)

- I can also reasonably ask: is there any more good stuff in Di Giuseppe

(pp 140+) that I wish we covered? A bunch of Chapter 5 material

discussed below seems like we did it earlier. On Friday I'll look for

any other bits (Ch 6 and 7) that are worth discussing.

Export to FBX

- We previously mentioned (end of lecture 8), and I think Josh

also mentioned already

- To get to LibGDX from Blender (or

other 3D model software like Maya) the best path is export to .FBX format

- FBX (Filmbox) is a proprietary (boo) binary format owned by Autodesk

(a BIG software vendor known primarily for _______?).

- Object-based 3D model + animations + audio and video

- For a while Autodesk thought they would make

money selling videogame middleware; maybe they have given up.

- Enough of the secret FBX format is made public in their publicly

available C++ SDK that folks have been able to reverse engineer FBX

readers/writers for at least a practical working subset of FBX.

- Main warning for FBX Export in Di Giuseppe: only check the ARM(ature)

and MESH checkboxes in the export dialog. Maybe Blender can do more

or different, but in general you will want the simplest most low-level

3D model+animation format to minimize complications during conversion

to libGDX's native G3DJ format.

Fbx-Conv

I have a preference for G3DJ over G3DB. JSON is good. People sometimes tweak

things in their G3DJ. I forget if there's a trivial G3DJ-to-G3DB converter, but there

should be; G3DB should only be used for final shipped game binaries.

Importing a 3D Model

We can look at how Di Giuseppe does it, or we can look at how

Turning the Pages does it.

Reducing the Cost of Constructing a 3D World

The Hong Kong Floor Plan Efforts

For many virtual environments, you might find the content creator expects

you to start from good old Dungeons and Dragons maps, or the bones of old

architecture floor plans. We started CVE from such drawings, and then found

a bunch of related work.

Building 3D Models/Worlds From 2D Digital Images

In or after a previous class, a student mentioned that one can just use

Google Foo, or at least that Dr. Ma has done so, to generate 3D models;

no fancy LIDAR might be required. Turns out there are lots of tools:

Main issue would be: do they generate/export a format that your software

can import/use? Another issue would be: many of the available tools are

expensive commercial offerings.

Readings on Intelligent NPC's

BDI

- Michael Bratman (Philosopher, Stanford)

- desire+"commitment"=intention

- selection of plans from a "plan library"

- balance (re)selection time vs. execution time

- execute the current plan until completion or commitment timeout

- does not include how to create plans

Lecture 44

Welcome to Dr. J's "Last Lecture" (at UI)

- How many of you are graduating this spring? How many next fall?

- I spent 13.5 years at UI and have a lot of love for the place.

- At various levels, UI finds ways to make you happy to graduate and

move on...

Now for some Highlights from Di Giuseppe Chapter 6

- A shader thing, uses GLSL, but that doesn't show up in the Java code

- This is the second libGDX book that uses DirectionalShadowLight.java

despite its "@deprecated Experimental, likely to change, do not use!"

comment (LOL)

- This means to use it, you should pull its code into your project,

not assume it will remain in libGDX as-is.

- implements a ShadowMap interface

- creates its own FrameBuffer object

- (guessing here) renders shadows into framebuffer, hands framebuffer to

a generic GLSL shader that darkens shadowed patches of real scene

- Feels like Deja Vu all over again

- Recommended tutorial on Shadow Mapping from Microbasic.net

Visual Effect for Weapons Fire and/or Enemy Death Effect

- What should weapons fire look like?

- Maybe easiest to have it actually be a (short lived) entity.

- What should death look like?

- For Di Giuseppe the answer is: soda bubbles and a fadeout.

I suppose some weapons might vaporize a target, but this is rare.

- Uses Particle Editor from libGDX Tools Page

- Billboard effect (vs. PointSprite or ModelInstance)

- Di Giuseppe wanted "soda bubble" effect, chose a Cylinder from the spawn

influencer

In the Java code:

- class RenderSystem adds a ParticleSystem, renders its effects

along with other models

- BlendingAttribute

Performance Tips (Di Giuseppe)

- Don't recreate the same objects over and over

- Di Giuseppe's solution: use Java static variables, e.g. for enemy

- Others might say: use member variables of some singleton class,

re-use from an instance pool, etc.

- Don't render shadows for entities that aren't visible

- Di Giuseppe notes that an isVisible() test already available

makes shadowing faster; it mattered more when on Android

For posterity: exactly what did you have to do to get book code running?

- never built, but took ideas and used them

- change "extends" to say "implements" as needed

Procedurally-Generated Height Fields

Impetus: building "outside" and/or other uneven areas.

Reading

First, photos:

- Facing south out JEB's front door:

- Facing west from JEB's west edge:

- Facing east from JEB's east edge:

- The Front of JEB (missing)

- Facing east from JEB's west edge (shows rise in elevation):

Discussion of sloping street outside JEB

Room {

NAME blahblah

...

obstacles [

...

HeightField {

coords [26 , 12.3, 18.9]

tex grass.png

width 2

length 2

heights [ [0, 0, 0], [0, 0.5, 0], [0, 0, 0] ]

}

]

}

Discussion of HeightField.icn:

#

# A HeightField is a non-flat piece of terrain, analogous to a Ramp.

# It has a "world-coordinate embedding", within which it plots a grid

# of varying heights, rendered using a regular mesh of triangles.

# See [Rabin2010], Chapter 4.2, Figure 4.2.11. Compared with the Rabin

# examples, we use a particular "alternating diagonal" layout:

#

# V-V-V

# |\|/|

# V-V-V

# |/|\|

# V-V-V

#

# Call the rectangular surface regions between adjacent vertices "cells".

# Let HF be a list of list of heights. There are in fact *HF-1 cell rows,

# and *(HF[1])-1 cell columns. In the above example *HF=3, *(HF[1])=3, and

# although the heightfield matrix is a 3x3, the cell matrix is 2x2. The

# cell row length is length/(*HF-1) and the

# cell column width is width/(*(HF[1])-1).

#

# Vertex Vij, where i is the column and j is the row, starting at 0, is given

# by x+(i*cell_column_width),y+HF[j+1][i+1],z+(j*cell_row_length).

#

class HeightField : Obstacle(

x, y, z, # base position

width, length, # x- and z- extents

HF, # list of lists of y-offsets.

rows, columns, row_length, column_width # derived/internal

)

#

# assumes 0-based subscripts

#

method calc_vertex(i,j)

return [x+i*column_width, y+HF[j+1][i+1], z+(j*row_length)]

end

method render(render_level)

every row := 1 to *HF-1 do {

every col := 1 to *(HF[1])-1 do {

v1 := calc_vertex(col-1,row-1)

v2 := calc_vertex(col-1,row)

v3 := calc_vertex(col,row)

v4 := calc_vertex(col,row-1)

# there are two cases, triangle faces forward (cell row+col even)

# and triangle faces backward (cell row+col odd)

if (row+col) % 2 = 0 then {

# render a triangle facing the previous column

FillPolygon(v1 ||| v2 ||| v3)

FillPolygon(v1 ||| v3 ||| v4)

}

else {

# render a triangle facing the next column

FillPolygon(v1 ||| v2 ||| v4)

FillPolygon(v2 ||| v3 ||| v4)

}

}

}

end

initially(coords,w,h,l,tex)

HF := list(3)

every !HF := list(3, 0.0)

HF[2,2] := 0.5

rows := *HF-1; columns := *(HF[1])-1

row_length := length/rows; column_width := width/columns

end

end
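For anyone reimplementing this outside Unicon, here is a rough Java sketch of the same geometry: vertices from a grid of heights, and two triangles per cell with the diagonal alternating by (row+col) parity. It assumes the height list is indexed row first (rows run along z, columns along x) and uses 0-based subscripts throughout. The class and method names are hypothetical, and rendering is left out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical Java port of the HeightField geometry; rendering is left out.
final class HeightFieldSketch {
    final double x, y, z, width, length;
    final double[][] hf;  // hf[row][col]: y-offsets; rows run along z, columns along x

    HeightFieldSketch(double x, double y, double z,
                      double width, double length, double[][] hf) {
        this.x = x; this.y = y; this.z = z;
        this.width = width; this.length = length;
        this.hf = hf;
    }

    int cellRows() { return hf.length - 1; }
    int cellCols() { return hf[0].length - 1; }

    /** World-space vertex {x, y, z} for a 0-based grid column and row. */
    double[] vertex(int col, int row) {
        double columnWidth = width / cellCols();
        double rowLength = length / cellRows();
        return new double[] { x + col * columnWidth, y + hf[row][col], z + row * rowLength };
    }

    /** Two triangles per cell, each as {v1, v2, v3}; the diagonal alternates. */
    List<double[][]> triangles() {
        List<double[][]> out = new ArrayList<>();
        for (int row = 0; row < cellRows(); row++) {
            for (int col = 0; col < cellCols(); col++) {
                double[] v1 = vertex(col, row);
                double[] v2 = vertex(col, row + 1);
                double[] v3 = vertex(col + 1, row + 1);
                double[] v4 = vertex(col + 1, row);
                if ((row + col) % 2 == 0) {
                    // diagonal runs v1-v3
                    out.add(new double[][] { v1, v2, v3 });
                    out.add(new double[][] { v1, v3, v4 });
                } else {
                    // diagonal runs v2-v4
                    out.add(new double[][] { v1, v2, v4 });
                    out.add(new double[][] { v2, v3, v4 });
                }
            }
        }
        return out;
    }
}
```

For the 3x3 example in the Room declaration above, this yields a 2x2 cell grid and therefore 8 triangles, with the single raised vertex in the middle.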

And: discussion of procedural generation of a heightfield

procedure main()

every z := 0 to 5 do {

every x := 0 to 20 do {

writes(trun(hf(x,z)), " ")

}

write()

}

end

procedure hf(x:real,z:real)

return (log(x+1,2) + z/5.0 * (1-x/20)) * 1.5 / 4.39

end

procedure trun(r)

return left(string(r) ? tab((find(".")+3)|0), 5)

end
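The same height function is easy to carry over to Java. The sketch below assumes Unicon's two-argument log(x+1,2) means log base 2, and it fills a grid indexed [z][x] rather than printing. The divisor 4.39 is presumably there because it is roughly log2(21), which keeps the tallest point near 1.5; that reading is a guess. Class and method names are hypothetical.

```java
// Hypothetical Java port of the hf() height function above.
final class ProceduralHeights {
    /** Height at grid position (x, z); same formula as the Unicon hf(). */
    static double hf(double x, double z) {
        double log2 = Math.log(x + 1) / Math.log(2);  // log base 2 of (x+1)
        return (log2 + z / 5.0 * (1 - x / 20)) * 1.5 / 4.39;
    }

    /** Heights for x in 0..xCount-1 and z in 0..zCount-1, indexed [z][x]. */
    static double[][] grid(int xCount, int zCount) {
        double[][] heights = new double[zCount][xCount];
        for (int z = 0; z < zCount; z++) {
            for (int x = 0; x < xCount; x++) {
                heights[z][x] = hf(x, z);
            }
        }
        return heights;
    }
}
```

Calling grid(21, 6) covers the same range as the main() loop above (x from 0 to 20, z from 0 to 5).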

A (Temporary?) Network Focus

I am going to focus attention in class on the networking aspects of what we

are doing, at least until we get a better handle on it. We've spent only

modest time on networking so far.

Hello, cveworld

We need a Java client that gets through the initial login/handshake for

cve. We apparently need to know the cve cypher. And, we probably need

to get it working with non-blocking I/O.

cve cypher

Located in utilities.icn,

the cve cypher is Very Simple. For each character of a string:

encode it by adding 62 to its character code, and append a random character. To decode,

extract every odd-numbered character (1st, 3rd, 5th, ...) and subtract 62.

For example, a cypher of the string "system" is

s (115) + 62 = 177, hex B1

y (121) + 62 = 183, hex B7

s (115) + 62 = 177, hex B1

t (116) + 62 = 178, hex B2

e (101) + 62 = 163, hex A3

m (109) + 62 = 171, hex AB

Because the CVE session transcript

was printing out strings using Unicon's "image" function, which prints

upper ascii characters in hex format,

this string in the transcript looked approximately like

"\xb1\x00\xb7\x00\xb1\x00\xb2\x00\xa3\x00\xab\x00"

(i.e. it was the "secret" login name used to do a system login and

create a new user account).

Implementing cypher(s) in C would be trivial; is it as trivial in Java?

(yes, see cypher.java).
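As a rough idea of what such a Java version could look like (this is not the contents of cypher.java, which is not reproduced in these notes), here is a sketch under the assumptions that the input is plain ASCII, that no wraparound is needed after adding 62, and that the filler character can be any value 0-255. The class and method names are hypothetical.

```java
import java.util.Random;

// Hypothetical sketch of the cve cypher as described above; not cypher.java itself.
final class CveCypherSketch {
    /** Each input character becomes (char + 62) followed by one random filler character. */
    static String encode(String plain, Random rng) {
        StringBuilder sb = new StringBuilder(plain.length() * 2);
        for (int i = 0; i < plain.length(); i++) {
            sb.append((char) (plain.charAt(i) + 62));
            sb.append((char) rng.nextInt(256));   // filler, ignored when decoding
        }
        return sb.toString();
    }

    /** Keep the 1st, 3rd, 5th, ... characters (indices 0, 2, 4, ...) and subtract 62. */
    static String decode(String coded) {
        StringBuilder sb = new StringBuilder(coded.length() / 2);
        for (int i = 0; i < coded.length(); i += 2) {
            sb.append((char) (coded.charAt(i) - 62));
        }
        return sb.toString();
    }
}
```

One thing to watch when sending the result over a socket: these are char values above 127, so the bytes on the wire depend on the charset used to convert the string. A single-byte encoding such as ISO-8859-1 keeps one byte per character.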

Next steps towards Hello, cveworld

OK, so some of your HW#4 chat programs implemented much of the CVE login

protocol. Given the cypher, what will it take for us to make one that

actually logs in on cveworld.com?

dat/ directory organization

If you were using the CVE server, your level designs would go in here.

There are some directories with static information replicated on all clients,

and some directories with dynamic information, possibly stored mainly on the

server.

cve/doc - project documentation source; several subdirectories

cve/dat

cve/dat/3dmodels/ - basic support for models in .s3d and .x format

cve/dat/help - command reference and user guide PDF

cve/dat/images - non-textures such as logos

cve/dat/images/letters - texture images for alphanumeric in-game text

cve/dat/newsfeed - in-game forum-like asynchronous text boards

cve/dat/nmsu - NMSU Science Hall level; many subdirs

cve/dat/projects/ - in-game software project spaces

cve/dat/scratch - scratch space

cve/dat/sessions - in-game collaborative IDE sessions

cve/dat/textures - common textures that may be used in all levels

cve/dat/uidaho/ - UIdaho Janssen Engineering Building

cve/dat/users - user accounts not tied to a particular server/level

.avt files

#@ Avatar property file generated by amaker.icn

#@ on: 07:17:49 MST 2018/03/22

NAME=spock

GENDER=m

HEIGHT=0.6

XSIZE=0.7

YSIZE=0.7

ZSIZE=0.7

SKIN COLOR=white

SHIRT COLOR=white

PANTS COLOR=white

SHOES COLOR=white

HEAD SHAPE=1

SHAPE=human

FACE PICTURE=spock.gif

Privacy=Everyone
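A Java client that wants to read these files could parse them by hand. Note that java.util.Properties is a poor fit, because it treats the space in a key like SKIN COLOR as the end of the key. The sketch below assumes the format is exactly what the sample shows: comment lines starting with #, and KEY=VALUE lines split on the first equals sign. The class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical .avt reader, based only on the sample file shown above.
final class AvtSketch {
    /** Parses KEY=VALUE lines, skipping blank lines and lines that start with '#'. */
    static Map<String, String> parse(String text) {
        Map<String, String> props = new LinkedHashMap<>();  // keeps file order
        for (String line : text.split("\\R")) {             // \R matches any line break
            line = line.trim();
            if (line.isEmpty() || line.startsWith("#")) continue;
            int eq = line.indexOf('=');
            if (eq < 0) continue;                            // not a property line
            props.put(line.substring(0, eq).trim(), line.substring(eq + 1).trim());
        }
        return props;
    }
}
```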

The JEB demos

The JEB (Janssen Engineering Building) demos give us a gentle introduction

to the internals of the CVE virtual environment, from a data-centric

viewpoint: how do data files describe the virtual world? how would 3D

models of virtual objects integrate into this virtual world?

- jeb1 and

jeb1.zip

-

This introduction to the CVE code shows you the first 2500 lines of (Unicon)

CVE code pertaining to the 3D graphics. The network-centric multi-user

client/server code is omitted for the sake of exposition and would need to

be discussed later after understanding the basic "virtual world" object

model introduced here.

- jeb2 and

jeb2.zip

-

This second level of CVE detail consists of around 6100 lines of code.

It includes a (rather deplorable, but fairly "programmable", Lego-man)

avatar class (avatar.icn) along with a contrasting, crude ancestor of

the 3D model file format support libraries (s3dparse.icn for S3D files),

and a simple standalone tool for debugging one's room modeling (modview.icn).

Comparing JEB demo models with the Hong Kong folks'

It seems in building virtual versions of the NMSU CS department and the

UIdaho CS department, we had some of the same problems as some Hong Kong

researchers generating 3D models from Floor Plans.

Raw Data

A virtual space starts with measurements and images. If we had CAD

files for JEB that would be swell, but as far as I know, we don't. We have

crude floor plans. We need to extract (x,y,z) coordinates

for those portions of the building we wish to model, sufficient to make a

"wire frame" model. We need to create images depicting the surfaces of all

the polygons in that model; these images are called textures and have certain

special properties.

There is one other kind of raw data I'd like you to collect: yourselves.

I want to push beyond the crude avatars I've used previously, and model

ourselves in crude, low-polygon textured glory.

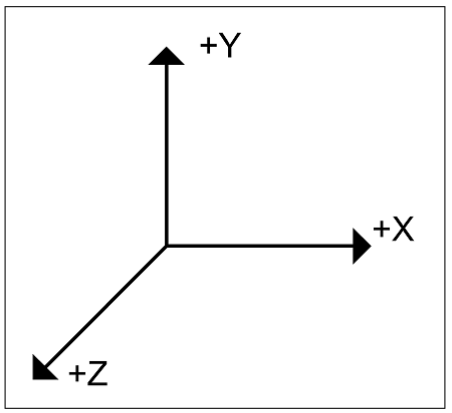

On Coordinate Systems

The coordinate system is the first piece of 3D graphics we are learning.

It is very simple and pretty much follows OpenGL conventions. x is east-west,

y is up-down, and z is north-south. Positive runs east, up, and south.

To anyone who gets confused about a positive axis running from right to left

or from top to bottom instead of what you were expecting: this just means

that your perspective is turned around from those used by the world

coordinates. If your character rotates 180 degrees appropriately, suddenly

positive values go the opposite direction from before and what was right to

left is the more familiar left to right. The point: world coordinates are

different from your personal eyeball coordinate system; don't confuse them.

A Common Coordinate System

For this class, we will use a standard/common coordinate system: 1.0 units =

1 meter, with an origin (0.0,0.0,0.0) in the northwest corner at ground

level. Y grows "up", X grows east, and Z grows south. The entire building

(except anything below ground level as viewed from the northwest corner)

will have fairly small positive real numbers in the model. This coordinate

system is referred to as FHN world coordinates (FHN=Frank Harary

Normal). Frank Harary was a graph theorist friend I knew in New Mexico.

The coordinate system is named after him because (0,0,0) was at one time

the corner of his office.

Room Modeling

For simplicity's sake, a room will consist of one or more rectangular

areas, each bounded by floor, ceiling, walls, and doors or openings into

other rectangular areas. Fortunately or unfortunately for you, we will

use the term Room to denote these rectangular areas. Within each room

are 0 or more obstacles and decorations. Obstacles are things like tables

and chairs, computers and printers. Decorations are things like signs

and posters that do not affect movement.

For your room, we need to:

- measure its x and z using FHN

- measure its y (how?)

- identify its exits (doors and openings) and for each exit,

- its connecting room

- its x,y,z, height, and plane

- collect textures for floor, walls, ceilings, doors, decorations

- identify major obstacles (essential virtual objects in the room)

Another Sample Room

Taken from NMSU's virtual CS department we have the following. It is for

a ground floor room (y's are 0). This example has both obstacles and

decorations.

Room {

name SH 167

x 29.2

y 0

z 0.2

w 6

h 3.05

l 3.7

floor Wall {

texture floor2.gif

coords [29.2,0,0.2, 29.2,0,3.9, 35.2,0,3.9, 35.2,0,0.2]

}

obstacles [

Box { # column

Wall {coords [34.3,0,0.2, 34.3,3.05,0.2, 34.3,3.05,0.6, 34.3,0,0.6]}

Wall {coords [34.3,0,0.6, 34.0,0,0.6, 34.0,3.05,0.6, 34.3,3.05,0.6]}

Wall {coords [34.0,0,0.6, 34.0,3.05,0.6, 34.0,3.05,0.2, 34.0,0,0.2]}

}

Box { # window sill

Wall {coords [29.2,0,0.22, 29.2,1.0,0.22, 35.2,1.0,0.22, 35.2,0,0.22]}

Wall {coords [29.2,1,0.22, 29.2,1.0,0.2, 35.2,1.0,0.2, 35.2,1,0.22]}

}

Chair {

coords [31.2,0,1.4]

position 0

color red

type office

movable true

}

Table {

coords [31.4,0,2.4]

position 180

color very dark brown

type office

}

]

decorations [

Wall { # please window

texture wall2.gif

coords [29.2,1.0,0.22, 29.2,3.2,0.22, 35.2,3.2,0.22, 35.2,1.0,0.22]

}

Wall { # whiteboard

texture whiteboard.gif

coords [29.3,1.0,3.7, 29.3,2.5,3.7, 29.3,2.5,0.4, 29.3,1.0,0.4]

}

Windowblinds {

coords [29.2,1.5,0.6]

angle 90

crod blue

cblinds very dark purplish brown

height 3.05

width 6

}

]

}

Diving into Jeb1

File Organization Comes First.

It appears we have two source files, and a few images (.gif) and model

(.dat) files.

jeb1: jeb1.icn model.u

unicon jeb1 model.u

model.u: model.icn

unicon -c model

jeb1.zip: jeb1.icn model.icn

zip jeb1.zip jeb1.icn model.icn *.gif *.dat makefile README

jeb1.icn

- ~500 lines. If you want to reimplement in your preferred language,

go for it.

- IVIB-generated GUI front end

- Embeds a 3D subwindow in a larger 2D window

- class Subwindow3D is a bit of a hack but shows technique

- Dispatch message loop overridden for subwin and net

- include-in-example modelfile parser should be outsourced.

A Walk Through a Smattering of Jeb1

Most attributes can be changed afterwards using function WAttrib(),

which takes as many attributes as you like. The following line

enables texture mapping in the window:

WAttrib("texmode=on")

Assigning a value to a variable uses := in Unicon. Most of the rest of

the numeric operators and computations are the same as in most programming

languages. In 3D graphics, a lot of real numbers are used.

In the jeb1 demo, the

user controls a moving camera, which has an (x,y,z) location, an

(x,y,z) vector describing where they are looking, relative to their

current position, and camera angles up or down. Initial values of

posx and posz are "in the middle of the first room in the model"; posy is 1.9 above the room's floor.

The rooms have min and max values for x,y, and z so:

posx := (r.minx + r.maxx) / 2

posy := r.miny + 1.9

posz := (r.minz + r.maxz) / 2

lookx := posx; looky := posy -0.15; lookz := 0.0

Unicon has a "list" data type for storing an ordered collection of items.

There is a global variable named Rooms which holds such a list, a list of

Room() objects. We will discuss Room() objects in a bit. This is how

the list of rooms is created, with 0 elements:

Rooms := [ ]

The code that actually reads a model file and creates Room() objects

and puts them on the Rooms list is procedure make_model(). We defer

the actual parsing discussion to later or elsewhere.

procedure make_model(corridor)

local fin, s, r

fin := open(modelfile) | stop("can't open model.dat")

while s := readlin(fin) do s ? {

if ="#" then next

else if ="Room" then {

r := parseroom(s, fin)

put(world.Rooms, r)

world.RoomsTable[r.name] := r

if /posx then {

# ... no posx defined, calculate posx/posy per earlier code

}

}

else if ="Door" then parsedoor(s,fin)

else if ="Opening" then parseopening(s,fin)

# else: we didn't know what to do with it, maybe it's an error!

}

close(fin)

end

The "rooms" in jeb.dat are JEB 230, JEB 228 and the corridor

immediately outside. Each room is created by a constructor procedure,

and inserted into both a list and a table for convenient access. (Do

we need the list? Maybe not! Tables are civilization!)

The following line steps through all the elements of the Rooms list,

and tells each Room() to draw itself. The exclamation point is an

operator that generates each element from the list if the surrounding

expression requires it. The "every" control structure requires every

result the expression can produce. The .render() calls

a method render() on an object (in this case, on each object in turn as

it is produced by !). Note that our CVE will probably

want to get smart about only drawing those rooms that are "visible",

in order to scale performance.

every (!Rooms).render()

Aspects of the .dat file format

Obstacles

Student question for the day: my room is not a perfect rectangle, it has

an extra column jutting out on one of the walls, what do I do?

Answer: in HW4 such a thing might be omitted, but you might get around

to including the column as an obstacle (a virtual Box) in your .dat file.

The obstacles section is also where things like bookshelves and tables

might go.

obstacles [

Box { # column

Wall {coords [34.3,0,0.2, 34.3,3.05,0.2, 34.3,3.05,0.6, 34.3,0,0.6]}

Wall {coords [34.3,0,0.6, 34.0,0,0.6, 34.0,3.05,0.6, 34.3,3.05,0.6]}

Wall {coords [34.0,0,0.6, 34.0,3.05,0.6, 34.0,3.05,0.2, 34.0,0,0.2]}

}

]

Ceilings?

Originally there was no special ceiling syntax; before now a

person had to put a decoration up there in order to change the ceiling.

However, ceilings are very much like floors, so I went into model.icn

procedure parseroom() and added the following after the floor code.

Hopefully it is there now in the public version.

The parser is

handwritten in a vaguely recursive-descent style; at syntax levels where

many fields can be read, it builds a table (so order does not matter)

from which we populate an object's fields. So the table t's fields correspond

to what the file had in it, and the .coords here is the list of vertices

which the object in the model wants.

if \ (t["ceiling"]) then {

t["ceiling"].coords := [t["x"],t["y"]+t["h"],t["z"],

t["x"],t["y"]+t["h"],t["z"]+t["l"],

t["x"]+t["w"],t["y"]+t["h"],t["z"]+t["l"],

t["x"]+t["w"],t["y"]+t["h"],t["z"]]

t["ceiling"].set_plane()

r.ceiling := t["ceiling"]

}

This allows us to write declarations like this in .dat files:

ceiling Wall {

texture jeb230calendar.gif

}
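The arithmetic in the parseroom() ceiling code is just the room's floor rectangle lifted by the room height h. A small Java sketch of the same computation follows; the class and method names are hypothetical, and the vertex order is copied from the Unicon code above.

```java
// Hypothetical helper mirroring the ceiling coordinates computed in parseroom().
final class CeilingSketch {
    /**
     * Four ceiling vertices as a flat {x,y,z, x,y,z, ...} list for a room
     * at (x, y, z) with width w (along x), height h, and length l (along z).
     */
    static double[] ceilingCoords(double x, double y, double z,
                                  double w, double h, double l) {
        double top = y + h;  // the ceiling sits h above the room's floor level
        return new double[] {
            x,     top, z,
            x,     top, z + l,
            x + w, top, z + l,
            x + w, top, z
        };
    }
}
```

For the SH 167 room shown earlier (x 29.2, y 0, z 0.2, w 6, h 3.05, l 3.7), this gives the floor's footprint with every y equal to 3.05.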

Event Handling

Most programs that open a window or use a graphical user interface

are event-driven, meaning that the program's main job is to

sit around listening for user key presses and mouse clicks, interpreting them

as instructions, and carrying them out. Pending() returns a list of

events waiting to be processed. Event() actually returns the key or

mouse event. For a simple demo program, one could code the event

processing loop oneself, something like the following.

repeat {

if *Pending() = 0 then {

# continue in current direction, if any

}

else {

case ev := Event() of {

Key_Up: cam_move(xdelta := 0.05) # Move Forward

# ... other keys

}

}

}

Events may be strings (for keyboard characters), but

most are small negative integer codes, with symbolic names such as

Key_Up defined in keysyms.icn.

$include "keysyms.icn"

The Jeb1 demo isn't this simple, since it embeds the 3D window in a Unicon

GUI interface. Events will be discussed in more detail below; for now it is

enough to say that they just modify the camera location and tell the scene

to redraw itself. cam_move() checks for a collision and if not, it updates

the global variables (e.g. posx,posy,posz). After the cam_move(), function

Eye(x,y,z,lx,ly,lz) sets the camera position and look direction.

jeb1 is a Unicon GUI application. The GUI owns the control flow and calls

a procedure when an interesting event happens. In Unicon terminology, a

Dispatcher runs the following loop until the program exits. The key call

is select(), which tells us which input sources have events for us.

method message_loop(r)

local L, dialogwins, x

connections := []

dialogwins := set()

every insert(dialogwins, (!dialogs).win)

every put(connections, !dialogwins | !subwins | !nets)

while \r.is_open do {

if x := select(connections,1)[1] then {

if member(subwins, x) then {

&window := x

do_cve_event()

}

else if member(dialogwins, x) then do_event()

else if member(nets, x) then do_net(x)

else write("unknown selector ", image(x))

# do at least one step per select() for smoother animation

do_nullstep()

}

else do_validate() | do_ticker() | do_nullstep() | delay(idle_sleep)

}

end

do_event() calls the normal Unicon GUI callbacks for the menus, textboxes,

etc. do_cve_event() is a GUI handler for keys in the 3D subwindow.

method do_cve_event()

local ev, dor, dist, closest_door, closest_dist, L := Pending()

case ev := Event() of {

Key_Up: {

xdelta := 0.05

while L[1]===Key_Up_Release & L[4]===Key_Up do {

Event(); Event(); xdelta +:= 0.05

}

cam_move(xdelta) # Move Forward

}

Key_Down: {

xdelta := -0.05

while L[1]===Key_Down_Release & L[4]===Key_Down do {

Event(); Event(); xdelta -:= 0.05

}

cam_move(xdelta) # Move Backward

}

Key_Left: {

ydelta := -0.05

while L[1]===Key_Left_Release & L[4]===Key_Left do {

Event(); Event(); ydelta -:= 0.05

}

cam_orient_yaxis(ydelta) # Turn Left

}

Key_Right: {

ydelta := 0.05

while L[1]=== Key_Right_Release & L[4] === Key_Right do {

Event(); Event(); ydelta +:= 0.05

}

cam_orient_yaxis(ydelta) # Turn_Right

}

"w": looky +:= (lookdelta := 0.05) #Look Up

"s": looky +:= (lookdelta := -0.05) #Look Down

"q": exit(0)

"d": {

closest_door := &null

closest_dist := &null

every (dor := !(world.curr_room.exits)) do {

if not find("Door", type(dor)) then next

dist := sqrt((posx-dor.x)^2+(posz-dor.z)^2)

if /closest_door | (dist < closest_dist) then {

closest_door := dor; closest_dist := dist

}

}

if \closest_door then {

if \ (closest_door.delt) === 0 then {

closest_door.start_opening()

}

else closest_door.done_opening()

closest_door.delta()

}

}

-166 | -168 | (-(Key_Up|Key_Down) - 128) : xdelta := 0

-165 | -167 | (-(Key_Left|Key_Right) - 128) : ydelta := 0

-215 | -211 : lookdelta := 0

}

Eye(posx,posy,posz,lookx,looky,lookz)

end

The Line Between jeb1.icn and model.icn

This program is a rapid prototype to test a concept. Originally it

was a single file (a single procedure!), but after the initial

demo (by Korrey Jacobs, adapted by Ray Lara, both at NMSU)

proved the concept, Dr. J started reorganizing it into two categories,

the code providing the underlying modeling capabilities (model.icn)

and the code providing the user interface (jeb1.icn). The

dividing line is imperfect; we might want to move some code from

one file into the other.

model.icn

We may as well start with the larger of the two source files.

model.icn is intended to be usable for any cve, not just the UI

CS department CVE. It defines classes Door, Wall, Box, and Room,

where Room is a subclass of Box.

Wall() is the simplest class here: it is just a textured polygon,

holding a texture value and a list of x,y,z coordinates, and

providing a method render(). Every object in the CVE's model

will provide a method render().

class Wall(texture, coords)

method render()

if current_texture ~=== texture then {

WAttrib("texture="||texture, "texcoord=0,0,0,1,1,1,1,0")

current_texture := texture

}

(FillPolygon ! coords) | write("FillPolygon fails")

end

initially(t, c[])

texture := t

coords := c

end

end

Class Box() is more interesting: it is a rectangular area with walls

that one cannot walk through, and a bounding box for collision detection.

Doors, openings, and other exceptions are special-cased by subclassing

and overriding default behavior. Rectangular areas are singled out

because they are common and have easy collision detection; when a wall

goes from floor to ceiling, collision detection reduces to a 2D

problem.

Box() has methods:

- mkwall() (to make walls)

- render() (to draw)

- disallows(x,z) to assist in collision detection
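Because the walls run from floor to ceiling, the collision test only needs x and z. The following is a minimal sketch in Python, not the Unicon in model.icn; the class name `Box2D` and the `margin` parameter are assumptions made for illustration, and it follows the room-style convention where being outside the rectangle is what gets disallowed.

```python
class Box2D:
    """Axis-aligned rectangle in the x-z plane (hypothetical stand-in for Box)."""

    def __init__(self, minx, minz, maxx, maxz):
        self.minx, self.minz = minx, minz
        self.maxx, self.maxz = maxx, maxz

    def disallows(self, x, z, margin=0.0):
        """Return True if (x, z) lies outside the box shrunk by `margin`."""
        inside = (self.minx + margin <= x <= self.maxx - margin and
                  self.minz + margin <= z <= self.maxz - margin)
        return not inside


room = Box2D(0.0, 0.0, 10.0, 8.0)
print(room.disallows(5.0, 4.0))        # False: centre of the room
print(room.disallows(9.5, 4.0, 1.2))   # True: closer than 1.2 to the wall at x=10
```

The y coordinate never appears, which is the whole point of singling out rectangular floor-to-ceiling areas.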

Class Door() is not just a graphical object; it is a connection between two

rooms, which can be open (1.0), closed (0.0), or in between. It supports

methods:

- render() to draw the door

- set_openness(o) to specify how open (ajar) the door is

- add_room(r) to attach a room to the door

- other(room) to compute the other room

- allows(px,pz) to check whether the door is open enough to walk through.

- start_opening(), delta(), and done_opening() when the user activates

the door, as the door is opening or closing, and when it finishes.

The direction toggles each time, between opening and closing.
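The open/close toggling described above can be sketched as a tiny state machine. This is an illustrative Python version, not the Door class from model.icn: the `step` and `walk_through` values are made up, and `allows()` here ignores the (px,pz) position that the real method takes.

```python
class Door:
    """Hypothetical door: openness runs from 0.0 (closed) to 1.0 (open)."""

    def __init__(self, step=0.25, walk_through=0.75):
        self.openness = 0.0
        self.delt = 0            # 0 means the door is at rest
        self.direction = 1       # +1: next activation opens, -1: closes
        self.step = step
        self.walk_through = walk_through

    def start_opening(self):
        """User activated the door: start moving in the current direction."""
        self.delt = self.direction * self.step

    def delta(self):
        """Advance one animation step; return True while still in motion."""
        if self.delt == 0:
            return False
        self.openness = min(1.0, max(0.0, self.openness + self.delt))
        return 0.0 < self.openness < 1.0

    def done_opening(self):
        """Stop moving and flip the direction for the next activation."""
        self.delt = 0
        self.direction = -self.direction

    def allows(self):
        """True if the door is open enough to walk through."""
        return self.openness >= self.walk_through


door = Door()
door.start_opening()
while door.delta():      # one call per animation frame
    pass
door.done_opening()
print(door.openness, door.allows())
```

The caller drives the animation by calling `delta()` once per frame and calling `done_opening()` when it reports that motion has finished, which matches how do_nullstep() uses the real class.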

The full code of jeb1.icn

import gui

$include "guih.icn"

class Untitled : Dialog(chat_input, chat_output, text_field_1, subwin)

method component_setup()

self.setup()

end

method end_dialog()

end

method init_dialog()

end

method on_exit(ev)

write("goodbye")

exit(0)

end

method on_br(ev)

end

method on_kp(ev)

end

method on_mr(ev)

end

method on_subwin(ev)

write("subwin")

end

method on_about(ev)

local sav

sav := &window

&window := &null

Notice("jeb1 - a 3D demo by Jeffery")

&window := sav

end

method on_chat(ev)

chat_output.set_contents(put(chat_output.get_contents(), chat_input.get_contents()))

chat_output.set_selections([*(chat_output.get_contents())])

chat_input.set_contents("")

end

method setup()

local exit_menu_item, image_1, menu_1, menu_2, menu_bar_1, overlay_item_1, overlay_set_1, text_menu_item_2

self.set_attribs("size=800,750", "bg=light gray", "label=jeb1 demo")

menu_bar_1 := MenuBar()

menu_bar_1.set_pos("0", "0")

menu_bar_1.set_attribs("bg=very light green", "font=serif,bold,16")

menu_1 := Menu()

menu_1.set_label("File")

exit_menu_item := TextMenuItem()

exit_menu_item.set_label("Exit")

exit_menu_item.connect(self, "on_exit", ACTION_EVENT)

menu_1.add(exit_menu_item)

menu_bar_1.add(menu_1)

menu_2 := Menu()

menu_2.set_label("Help")

text_menu_item_2 := TextMenuItem()

text_menu_item_2.set_label("About")

text_menu_item_2.connect(self, "on_about", ACTION_EVENT)

menu_2.add(text_menu_item_2)

menu_bar_1.add(menu_2)

self.add(menu_bar_1)

overlay_set_1 := OverlaySet()

overlay_set_1.set_pos(6, 192)

overlay_set_1.set_size(780, 558)

overlay_item_1 := OverlayItem()

overlay_set_1.add(overlay_item_1)

overlay_set_1.set_which_one(overlay_item_1)

self.add(overlay_set_1)

subwin := Subwindow3D()

subwin.set_pos(14, 195)

subwin.set_size("767", "551")

subwin.connect(self, "on_subwin", ACTION_EVENT)

subwin.connect(self, "on_br", BUTTON_RELEASE_EVENT)

subwin.connect(self, "on_mr", MOUSE_RELEASE_EVENT)

subwin.connect(self, "on_kp", KEY_PRESS_EVENT)

self.add(subwin)

chat_input := TextField()

chat_input.set_pos("12", "162")

chat_input.set_size("769", "25")

chat_input.set_draw_border()

chat_input.set_attribs("bg=very light green")

chat_input.connect(self, "on_chat", ACTION_EVENT)

chat_input.set_contents("")

self.add(chat_input)

chat_output := TextList()

chat_output.set_pos("10", "29")

chat_output.set_size("669", "127")

chat_output.set_draw_border()

chat_output.set_attribs("bg=very pale whitish yellow")

chat_output.set_contents([""])

self.add(chat_output)

image_1 := Image()

image_1.set_pos("686", "31")

image_1.set_size("106", "120")

image_1.set_filename("nmsulogo.gif")

image_1.set_internal_alignment("c", "c")

image_1.set_scale_up()

self.add(image_1)

end

initially

self.Dialog.initially()

end

#

# N3Dispatcher is a custom dispatcher. Currently it knows about 3D

# subwindows but we will extend it for networked 3D applications.

#

class N3Dispatcher : Dispatcher(subwins, nets, connections)

method add_subwin(sw)

insert(subwins, sw)

end

method do_net(x)

write("do net ", image(x))

end

method do_nullstep()

local moved, dor

thistimeofday := gettimeofday()

thistimeofday := thistimeofday.sec * 1000 + thistimeofday.usec / 1000

if (delta := thistimeofday - \lasttimeofday) < 17 then {

delay(17 - delta)

}

lasttimeofday := thistimeofday

if xdelta ~= 0 then {

cam_move(xdelta)

moved := 1

}

if ydelta ~= 0 then {

cam_orient_yaxis(ydelta)

moved := 1

}

if lookdelta ~= 0 then {

looky +:= lookdelta; moved := 1

}

every (\((dor := !(world.curr_room.exits)).delt)) ~=== 0 do {

if dor.delta() then moved := 1

else dor.done_opening()

}

if \moved then {

Eye(posx,posy,posz,lookx,looky,lookz)

return

}

end

method cam_move(dir)

local deltax := dir * cam_lx, deltaz := dir * cam_lz

if world.curr_room.disallows(posx+deltax,posz+deltaz) then {

deltax := 0

if world.curr_room.disallows(posx+deltax,posz+deltaz) then {

deltaz := 0; deltax := dir*cam_lx

if world.curr_room.disallows(posx+deltax,posz+deltaz) then {

fail

}

}

}

#calculate new position

posx +:= deltax

posz +:= deltaz

#update look at spot

lookx := posx + cam_lx

lookz := posz + cam_lz

end

#

# Orient the camera

#

method cam_orient_yaxis(turn)

#update camera angle

cam_angle +:= turn

if abs(cam_angle) > 2 * &pi then

cam_angle := 0.0

cam_lx := sin(cam_angle)

cam_lz := -cos(cam_angle)

lookx := posx + cam_lx

lookz := posz + cam_lz

end

global lasttimeofday

#

# Execute one event worth of motion and update the camera

#

method do_cve_event()

local ev, dor, dist, closest_door, closest_dist, L := Pending()

case ev := Event() of {

Key_Up: {

xdelta := 0.05

while L[1]===Key_Up_Release & L[4]===Key_Up do {

Event(); Event(); xdelta +:= 0.05

}

cam_move(xdelta) # Move Forward

}

Key_Down: {

xdelta := -0.05

while L[1]===Key_Down_Release & L[4]===Key_Down do {

Event(); Event(); xdelta -:= 0.05

}

cam_move(xdelta) # Move Backward

}

Key_Left: {

ydelta := -0.05

while L[1]===Key_Left_Release & L[4]===Key_Left do {

Event(); Event(); ydelta -:= 0.05

}

cam_orient_yaxis(ydelta) # Turn Left

}

Key_Right: {

ydelta := 0.05

while L[1]=== Key_Right_Release & L[4] === Key_Right do {

Event(); Event(); ydelta +:= 0.05

}

cam_orient_yaxis(ydelta) # Turn_Right

}

Key_PgUp |

"w": looky +:= (lookdelta := 0.05) #Look Up

Key_PgDn |

"s": looky +:= (lookdelta := -0.05) #Look Down

"q": exit(0)

"d": {

closest_door := &null

closest_dist := &null

every (dor := !(world.curr_room.exits)) do {

if not find("Door", type(dor)) then next

dist := sqrt((posx-dor.x)^2+(posz-dor.z)^2)

if /closest_door | (dist < closest_dist) then {

closest_door := dor; closest_dist := dist

}

}

if \closest_door then {

if \ (closest_door.delt) === 0 then {

closest_door.start_opening()

}

else closest_door.done_opening()

closest_door.delta()

}

}

-166 | -168 | (-(Key_Up|Key_Down) - 128) : xdelta := 0

-165 | -167 | (-(Key_Left|Key_Right) - 128) : ydelta := 0

-215 | -211 | (-(Key_PgUp|Key_PgDn)-128): lookdelta := 0

}

Eye(posx,posy,posz,lookx,looky,lookz)

end

method message_loop(r)

local L, dialogwins, x

connections := []

dialogwins := set()

every insert(dialogwins, (!dialogs).win)

every put(connections, !dialogwins | !subwins | !nets)

while \r.is_open do {

if x := select(connections,1)[1] then {

if member(subwins, x) then {

&window := x

do_cve_event()

}

else if member(dialogwins, x) then do_event()

else if member(nets, x) then do_net(x)

else write("unknown selector ", image(x))

# do at least one step per select() for smoother animation

do_nullstep()

}

else do_validate() | do_ticker() | do_nullstep() | delay(idle_sleep)

}

end

initially

subwins := set()

nets := set()

dialogs := set()

tickers := set()

idle_sleep_min := 10

idle_sleep_max := 50

compute_idle_sleep()

end

class Subwindow3D : Component ()

method resize()

compute_absolutes()

# WAttrib(cwin, "size="||w||","||h)

end

method display()

initial please(cwin)

Refresh(cwin)

end

method init()

if /self.parent then

fatal("incorrect ancestry (parent null)")

self.parent_dialog := self.parent.get_parent_dialog_reference()

self.cwin := (Clone ! ([self.parent.get_cwin_reference(), "gl",

"size="||w_spec||","||h_spec,

"pos=14,195", "inputmask=mck"] |||

self.attribs)) | stop("can't open 3D win")

self.cbwin := (Clone ! ([self.parent.get_cbwin_reference(), "gl",

"size="||w_spec||","||h_spec,

"pos=14,195"] |||

self.attribs))

set_accepts_focus()

dispatcher.add_subwin(self.cwin)

end

end

# link "world"

global modelfile

procedure main(argv)

local d

modelfile := argv[1] | stop("usage: jeb1 modelfile")

world := FakeWorld()

#

# overwrite the system dispatcher with one that knows about subwindows

#

gui::dispatcher := N3Dispatcher()

d := Untitled()

d.show_modal()

end

link model

global world

procedure make_model(cooridoor)

local fin, s, r

fin := open(modelfile) | stop("can't open ", image(modelfile))

while s := readlin(fin) do s ? {

if ="#" then next

else if ="Room" then {

r := parseroom(s, fin)

put(world.Rooms, r)

world.RoomsTable[r.name] := r

if /posx then {

r.calc_boundbox()

posx := (r.minx + r.maxx) / 2

posy := r.miny + 1.9

posz := (r.minz + r.maxz) / 2

lookx := posx; looky := posy -0.15; lookz := 0.0

}

}

else if ="Door" then parsedoor(s,fin)

else if ="Opening" then parseopening(s,fin)

# else write("didn't know what to do with ", image(s))

}

close(fin)

end

#CONTROLS:

#up arrow - move forward

#down arrow - move backward

#left arrow - rotate camera left

#right arrow - rotate camera right

# ' w ' key - look up

# ' s ' key - look down

# ' d ' key - toggle door open/closed

#if you get lost in space (may happen once in a while)

#just restart the program

$include "keysyms.icn"

#GLOBAL variables

global posx, posy, posz # current eye x,y,z position

global lookx, looky, lookz # current look x position and so on

global cam_lx, cam_lz, cam_angle # eye angles for orientation

global xdelta, ydelta, lookdelta

global Rooms

procedure please(d)

local r

&window := d

WAttrib("texmode=on")

#initialize globals

# posx := 32.0; posy := 1.9; posz := 2.0

# lookx := 32.0; lookz := 0.0; looky := 1.75

cam_lx := cam_angle := 0.0; cam_lz := -1.0

# render graphics

make_model()

every r := !world.Rooms do {

if not r.disallows(posx, posz) then

world.curr_room := r

}

every (!world.Rooms).render(world)

xdelta := ydelta := lookdelta := 0

dispatcher.cam_move(0.01)

Eye(posx,posy,posz,lookx,looky,lookz)

# ready for event processing loop

end

# fakeworld - minimal nsh-world.icn substitute for demo

record fakeconnection(connID)

class FakeWorld(

current_texture, d_wall_tex, connection, curr_room,

d_ceil_tex, d_floor_tex, collide, Rooms, RoomsTable

)

method find_texture(s)

return s

end

initially

Rooms := []

RoomsTable := table()

collide := 0.8

connection := fakeconnection()

d_floor_tex := "floor.gif"

d_wall_tex := "walltest.gif"

d_ceil_tex := d_wall_tex

end

### Ivib-v2 layout ##

#...blah blah machine-generated comments omitted...

model.icn

- model.icn is ~1400 lines

- We can't go through it all, but we can skim it for ideas.

- A small class library for virtual objects.

- Consider logical vs. physical modeling again

- Graphics-only: model should just be a data structure

- Typical 3D artist: model=data structure (with optional animations)

- VE builder: model=code class. unlimited behavior

- But we really want model=data structure!

- We want to have our cake and eat it too.

model.icn

The only part we had time to look at today was the top of class Room:

Class Room()

Class Room() is the most important, and is presented in its entirety.

From Box we inherit the vertices that bound our rectangular space.

class Room : Box(floor, # "wall" under our feet

ceiling, # "wall" over our heads

obstacles, # list: things that stop movement

decorations, # list: things to look at

exits, # x-y ways to leave room

name

)

A room disallows a move if: (a) outside or (b) something in the way.

The margin of k meters (k = 1.2 in the code below) reduces graphical oddities that occur if

the eye gets too near what it is looking at. Note that JEB doors are

kind of narrow, and that OpenGL's graphical clipping makes it relatively

easy to accidentally see through walls.

method disallows(x,z)

if /minx then calc_boundbox()

# regular area is normally OK

if minx+1.2 <= x <= maxx-1.2 & minz+1.2 <= z <= maxz-1.2 then {

every o := !obstacles do

if o.disallows(x,z) then return

fail

}

# outside of regular area OK if an exit allows it

every e := !exits do {

if e.allows(x,z) then {

if minx <= x <= maxx & minz <= z <= maxz then {

# allow but don't change room yet

}

else {

curr_room := e.other(self) # we moved to the other room

}

fail

}

}

return

end

Method render() draws an entire room.

method render()

every ex := !exits do ex.render()

WAttrib("texmode=on")

floor.render()

ceiling.render()

every (!walls).render()

every (!obstacles).render()

every (!decorations).render()

end

The following add_door method tears a hole in a wall. It needs extending to

handle multiple doors in the same wall, and to handle xplane walls. These

and many other features may actually be in model.icn; the code example in

class is a simplified summary.

method add_door(d)

put(exits, d)

d.add_room(self)

# figure out what wall this door is in, and tear a hole in it:

# find the wall the door is in,

# remove that wall, and replace it with three segments

every w := !walls do {

c := w.coords

if c[1]=c[4]=c[7]=c[10] then {

if d.x = c[1] then write("door is in xplane wall ", image(w))

}

else if c[3]=c[6]=c[9]=c[12] then {

if abs(d.z - c[3]) < 0.08 then { # door is in a zplane wall

# remove this wall

while walls[1] ~=== w do put(walls,pop(walls))

pop(walls)

# replace it with three segments:

# w = above, w2 = left, and w3 = right of door

w2 := Wall ! ([w.texture] ||| w.coords)

w3 := Wall ! ([w.texture] ||| w.coords)

every i := 1 to *w.coords by 3 do {

w.coords[i+1] <:= d.y+d.height

w2.coords[i+1] >:= d.y+d.height

w2.coords[i] >:= d.x

w3.coords[i+1] >:= d.y+d.height

w3.coords[i] <:= d.x + d.width

}

put(walls, w, w2, w3)

return

}

}

else { write("no plane; giving up"); fail }

}

end
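The clamping that add_door performs on a z-plane wall is easier to see with concrete numbers. The sketch below redoes that step in Python under the same assumption as the Unicon code (a wall is a flat list of x,y,z triples); the function name and the sample wall and door dimensions are invented for illustration.

```python
def split_wall_for_door(coords, door_x, door_y, door_w, door_h):
    """Split a z-plane wall quad into (above, left, right) around a door.

    coords is a flat list [x1, y1, z1, x2, y2, z2, ...], as in Wall.coords.
    """
    top = door_y + door_h
    above, left, right = list(coords), list(coords), list(coords)
    for i in range(0, len(coords), 3):
        above[i + 1] = max(above[i + 1], top)        # keep only what is over the door
        left[i + 1] = min(left[i + 1], top)          # below the door top ...
        left[i] = min(left[i], door_x)               # ... and left of the door
        right[i + 1] = min(right[i + 1], top)        # below the door top ...
        right[i] = max(right[i], door_x + door_w)    # ... and right of the door
    return above, left, right


# A 10 x 3 wall at z = 5 with a 1 x 2 door starting at x = 4.
wall = [0, 0, 5,  0, 3, 5,  10, 3, 5,  10, 0, 5]
above, left, right = split_wall_for_door(wall, 4, 0, 1, 2)
print(above)
print(left)
print(right)
```

`max` and `min` play the role of Unicon's `<:=` and `>:=` augmented assignments. Note that the "above" segment spans the full width of the wall, not just the width of the door.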

Rooms maintain separate lists for obstacles and decorations.

Obstacles figure in collision detection.

method add_obstacle(o)

put(obstacles, o)

end

method add_decoration(d)

put(decorations, d)

end

Monster State

You may have worked some of this out already, or be working on it;

I just want to push forward.

- What (and how much) is it in the current FPS?

- What should still be in the FPS? What should instead be on the server?

- How do we pull apart the client side monster state

from the server functionality?

Extending CVE Network Protocol

It may be painful for server to perform certain computations

currently performed by client, such as for each shot, what it hit.

| From client to server, and from server to other clients | description | From server to client | description |
|---|---|---|---|
| \fire dx dy dz target | send this every shot. coordinates are direction vector. target is entity hit according to client | \damage target amount | server sends its assessment of damage. response to \fire. |
| \weapon userid X | change to weapon X where X is one of: spear, pistol, shotgun | \death target | death is like... notification to de-rez someone's avatar, short of them actually logging out, which would disconnect |
| | | \avatar ... | notification to rez someone's avatar. Our respawn command. |
| | | \inform ... | suggests it just posts a message to clients' chat boxes; occurs during regular login and might need to occur on respawn |
| | | \avatar raptor11 ... \avatar akyl7 | notification to rez someone's avatar. Our respawn command. |
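To make the \fire and \damage exchange concrete, here is a minimal Python sketch of what the server side might do. It is not the code in server.icn: the function names, the flat 10-point damage, and the decision to trust the client's reported target are all assumptions for illustration.

```python
def parse_command(line):
    r"""Split a line such as '\fire 0 0 -1 raptor11' into (name, args)."""
    if not line.startswith("\\"):
        raise ValueError("commands start with a backslash")
    name, *args = line[1:].split()
    return name, args


def handle_fire(args, hp, damage_per_hit=10):
    r"""Return the messages the server would send in reply to \fire.

    args: [dx, dy, dz, target] as strings; target is absent on a miss.
    hp:   dict mapping entity name to remaining hit points (updated in place).
    """
    direction = tuple(float(a) for a in args[:3])  # parsed, but not used to re-check the hit
    target = args[3] if len(args) > 3 else None
    if target not in hp:
        return []                                  # miss, or unknown target
    hp[target] = max(0, hp[target] - damage_per_hit)
    replies = [f"\\damage {target} {damage_per_hit}"]
    if hp[target] == 0:
        replies.append(f"\\death {target}")
    return replies


hit_points = {"raptor11": 15}
name, args = parse_command("\\fire 0 0 -1 raptor11")
print(handle_fire(args, hit_points))
print(handle_fire(args, hit_points))
```

A server that does not trust clients would use the direction vector to recompute what was hit, which is exactly the computation the notes above describe as painful.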

Looked at first few of these, go back into them when we get back to the

discussion of rigging and animation.

- The job of a digital artist.

- In practice: range of motions + TIMING.

- Squash and stretch technique.

- Secondary action - the movement of smaller parts or details of

a character, possibly after (in response to) the completion/stopping of

the primary action.

- Attach the mesh to a structure === rigging

- Actually,

- Vertex weights = proportion each bone contributes to each vertex position.

- Often a whole bunch of vertices just attach to a single bone.

- "Skeleton", not rendered at all, is a set of connected "control objects"

used to deform/move/rotate parts of a mesh in order to animate

- Identify key poses, let software fill in-betweens

- Mid-animation transitions to a different animation

- Take acting classes or study examples in order to know how to

capture emotion in a pose or a motion.

- Avoid missing polygons that show when the model moves -- example,

a robed character, when falling down, is liable to show parts of

what's underneath the robe.

- Add vertices around joints/bend points
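The vertex-weight idea in the list above is commonly implemented as linear blend skinning: each bone moves the vertex as if it owned it outright, and the results are averaged by weight. The sketch below is a 2D Python illustration of that technique, not code from any particular engine; the bone names and poses are made up.

```python
import math


def rotate_about(point, pivot, angle):
    """Rotate a 2D point around pivot by angle (radians)."""
    px, py = point[0] - pivot[0], point[1] - pivot[1]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + c * px - s * py, pivot[1] + s * px + c * py)


def skin_vertex(vertex, weights, bone_poses):
    """Blend the positions each bone would give the vertex, by weight.

    weights:    {bone_name: weight}, expected to sum to 1.0
    bone_poses: {bone_name: (pivot, angle)}
    """
    x = y = 0.0
    for bone, w in weights.items():
        pivot, angle = bone_poses[bone]
        bx, by = rotate_about(vertex, pivot, angle)
        x += w * bx
        y += w * by
    return (x, y)


# An arm along the x axis: the forearm bends 90 degrees at the elbow (1, 0).
poses = {"upper_arm": ((0.0, 0.0), 0.0), "forearm": ((1.0, 0.0), math.pi / 2)}
print(skin_vertex((2.0, 0.0), {"forearm": 1.0}, poses))                     # wrist follows the forearm
print(skin_vertex((1.5, 0.0), {"upper_arm": 0.5, "forearm": 0.5}, poses))   # blended vertex near the joint
```

Vertices near a joint get split weights, which is why adding vertices around bend points gives a smoother deformation.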

Some more Free Web Resources

- www.textures.com

- it would be possible to shop textures-first

and develop models just to utilize textures that you found

- www.3drt.com

- provides (just) 12 free models including a free monster character used

in book, a free test synthetic humanoid character, about 5 other

free monster types

- free monster almost used as-is (.zip download contained a .fbx),

but x- and z-rotated 90 degrees, and models generally will require scaling

from whatever their unit system is into game world coordinates

- free monster has several animations rolled into one; AnimationController's

animate() method has many overloaded flavors, several of which take an

offset and duration (float times; in seconds I think). Book has

arithmetic to calculate where the animations begin and end,

converting frame # to seconds or vice versa.
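The frame-to-seconds arithmetic mentioned above is short enough to show directly. This Python sketch assumes the offset and duration are in seconds (as the notes guess) and uses an invented 30 frames-per-second rate and invented frame numbers; the real values come from the model file and the book.

```python
def clip_times(start_frame, end_frame, fps):
    """Return (offset, duration) in seconds for a clip spanning the given frames."""
    offset = start_frame / fps
    duration = (end_frame - start_frame) / fps
    return offset, duration


def seconds_to_frame(seconds, fps):
    """Inverse conversion: which frame is showing at a given time."""
    return int(seconds * fps)


# A hypothetical "walk" clip stored in frames 30..90 of one combined animation.
print(clip_times(30, 90, 30))      # (1.0, 2.0)
print(seconds_to_frame(1.0, 30))   # 30
```

The pair returned by `clip_times` is what one would pass as the offset and duration arguments when several animations are rolled into a single track.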

2 Pages on Skydome

- shallow interp: enclose entire gameworld in a (textured)

sphere or other primitive to make sure view never escapes rendered content

- fancier external environments often constructed as "environment maps"

- precomputed texture image of the distant environment surrounding a

rendered object, useful to fake reflective objects e.g. mirrors, lakes,...

- superficially similar to raytracing but less expensive

- book uses a "SkyDome" that comes from "the libGDX tests" (spacedome.g3db)

- wikipedia talks about using spherical or cubic enclosures, and various

complex maths to construct the images for them.

2 Pages on Shadows and Lights

- traditional OpenGL had a few (like 8) limited light sources you could

program, setting position, direction etc. in CPU API

- for modern GPUs, libGDX lets you write shaders in GLSL

- simple/fast shading seen in various libGDX demos; many of them

use a DirectionalShadowLight.java "debug" class.

- instantiate, then add (and set as shadowMap) in environment

CVE's Start-Stop Daemon and Watchdog

- You need daemon status in order to leave a server running without

a connected console such as a Terminal window. Check out

start-stop-daemon.c.

Actually, this was a lot less magical than I thought. It does both

starting and stopping of a daemon, but the actual daemonization is

pretty classic brute force. Look for:

- fork()

- opening of /dev/null

- dup2()'s of devnull_fd into file descriptors 0, 1, and 2

- exec()

- You need a watchdog to watch your server and make it stay up. See

watchdog.icn. Jeffery

must not have written this, because he would never have written

a variable named "stoping". Look for:

- main() loop

- killing and starting processes nicely by invoking start-stop-daemon

with various command line options, via

stop-cved* and start-cved* scripts

- logging in with code directly ripped from the CVE client,

executing an \uptime command, and then logging out
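The fork / open /dev/null / dup2 / exec sequence listed above can be sketched in a few lines. This is a POSIX-only Python illustration of that recipe, not a translation of start-stop-daemon.c; the `setsid()` call is an addition that does not appear in the list above, and the function name is made up.

```python
import os


def daemonize(argv):
    """Fork, point the child's stdio at /dev/null, then exec argv.

    Returns the child's pid in the parent; never returns in the child.
    """
    pid = os.fork()
    if pid != 0:
        return pid                       # parent: carry on

    try:
        os.setsid()                      # assumption: detach from the controlling terminal
        devnull_fd = os.open(os.devnull, os.O_RDWR)
        for fd in (0, 1, 2):
            os.dup2(devnull_fd, fd)      # stdin, stdout, stderr -> /dev/null
        if devnull_fd > 2:
            os.close(devnull_fd)
        os.execvp(argv[0], argv)         # replace the child with the server program
    finally:
        os._exit(127)                    # only reached if something above failed
```

A watchdog built on this would remember the returned pid, check periodically whether that process is still alive, and call `daemonize` again with the same server command if it is not.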

Steps Toward Making a Functional C-K (FPS) Server

I am thinking every day we need to figure out things that need doing,

and do them. I am thinking I don't login to discord often enough; I am

on too many machines, discord is not omnipresent for me. E-mail me.

- added hp (hit points). decided to also add maxhp.

- added \fire, \damage, \weapon, \obit to cve/src/common/commands.icn.

What else do we need?

- added placeholders where \fire and \weapon command should be processed

in cve/src/server/server.icn.

What else do we need?

- didn't add anything for \damage yet, it would be sent out from server,

in response to a \fire command.

Same for \obit.

Steps Toward Client Network Integration

- Modify client to have a usable chat window

- Demo Java code that connects to server from inside client

- Demo echoing net traffic in chat window

- Demo working chat

- Demo working mutual 2-avatar rez/derez each other on login/logout

- Demo working n-avatars rezzing each other

- Demo working \move

Git

What all have we learned about Git/libGDX best practices? Things to avoid?

- Don't push the whole repository!!!

- check in .java files and assets, not arcane gradle cache things.

Can we be more specific about what to be sure not to check in, and how to avoid it?

- learn and use .gitignore, ignore .class files, .lock, .bin

Cheap Easy Value Adds in the Current Client

- send the server \fire events

- even pre-targeting, the server can do interesting things with it.

but targeting will not be hard.

- send server \move events.

- Actually, this ought to be a good way to crash the

server, unless/until you switch over from the UIdaho model to the C-K

model. But once switched, server will remember where you go, so next

time you

login you will resume there (if you process the \avatar command to set

your x,y,z at least). This means \move can be tested even before you

can see other users moving.

- add a checkbox on the login menu for whether to "show password"

- obscure passwords by default. could do this even without checkbox

- avatar appearance (jersey color?) should be persistent across logins

- It's fine to allow edits in-game or each login, but normally it would be

associated with account creation. Could add a simple command for that,

or use existing CVE .avt file mechanisms.

- add a single-line text input widget to allow transmission of arbitrary

commands to the net

- This is very useful for testing/debugging purposes! Eventually it might

be retained for in-game text chat.

- Fix snow on ground that is very pixelated

- Normally this would be done by tiling. Do texture coordinates

> 1.0 work

in libgdx, or do we need to research how to tile/repeat textures?

- add some mob (mob = monster or npc) health indicator

- Player needs to know: are we making progress here, or should

we run? There are various ways to do this, e.g. make them slower

as they get more wounded.

- At present, no mob feels very big.

- Giganticness is the whole point of some mobs; some are

way bigger than elephants. Do they smash

through trees, etc?

- Add a little bit of camera shake tied to giant's stomp sounds.

- Such a mood-setting atmospheric would have a big impact.

NPCs in the CVE Server

Given that they aren't logged in and getting server attention by sending

network messages, where do server-based NPC's fit in the CVE

event-driven execution model?

- We could spawn a thread for each one, but I'd prefer not. There is

some existing "npc thread" code in the CVE server, which might or might

not work. Our cveworld.com server is pretty lightweight and we should

assume no heavy computes there.

- We could update each npc each frame (kind of like polling, potentially

expensive)

- We could update npcs every k milliseconds (put each on a timer). NPC

timers could vary by species and/or dynamically, e.g. faraway NPCs get

updated more seldom than NPCs near players.
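The third option, putting each NPC on its own timer, fits an event-driven server well because a single priority queue can tell the main loop which NPCs are due. The following Python sketch shows one way to do it; the class name, the millisecond clock, and the sample intervals are assumptions, not part of the CVE server.

```python
import heapq


class NpcScheduler:
    """Wake each NPC every `interval` milliseconds using a priority queue."""

    def __init__(self):
        self._queue = []   # entries are (due_time_ms, sequence, name, interval_ms)
        self._seq = 0      # tie-breaker so entries with equal due times stay ordered

    def add(self, name, interval_ms, now_ms=0):
        heapq.heappush(self._queue,
                       (now_ms + interval_ms, self._seq, name, interval_ms))
        self._seq += 1

    def run_due(self, now_ms, update):
        """Call update(name) for each NPC whose timer has expired, then reschedule it."""
        due_now = []
        while self._queue and self._queue[0][0] <= now_ms:
            _, _, name, interval = heapq.heappop(self._queue)
            due_now.append((name, interval))
        for name, interval in due_now:
            update(name)
            self.add(name, interval, now_ms=now_ms)
        return [name for name, _ in due_now]


sched = NpcScheduler()
sched.add("raptor", 100)    # near a player: updated often
sched.add("sheep", 500)     # far away: updated seldom
print(sched.run_due(100, lambda name: None))   # ['raptor']
print(sched.run_due(150, lambda name: None))   # []
print(sched.run_due(500, lambda name: None))   # ['raptor', 'sheep']
```

The server's main loop would call `run_due` once per pass, for example whenever its select() call times out, so no extra threads are needed. Changing an NPC's interval as players approach or leave is a matter of re-adding it with a different value.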

Additional Considerations for Monsters/NPCs

- Monsters and non-player characters need some kind of execution model.

- Current execution model is: "always charge at player every frame"

- We looked at BDI (belief-desire-intention); a popular human-like AI model.

We might or might not have time to implement something better than the

current FPS behavior.

- Many games use "good ole" finite automata (wander mode, sleep mode,

fight mode, flight mode...).

- Humanoid execution models need to (look and) "feel" intelligent.

Animals do not have to feel intelligent, but they should feel natural.

- We could apply a variant of a minimax algorithm, especially if

a central intelligence is controlling many units (Sauron)

- Is the goal in a game to appear human-like in intelligence?

- Behavior Trees are a model with alleged advantages over finite automata

- NPC's may use pathfinding algorithms to determine their route

- This tutorial on steering behaviors

may be useful for helping NPC's not be so clumsy and rude. It

may remind you of this flocking paper and/or this site with videos and notes which you might have seen in CS 328.

- Build a tree data structure to organize complex unit behavior rules.

- Different node types for prioritized, sequenced, conditional and

concurrent/alternating behaviors
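A behavior tree with prioritized and sequenced nodes can be very small. The sketch below is a generic Python illustration, not the example from Joost's blog; the node class names, the NPC dictionary fields, and the thresholds are all invented.

```python
SUCCESS, FAILURE = "success", "failure"


class Action:
    """Leaf node: runs a function on the NPC and reports whether it succeeded."""

    def __init__(self, fn):
        self.fn = fn

    def tick(self, npc):
        return SUCCESS if self.fn(npc) else FAILURE


class Sequence:
    """Sequenced behavior: succeeds only if every child succeeds, in order."""

    def __init__(self, *children):
        self.children = children

    def tick(self, npc):
        for child in self.children:
            if child.tick(npc) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Prioritized behavior: the first child that succeeds wins."""

    def __init__(self, *children):
        self.children = children

    def tick(self, npc):
        for child in self.children:
            if child.tick(npc) == SUCCESS:
                return SUCCESS
        return FAILURE


def set_mode(mode):
    """Build an Action that records the chosen behavior on the NPC."""
    def act(npc):
        npc["mode"] = mode
        return True
    return Action(act)


tree = Selector(
    Sequence(Action(lambda n: n["hp"] < 20), set_mode("flee")),
    Sequence(Action(lambda n: n["player_dist"] < 5.0), set_mode("fight")),
    set_mode("wander"),
)

monster = {"hp": 100, "player_dist": 2.0}
tree.tick(monster)
print(monster["mode"])   # fight
```

Conditions are just actions that succeed or fail without side effects. Compared with a finite automaton, the priorities live in the order of the children rather than in a table of state transitions.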

Example from Joost's Blog

Class Project Demos During Scheduled Final Exam Slot

- Our final is...when ???

- To the extent possible, we will be demo'ing and evaluating your

work by playing it.

- 120 minutes for k students == up to 120/k minutes per student. You

may elect to use 0 of your minutes.

- Group grade and Individual grade (each is half your project grade);

group grade based on what is working in git at end of semester

- More credit (up to an individual grade of A) for work that

appears within an integrated demonstration from git master

- Less credit (up to an individual grade of B) for work that

is turned in but not integrated

- I will accept minor tweaks after the final demo, but I am not looking

for major new functionality added after that point. You may want to

schedule an appointment and demo for me, if you get something fixed.

Who is Watching the Watchdog?

Implementation of the \fire Command on the Server

Miss:

Hit:

Weapon Type Switch

\weapon userid X informs all clients every time a weapon is switched

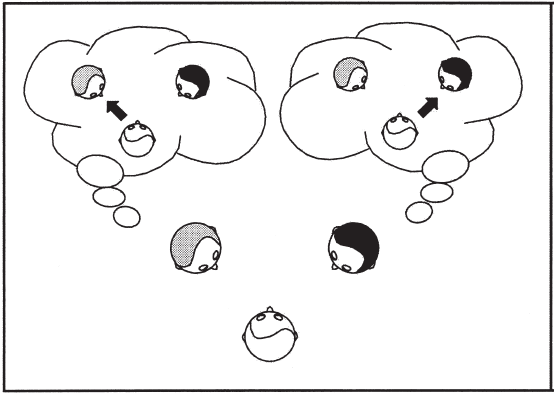

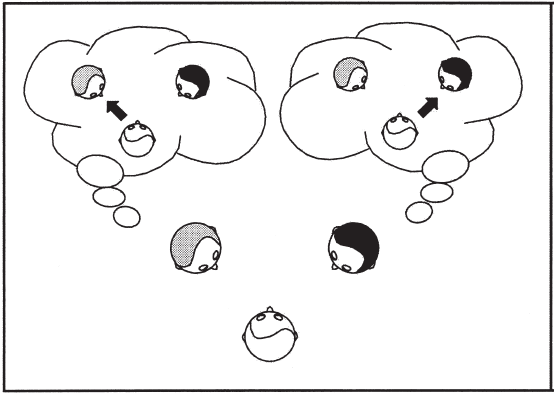

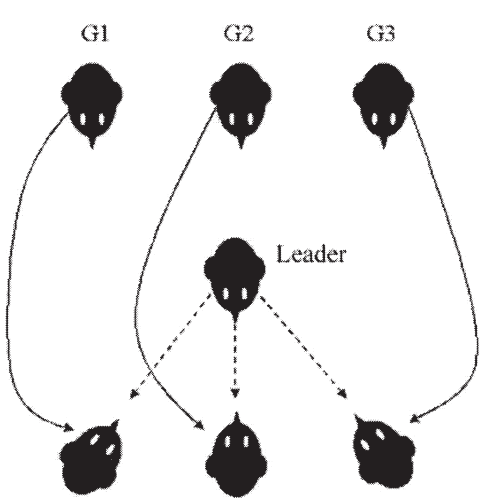

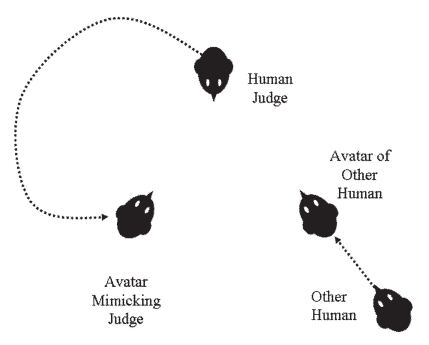

Transformed Social Interaction

Ramifications:

- An important paper, miscategorized as relating to intelligent NPC's;

NPC agents could exploit these ideas, but they could also be

used with human player agents.

- subject: non-verbal communication in virtual environments.

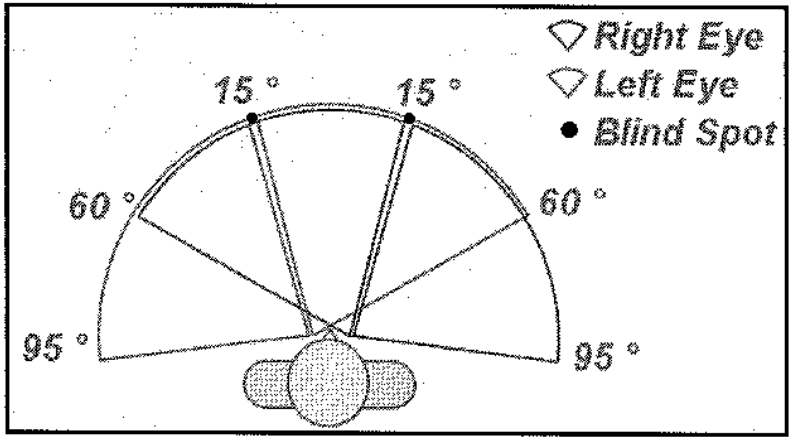

- eye gaze, in particular, conveys substantial information

- components under study in TSI

- avatars (self representation)

- sensory capabilities

- contextual situation

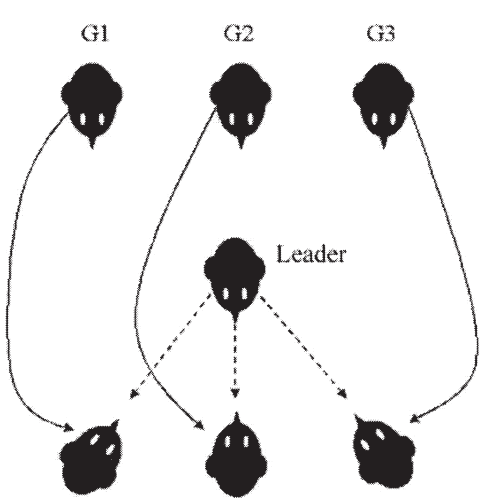

Avatar Transformation

- are we able to sense/track nonverbals yet? almost

- (avatar) similarity breeds attraction, so what's to prevent a "leader"

from having an avatar that looks to each player like themselves?

- could be rendered differently for each viewer

- could be a morphed

hybrid of true appearance and audience characteristics.

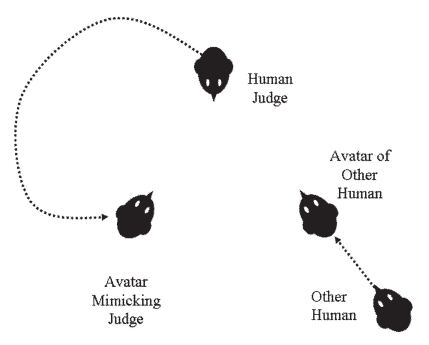

- (subtle) mimicking behavior works too -- leaning, leg position, etc.

- gaze is very important; in a CVE you can maintain eye contact with

everyone at once:

- or you can gaze closely at someone without your avatar giving that away

- you could have human assistants providing your avatars' nonverbals

tailored to each audience member:

- gaze tracking could (more innocently) just keep the human informed when

they are doing well, or spending too much time looking at one person or

place.

- it can similarly tell a teacher when students are bored or inattentive

- simply having avatars' names over their heads makes it easier for leaders

to address the masses.

- "ghost" humans that are unrendered, or rendered (and audible) only to

selected users, might assist an interaction, observing things from

different points of view, etc.

Transforming the situation

- A viewer can decide to look at things from another player's point of view

- A viewer can "rewind" and review an interaction, play it back at 2X speed, etc. at a cost in real-time interactivity

- An absent person can pre-record avatar behaviors or responses to

questions for use in a meeting, and after a meeting the present individuals

can post-record answers to questions that the absent person might have after

reviewing the meeting recording

Some implications

- People might just reject using virtual environments for mediated

communication if they become (or are perceived as) too bogus

- May need detectors for bot-proxies

- NVTT

- In one experiment, humans were only 66% correct in bot detection,

even when told that the bot was mimicking their own head movements.

Educational Virtual Environments

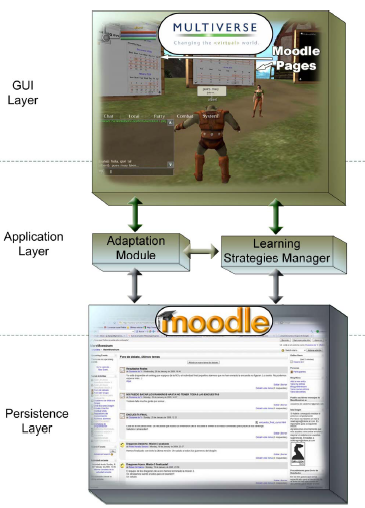

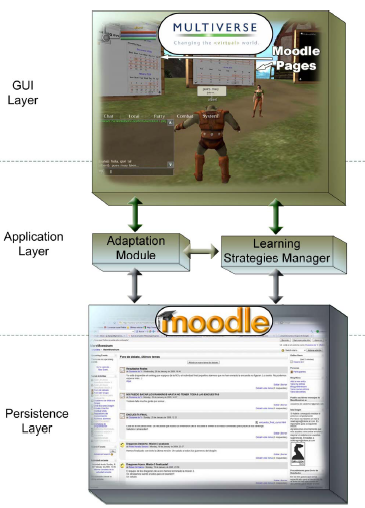

Mare Monstrum Paper

Basic ideas:

- Computer aided instruction has "suffered a deep transformation" from

Computer-Aided Instruction and intelligent tutors to "using MMO's for education"

- the theory was, this would improve motivation

- Authors question the wisdom and efficacy of this change

- Built atop Multiverse and Moodle

- Problem: game is usually a black box, instructor can't see that learning

has happened in there

- "Instead of trying to sugar-coat the learning as fun, turn the whole

learning scenario into a game"

- incorporate both cooperation and competition

- dropout rate in CS1 course dropped from 70% to 15% after using Mare

Monstrum in it. 90+% of students reported that the game helped.

CodeSpells

Vocabulary Word

cyranoid

Tony Downey supplement

- Since we are talking about game design, you may also find

this

Tony Downey blog article interesting. Please read it. Thank Bruce.

Key subpoints to consider (and agree or disagree with):

- Prototype

- Design is usually by trial and error, not by flashes of brilliance

- Gameplay trumps content

- Dynamic content trumps static content

- Progress, Obstacles, Resources - the big three mechanics

- Avoid perfectionism and NIH syndrome - you don't have to invent it all

- [Jeffery] ... but canned game frameworks can overly constrain

your game.

- "variable driven development" - identify explicit simulation parameters.

use variables, not constants. consider a runtime interface for

tweaking them.

- community -- high scores, in-game expressions of identity, status

- power law -- free to play, sell add-ons later

- collect and analyze player data

- tutorial ? I thought Minecraft was lauded for not having a tutorial...

- demo - learn by watching

- guidepost - learn by in-game signs

- sandbox - learn in a danger-proof environment

- sensei - learn from a recurring relationship with a master

GUIs in Games

- You may want a Graphical User Interface (GUI)

for your arcade or strategy games.

- Key issue: how are GUI components and graphics mixed?

- if you are real, real lucky, your language/engine does it for you

- and doesn't leave you stuck in a limited-option world

- graphics-primitives-are-GUI-widgets model

- pain to write a new widget for every graphical entity in a game

- build custom GUI using graphics primitives

- pain to develop enough GUI from scratch

- independent-subwindow "view" approach

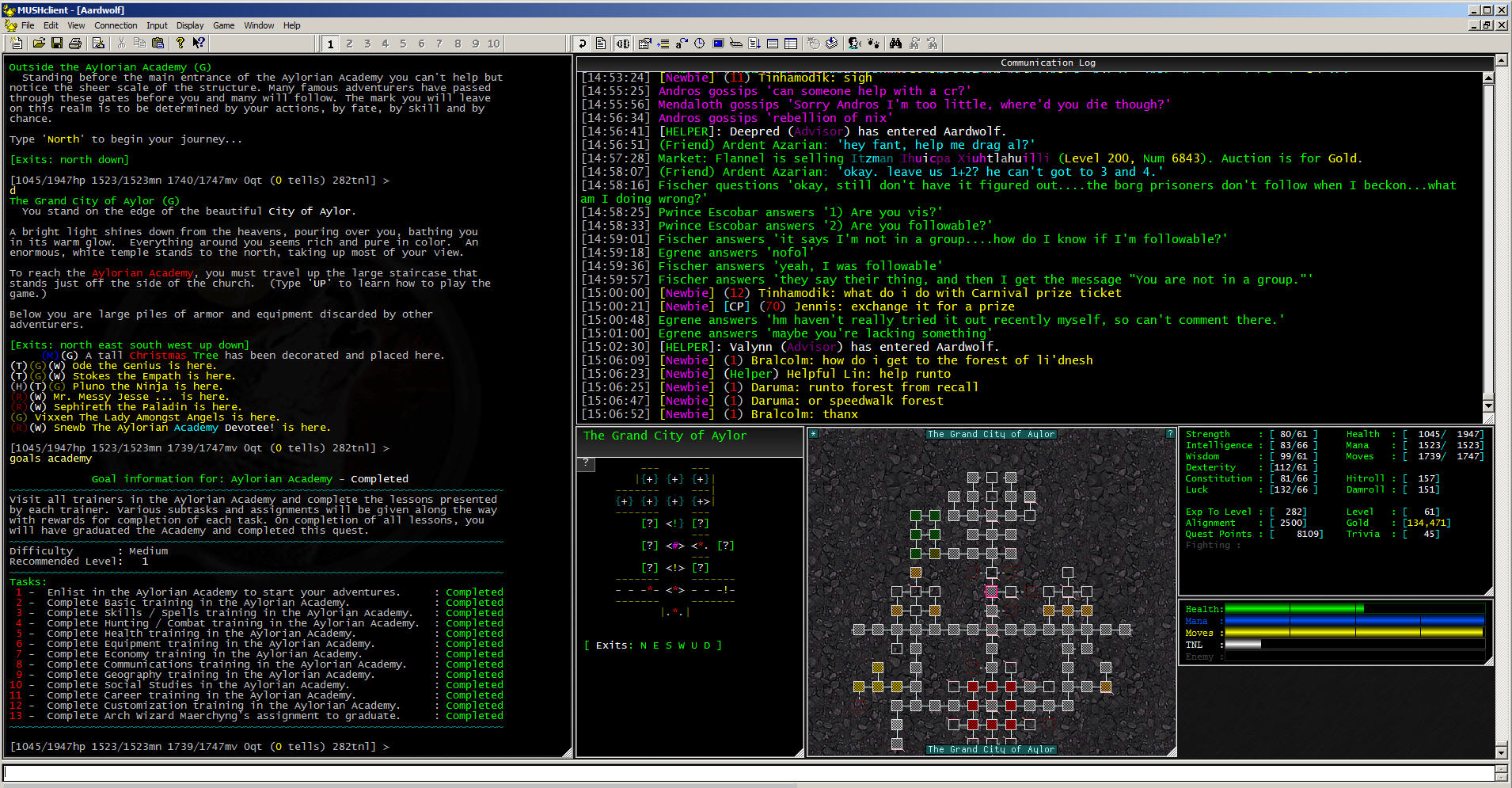

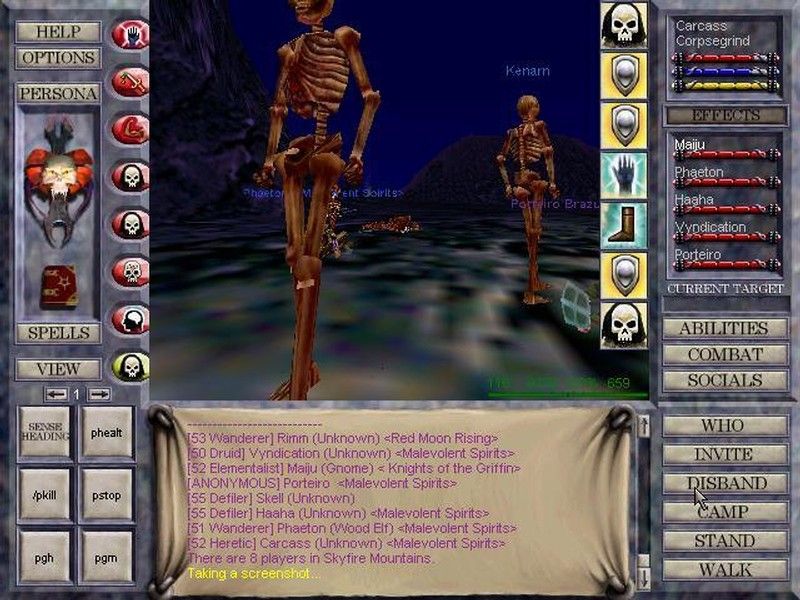

- moderately easy to mashup a Frankeninterface; looks a bit old school.

Compare early EverQuest with later MMOs.

Clarification of the size of worlds

- According to

wiki answers: earth's radius is 3961 miles; its circumference is just under 25,000 miles. Thanks Bruce.

- According to

a battle.net forum

one of the many estimates of the size of the World of Warcraft is: around 8

miles in radius.

- Accepting that it is scaled for our convenience from some fictional planet

that is comparable in size to earth, the "scaling for our convenience" is

~500x. As far as I know that is not counting the Outland you can port to, which

is a set of fragments of the Orcs' home world.

- It is reasonable to ask questions like: is 500x the optimal scaling

number for virtual worlds? Suppose that people want more content -- more

surface area? What additional game mechanics would that call for?

- Where scaling gets interesting is when scaling of sizes/distances

triggers issues in scaling of time -- real time or game time.

- LOTRO abstracts away time/space trade-offs using "Travel Rations".

You buy and eat travel rations to ignore months-long travel. Are there

better ways to accomplish such scale-tweaks?

- When gamers want not just a virtual planet, but a virtual galaxy,

what are the implications for a virtual environment? How successful

have games been at feeling "galactic" in scope?

- WoW doesn't feel like it is a 500x scale.

"Scale" in games might be 1:1 in some areas, 50x or 500x in others, or

logarithmic if you have a really large space to deal with. See

research papers on "graphical fisheye views of graphs" for dynamic

nonlinear scales one could adopt in games.

- Suppose a virtual world designer wanted to dynamically tweak the

scale as new content is introduced? Suppose a game mechanic

included a "Zoom" mechanism? What might it look like?
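The arithmetic behind the ~500x figure, and one possible nonlinear scale, can be sketched as follows. This is Python rather than Unicon, and the function names are hypothetical. The distortion function is my recollection of the form used in the fisheye-views literature, g(x) = (d+1)x / (dx+1) for a normalized distance x in [0,1]; treat it as an assumption and check it against the papers before relying on it.

```python
EARTH_RADIUS_MILES = 3961.0   # figure quoted in the list above
WOW_RADIUS_MILES = 8.0        # forum estimate quoted in the list above


def linear_scale(real_radius, game_radius):
    """How many times smaller the game world is than the real one."""
    return real_radius / game_radius


def fisheye(x, d):
    """Nonlinear scale for a normalized distance x in [0, 1] from the focus.

    d = 0 leaves distances unchanged; a larger d spreads out the region
    near the focus (x = 0) and compresses the region far from it.
    """
    return (d + 1.0) * x / (d * x + 1.0)


scale = linear_scale(EARTH_RADIUS_MILES, WOW_RADIUS_MILES)
print(round(scale))        # 495, i.e. the "~500x" above
print(fisheye(0.5, 0.0))   # 0.5: no distortion
print(fisheye(0.5, 3.0))   # 0.8: the near half of the range gets 80% of the space
```

A "Zoom" mechanic could be as simple as letting the player or the designer change `d` at run time.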

Parallel Concerns, Moving into Virtual Environments

- learn enough Unicon to do the VE assignments

- learn about 3D programming

- learn about 3D modeling

- start assembling some "pirate assets"

- try out Second Life's (and/or Minecraft's) content creation

- introduce CVE

We will probably go breadth-first through these, to provide some coverage

of all of them, rather than depth-first, covering some and skipping others.

A "missing link"

Genre-wise, there is a missing link between the toy-function JEB demo and the

many-function CVE program. We should do a homework that lets

us explore a classic genre: the FPS, or First-Person Shooter.

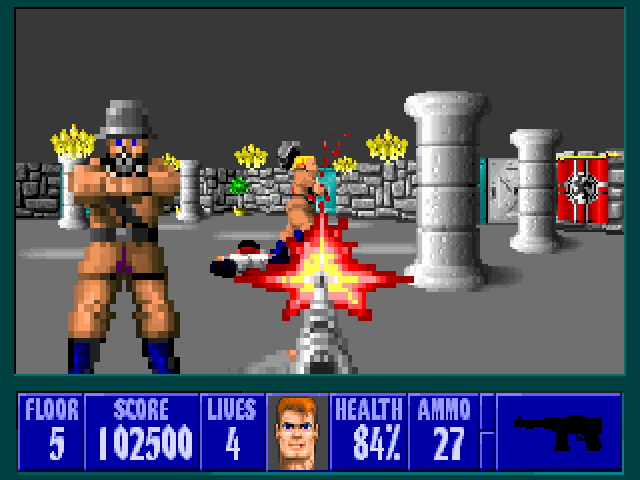

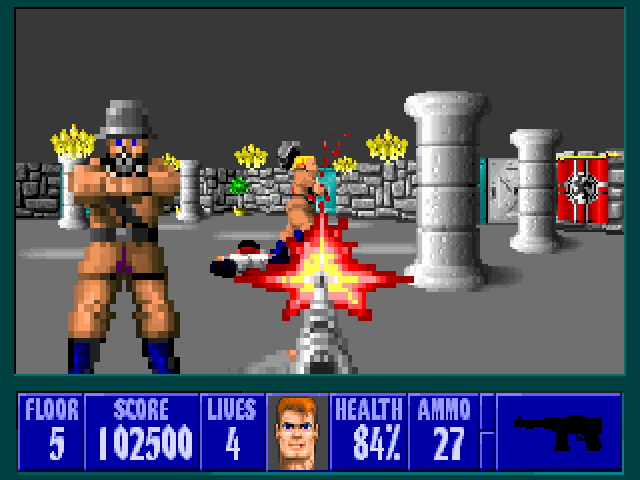

FPS's

This is the genre that built the 3D PC graphics card industry.

There were some 3D games before these, but id Software's early

games really established a genre. Note that they were a tiny team,

without big backing, and released their early hits as shareware in

the 1990s.

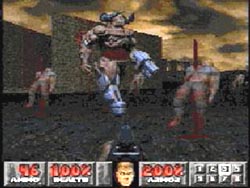

- Wolfenstein 3D

- Doom

- Quake (source)

From the Quake3 source code you can tell that one option for us to FPS it in

style is just to learn and understand their 335K LOC engine well enough to

modify it to do whatever we want. Software engineers after all believe

strongly in code re-use, and it is spectacularly generous of id to release

their code.

Another option is to search for higher (-level) ground, such as filling in

some of the gaps between the ~2.5K LOC JEB demo and the FPS genre.

The main differences between wandering around the halls of a CS department

(the JEB demo) and Wolfenstein 3D or Doom are:

- animated 3D enemy characters

- 3D models, simple AI

- simple combat system

- health meter, attack and damage animations

- a very few virtual objects

- weapons, armor, health kits

The latter two have been addressed in HW#2 in this class. The animated

3D enemy characters will drive our next couple lectures and homework 5.

3D Modeling, Part I

- A 3D Model, for the purposes of this class

- A data structure (with an external representation -- a file format)

capable of representing an arbitrary-shaped, generally solid object

and that object's range of motions.

- Start with: Polygon Mesh and Texture(s)

- Add: bone structure, motion API

- (strangely?) OpenGL does not have built-in 3D models.

- If you dig, you find that SGI did a sweet toolkit atop OpenGL

called Open Inventor, but it never became as ubiquitous as OpenGL.

Open Inventor influenced the design of VRML, which influenced the

design of X3D.

- No universal standard for 3D modeling that I know of.

- Suggestions welcome

- 3D Model File Format Requirements

- "open". public. platform neutral. easily parsed.

preferably human readable. "adequate" performance.

- scripted animation versus program control?

- Sort of a question of how much manual artistry can we afford.

Artists make better-looking animation, but are too expensive.

Ideal would be: a set of general parametric or programmable animations.

- In this class we will discuss two 3D model formats: S3D and .X

- The S3D (simple 3D) format: check out s3dparse.icn.

You really need to see some sample S3D files

in order to get a feel for the beauty of the S3D file format.

s3dparse.icn, and S3D files themselves, may have

various usually-nonfatal "bugs". There was even a

bug in the S3D file format document.

We also took a look at the desperate situation vis a vis creating

3D models (a job performed by experts with much training)

and our need to build such models for our games and virtual environments.

Let us continue from there.

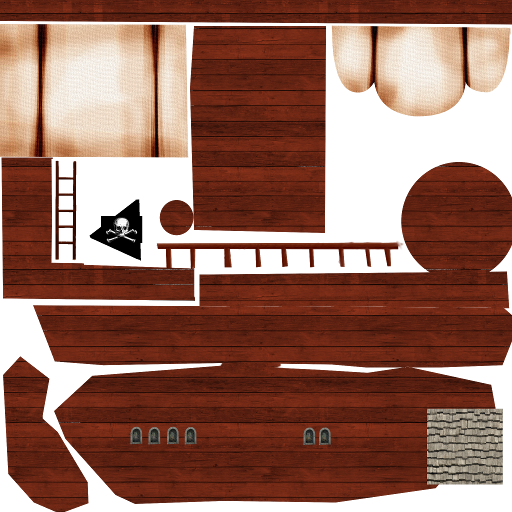

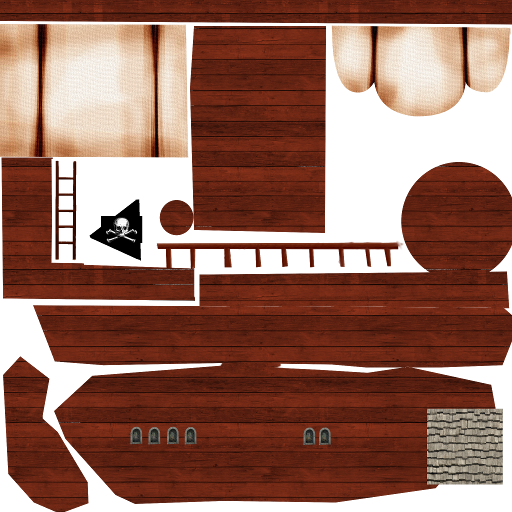

A Grim S3D Example (Part 1)

Consider the following texture images of a character (you can pretend

it is a space marine or pirate to shoot at, if you want).

- What is the absolute minimum number of polygons needed to

produce a 3D solid based on these textures?

- How many polygons -- how much of a mesh -- do we have to have in order to

generate a "reasonable" 3D model?

- What is the maximum*?

Dr. J is 5'9" (1.75m), his elbow-elbow width is approximately 24" (0.61m) and

his front-back is 11" (0.28m) at the belly.

(*this is a trick question)

Dr. J Model 0 - Stuck in a PhoneBooth, Hardwired Code

To get someone visible within Jeb 1, you could add something like

the following right after the rooms are rendered:

drawavatar( ? (world.Rooms) )

This invokes some hardwired code to render an avatar in a randomly

selected room. The procedure to render the digital photos as is,

prior to any 3D modeling, might look like:

procedure drawavatar(r)

# place randomly in room r

myx := r.minx + ?(r.maxx - r.minx)

myy := r.miny

myz := r.minz + ?(r.maxz - r.minz)

# ensure a meter of room to work with

myx <:= r.minx + 1.0; myx >:= r.maxx - 1.0

myz <:= r.minz + 1.0; myz >:= r.maxz - 1.0

PushMatrix()

Translate(myx, myy, myz)

WAttrib("texmode=on","texcoord=0,0,0,1,1,1,1,0")

Texture("jeffery-front.gif")

FillPolygon(0,0,0, 0,1.75,0, .61,1.75,0, .61,0,0)

Texture("jeffery-rear.gif")

FillPolygon(0,0,.28, 0,1.75,.28, .61,1.75,.28, .61,0,.28)

Texture("jeffery-left.gif")

FillPolygon(0,0,0, 0,1.75,0, 0,1.75,.28, 0,0,.28)

Texture("jeffery-right.gif")

FillPolygon(.61,0,.28, .61,1.75,.28, .61,1.75,0, .61,0,0)

PopMatrix()

end
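For readers not yet fluent in Unicon, the placement step at the top of drawavatar() (pick a random spot, then use `<:=` and `>:=` to keep it at least a meter from the walls) can be restated in Python. This is a sketch, not the course code: the `Room` class is a hypothetical stand-in with the same field names the procedure uses, the random offset is a uniform real number (which may not match what Unicon's `?` produces), and the z offset is taken as the room's depth, maxz minus minz. It also assumes the room is at least two meters across in x and z.

```python
import random
from dataclasses import dataclass


@dataclass
class Room:
    """Hypothetical stand-in for a JEB room: an axis-aligned box in meters."""
    minx: float
    miny: float
    minz: float
    maxx: float
    maxy: float
    maxz: float


def place_in_room(room, margin=1.0, rng=random):
    """Pick a random floor position at least `margin` meters from the walls."""
    x = room.minx + rng.random() * (room.maxx - room.minx)
    z = room.minz + rng.random() * (room.maxz - room.minz)
    # Same order as the Unicon code: raise to the lower bound (<:=),
    # then lower to the upper bound (>:=).
    x = min(max(x, room.minx + margin), room.maxx - margin)
    z = min(max(z, room.minz + margin), room.maxz - margin)
    return x, room.miny, z   # y is the floor, as in drawavatar()


office = Room(0.0, 0.0, 0.0, 5.0, 3.0, 4.0)
x, y, z = place_in_room(office)
```

Clamping after the fact piles up positions along the margin; sampling directly from the shrunken interval would avoid that, at the cost of no longer mirroring the Unicon procedure line for line.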

Dr. J Model 0.1 - Stuck in a PhoneBooth, S3D File

The above hardwired code calls FillPolygon four times to draw four rectangles.

If we draw the exact same picture with triangles...we need 8 triangles,

composed from 8 vertices. Taking a wild stab, try the following .s3d file.

I did not get it right on the first try (but I was close). Note that

vertex coordinates (numbers like 1.75, .61, .28) are given in meters

based directly on measurements given earlier.
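The count of 8 triangles from 8 vertices can be checked with a short sketch. This is Python, not Unicon, and the names (`VERTS`, `QUADS`, `split_quad`) are hypothetical. The vertex order inside each quad is copied from the four FillPolygon calls above; how the S3D file itself orders its vertices and triangles is not assumed here.

```python
W, H, D = 0.61, 1.75, 0.28   # width, height, depth in meters, from the measurements

# The 8 corners of the "phone booth" box.
VERTS = [
    (0, 0, 0), (0, H, 0), (W, H, 0), (W, 0, 0),   # 0-3: front face, z = 0
    (0, 0, D), (0, H, D), (W, H, D), (W, 0, D),   # 4-7: rear face,  z = D
]

# Each rectangle as 4 vertex indices, in the order FillPolygon received them.
QUADS = {
    "front": (0, 1, 2, 3),
    "rear":  (4, 5, 6, 7),
    "left":  (0, 1, 5, 4),
    "right": (7, 6, 2, 3),
}


def split_quad(quad):
    """Split a quad (a, b, c, d) into two triangles sharing the diagonal a-c."""
    a, b, c, d = quad
    return [(a, b, c), (a, c, d)]


TRIANGLES = [tri for quad in QUADS.values() for tri in split_quad(quad)]

print(len(VERTS), len(TRIANGLES))   # 8 8
```

Sharing corners between faces is what keeps the vertex count at 8. A format that stores texture coordinates per vertex may force corners to be duplicated per face, which is one way a first attempt at such a file can go wrong.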

// version

103

// numTextures,numTris,numVerts,numParts,1,numLights,numCameras

4,8,8,1,1,0,0

// partList: firstVert,numVerts,firstTri,numTris,"name"

0,8,0,8,"drj"

// texture list: name

jeffery-front.gif

jeffery-rear.gif

jeffery-right.gif

jeffery-left.gif