Lecture Notes for CS 404/504 Program Monitoring and Visualization

Note to Dr. J: next time you teach this course, review and re-order

some papers and lecture material up to the front.

What this Course is About

This course is a blend of

- dynamic analysis --

the study of program execution behavior, with

- visualization --

the graphical depiction of large amounts of information.

It turns out that much of the key connecting glue between monitoring and

visualization comes from static analysis, the study of

program properties observable from the source code.

Each week, you can expect part of the lecture material to come

from dynamic analysis and part from graphics/visualization.

Similarly, part of the time each week will be studying interesting

work done by others, and part of the time will be engaged playing

with my research infrastructure, working on software tools

that will (hopefully) advance the state of the art.

Reading Assignment #1

- Go read unicon.org. Download the main

Unicon book. Read chapter 1. By Friday, e-mail the instructor with

questions you have about Unicon based on the website and chapter 1.

- Read Program Visualization in a Virtual Environment, by Hirose/Ogi/Riesterer.

By Monday, be prepared to discuss this paper.

Early History of Monitoring and Visualization according to Jeffery

Others may have more and better information, but this is my version of

that subset of computing history relevant to this course.

- In the beginning, there were programs.

- And programs begat bugs. In the

punchcard era, the highlight of one's afternoon often was getting back one's

output from one's daily program run, a short stack of punched cards to the

effect that the program was not executed at all, due to an error in the

source code.

- Eventually programs started to compile or assemble.

- When

a program ran and did not produce expected output, one was supposed to go

back to the source code and read/study/stare at it to find out why. This

still works, some of the time.

When the computing industry reached a stage of having interactive, text

screen terminals, all kinds of new bugs became common-place. Along with

mankind's increased ability to generate bugs, a whole slew of tools and

techniques were developed to understand program executions, including

tracing, and source level debuggers. These tools still work, they just

don't scale well. Sadly, if you look at a modern IDE its debugging and

tracing capabilities are not much improved from what was available 40

years ago. This is (I claim) because problems in monitoring and debugging

are hard, and the cost of building new tools which might advance the

state of the art is very high.

By the 1980's, interactive 2D graphics was ubiquitous and improving rapidly

in performance. People started to use graphics to help understand program

execution behavior partly because text-only techniques did not scale well,

and partly juse because the graphics was available. A movie called

"Sorting out Sorting"

(parts 1,2,3),

originally presented at SIGGRAPH, made a compelling argument that

graphical techniques could be valuable in teaching and understanding

algorithms.

Sorting Out Sorting was done one frame at a time on truly ancient facilities.

A group at Brown University (home of graphics guru Andy Van Dam, algorithms

guru Robert Sedgewick and a cast of thousands) set out to replicate on

interactive workstations what Ron Baecker had done a frame at a time. One

result of this effort was Marc Brown's Ph.D. and related software. We will

present more history in a later session.

What About Us?

- This course is a follow-on to the work presented in my Ph.D.

(insert story of Dr. J's Ph.D. here).

-

The central premise of my Ph.D. is that if we build the

infrastructure needed to reduce program monitoring and visualization to

"no harder than writing ordinary applications" and then use a rapid prototyping

language suitable for research experimentation, we should be able to propel

the state of the art forward.

- My Ph.D. produced an execution monitoring

framework and a 2D graphics API well-suited to these goals. Since then the

monitoring framework has been improved and 3D graphics has become ubiquitous.

- This semester we will find out what we can do with this framework.

Each time I have taught this class, we have propelled the research

forward a little bit.

Announcements

There is a bblearn for this course now. It has a HW#1 posted, but I am not

so sure I like it. I may think of a better HW#1 for you, by this weekend.

Check for HW#1 on Monday. In the meantime, learn some Unicon.

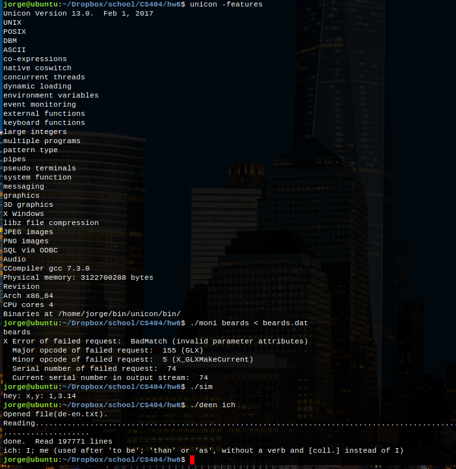

Unicon 101

- Unicon is found at unicon.org

- We will teach Unicon from scratch in this class

- Unicon programs' source code are in .icn files and are compiled

into VM bytecode by default (execution model vaguely resembles Java)

- Unicon programs are organized similar to C++, as a set of functions

and optionally, classes, starting from main().

- For this class, we will have to make sure that you have access to a

machine with unicon, including its (2D and 3D) graphics facilities.

- Unicon comes with a simple IDE; feel free use it or skip it. I use

Emacs but the IDE has a nice help menu that will quicklaunch most

reference documentation. IDE has a known bug on MacOS.

- Unicon itself has one pending 3D bug I intend to fix for this class,

and we may encounter other bugs. In response to those, I will almost

surely (re)build Unicon from sources one or more times during the

semester. You can plan to re-install one or more times. It is research

software. If you were to build Unicon yourself after I announced a bugfix,

this generally requires a complete C/C++ compiler, with supporting tools

such as "make" and "subversion". On Windows we use MSYS and Mingw64.

Unicon: the Easiest Parts

Let's ssh into a test machine to live-demo the following:

| Types | Control Flow

|

|---|

| string | success vs. failure

|

| integer | if-then-else

|

| real | while-do

|

| cset | calls, argument rules

|

| list | generators

|

| table | case-of

|

| file | every-do

|

Alternate Resources for Unicon Study

None of this is assigned reading. It is here for your convenience;

you know, in case you just hate the Unicon book.

Monitoring Framework Intro

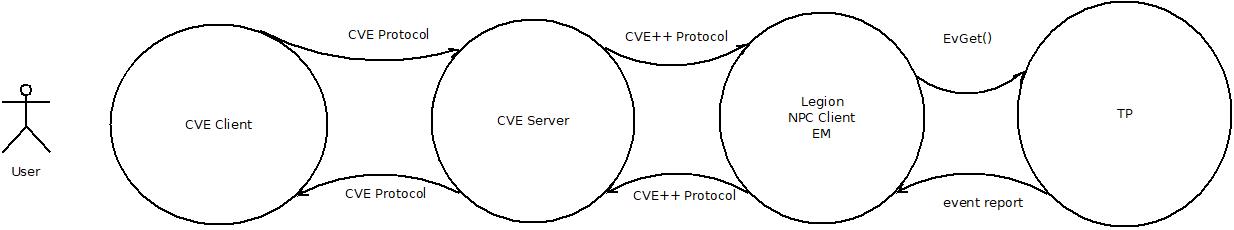

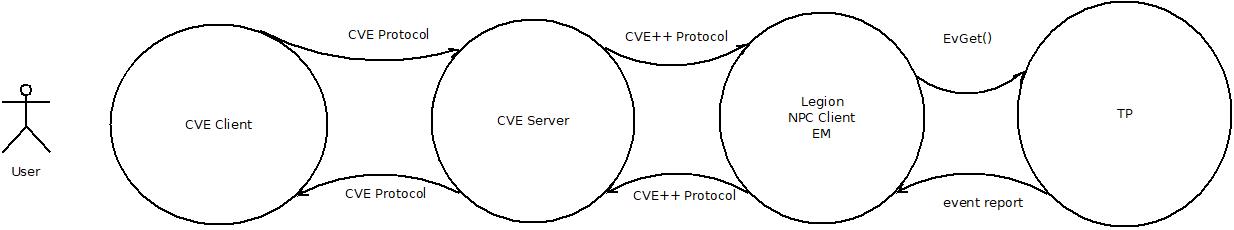

An execution monitor (EM) observes events in a target program (TP).

There are two-process, one-process (callback), and thread-models.

- two-process model

- EM and TP communicate via network sockets, pipes, or files.

- one-process/callback

- The TP calls the EM when an event occurs. The EM is

organized as a set of callbacks, i.e. it doesn't have

its own main() or control flow, it just responds to things.

- thread

- EM and TP are threads in the same address space, making

communication far easier.

Which model do most debuggers use? The two-process model. Which model should

we use for visualization tools? What is different about their requirements?

- two-process model

- Pros:

- easiest to do language-neutral and cross-language.

- Least intrusive

Cons:

- slowest option; fast enough for debuggers but not for visualization

- low-level and/or difficult access to TP state

- one-process/callback

- Pros:

Cons:

- intrusive (monitor shares target program stack and globals)

- most difficult for monitor programming (no control flow!)

- thread

- Pros:

Cons:

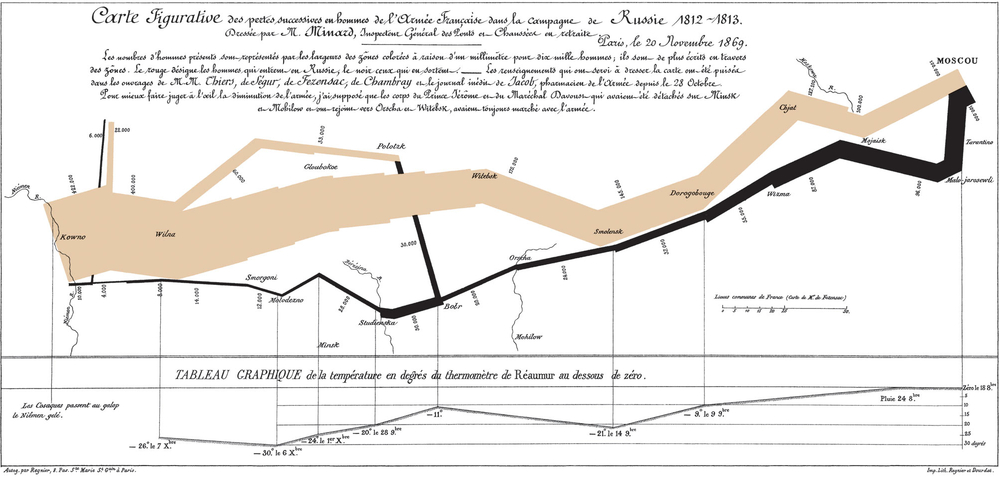

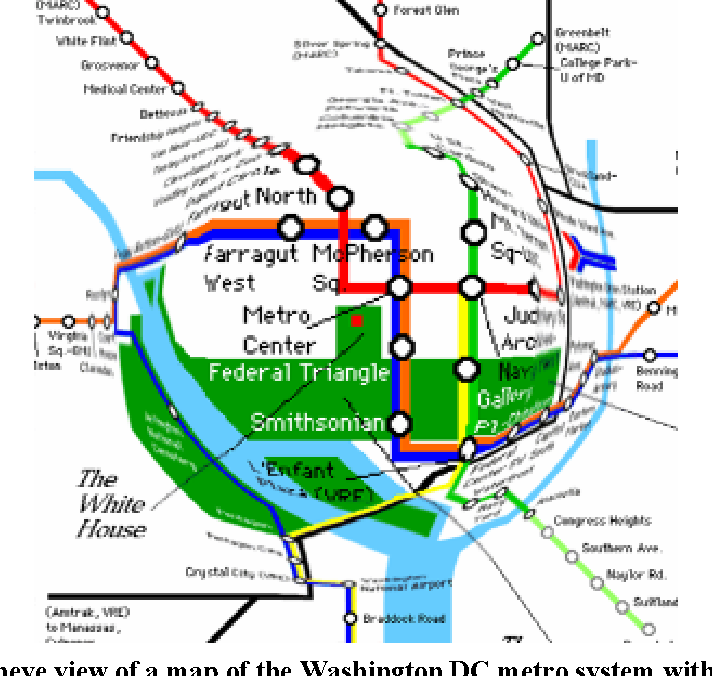

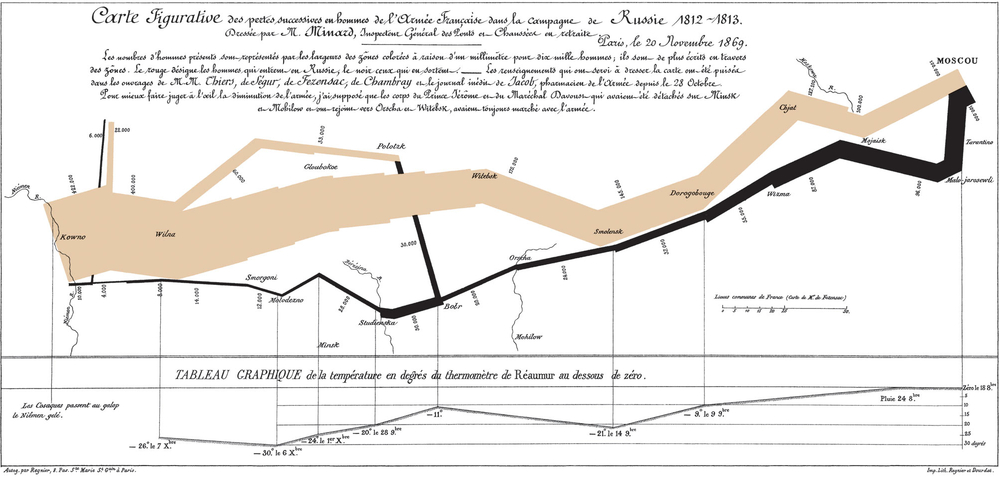

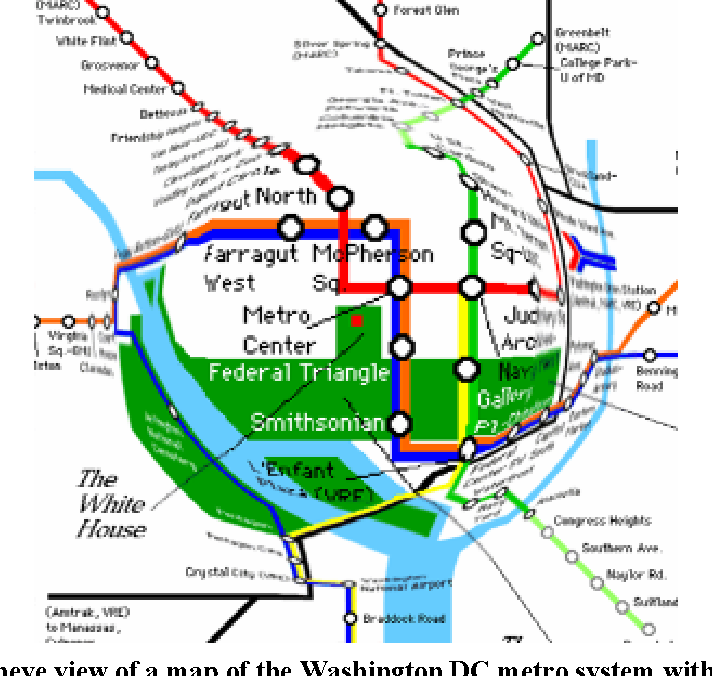

Graphic Design of the Day: a map

Napoleon's March into Russia: proof you can

legibly plot extra dimensions atop a map.

Maps have legends to explain what's on them,

along with two primary dimensions which are

intuitively based on actual geometry.

lecture 3

Reading for this week

Compared with last time I taught this class, I want you to spend enough time

to learn Unicon, or rather the 1/2 of it that will be useful

for writing visualization tools.

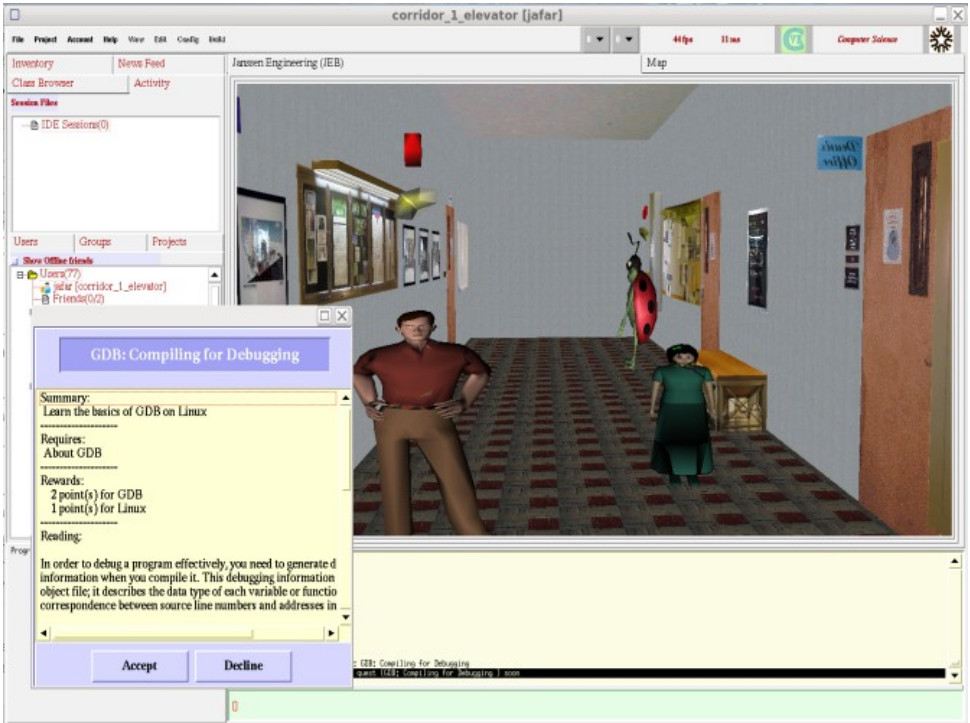

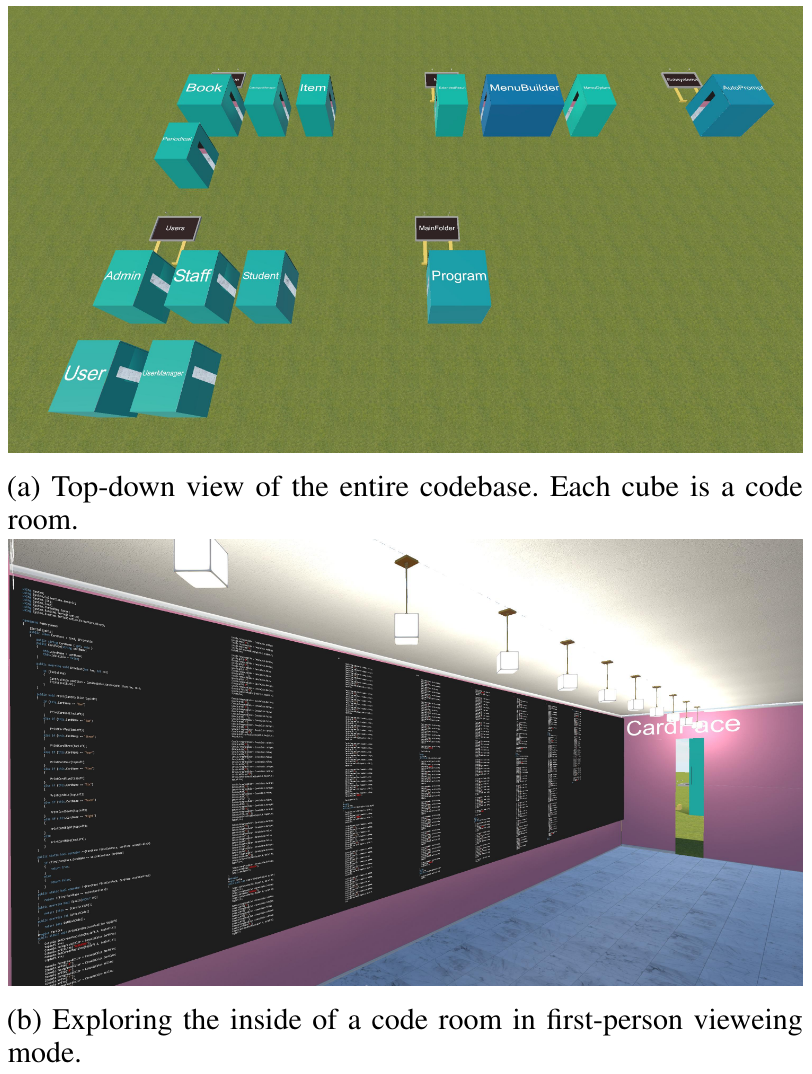

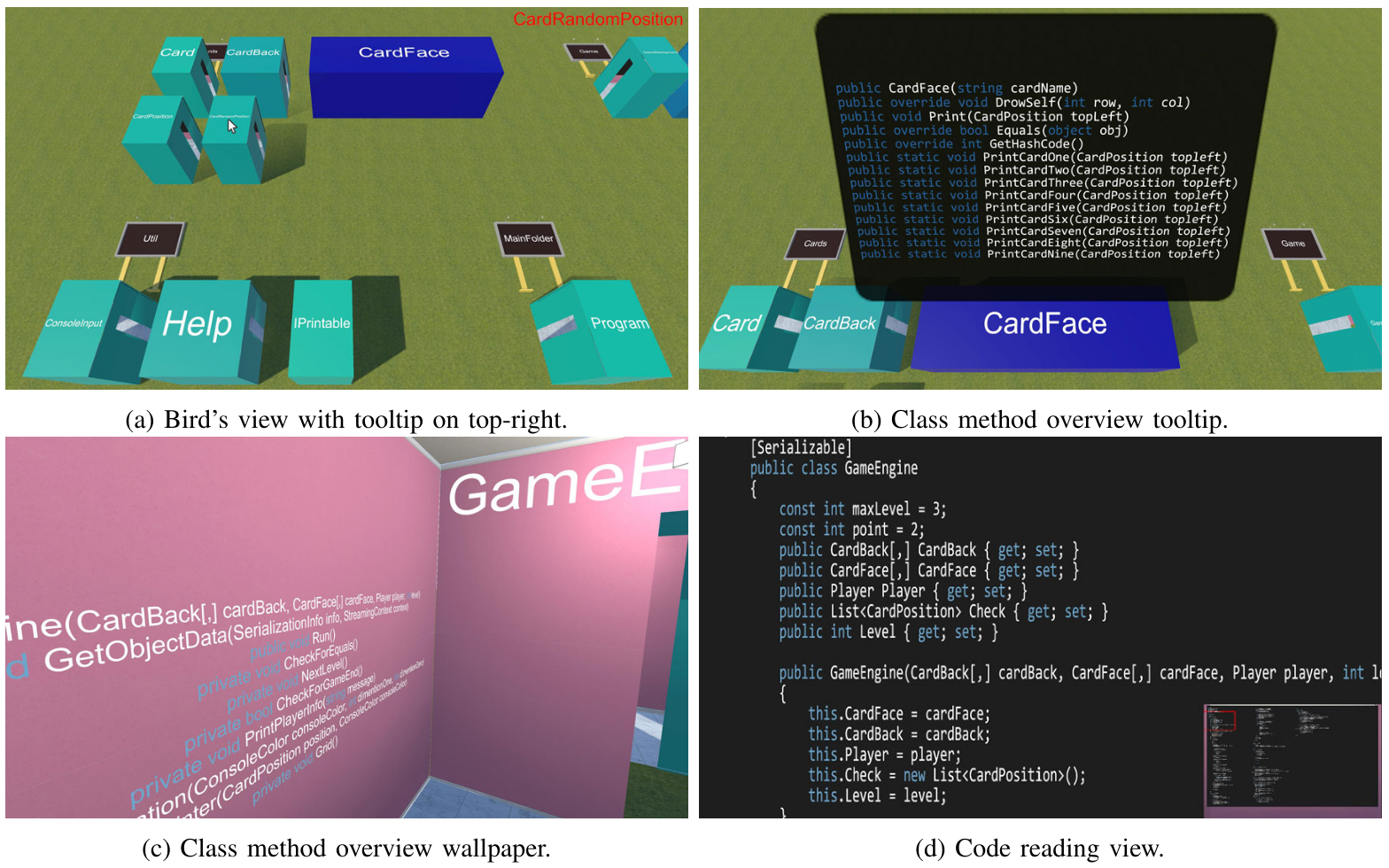

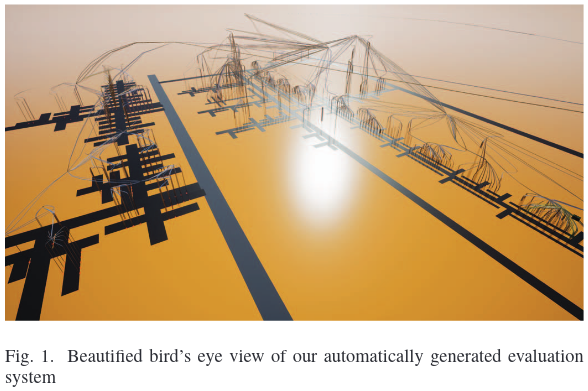

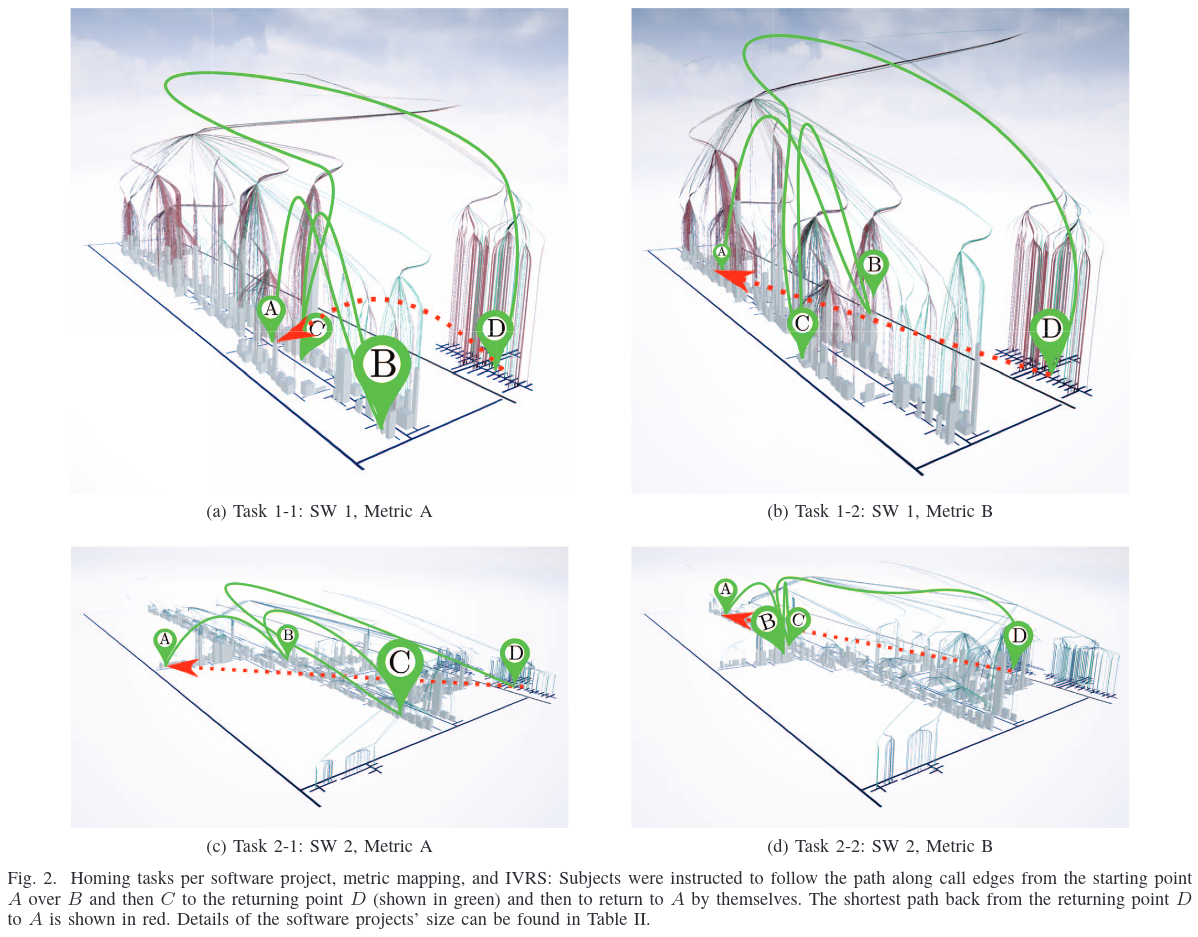

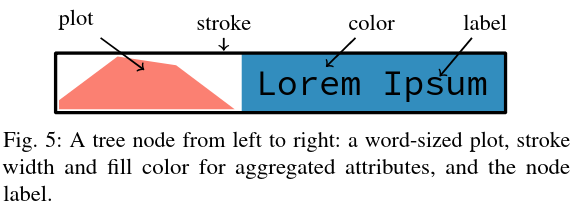

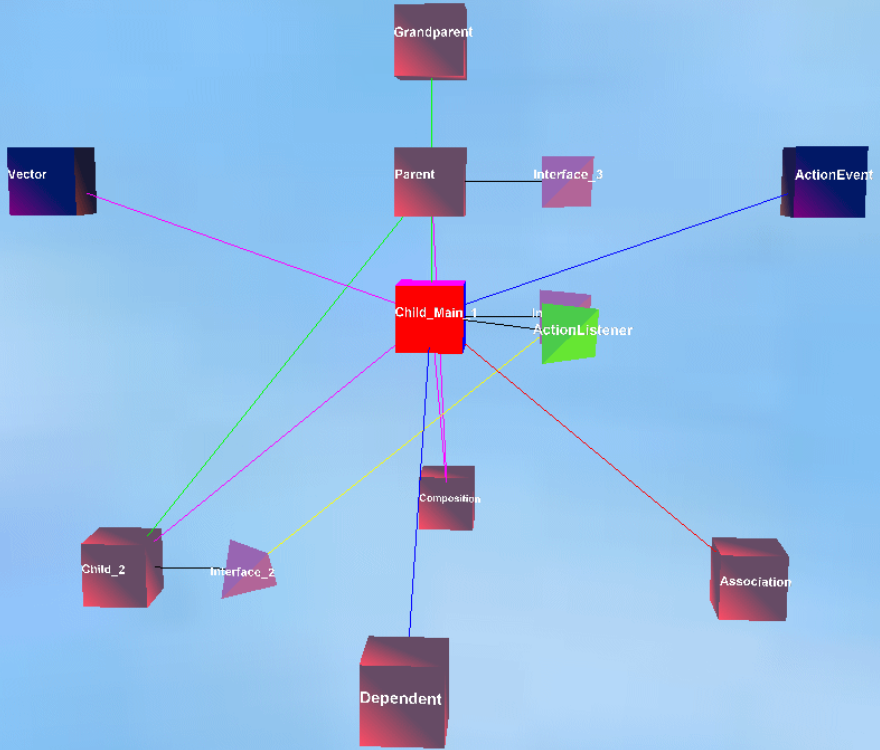

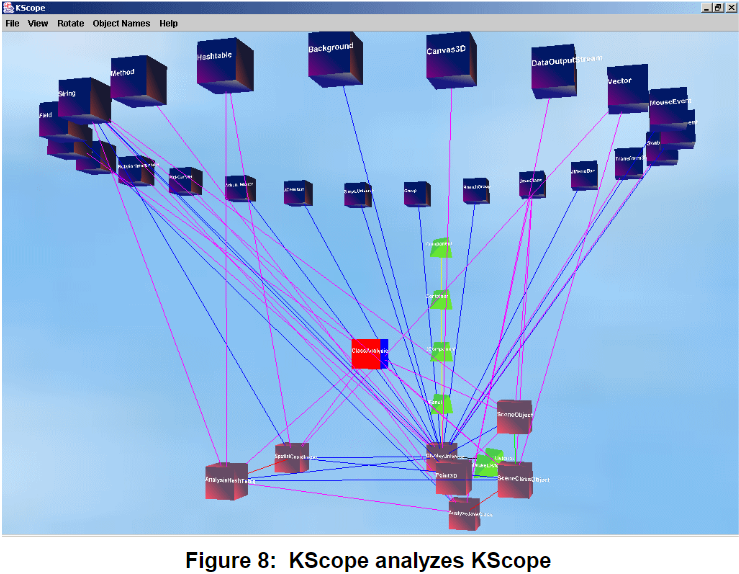

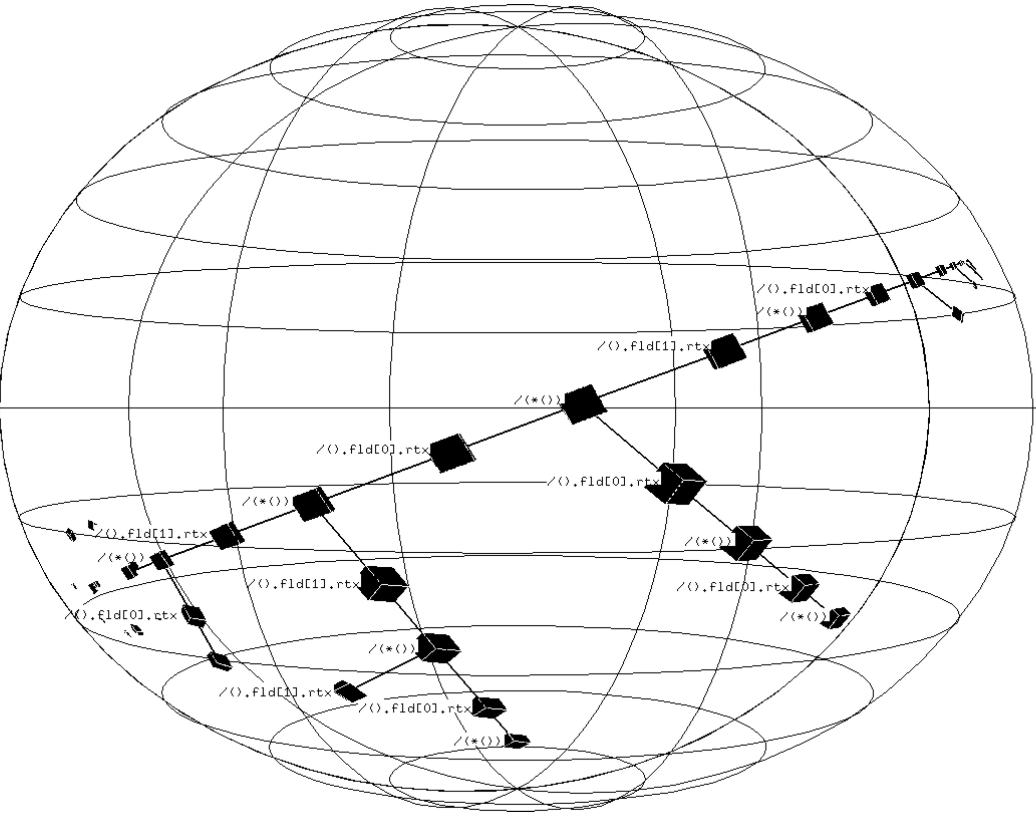

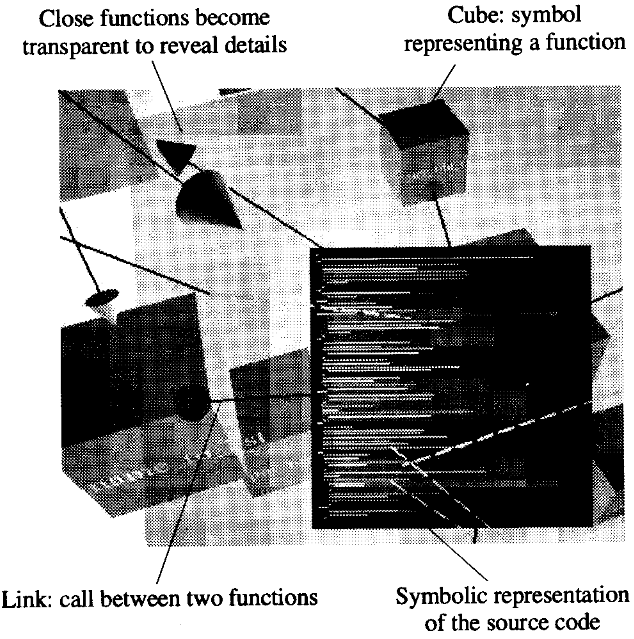

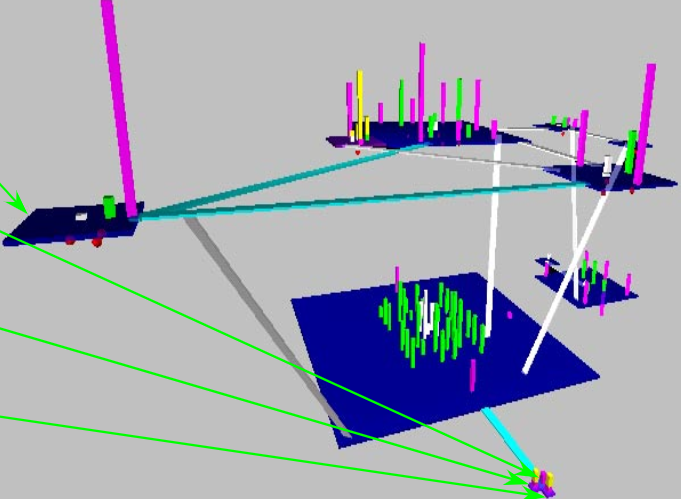

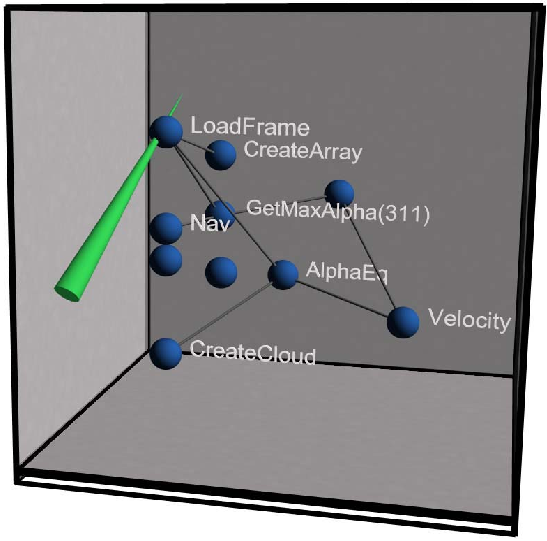

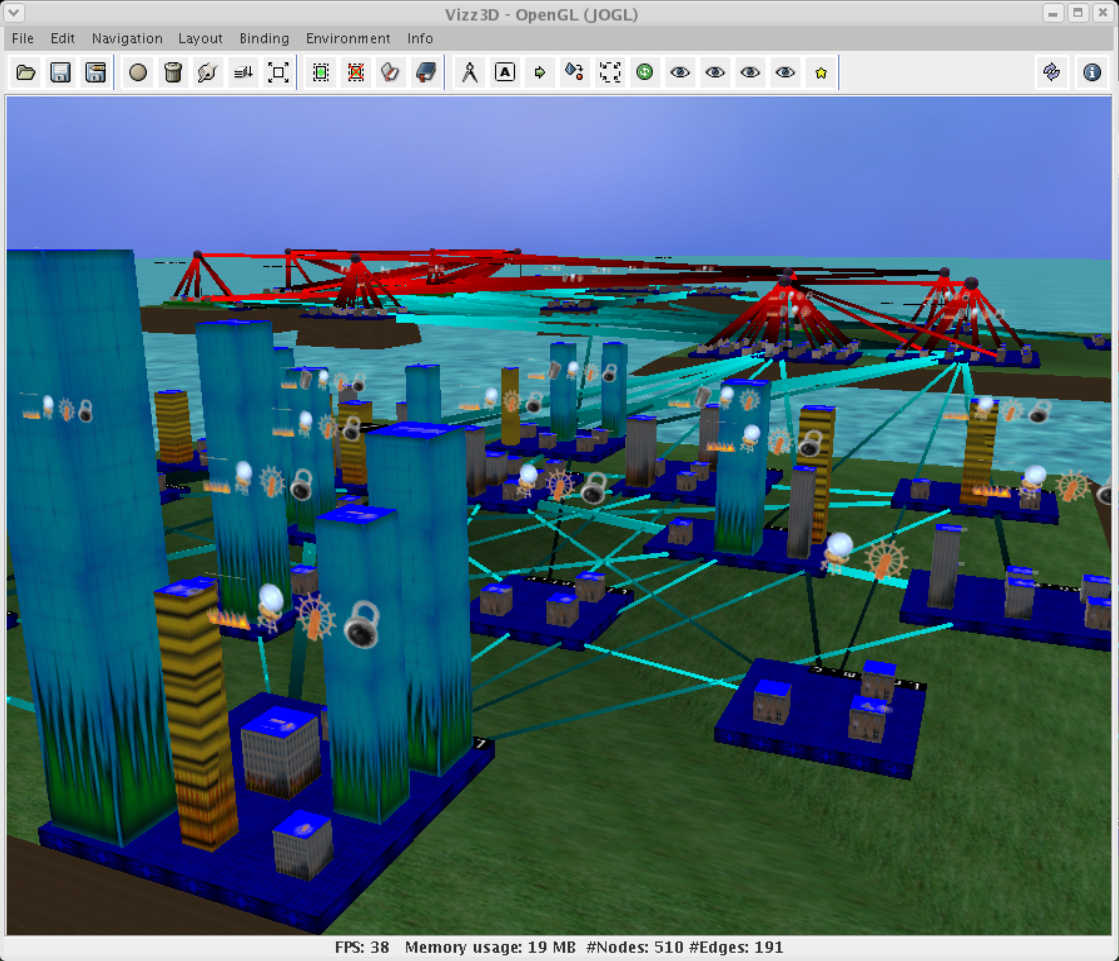

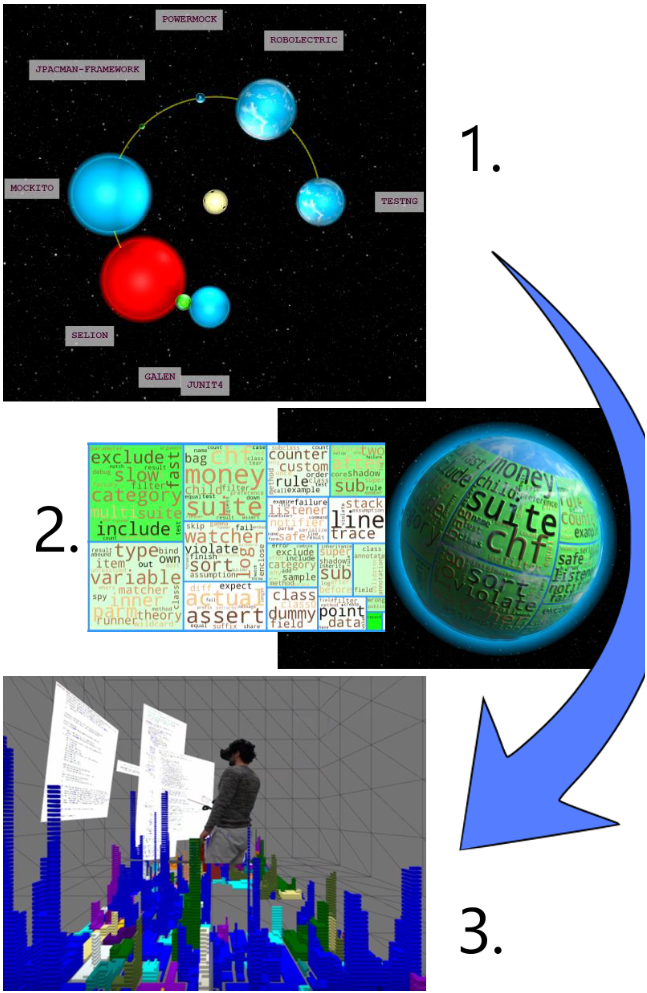

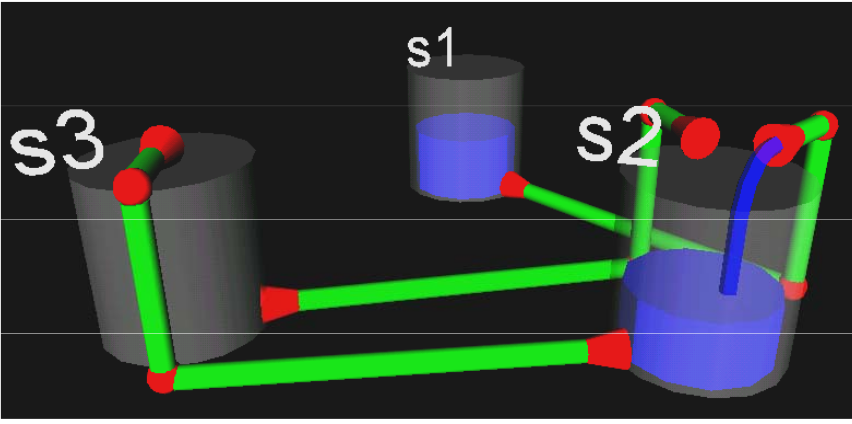

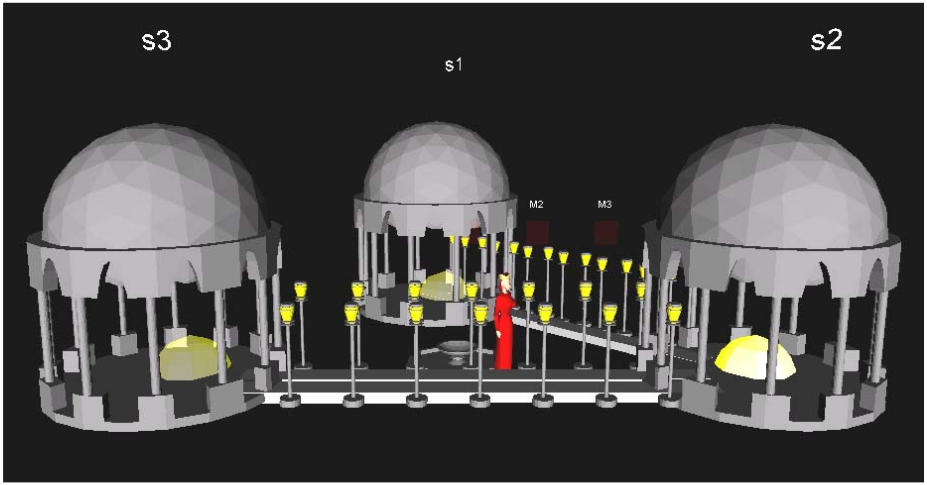

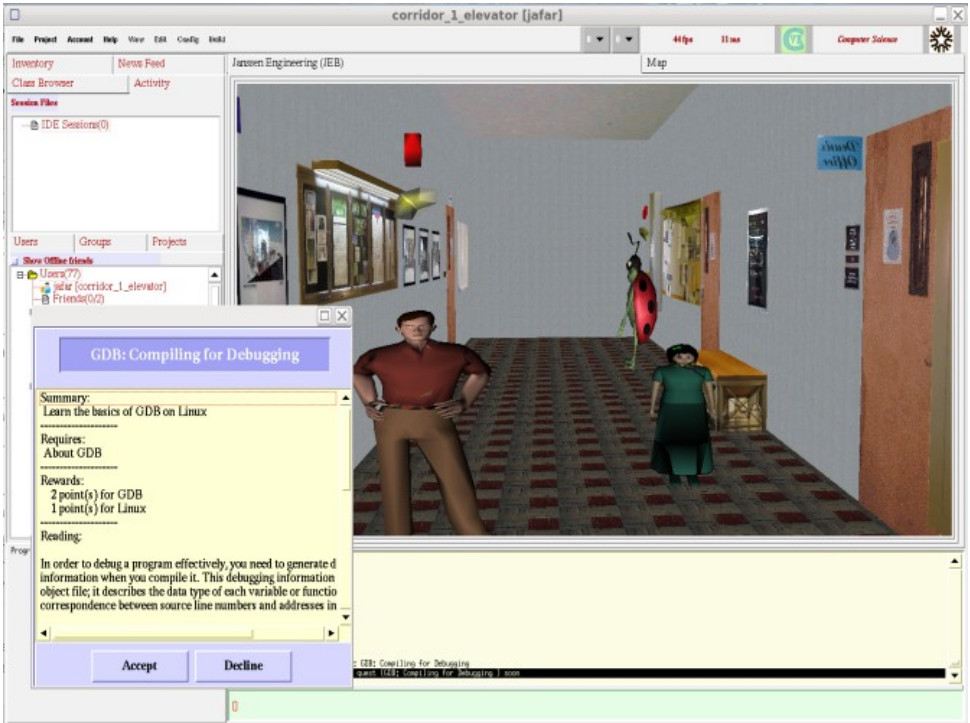

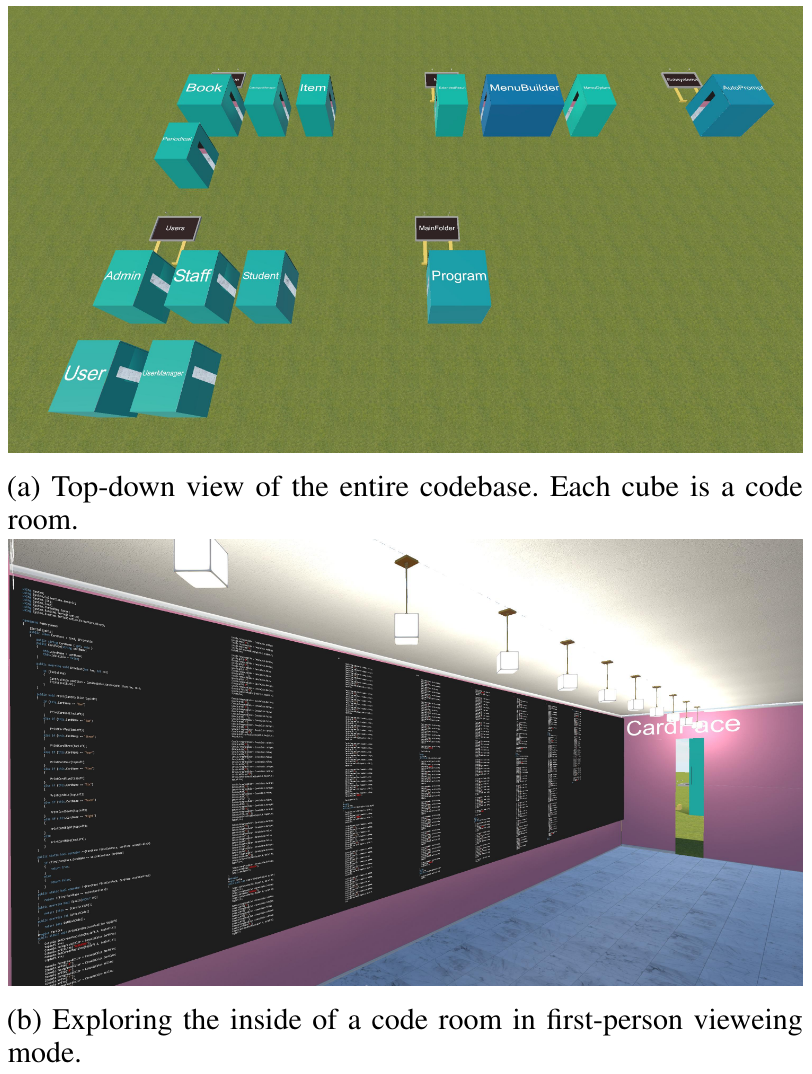

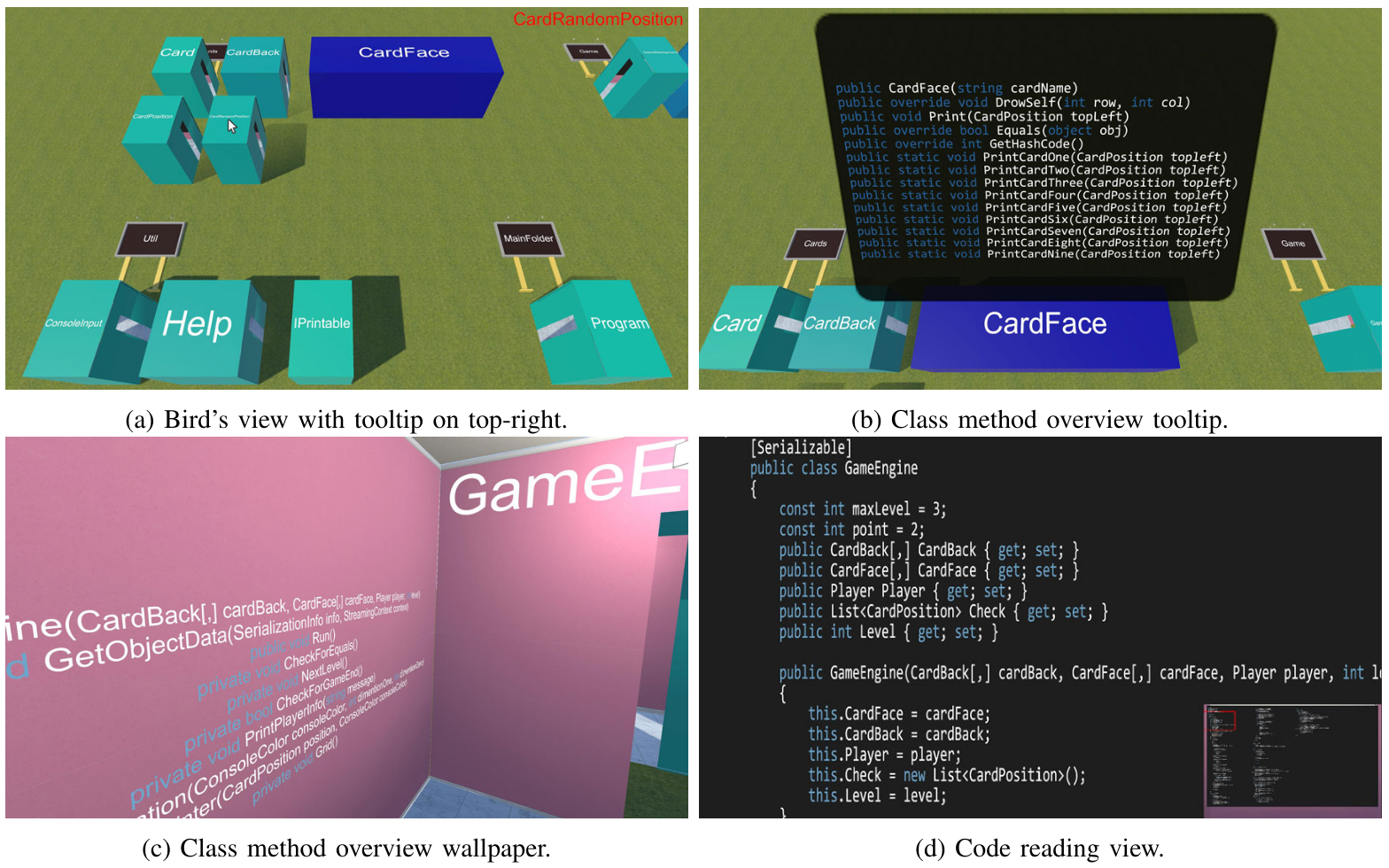

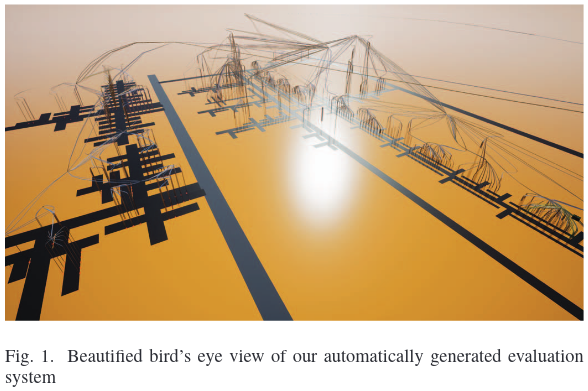

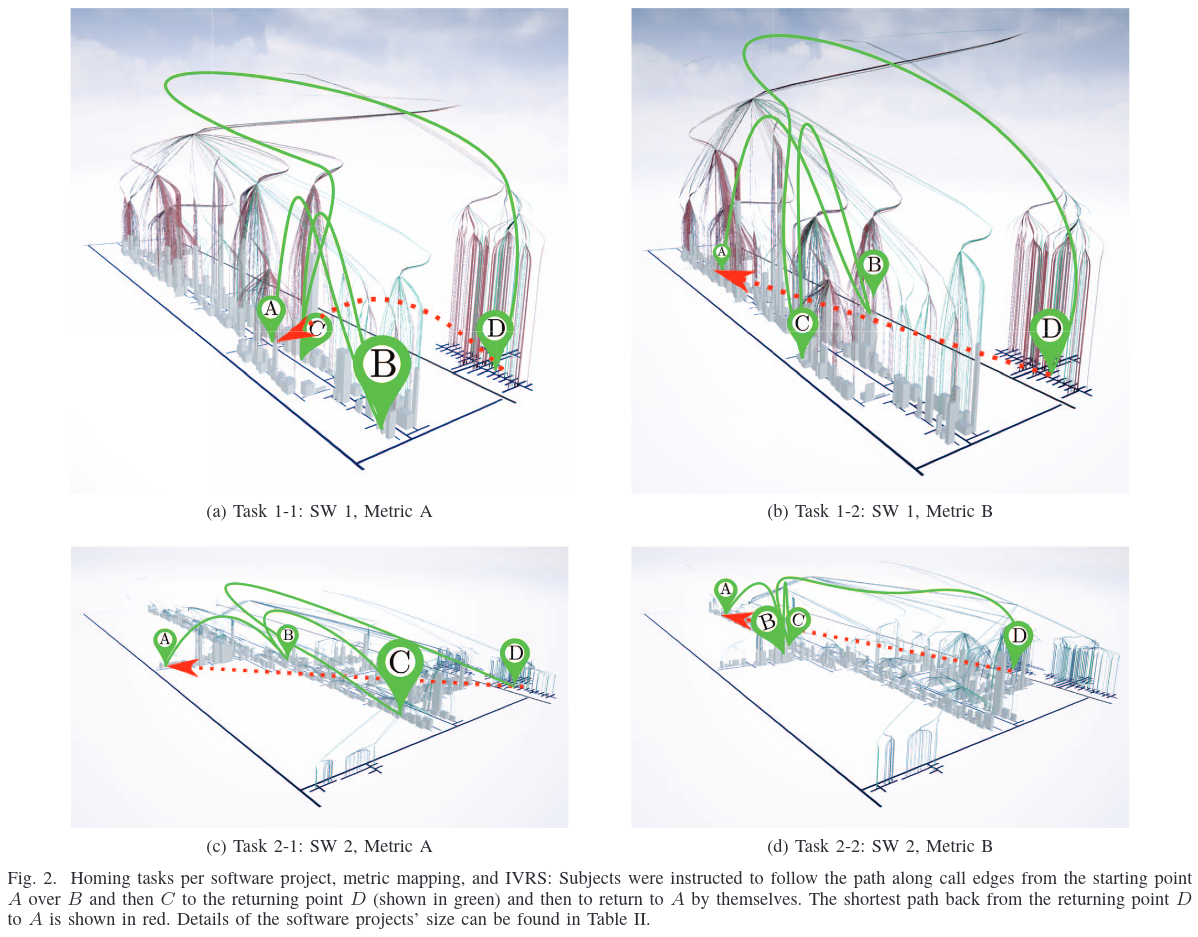

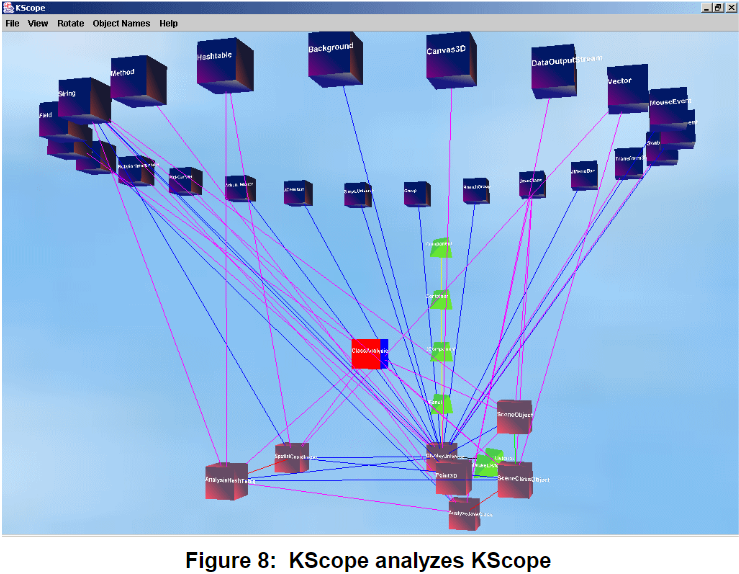

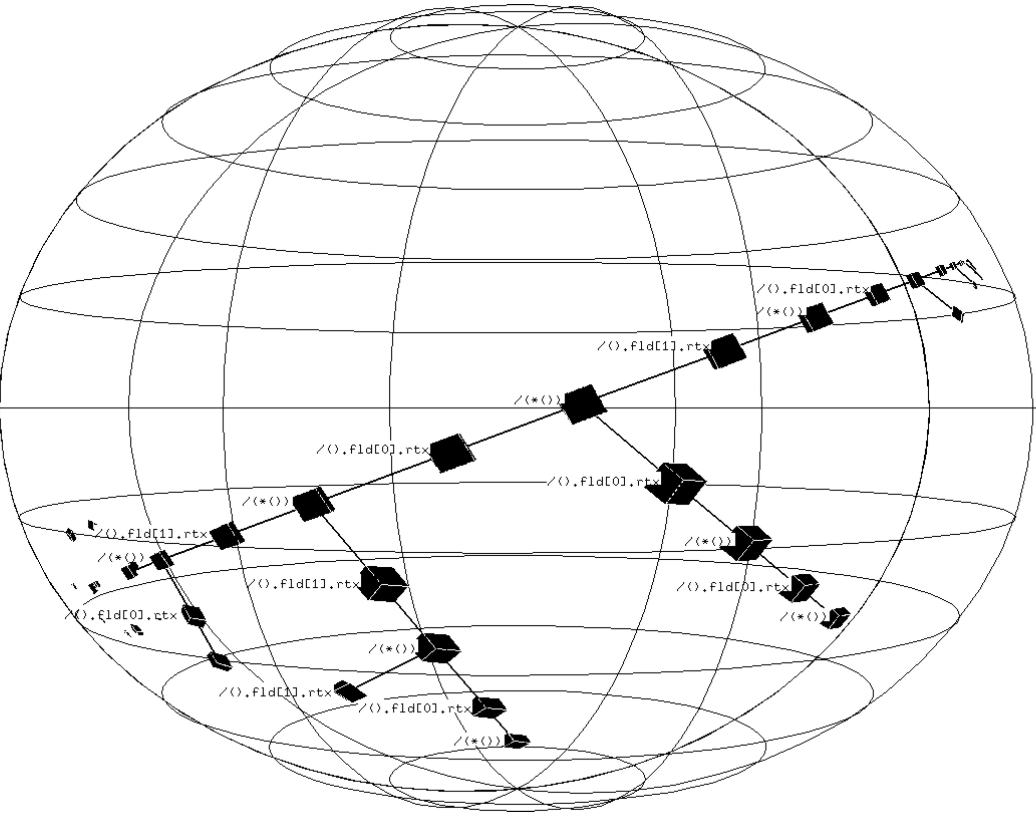

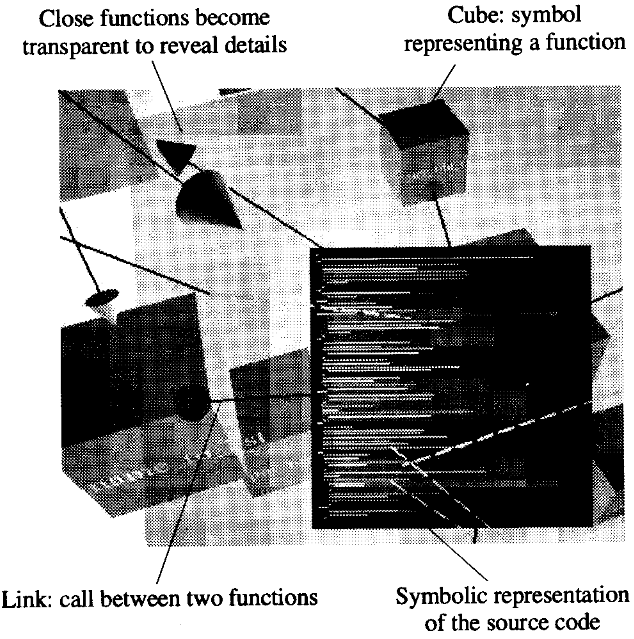

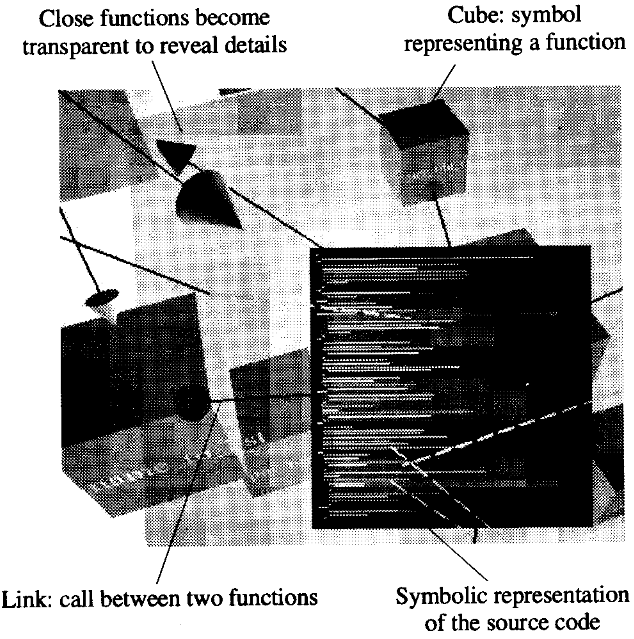

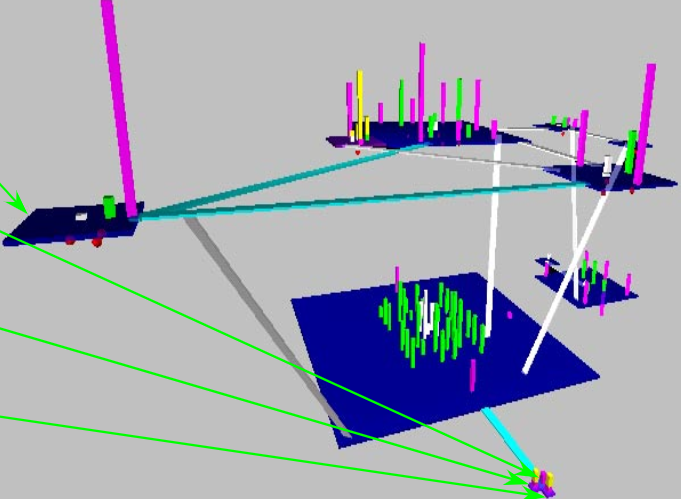

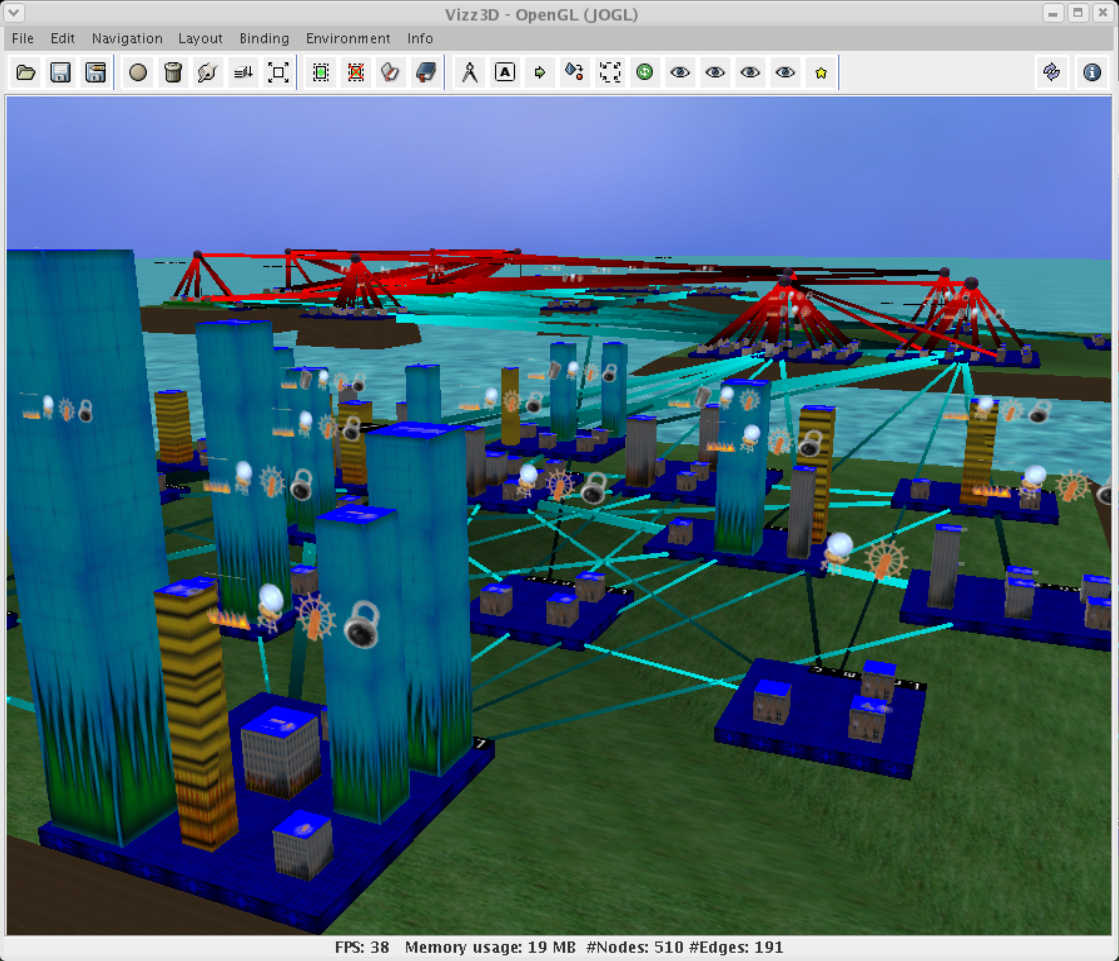

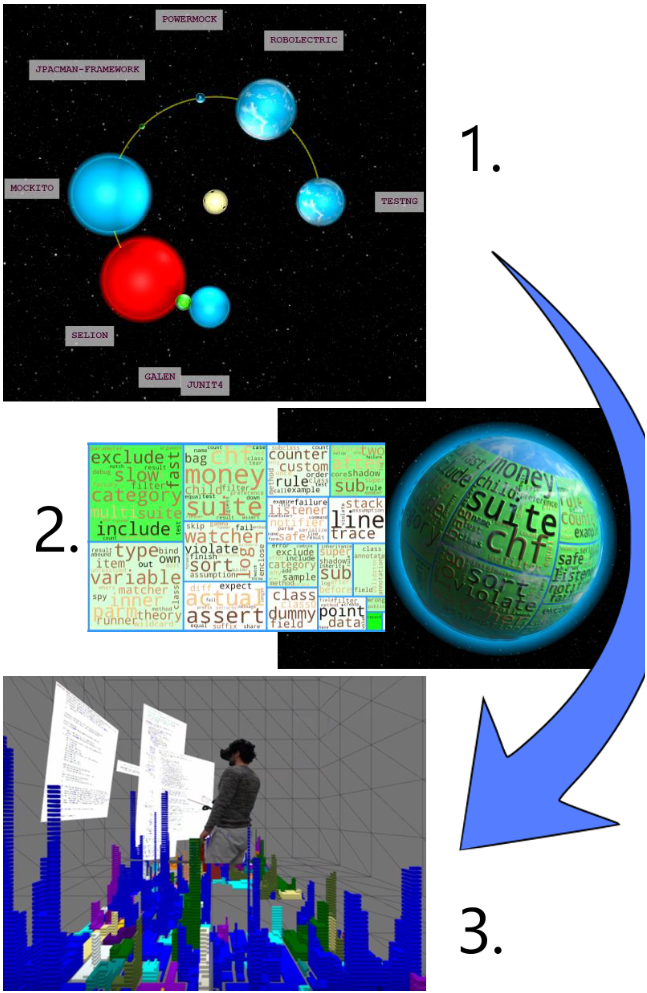

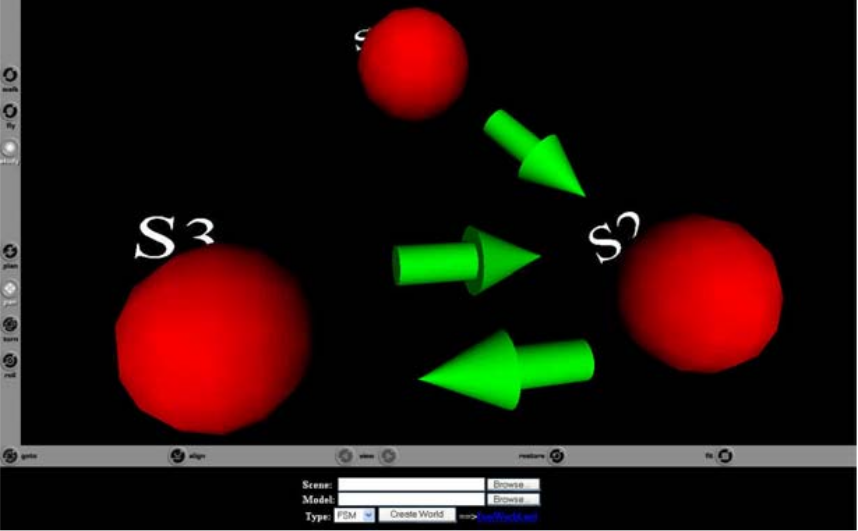

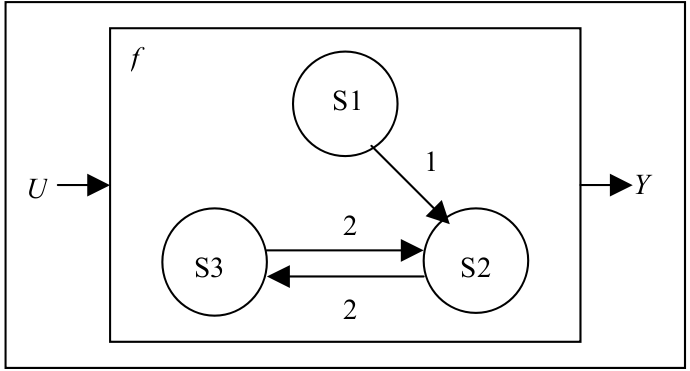

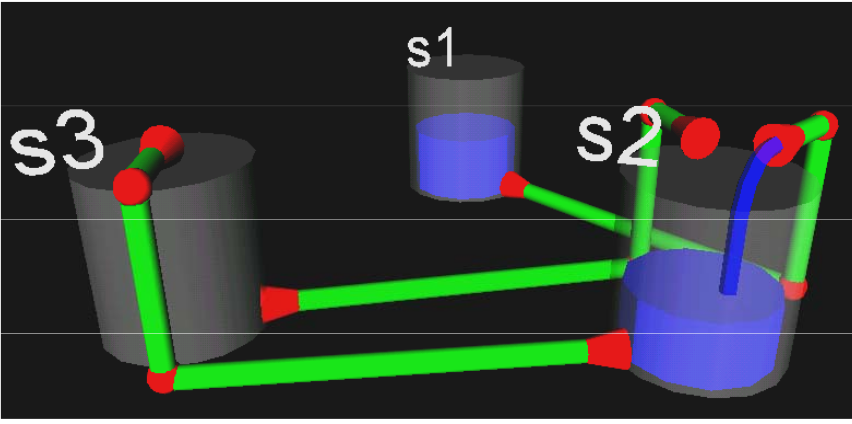

Highlights from Hirose

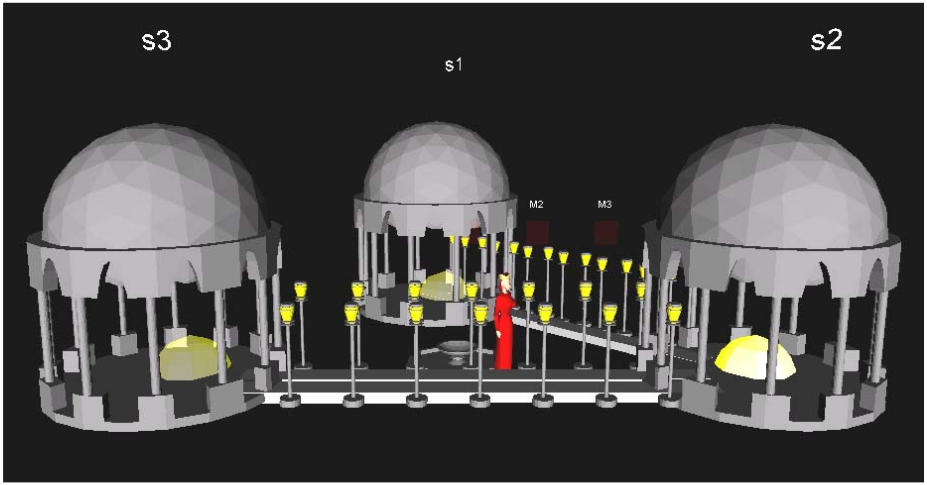

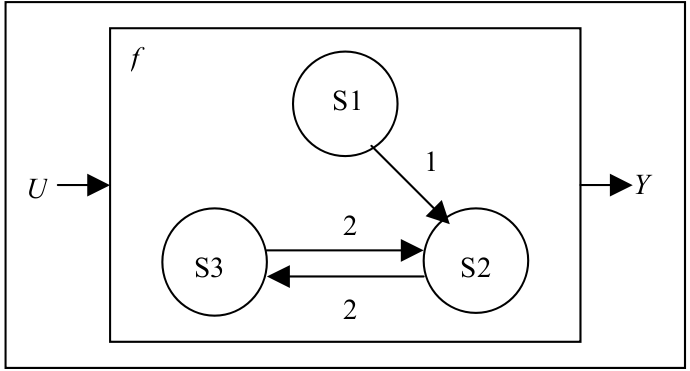

[Hirose97] describes research from the University of Tokyo, presented

at the annual conference of the World Society for Computer Graphics.

- What do Hirose et al mean by "virtual environment"? What does a

virtual environment have to do with program visualization?

- Goal of visualization: help human build a cognitive model

of a large, complex software system.

- when are graphic representations more useful than purely textual

representations?

- What is the paper's proposed solution to the "bird's nest graph"

problem with program visualizations, which is that the many

crossing lines make the depicted graph difficult to understand

- programs have neither intuitive appearance nor physical form.

what info to show? How to depict it graphically?

How to position it within 2D or 3D space?

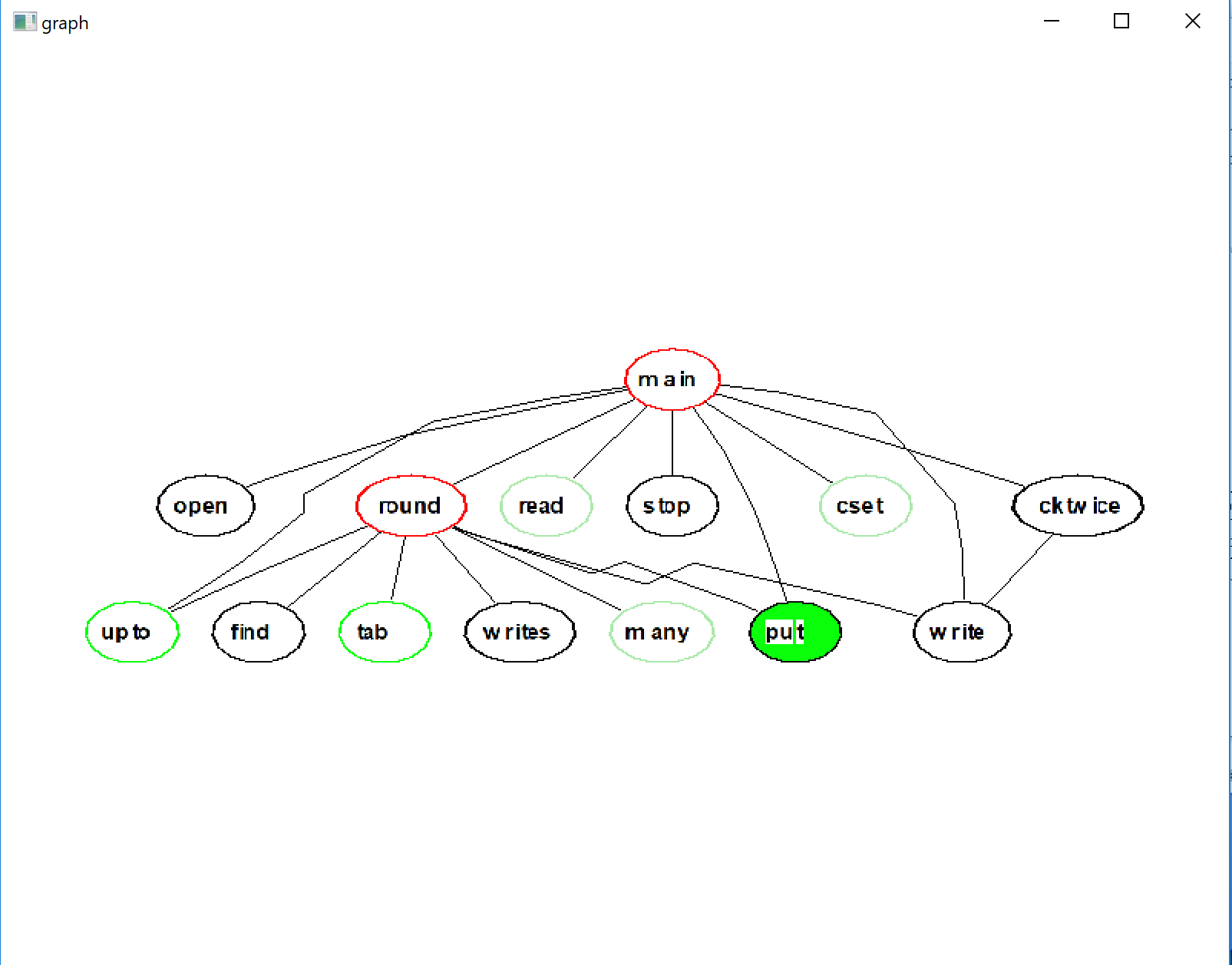

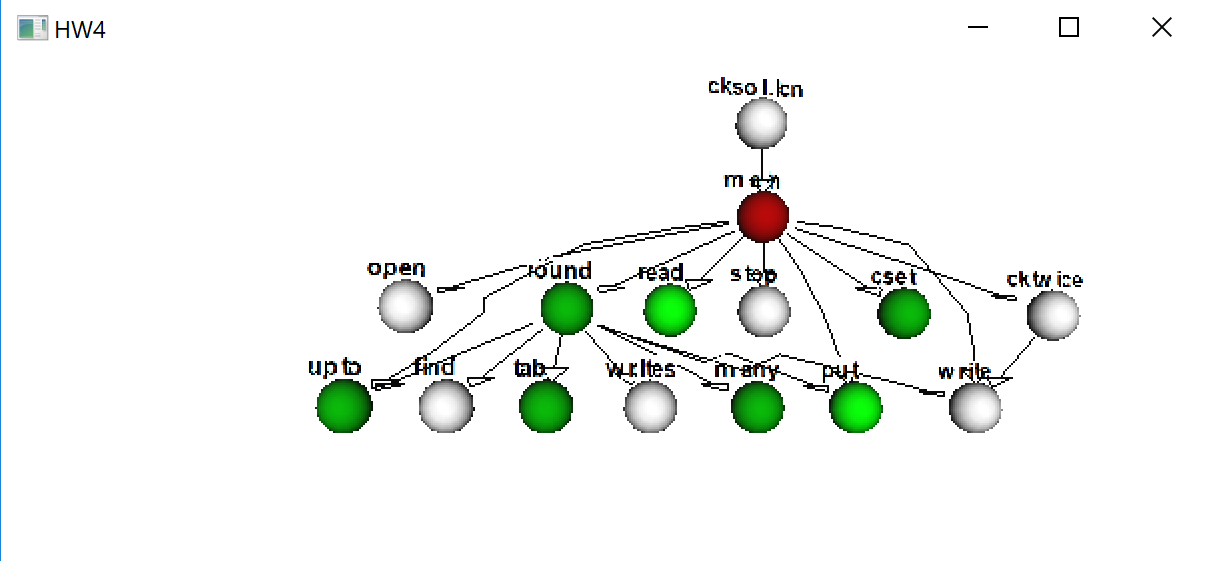

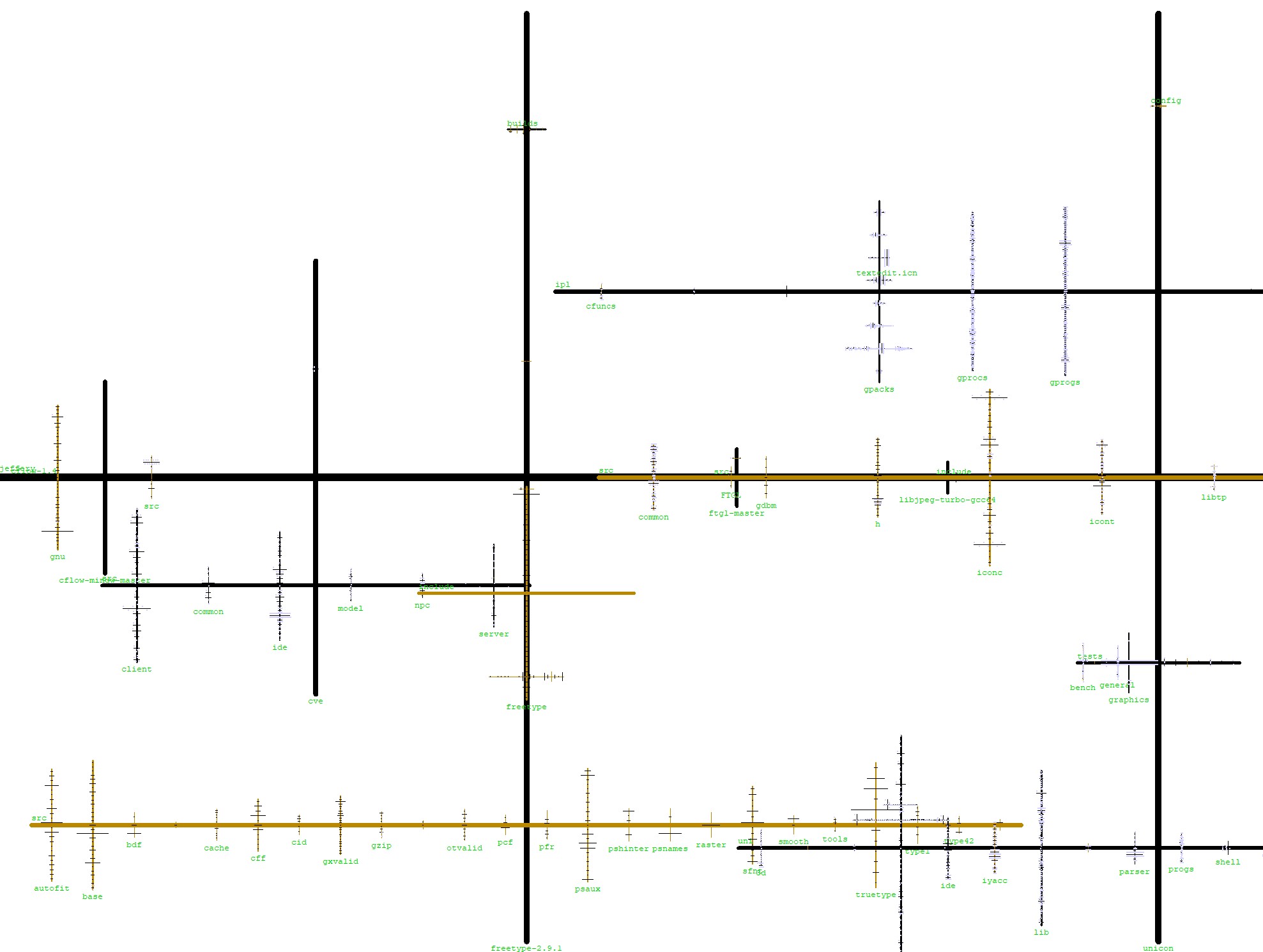

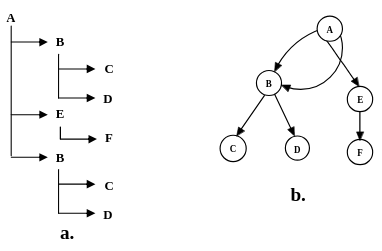

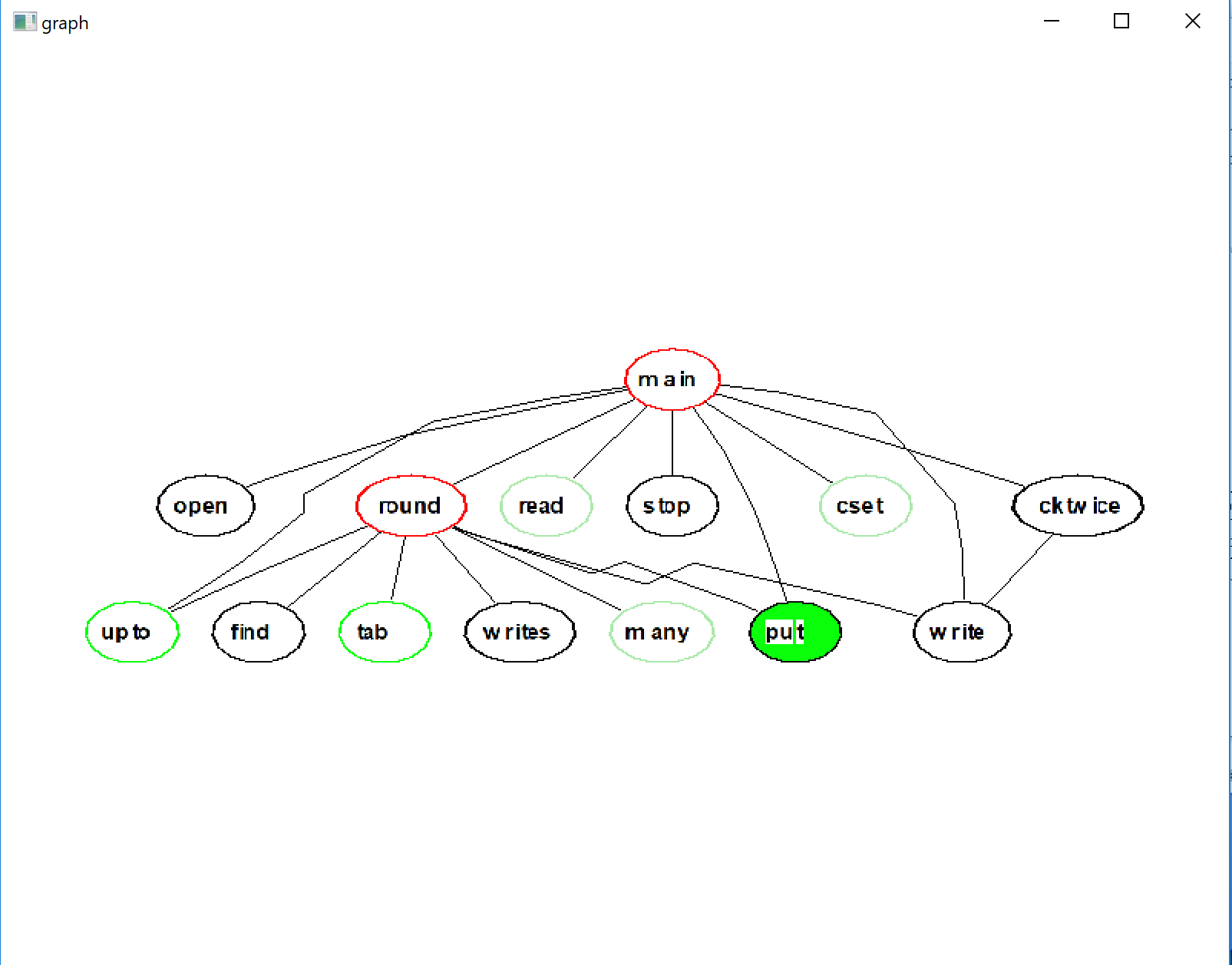

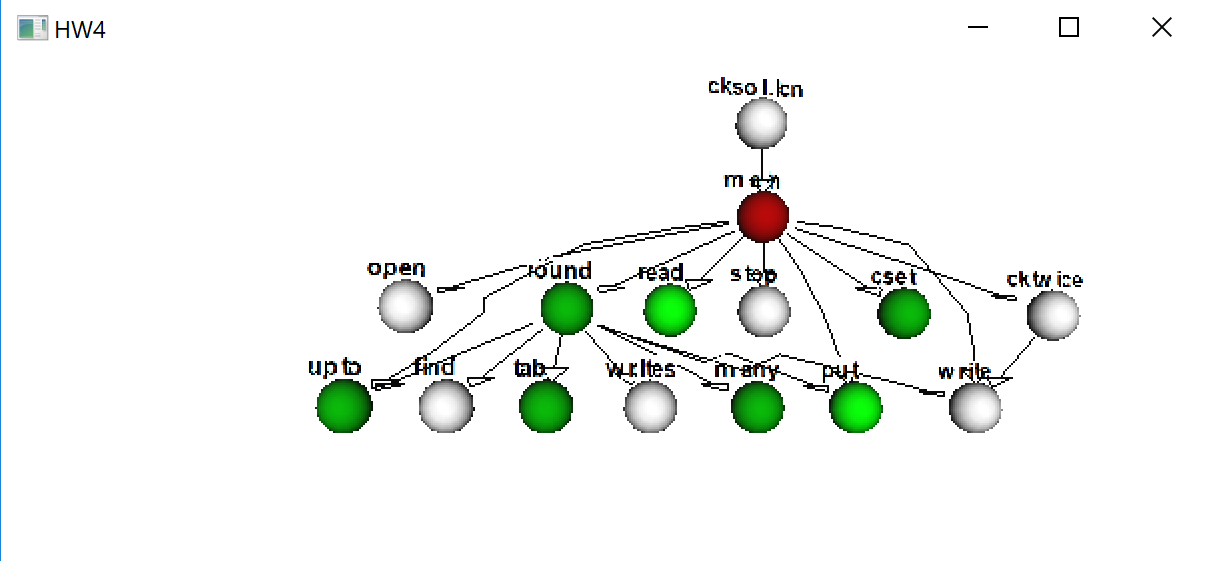

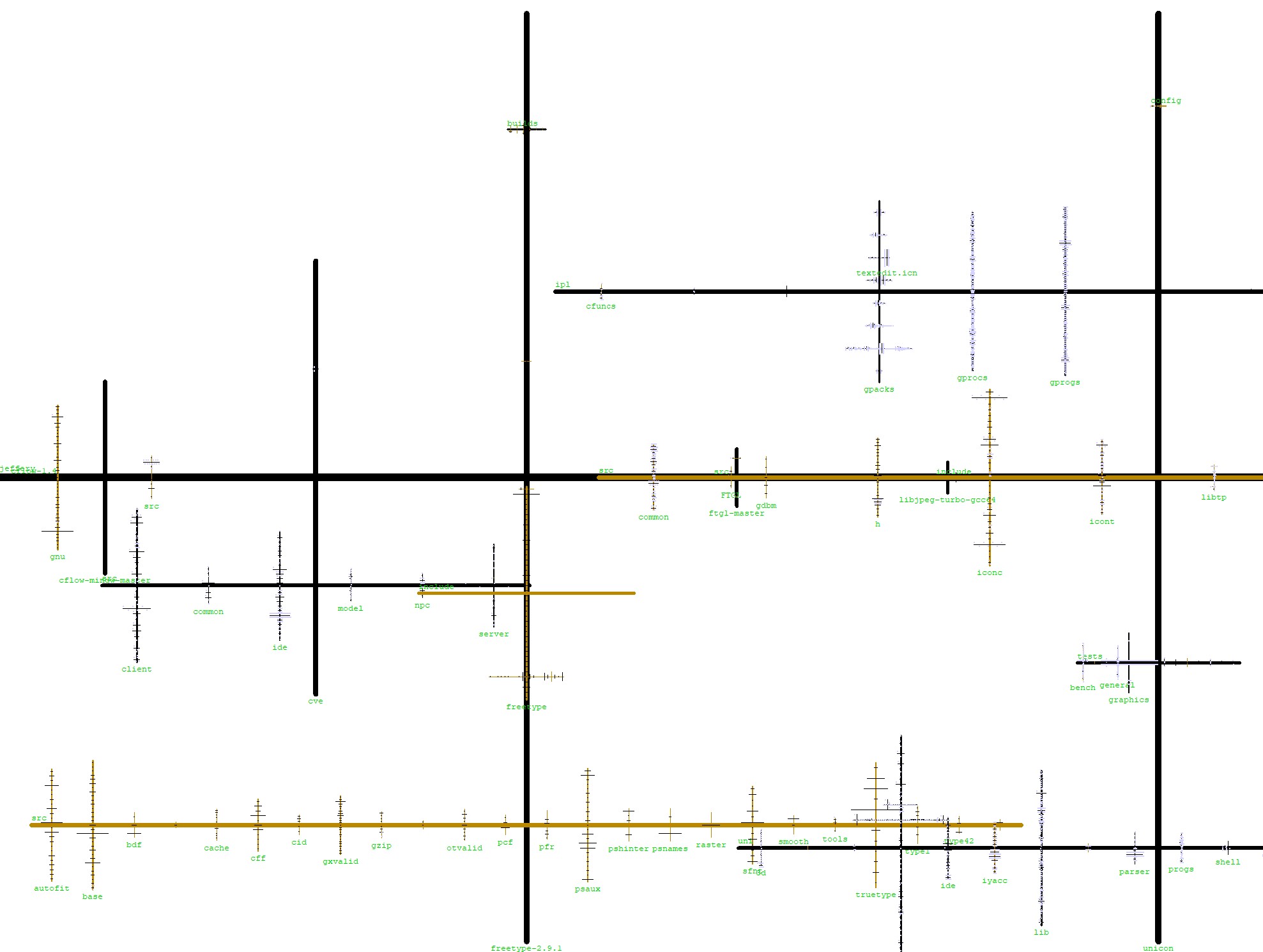

- Proposed static analysis: from source code, generate an

"intermediate file" containing:

- list of source files

- list of functions in each file

- for each function, a list of calls to other functions

- "data structures"

(Hirose note: "intermediate file" format might make sense for

multiple/many languages, allowing subsequent visualization on

all of them).

(Jeffery note: many tools have been written to extract this before,

might not have to do it from scratch)

- don't ask the user to place all the objects -- too much work

- force-directed placement algorithm:

- each object exerts a repulsive force on every other object

- relationships between objects exert attractive forces

- model it as a physical system, start with random positions

within a small space and turn forces loose.

layout is finished when it reaches equilibrium

- Fundamental in visualization: too much information. Too complex. Need to

select/simplify/abstract. For example: merging similar entities,

omitting peripheral ones.

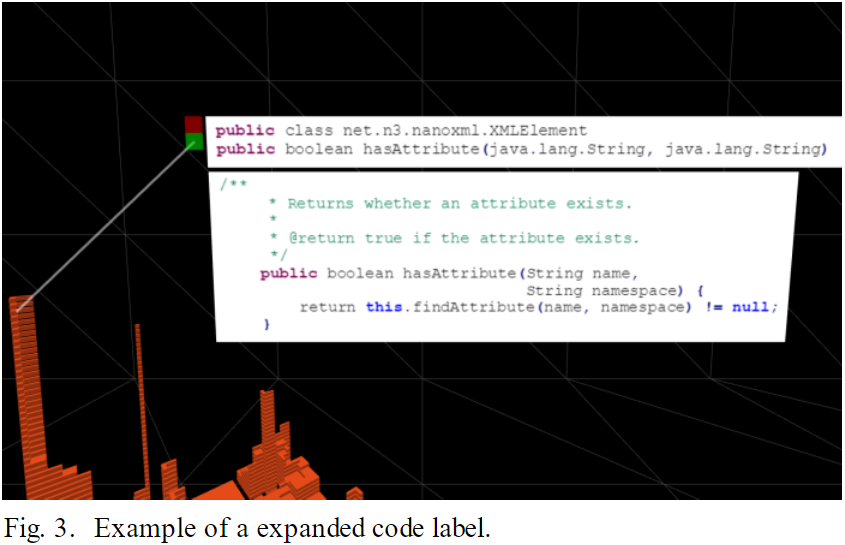

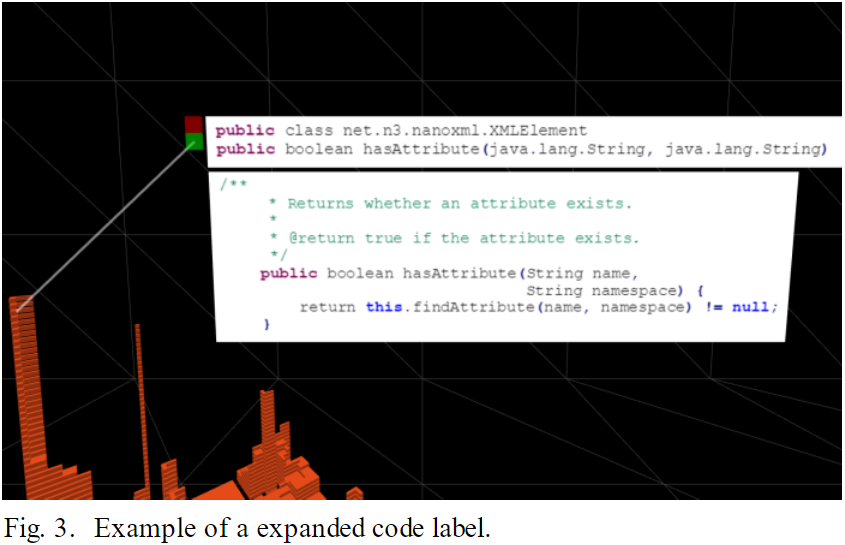

- Levels of abstraction, levels of detail. At a distance: only show

external coupling. From close up: object becomes transparent,

source code is visible.

- Color-coding : a limited, extra dimension. Example: color GUI

components red, database components green, computations blue.

Example: distance from main() (or current point of execution)

indicated by brightness/saturation/grayscale. Hirose does not

note the significant percent of users with some colorblindness.

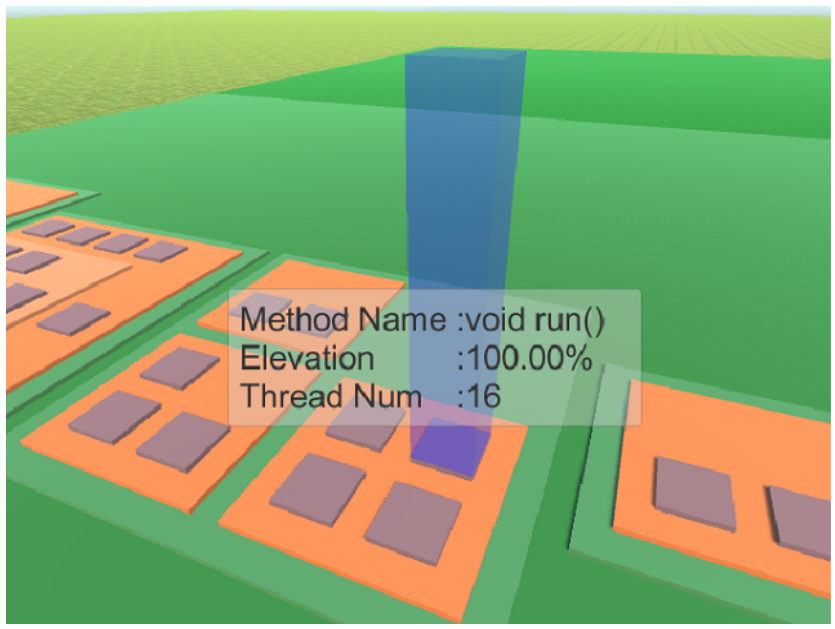

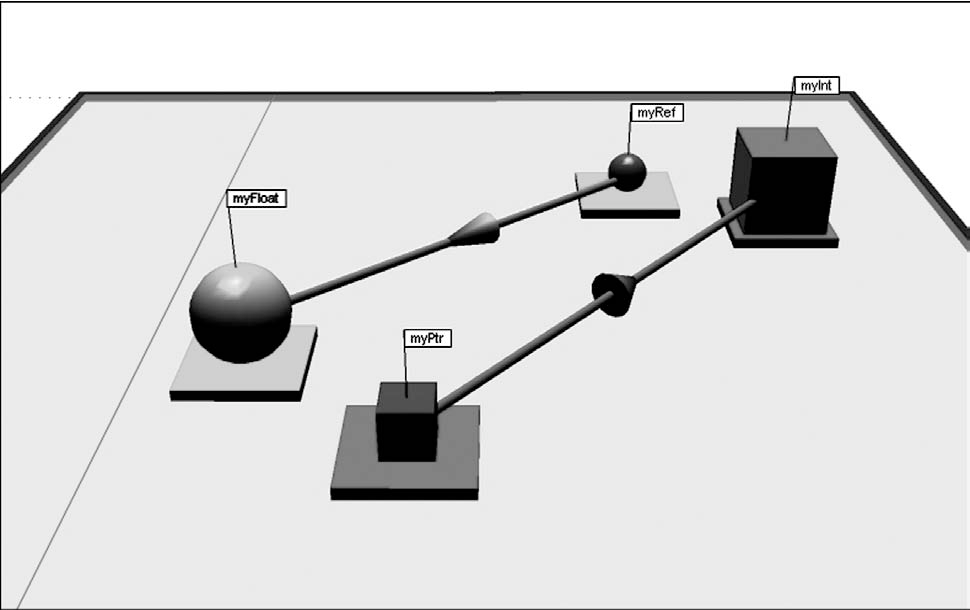

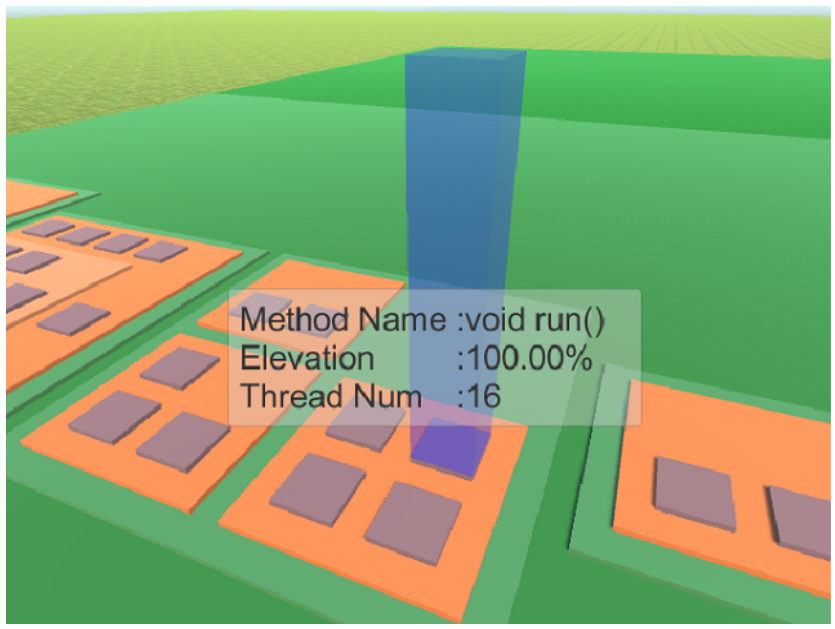

- Geometric appearance. E.g. size and shape. Hirose uses cubes

sized to the log of the #lines of code. Shape is another limited,

extra dimension that can be used.

- Does VR matter? What impact do VR goggles or a CAVE have on users

ability to understand the information we want to depict in this class?

Cheesey Movie References

What movies present topics relevant to this class, i.e. program

visualization, program behavior monitoring, or virtual environments

where such activities occur?

- TRON

- Disclosure

- Ready Player One

Graphic Design Principles

We need graphic design principles in preparation for visualization work.

The following can be attributed to Edward Tufte, a renowned ivy league

graphic designer who has written some beautiful books.

- show the information

- show as much as you can with as little ink as possible

- remove ink that isn't showing useful information

- remove redundant information

- revise and edit

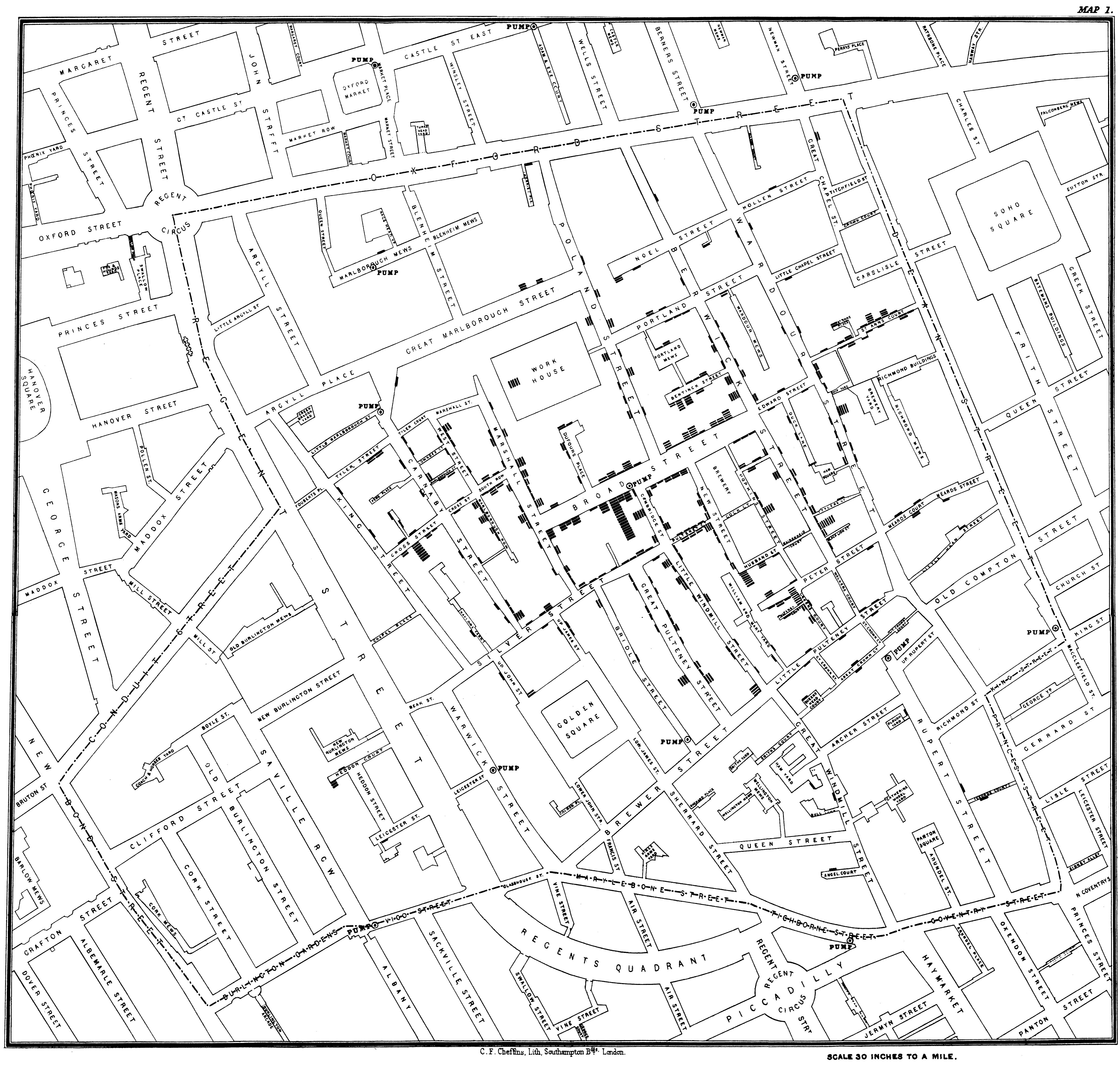

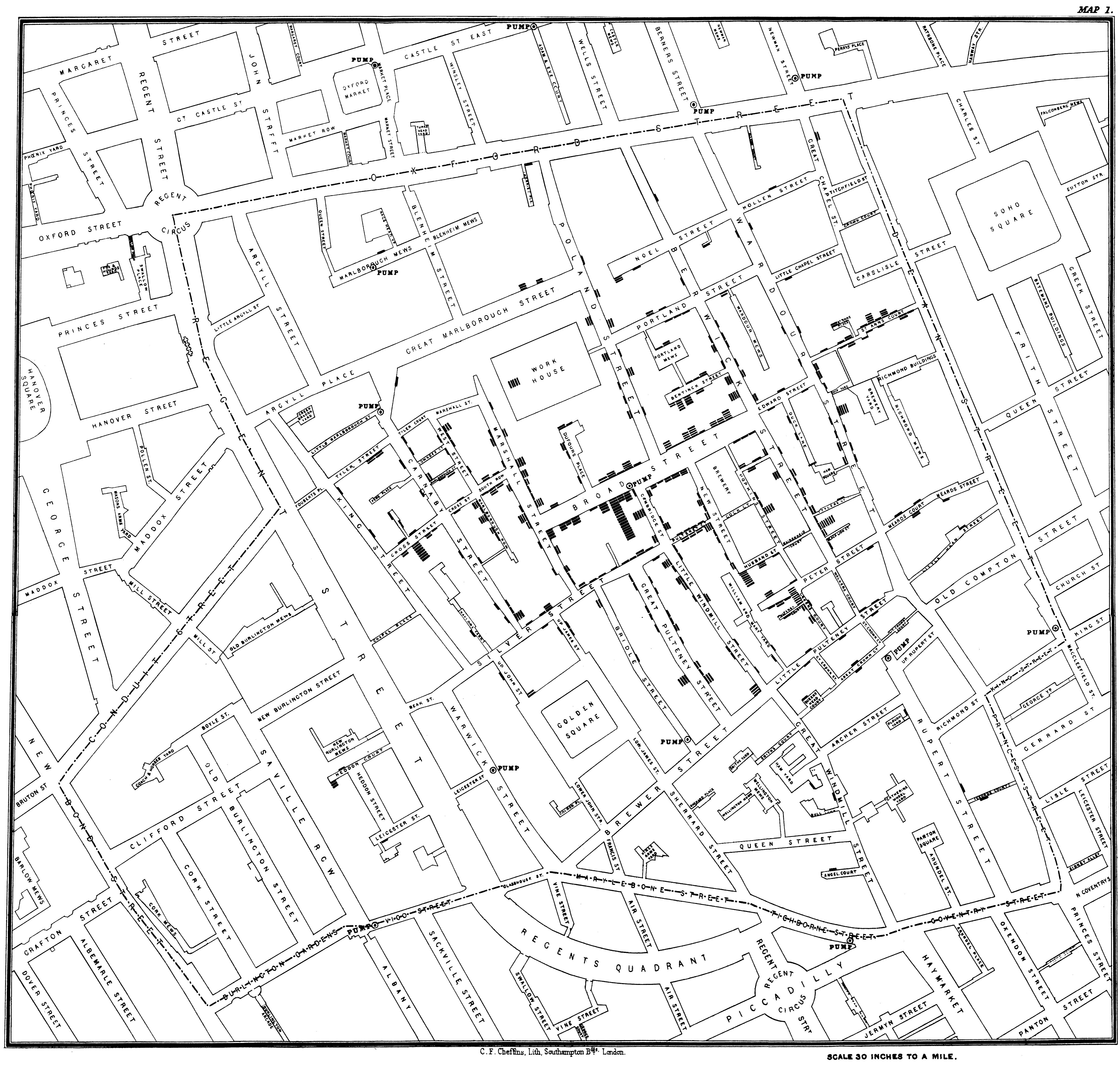

Graphic Design of the Day: a scatter plot

A map of London by John Snow, 1854, cleaned up by John Mackenzie

of the University of Deleware.

lecture 4

Mailbag

- I am having trouble using the star operator on lists, *L

- The size operator *L works only after L has been assigned a list

value.

L := []

- How do I check if a string is not in my list of strings?

- Well, first off, if one were doing this a lot maybe one should

use a set instead of a list. Unicon has a set type. But for

occasional use on lists of reasonable size,

s==!L tells

if s is in the list L. s ~== !L is not so good, it will

almost surely succeed unless every value in L is s. Instead use

not (s == !L)

Unicon: the next level

Let's peek at

CS210 lecture notes on Unicon to see if I missed any highlights

during the live demo.

Monitoring Buzzwords

Volume, dimensionality, intrusion, and access. Solve these four

unsolvable problems and you've got the makings of a decent

monitoring and visualization framework.

- volume

- if you think static analysis of source code has a lot of information

the programmer may have to understand and/or deal with, wait until

you see the amount of information dynamic analysis generates. Even

small, short-running programs can generate millions and millions

of events of interest. Monitoring and visualization tools have

to filter/discard, condense/simplify, and analyze their input,

turning low level data into higher-level information.

- dimensionality

- understanding program behavior involves many dimensions:

control flow, data structures, algorithms, memory access patterns,

input/output behavior... Visualizations can be selective, but often

want to depict more than just 2 or 3 dimensions' worth of data even

though they are using a 2D (or 3D) output device.

- intrusion

- The act of observing program execution behavior changes that behavior.

Monitors have to minimize/mitigate this or they will be visualizing

their own side effects more than the thing they purport to show. The

first form of intrusion is to skew the timing of the observed behavior.

Monitoring a program may also alter its memory layouts (e.g. on the

stack), which might make bugs disappear (or merely exaggerate them).

- access

- Simple monitors might graphically depict exactly the information contained

in the sequence of events that they deserve, but most monitors need to

ask additional information, by accessing potentially the entire state of

the program being executed.

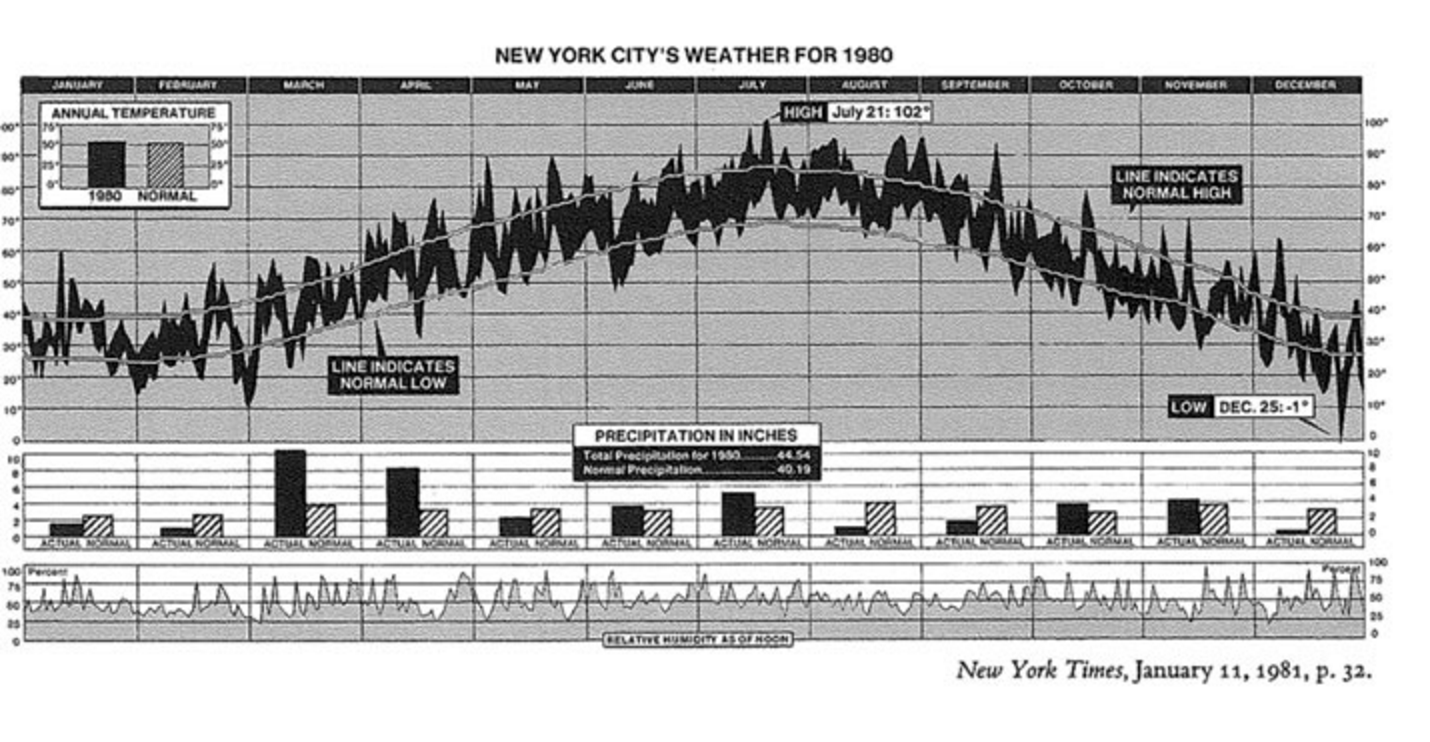

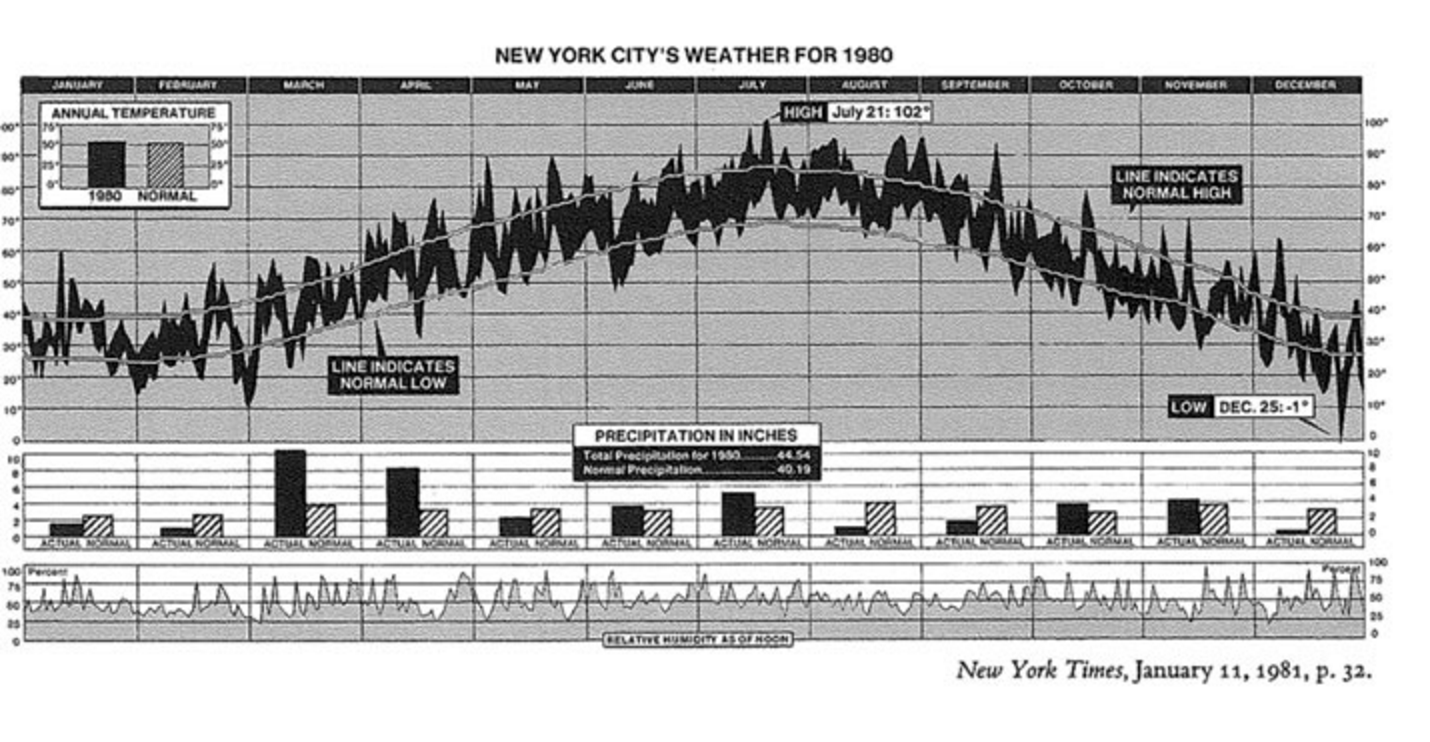

Graphic Design of the Day: Line Plots

Multiple dimensions of weather along a primary time axis.

From the New York Times, popularized by Tufte.

lecture 5

Announcements

- Regular office hours: after class MW 2:30-3:20. Also by appointment.

- UI closed - no class on Monday

Surprised by Failure?

- Don't be surprised when fallible expressions fail.

- Failure in Unicon isn't a rare event like an exception,

- Failure is part of every program's life.

- You should learn to know how to identify fallible expressions.

- Expect failure. Write code to accommodate it.

When to check for failure: everywhere that failure can occur, and

everywhere that failure will matter. Examples:

- comparisons are designed to fail, most folks don't miss these

- type conversions like integer() are also designed to fail

- open() and similar system functions that ask for an operating

system resource that might not be available -- check them!

- find() and similar built-ins, UNLESS you can prove data is valid

- subscripts, unless you can prove valid index ranges

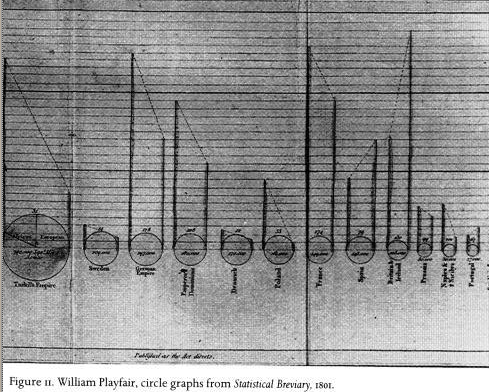

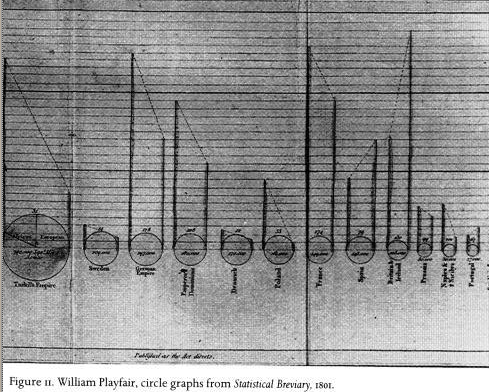

Graphic Design of the Day

William Playfair's chart depicting area, population, and tax revenues

of countries in europe is another excellent example of depicting multiple

dimensions of data.

The slope between the population and tax revenues points down for most

countries and sharply up for England (and less so, for Spain).

Introduction to Unicon Monitoring Facilities

- events

- billions and billions of tiny points in time, with a tiny data payload,

and the ability to easily inspect the entire program state. Event names

like E_Pcall or E_Lbang

- event keywords

-

&eventcode and &eventvalue

- built-atop co-expression data type

- threads that take turns.

AKA coroutine, goroutine, or co-operative or synchronous thread.

- the VM is instrumented for you

- asymmetric coroutines. VM C code sends events to monitors written in Unicon

lecture 6

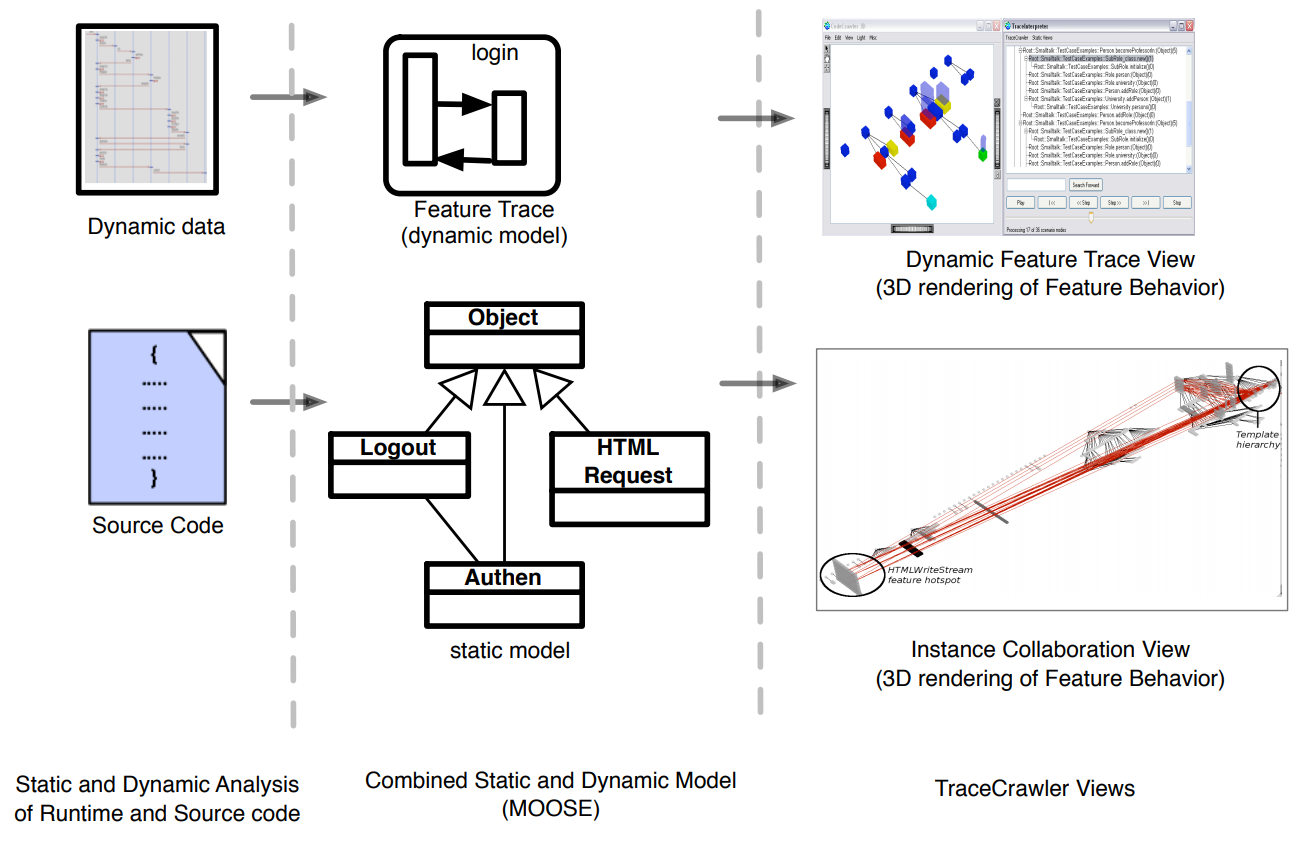

Reading for this week

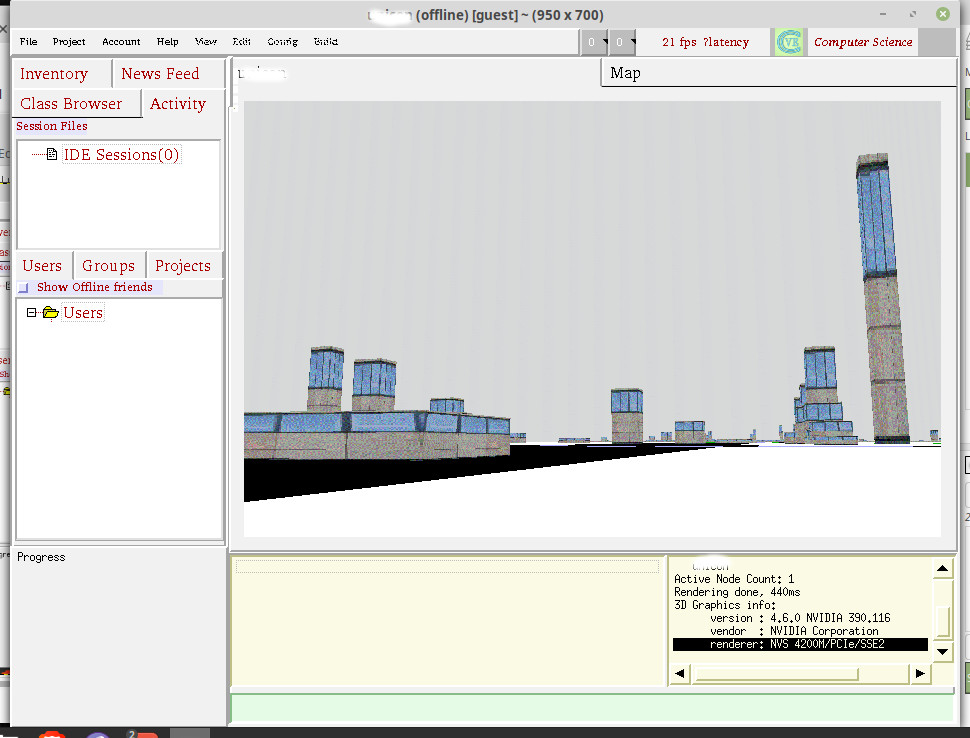

- What does the paper claim is the distinction between 3D and VR?

- Research issues:

- What are the most efficient methods to

visually represent software systems?

- What are the perceptual

limits of these visualization with respect to large software

systems?

- How do we represent static and dynamic information

about the software?

- What are the user's most important information

needs with respect to particular software engineering tasks?

- What features are most suited to supporting collaborative problem

solving in this domain?

- What software engineering tasks benefit

(most) from this collaborative environment?

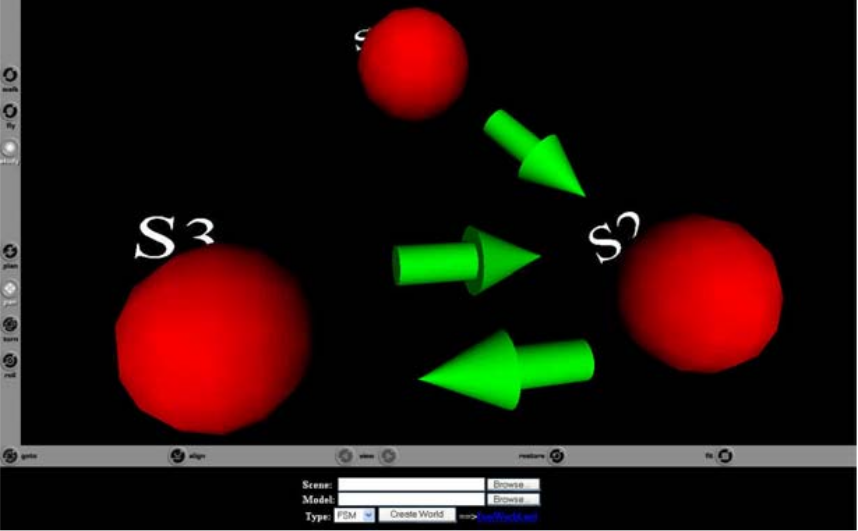

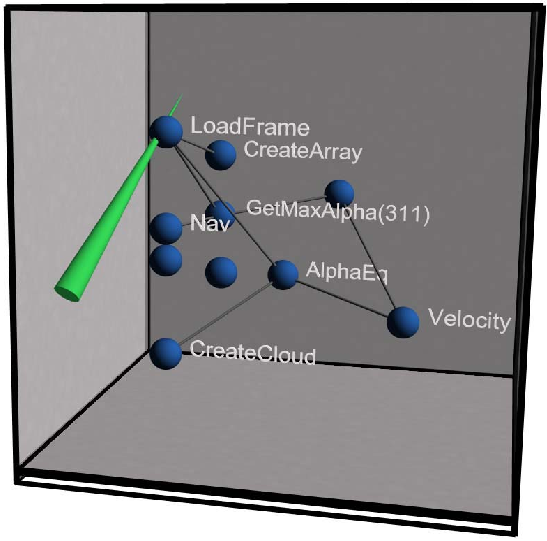

- VRML - late 90's, expected to be a big hit follow-on to HTML, did the

static part OK but didn't solve well the need for dynamics.

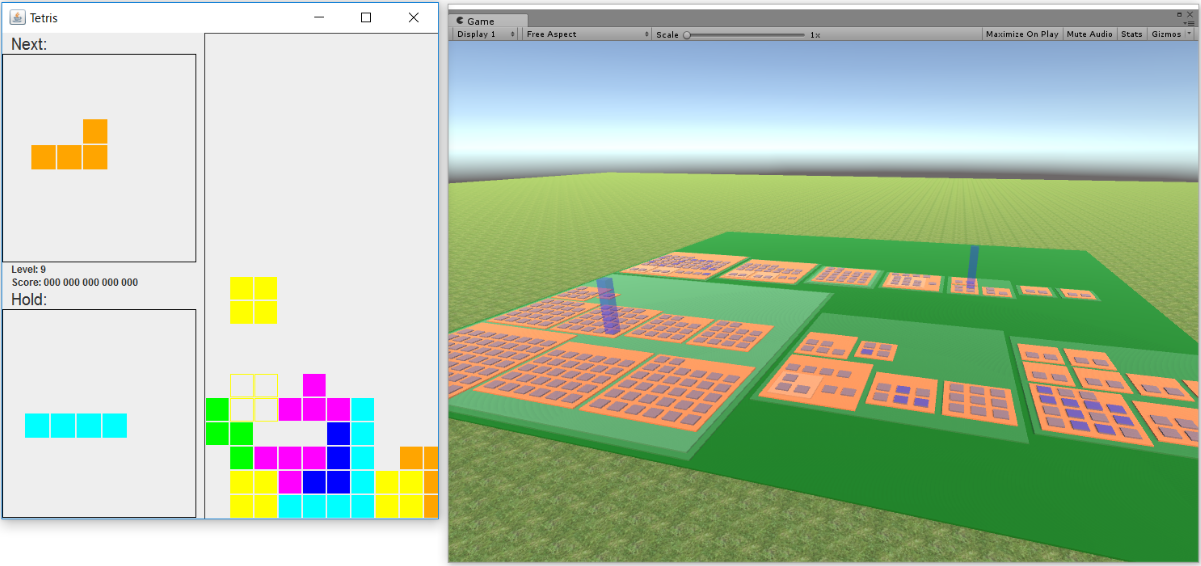

- "Imsovision" - class as a rectangular platform, methods as columns

(laid out how?)

- Did you notice

system(s) and open(s, "p") ?

- Built-in structure types as the associations between objects

Dr. J's intro to OO design was a user ...

- Classes

- Windows

Notes from Past Students' Unicon Program Visualizations

- "Longer" is not better.

Writing good Unicon is like haiku or other short poetry.

Practice toward mastery of the art.

- Avoid platform-dependent colors - stick to the portable color

names (see Unicon Graphics Book) or use RGB values.

- avoid platform-dependent fonts - stick to mono, sans, serif, typewriter

- do not assume that the display is larger than 1024x768*

- put your name in a header comment at the top of your homework

- check user input for validity, avoid crashes

This version is based on one by Mike Wilder.

lecture 7

How's the Homework Going? Any questions?

Things that might be useful:

Let's look at the code from this sorting visualization,

based on one by Mike Wilder. Start at the bottom, with main().

- You've seen

!x before, but how about x ! y

-

x ! y is the apply operator. It calls function x

with parameters given by the elements of list y.

lecture 8

Reading for this Week

- Bonyuet/Ma/Jaffrey, ICWS 2004

- Basic PC GPUs existed by this time; World of Warcraft came out in 2004.

- Key Criteria: usefulness, intuition, and scalability

- What were their definitions for these?

- Schneiderman's 7 tasks

- overview, zoom, filter, detail-on-demand, relate, history, extract

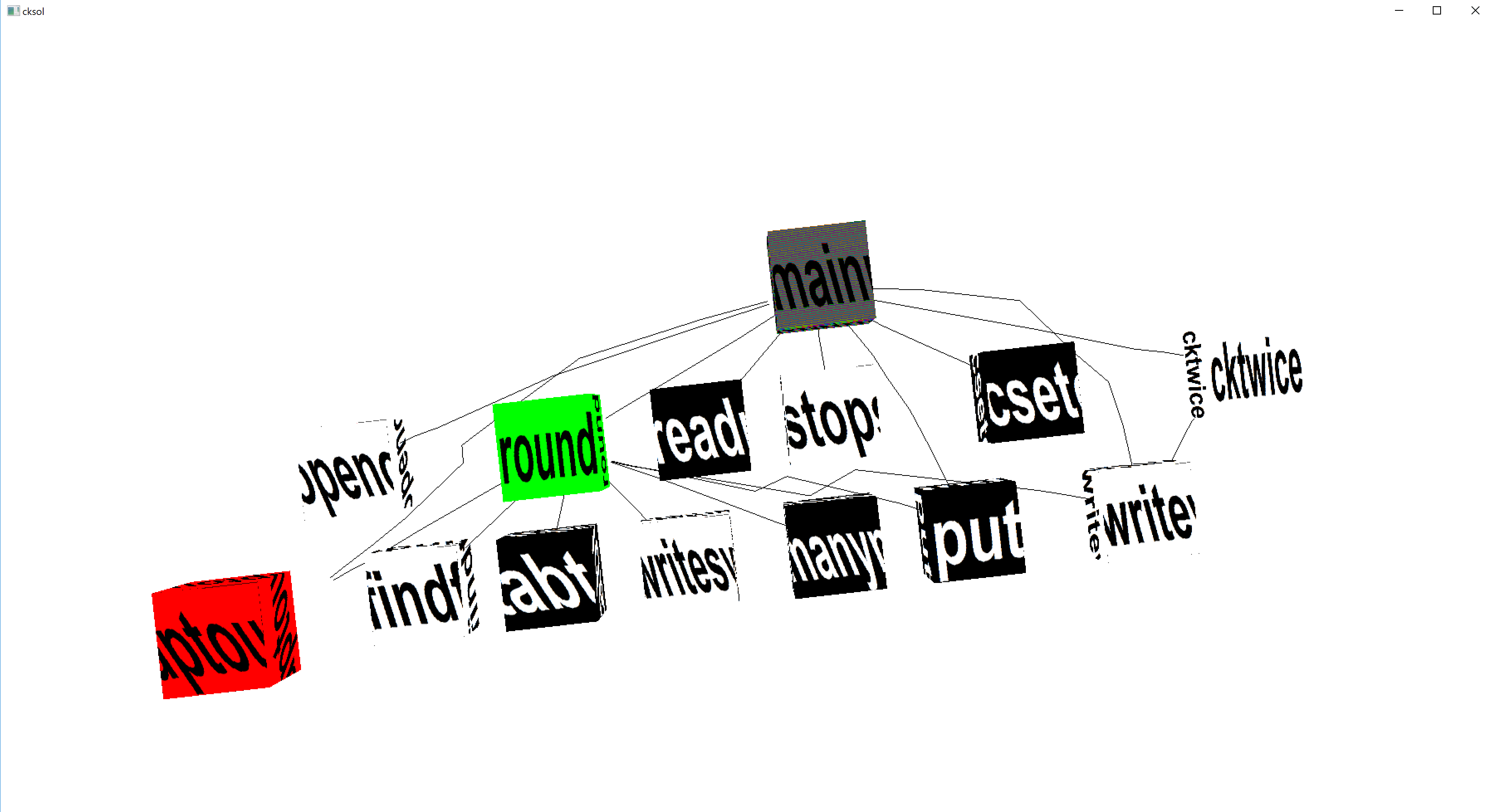

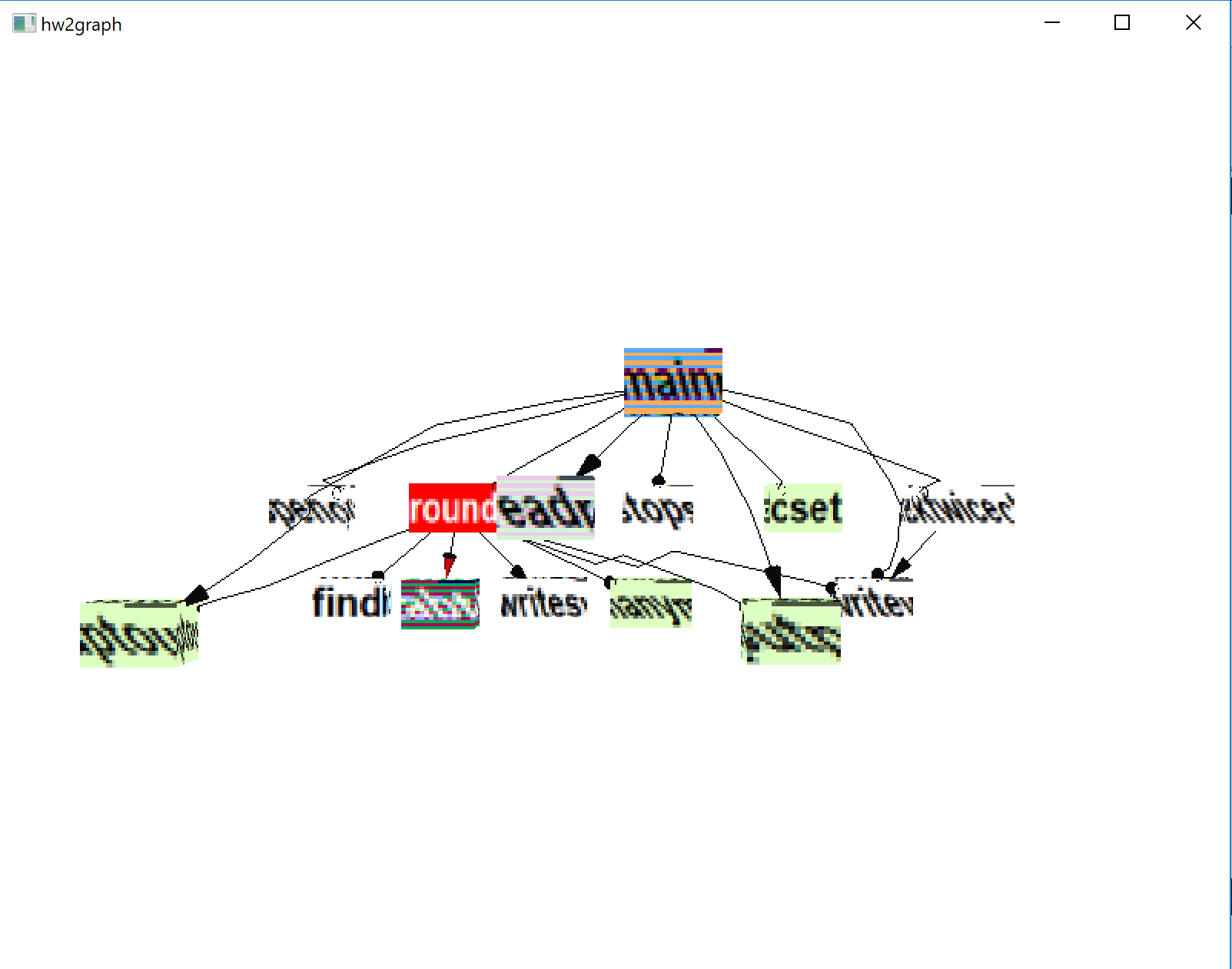

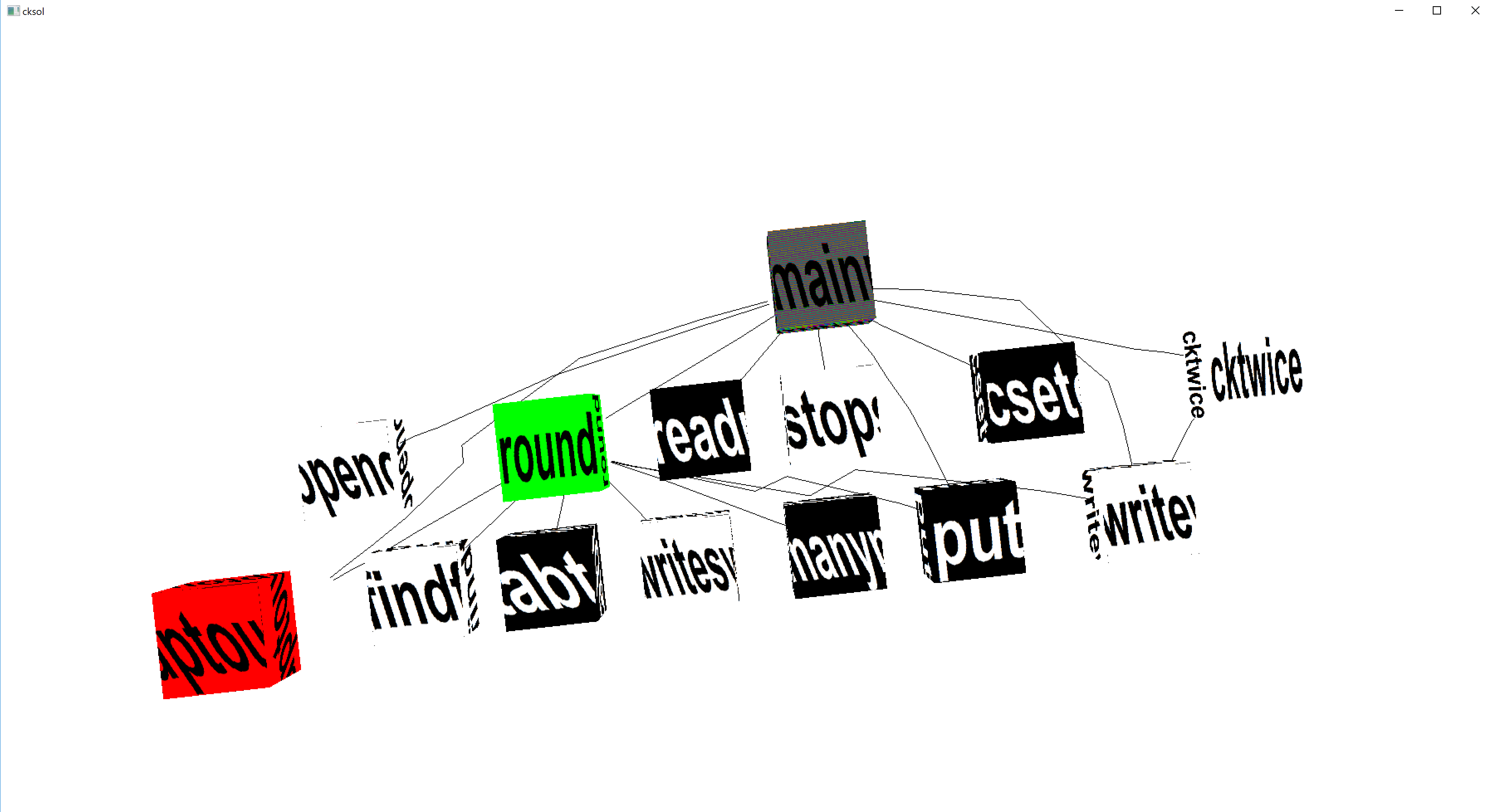

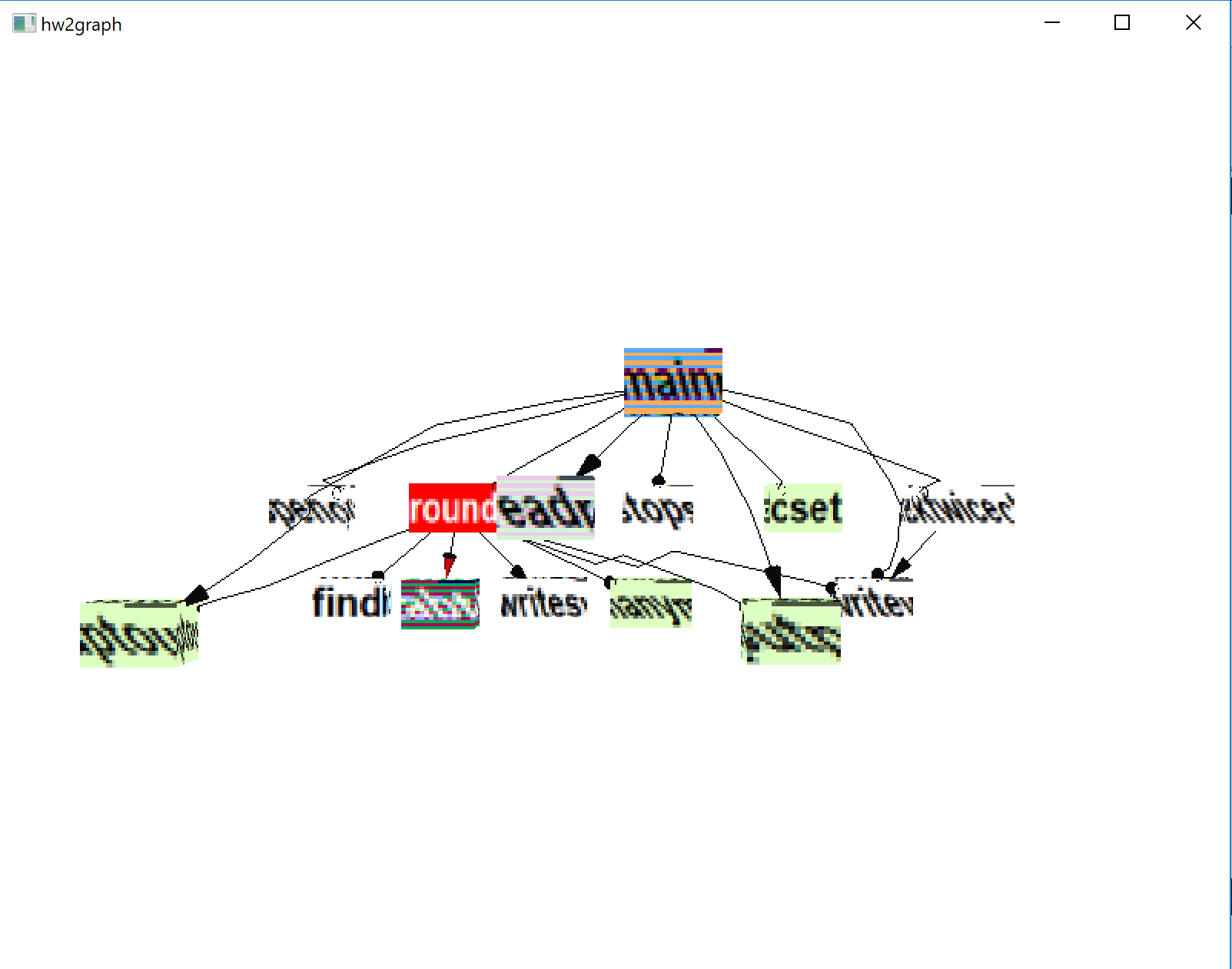

- CodeMapping achieves: labeled "atomic metaphor" 3D graphs.

- did they achieve their key criteria?

Introduction to Unicon Monitoring Facilities, Part 2

- built-in function

EvGet(c)

- Activates

&eventsource (Monitored) to get next event whose code is of type c

- event codes and masks

- an event code is a one-letter string. an event mask is a cset.

This is, literally, just grad-student-drj exploiting the handy

bit vector implementation that was in Icon.

-

link evinit

- library function EvInit(argv) loads program

-

$include "evdefs.icn"

- include file evdefs contains definitions of event codes

Writing your first Unicon monitor

Consider the beauty and virtue of m0.icn,

m1.icn and events.icn.

Now checkout sos.evt

lecture 9

Windows Unicon Trouble?

- Windows Unicon wasn't running for one student due to a long PATH

environment variable. On current Windows you can pretty much set

a path arbitrarily long, but for a new process to see it there is a

length limit (2047 characters, possibly less).

- Windows Unicon bug reports are extremely welcome; please send me

cut/paste console messages or screenshots...

Summary of Event Monitoring Libraries

From unicon/ipl/mprocs

- evinit

- EvInit(args) loads another Unicon program that is to be monitored

- evnames

- evnames(e) maps event codes to English, e.g. E_Pcall -> "procedure call"

- evsyms

- returns a table that maps codes to symbols t[E_Pcall] -> "E_Pcall"

- ...

- there are several more that we will introduce as needed

From unicon/ipl/mincl

- evdefs.icn

- $defines for all 100+ event codes. We should probably tour this.

- patdefs.icn

- $defines for the ~100 integer &eventvalue's of the E_PatMatch event

Subject to some tweakage, here it is.

Unicon 2D Graphics Functions

We briefly discussed the built-in 2D graphics function set.

Functions you might have a use for in this class:

- Bg - get/set the background color

- CopyArea - copies a rectangular area

- DrawArc - draw a partial or complete oval

- DrawCircle - draw a partial or complete circle

- DrawCurve - draw a smooth curve through points

- DrawImage - draw an image from a ASCII-art string representation

- DrawLine - draw one or more lines

- DrawSegment - draw connected lines

- DrawPoint - draw points

- DrawPolygon - draw a polygon

- DrawString - draw text

- DrawRectangle - draw a rectangle

- EraseArea - fill a rectangle with the background color

- Event - read the next keyboard or mouse event

- Fg - get/set the foreground color

- FillArc - draw an arc filled with the foreground color

- FillCircle - draw a circle filled with the foreground color

- FillRectangle - draw a rectangle filled with the foreground color

- FillPolygon - draw a polygon filled with the foreground color

- Font - set the font

- GotoXY - set the text cursor to pixel (x,y) based on current font

- Pattern

- Pending - return the list of pending user input on this window

- QueryPointer - obtain the current mouse location

- ReadImage - read an image from a file into a window

- TextWidth - return the # of pixels wide a string is in the current font

- Texture

- Texcoord

- WAttrib

- WDefault

- WFlush

- WSync

- WriteImage

3D Functions We Will Worry about Later

- DrawTorus

- DrawCylinder

- DrawDisk

- DrawCube

- DrawSphere

- PopMatrix

- PushMatrix

- Rotate

- Scale

- Translate

Functions you probably don't need in this class:

- Active - returns a window with pending input

- Color - set a mutable color (color-index frame buffers only)

- Couple - bind a canvas from w1 to a context from w2

- FreeColor - free a mutable color (color-index frame buffers only)

- GotoRC - set the text cursor to (row,column) based on current font

- NewColor - allocate a mutable color (color-index frame buffers only)

- PaletteChars

- PaletteColor

- PaletteKey

- Uncouple

lecture 10

Unicon Mailbag Questions

- How does open mode "p" work?

- You don't have to use it you can do anything that you find works for you.

But open(cmdline, "p") runs cmdline in a shell and opens a file that reads

its standard output into your program.

| Linux Example | Windows Example

|

|---|

f := open("ls -l | grep icn", "p")

while filename := read(f) do stuff(filename)

close(f)

|

f := open("cmd /C dir", "p")

while line := read(f) do

if find(".icn", line) then stuff(line)

close(f)

|

- how would I make global lists or tables that I can access in other procedures?

-

- Declare global variables.

- Assign them list or table values (maybe in main())

- They will then be visible everywhere.

Partial Highlights from HW#1 Solutions

outfile := open("output.json","w")

# OK, but check whether open() fails or not

s := f(s, "morestuff")

# functional style is fine and appropriate. no reference parameters.

L := []

every put(L, !fileIO)

# OK, but consider L := [: !fileIO :]

truth := 1

...

if truth = 1 then {

# fine, use boolean flags if you must. no boolean data type.

# More common to use &null as false and non-null as true.

every x := find("(", line) do { #finds every instance o

# outstanding; uses find() to iterate through line

if not member(&letters ++ &digits, line[x-1]) then {

# fine, but if you do this a lot of times, pull ++ out of the loop

hashIndex := &null

hashIndex := find("#", line)

if hashIndex ~=== &null then{

# fine, but consider

# if hashIndex := find("#", line) then { ...

system (["cflow", "--omit-arguments", name], f, f, f3)

# wow, kudos for using the full power of system()! Is this better than

# system("cflow --omit-arguments " || name, f, f, f3)

word := tab(upto("("))

# kudos for using string scanning!

# consider using tab(find("(")) or change to tab(upto('(')))

every i := 1 to *args do tableofprogs[args[i]] := preprocess(args[i])

# every arg := !args do tableofprogs[arg] := preprocess(arg)

p := <[_a-zA-Z][_a-zA-Z0-9]*[ \n\t]*"(">

p2 := p || .>y

s ?? p2 -> s2

# wow, regexps and patterns!

if s2[j] == (" "|"\n"|"\t"|"(") then {

# if any(' \n\t(', s2[j]) then { ...

system("cflow cflow.c > info.txt", "p")

# hmm, possible mixed metaphor

if(pos ~== 0 ) then

# if pos ~= 0 then

if(lenghtOfString(L[i]) = 1 )then {

# not just misspelled, also misleading

wchar := &letters ++ &digits ++'\'_'

lista ? while tab(upto(wchar)) do {

# this is good practice

n_pos := find("()", p_name)

f_pos := find(")", p_name)

if p_name[n_pos] == "(" then {write("nice")}

# better know for sure that these can't fail, or check

procedure getSpaceNumber(line)

local pos:=0

space := line[1]

while (space == ' ') do {

pos := pos + 1

space := line[pos]

}

return pos

end

# many(' ', line)

if not (tab(find("class"|"procedure"))) then {

# cool

&pos := &pos + 6

# move(6)

lineno=0

# lineno := 0

Visualization Principles (according to Dr. J)

- animation

- incremental algorithms are a primary means of achieving efficient

animation. complementary to the principle of minimizing ink (or # pixels)

used to

convey a given set of information, this is like minimizing the

motion of the plotter arm, or in our case, the # of memory writes.

- least astonishment

- use the golden rectangle, labels and legends

- metaphors

- a familiar metaphor saves the user a lot of time and improves

understanding. Metaphors can be taught, and become familiar over time,

but that is often laborious.

- interconnection

- connecting different pieces of data is key, follow Playfair's example

- interaction

- the big difference between a visualization and a paper chart or graph

is that the user can interact with the data. exploit this.

- dynamic scale

- visualizations compete for screen space and hardware varies widely.

it is extra work, but if you write everything so that it scales, your

visualization will be useful on more machines and in more ways.

- static backdrop

- one of the best ways to make dynamic data understandable is to present

it in terms of static data. An execution is an instance of the underlying

universal abstract thing that is the program.

Notes from Past Students' Unicon Code

-

main(av)

- av is always a list of strings; if no arguments, *av = 0

-

paramnames() is a generator

- use it with

every, or ask questions like "if type(x:=paramnames(...))=="list" then..."

- the apply operator

p ! L is pretty awesome

- what does

every maxval <:= !L do?

-

max() is a built-in function, so maxval := (max ! L)

- failure and success

-

if i := find() then ... is cooler than i := find(); if \i then ...

- check for

open() failure

- I asked nicely before, now I am telling you

- sticking

&fail at the end of a routine is a noop

- a routine fails for free if it falls off its end;

&fail

does not return a failure and is in fact seldom used.

Unlike lisp, the return value of a function is not its final expression's

evaluation.

Graphic Design of the Day

Fisheye Views.

If you want, you can read Furnas' paper on

Generalized Fisheye Views.

As we proceed into the "meat" of the course, we have a need for lots

of subject programs to study, lots of example monitors, and bigger

programs that presumably will have more complex behavior.

- Suspects

- This directory was compiled by Ralph Griswold as a collection of

interesting or weird programs whose behavior could be understood

by program visualization. The good part of the Suspects directory

is that the programs all run non-interactively, in some cases they

were modified to do so, and those that require input have sample

.dat files on which they run nicely. This lets monitors do their

thing unimpeded. We should probably add some representative

object-oriented programs to this collection this semester. I probably

can dig out my "gui recorder" and create recordings of GUI programs

so that we can monitor them conveniently in this context.

- tools

- This directory was compiled by Clinton Jeffery as a collection of

simple program visualization programs and library procedures. Many

of these codes are featured in the book, Program Monitoring and

Visualization.

- Big Programs

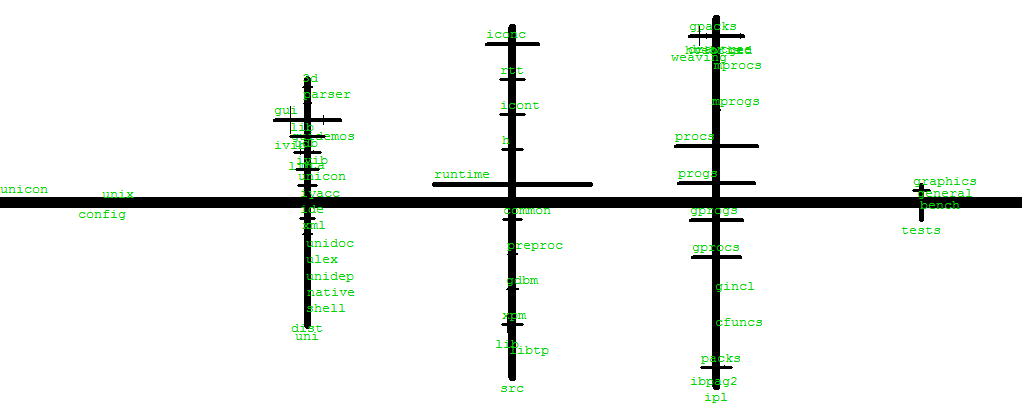

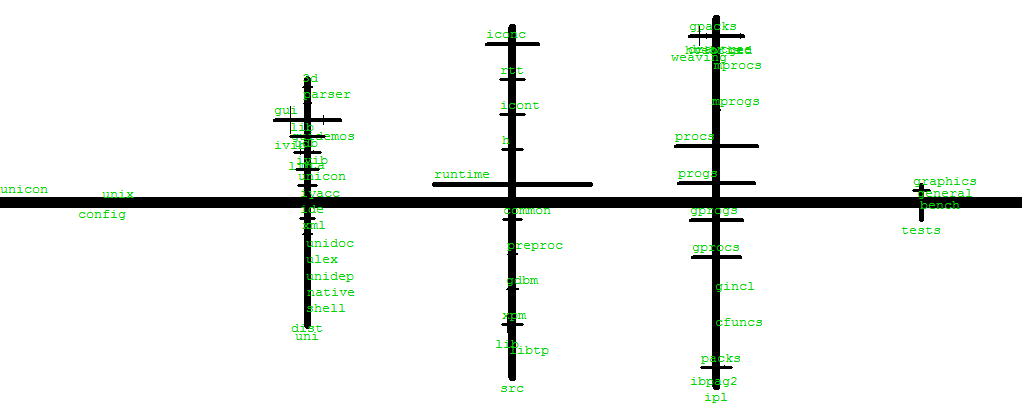

- The largest programs in the suspects directory are typeinfer (2.6k lines),

and yhcheng (1.9k lines). These were considered large in the Icon

language, where source codes are typically 1/3 to 1/10 the size of

C programs that do the same thing. The other largest public domain

Icon programs are in the ipl/*packs directories. Among these,

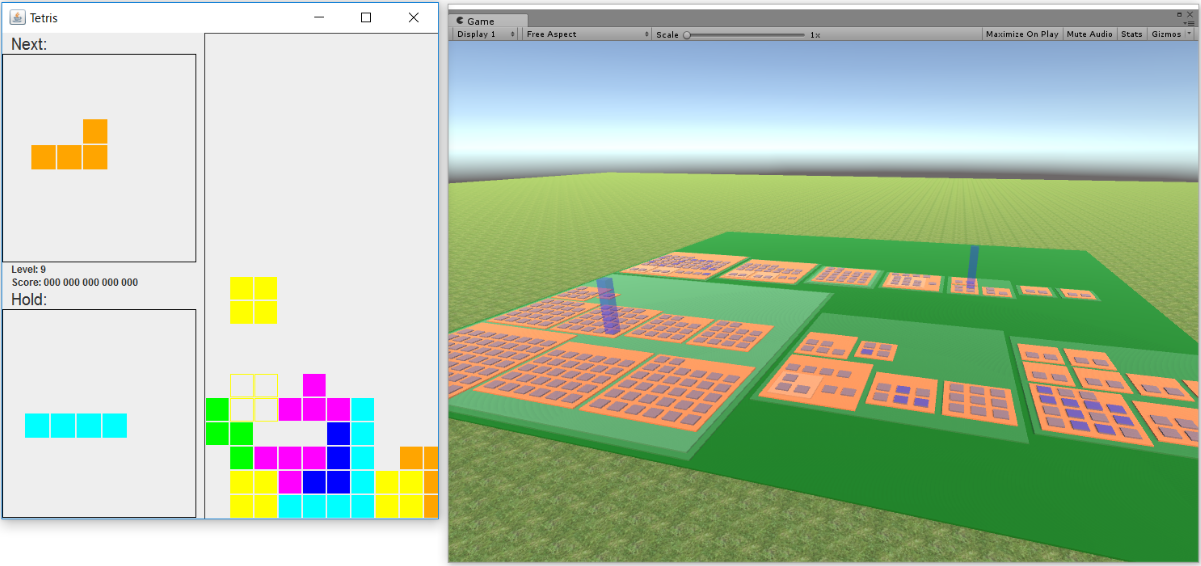

ibpag2 is 3.7k lines, itweak is 3.5k lines, skeem is 3.1k lines,

ged is 3.6k lines, htetris is 4.3k lines, vib is 4.4k lines, and weaving

is 11.3k lines (?). Monitoring these might or might not be easy, since

they may be interactive, and you might or might not know what to click

at them in order to get them to behave. The largest known Icon programs

(source not available) was Bill Wulf's testcase generator (rumored to

be on the order of a half-million lines, perhaps machine-generated.

The Unicon language supports larger programs than Icon was intended for.

The unicon translator itself is 10k lines of Unicon.

The uni/lib class library is 20K lines, and the uni/gui

GUI class library is 14.5K lines; large subsets of these libraries

may be added onto whatever the tool size is. The Unicon IDE is 17K

lines, the IVIB user interface builder is 16K lines, and so on.

Some of these you can acually monitor.

The largest Icon/Unicon programs for which I have source code

include the SSEUS database review/update system (35K lines), and a

Knowledge Representation language and system (50K lines) done by an

AT&T scientist. It might be possible to find these and monitor

them, but it would take work to set them up for monitoring.

lecture 11

Mailbag

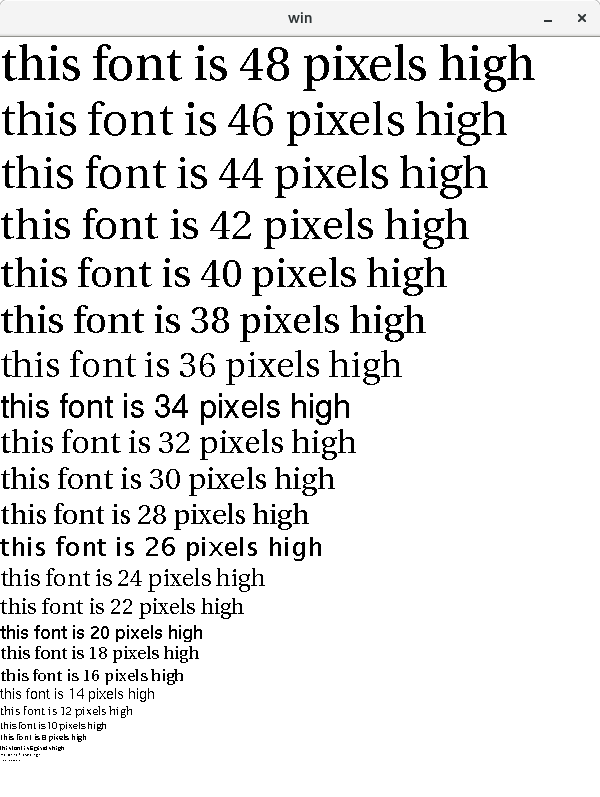

- How can I set the width and height of the string that I print with

DrawString() using the values height and width from the dot

output?

- Great question. Text labels are going to be important all through this

course. Visualizations often botch them: either not enough, or too many to

the point they are unreadable.

- Unicon fonts have height, ascent, and descent attributes that are

independent of what string you are trying to output. Units are pixels.

- Some fonts are fixed width, and some are spaced such that wider

characters use more pixels.

- The four portable font names are "sans", "serif", "mono", and "courier"

- You set the font with the

Font(s) function, or assign

it with WAttrib("font="||...).

- Typical font strings look like

Font("serif,14") or

WAttrib("font=sans,11,italics").

-

DrawString(x,y,s) draws s starting from (x,y).

- If you

link gpxop you get a procedure

CenterString(x,y,s) where s is centered at (x,y).

- There are several calculations that might be relevant:

determine whether a given font will fit a given

(width,height) size, calculate what is the largest font that will fit,

or print only as many characters of a string that will fit, if the font

is not to get smaller.

Reading

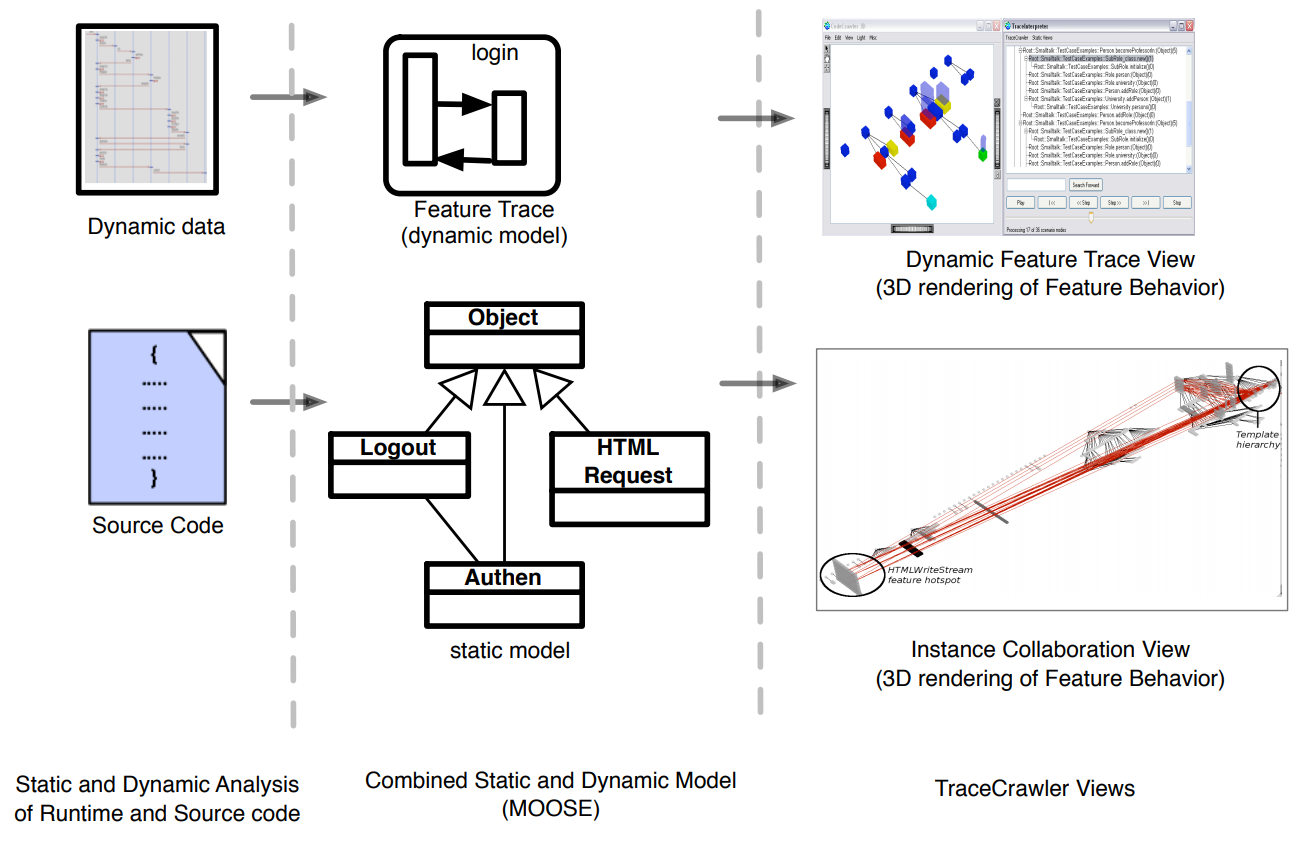

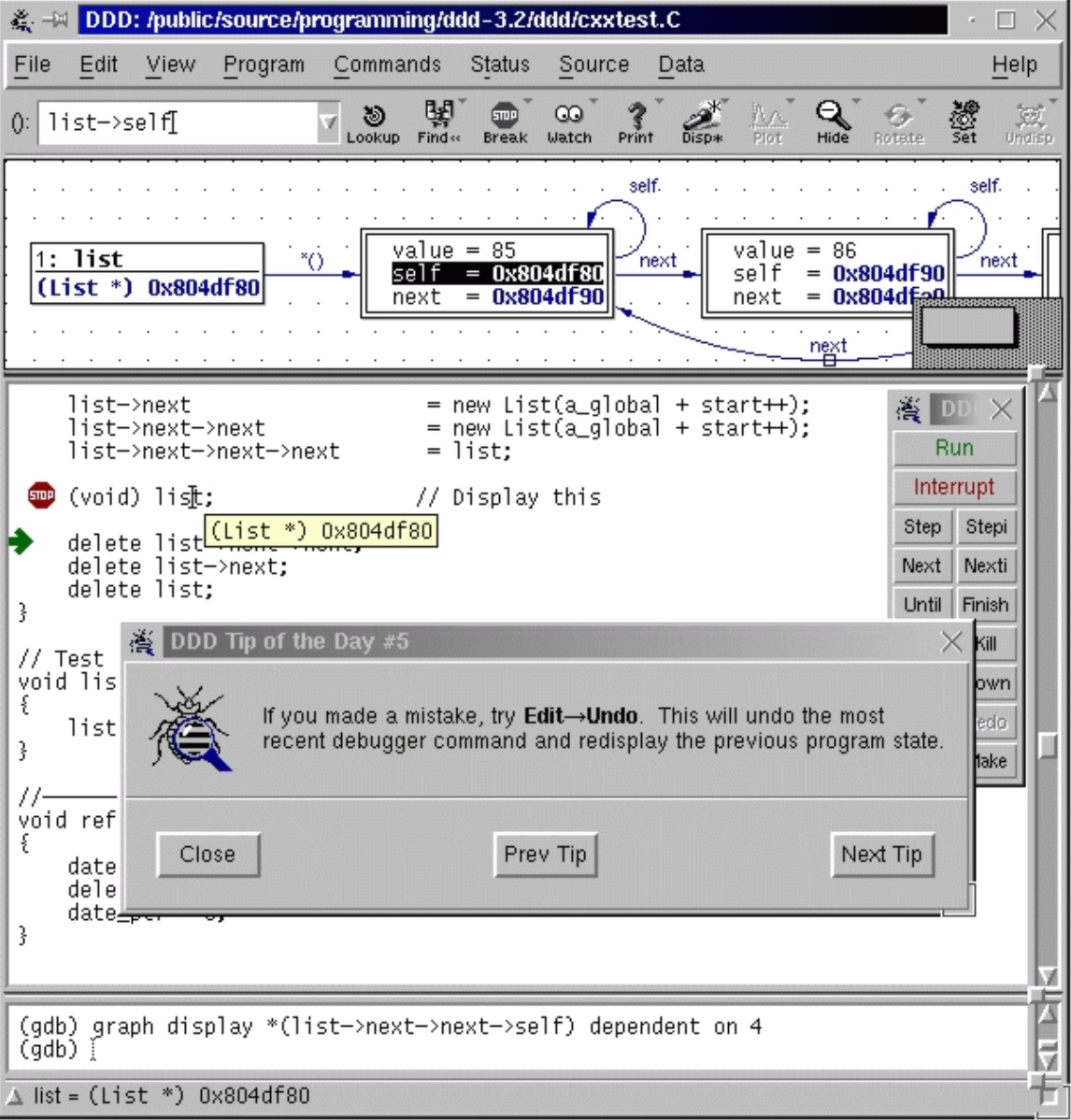

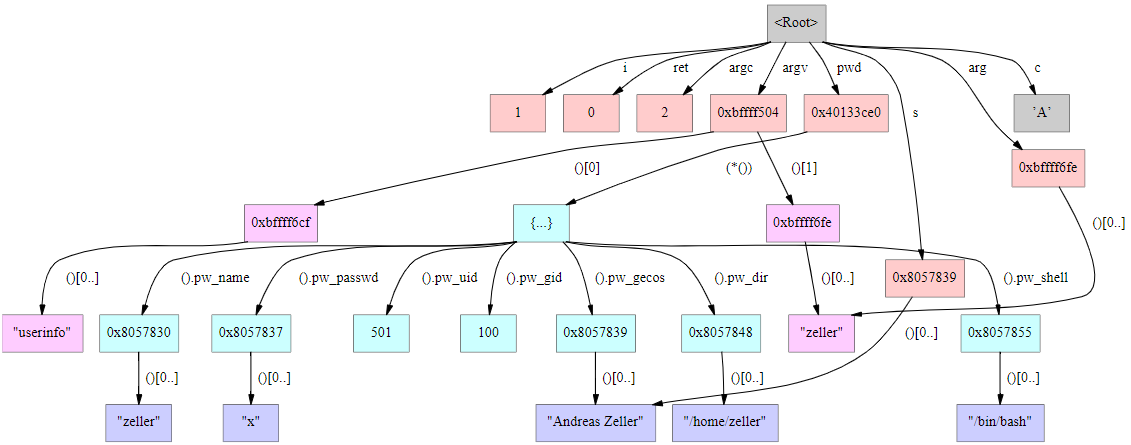

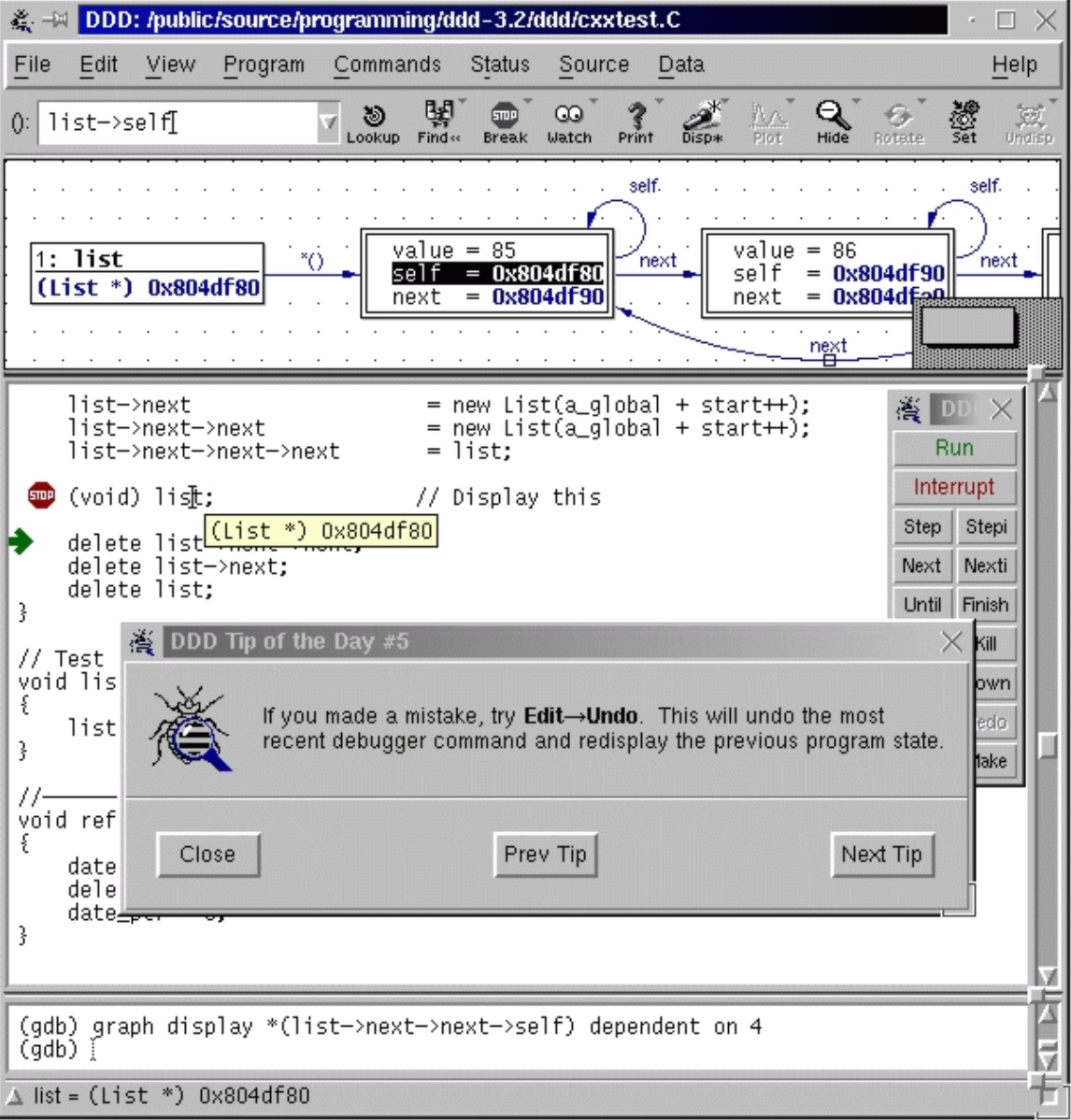

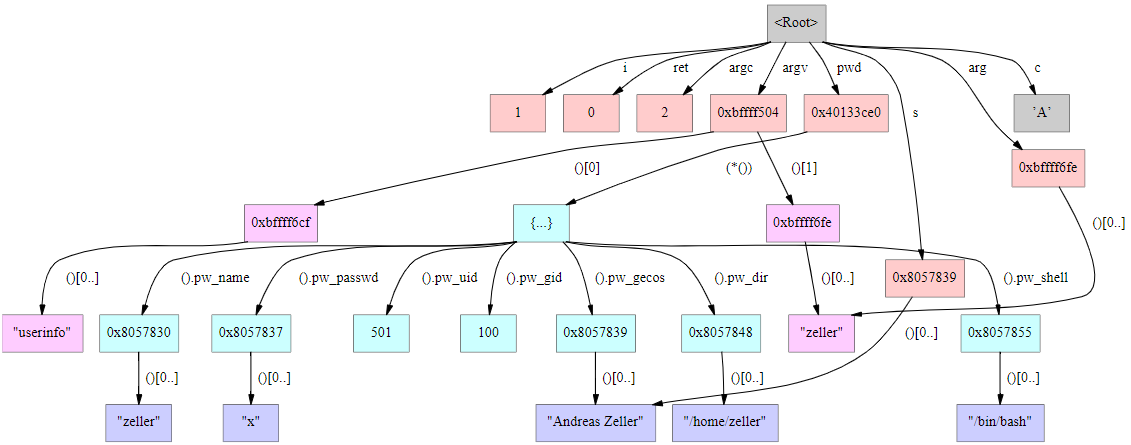

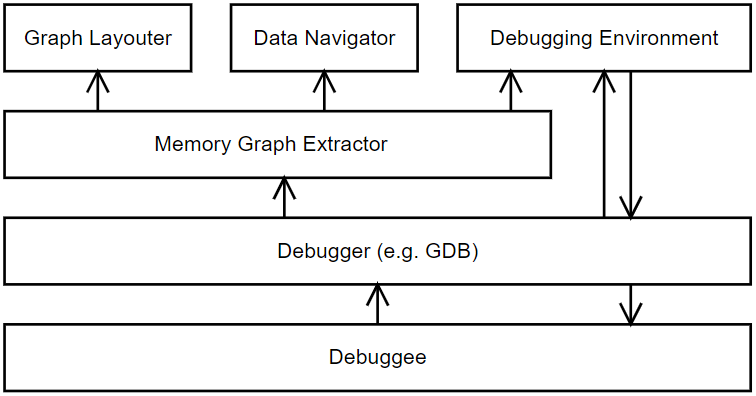

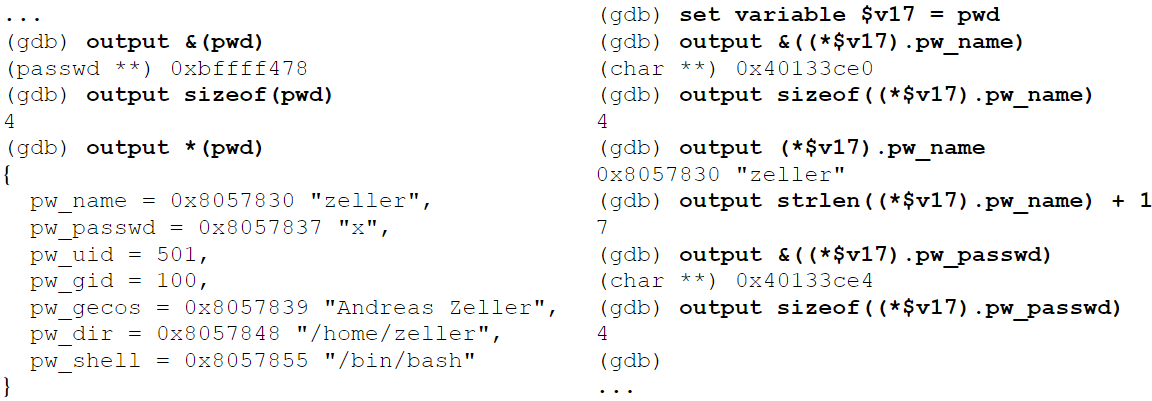

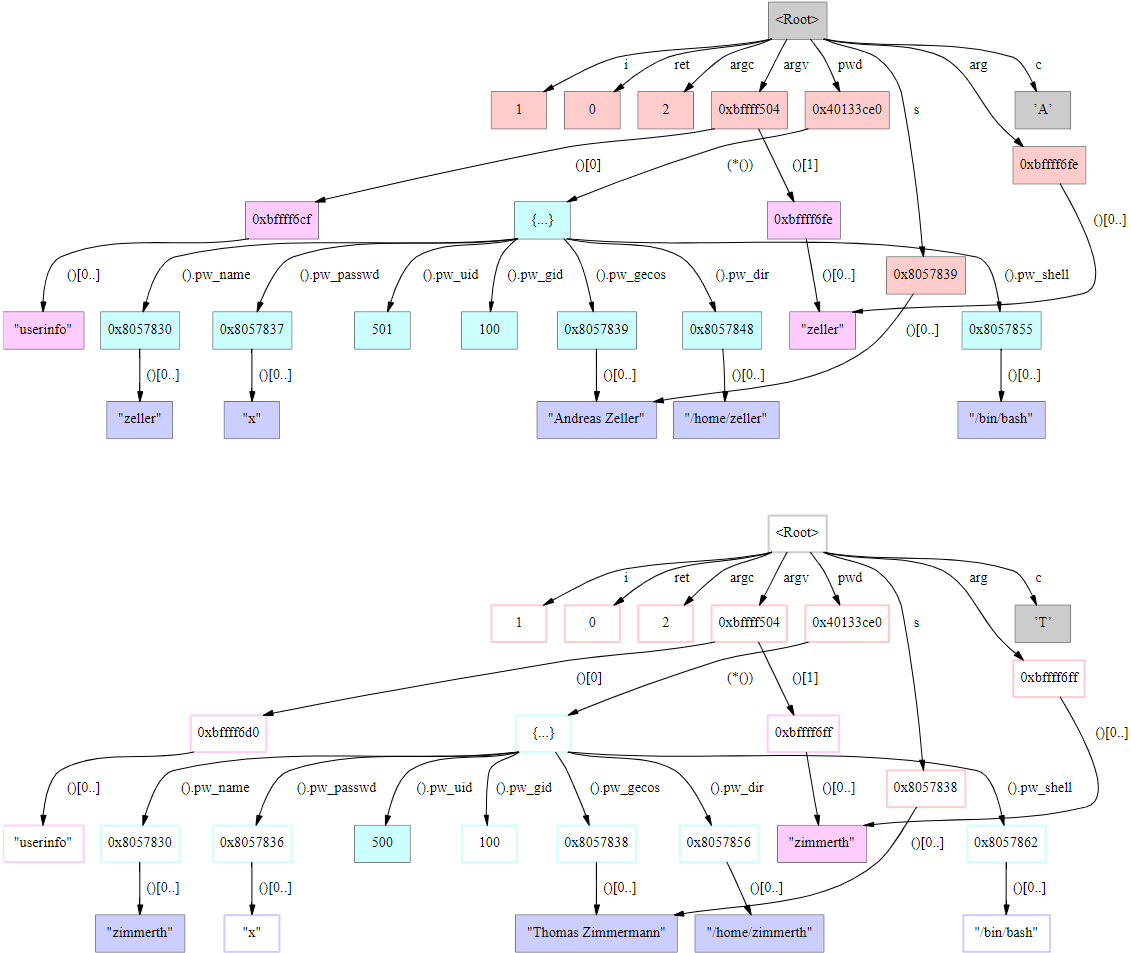

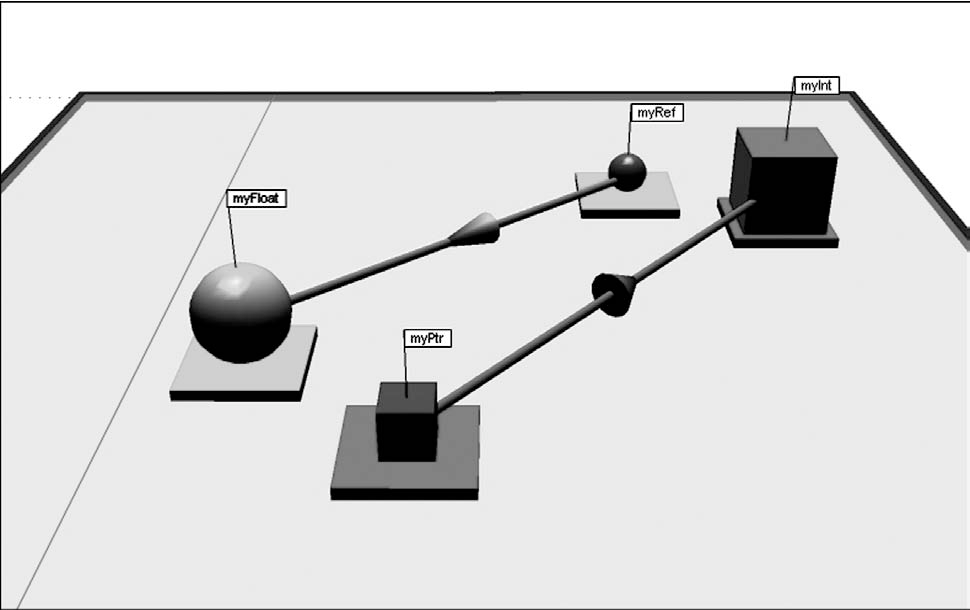

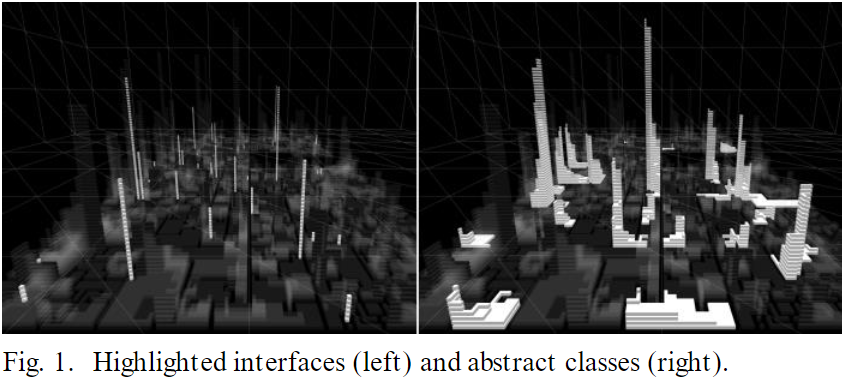

Highlights from OGRE [Milne/Rowe 04]

- topics related to memory are the most difficult

- pointers, dynamic memory allocation, copying, polymorphism...

(9/10 of the most difficult topics for novices identified in

a previous paper)

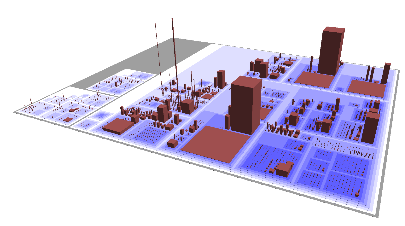

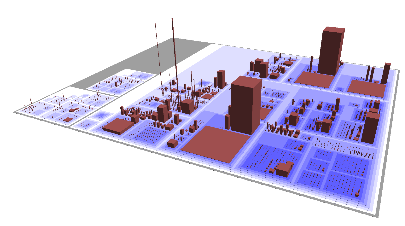

- [Knight and Munro 2000] "Software World" sounds interesting.

- Not assigned as homework/reading. It proposes a city metaphor in

which:

- each class is a "district" and each function is a building.

- The height of the building gives the source code size in lines.

- Building exterior color shows visibility (light=public, dark=private).

- Outside doors indicate parameters number and type.

- Object-oriented systems can be harder to understand than traditional

imperative code.

- So maybe it would be more important for us to figure out how to visualize

them.

- A conceptual view is needed more than a literal view of memory

- At least for novices, sizing each object to its # of bytes is not the

main point.

- Understanding scopes is important. Each one gets a plane.

- Local scopes are mostly extremely numerous and short-lived.

We need a metaphor in which these "planes" or sets of variables/objects

come in together in a rush, and leave together with a wimper. A lot.

We are looking for a metaphor for the stack. Of course, we could depict

them as a stack. Pancakes? Waffles?

Note OGRE's target: novice C++ programmers who need to develop a very

concrete mental model of how pointers work.

More Unicon highlights from HW#1 code?

if first:=find("at ", line) & lineNumb==1 then{

move(first+2)

# what's the difference between tab() and move() again?

# extremely common: tab(find(...)), tab(upto(...))

while move(1) == " " do {

count:=count+1

}

# count +:= 1 ok, but how about count +:= *tab(many(' '))

Graphic Design of the Day

CASSE POSTALI DI RISPARMIO ITALIANE by

Antonio Gabaglio, via the revered Tufte, and cited in

a nice discussion of cyclic data, apparently by Benj Lipchak.

Unicon feature of the day: Packages

Packages were added to Unicon more or less against my will,

but they are obviously of growing importance in larger scale

development. Packages are about protecting a name space from

collisions. Without them, global variables in all modules

are shared, and accidentally, these variables may conflict

with globals (and undeclared, thought-to-be locals!) in other

modules. The more libraries you use, the more inevitable these

conflicts. Proof that packages are needed is evident in the

Icon Program Library, where, after fundamental built-in functions

like "type" were accidentally assigned one too many times by

client code, Ralph Griswold got in the habit of protecting "type"

or similar built-in functions the hard way, inside each library

procedure that uses them:

static type

initial type := proc("type", 0) # protect attractive name

This gets old in a hurry, and it actually bloats code a little bit.

So anyhow, Robert Parlett implemented packages, and I accepted them,

and now they are here to stay, and they aren't bad. You do have to

know the "package" and "import" keywords, and the ::foo syntax, and

that is about it.

lecture 12

Mailbag

- I am stuck trying to parse dot output. For example, if I have a string

s == " a -> b [pos=\"e,63,108.41 63,143.83 63,136.13 63,126.97 63,118.42\"];"

how do I parse it?

- Well, obviously we are still learning Unicon and I will take whatever

bloody harvest of bytes you manage to deliver me. But if I had to do this

homework, I might start with something like:

s ? {

tab(upto(&letters)) # discard up to node name

srcnodename := tab(many(&letters))

tab(many(' \t')) # discard whitespace

if ="->" then { # we have an edge

tab(many(' \t')) # discard whitespace

dstnodename := tab(many(&letters))

tab(many(' \t')) # discard whitespace

if ="[pos=\"e," then {

L := []

while num := tab(upto(', \"')) do {

put(L, numeric(num))

if ="\"" then break

else tab(many(', '))

}

}

else write(&errout, "malformed edge")

}

}

- I am stuck trying to use

DrawCurve(). From

reading the book, I understand that the arguments need to be x,y pairs. My

issue is when I try to pass DrawCurve() a string or a list as

an argument containing all of the x,y pairs. For example a string or list

containing [127.7,180.41, 127.7,215.83, 127.7,208.13, 127.7,198.97,

127.7,190.42]. I am assuming the string or list gets treated as just one

argument when I do this, is there something else I can do?

DrawCurve() and the other Draw*() functions do

not take their parameters in a list or a string. If you have all your

arguments in a list L, you can turn them into parameters using

the apply operator, as in DrawCurve ! L

cflow on Windows

If you dare, check out

https://github.com/noahp/cflow-mingw.

It is either a nice guy who built cflow on Windows and shared it with the

world, or a nefarious ransomware hacker luring victims with offers of

cflow binaries. If you don't like trusting his .exe's you can certainly

examine the source code and try to follow this github project's build

instructions. How I found it: googled cflow.exe. Random github is not

a highly reputed official distribution, but at least with source

code it is not obviously one of those codehosts of ill repute malware

sites, like a fake device driver repository.

dot on Windows

There have been reports of problems running dot on Windows. graphviz.org

provides windows executables and dot.exe seems to work OK. Maybe it

conspicuously chooses not to add itself to your PATH; adding the directory

where it was installed to the Path got it working for one student. In

another student's case, instead of open("dot ...", "p")

we ended up using open("cmd /C dot ...", "p"). That smells

also like a Path issue, but I am not sure.

Monitoring Location Events

-

E_Line events show line # changes

-

E_Loc events contain line, column, and a syntax code.

Somewhat more frequent than E_Line.

- to get line # out of an E_Loc:

iand(&eventvalue, 65535)

- to get column # out of an E_Loc:

ishift(&eventvalue, -16)

(actually, maybe a bit trickier)

Vizualization Idea:

- map line #'s to y coordinate, one pixel per text row.

- map column #'s to x coordinate, one pixel per text column

- aggregate ALL events at a coordinate to a color. that way,

nothing has to move, it just gets "hotter" over time.

The program miniloc.icn is a "miniature location profiler". It is our

first example from the tools/ directory mentioned in

an earlier lecture. It is 66 lines of code.

What is "mini" about miniloc is that each source code line and column is

one pixel row and column. This is a scaling problem for large programs

or small monitors.

Miniloc could be rewritten to scale its graphics. The frequency of

location events at various locations is recorded using a log scale

through a range of colors from boring to red-hot. Humans

don't really perceive red as a larger # than green, but the metaphor

of a temperature map is widely recognizable anyhow.

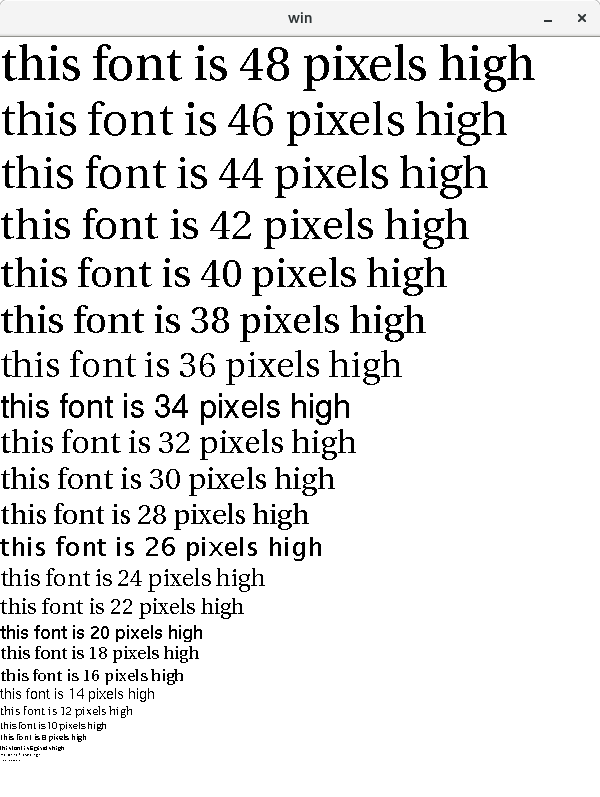

lecture 13

Reflections on Miniloc

My first thought after briefly running miniloc last time was:

- I need bigger dots (for legibility on TV's).

After sleeping on it, additional ideas came calling:

- How hard is it to adjust the scale interactively?

- How hard is it to tell how many lines/columns this viz.

needs to be able to scale to show the whole file?

- I wonder how big the dots have to be before I could just be printing text?

- I wonder how to scale miniloc to programs with (many) files?

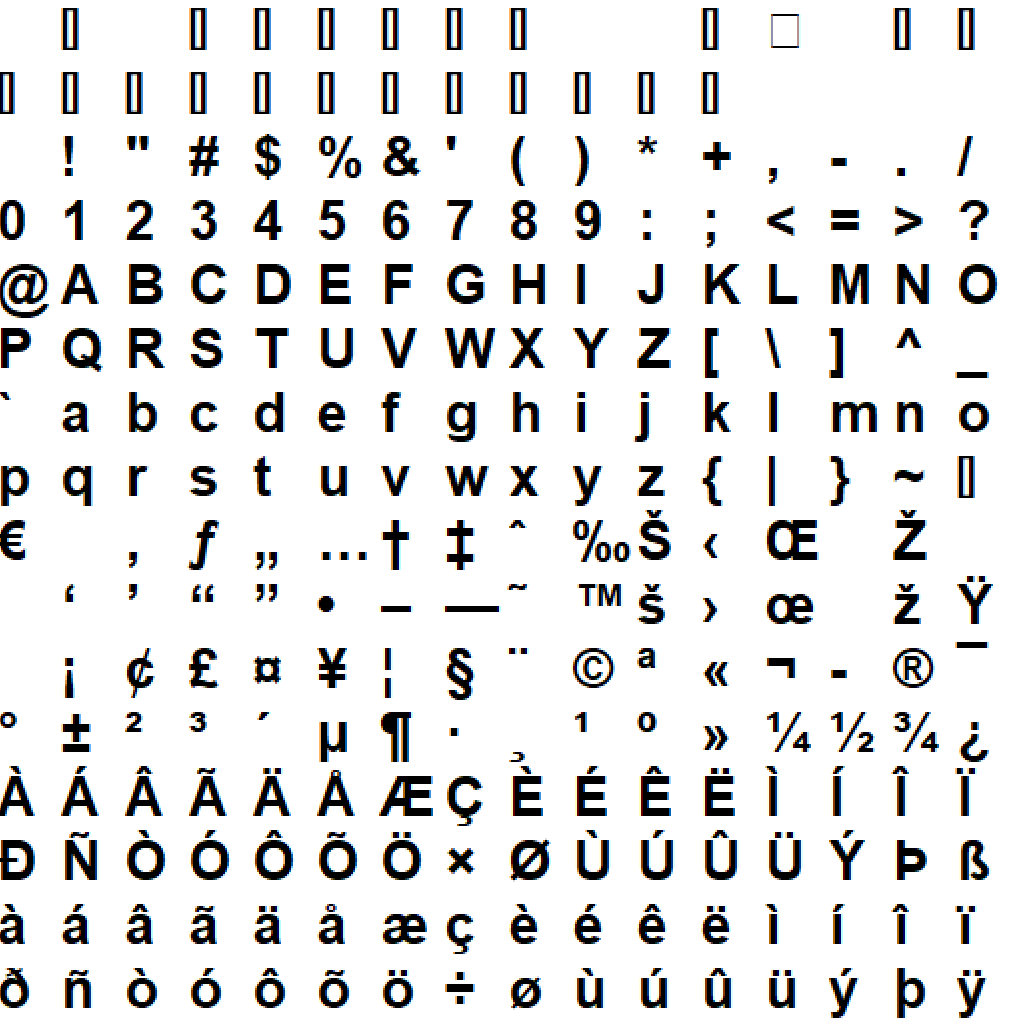

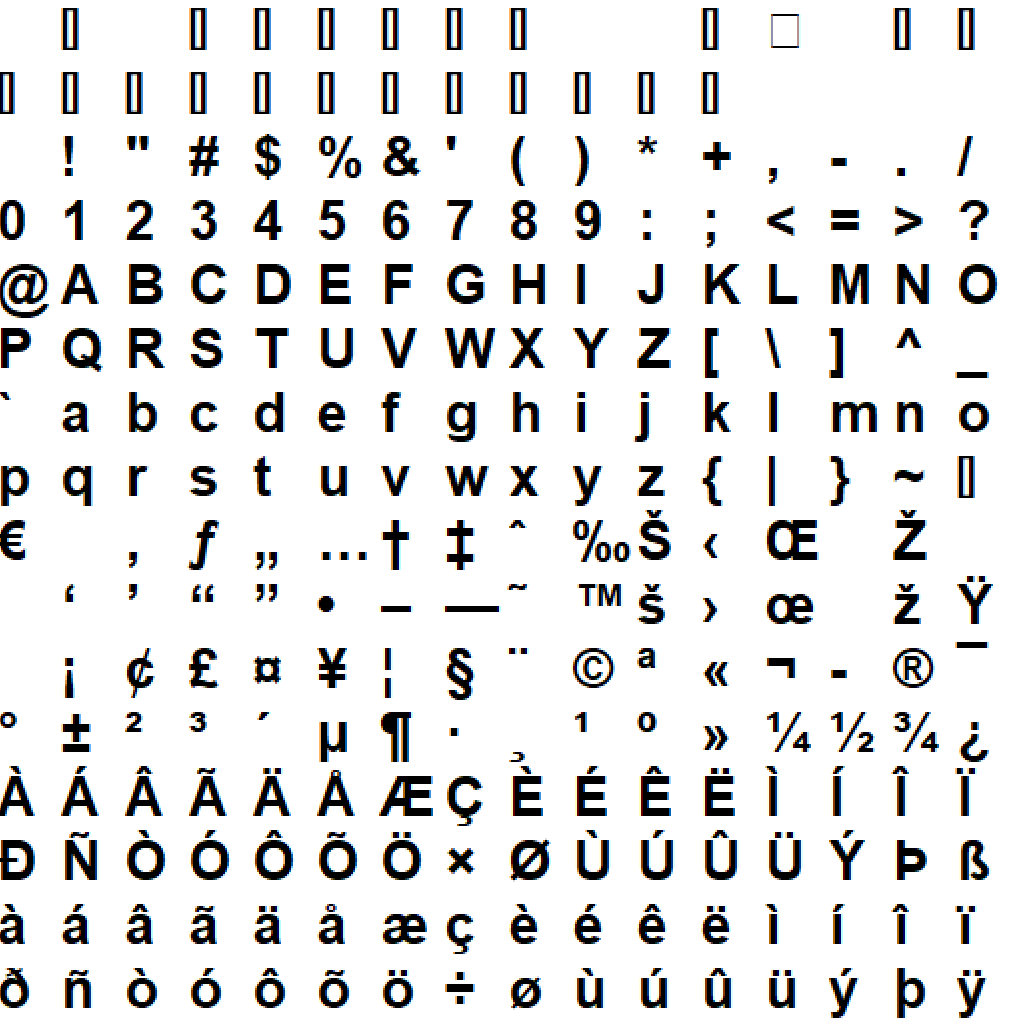

For the small-font legibility question, we might take a look at this

font demo.

- What is the smallest row you can read?

- Below that point, illegible font shapes drawn might still be useful

for miniloc's purpose, which is to be a "mini-map" of the code.

- my problem last time wasn't just small dots on a TV screen

- the copy of miniloc.icn I showed you hadn't been

updated when the

E_Loc event was enhanced for

Ziad Al-Sharif's Ph.D.

- I have too many copies of the execution monitoring tools, on

too many machines

- This is a great argument for placing them under revision control

Bigger Questions

- How much more static information will we need, e.g. beyond the

function call graph? What types?

- Does that "static" information include source code?

- How much could we get if wanted to not depend on source code?

Vizualization Idea:

- map line #'s to y coordinate, one pixel per text row.

- map column #'s to historical sequence of line numbers,

show last (K) events, where K=width of window in pixels

- wrap around when you get to K+1'th column (why?)

Hani's Clever Case Tag

Case expressions in Icon use === semantics, looking for an exact match with

no type conversions. Case branches are evaluated sequentially as if one

were writing

if x === firstbranchexpr then firstcodebody

else if x === secondbranchexpr then firstcodebody

else if x === thirdbranchexpr then firstcodebody

...

If all the branch labels are constants, this is colossally inefficient

compared with a C switch statement. But, it is fully general and you

can use arbitrary expressions, including generators, for which the entire

result sequence will be generated in trying to find a match.

You can add a predicate filter on the front, or have your values supplied from

subroutines, or whatever:

case x of {

p() & q() & foo: { ... }

a | b | 1 to 10 | f(): { ... }

}

This generator capability can be used with cset event masks, as in the

following; it would also work with sets, table keys, or any other generator

you wanted to write.

case x of {

...

!ProcMask: {

}

...

}

This makes for short elegant code, but it is inefficient. Generating

the individual elements out of a cset costs a type conversion (cset to

string) which isn't cheap, and all generators pay for extra bookkeeping

on the stack, for that suspending resuming capability, which is slow at

times. You are paying for convenience and generality, and a good

optimizing compiler might make some of that go away, but the VM sure

does not. In a couple minutes we will see another measure of how much

you pay. But in the meantime...

Hani Bani Salameh showed me some code once that looked like:

case x of {

...

member(a_set, x): {

}

...

}

member(a_set, x) tests whether x is a member and returns

x if it is, so it is just a filter, and by the way it avoids a linear

search via a generator so it is fast. Its got a seemingly redundant

comparison of x===x after the member() test succeeds, but that is C

code and probably very fast compared with a case with a lot of alternation

| or generate ! operators in it.

lecture 14

Reading

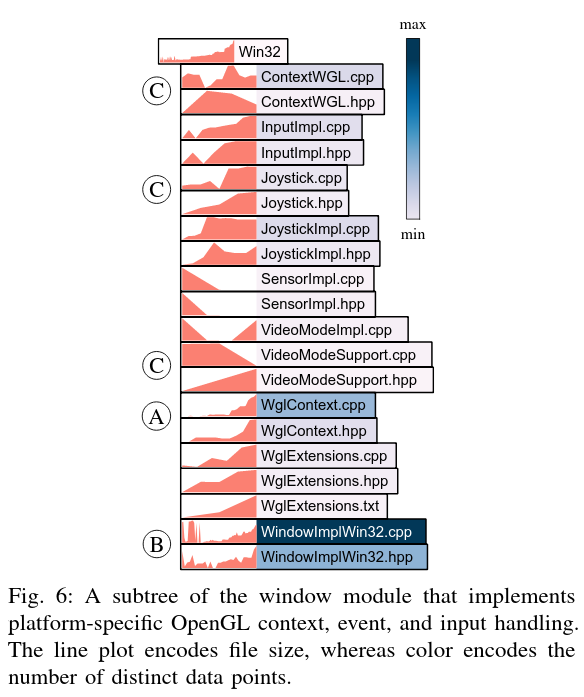

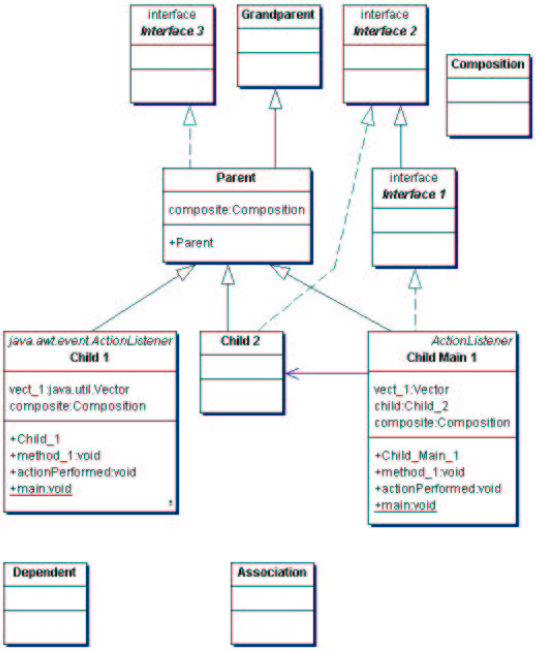

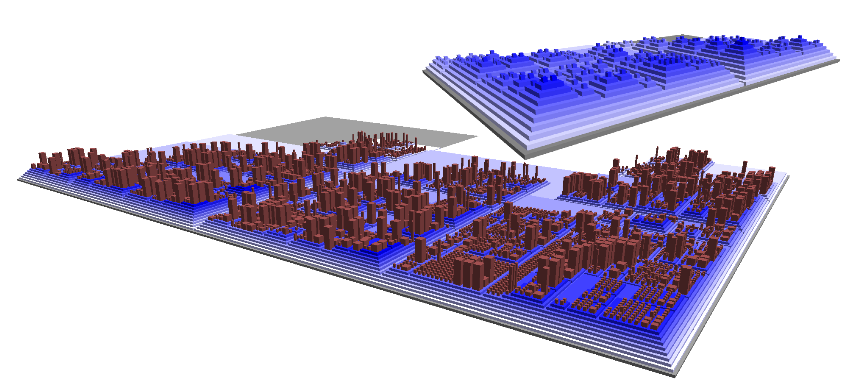

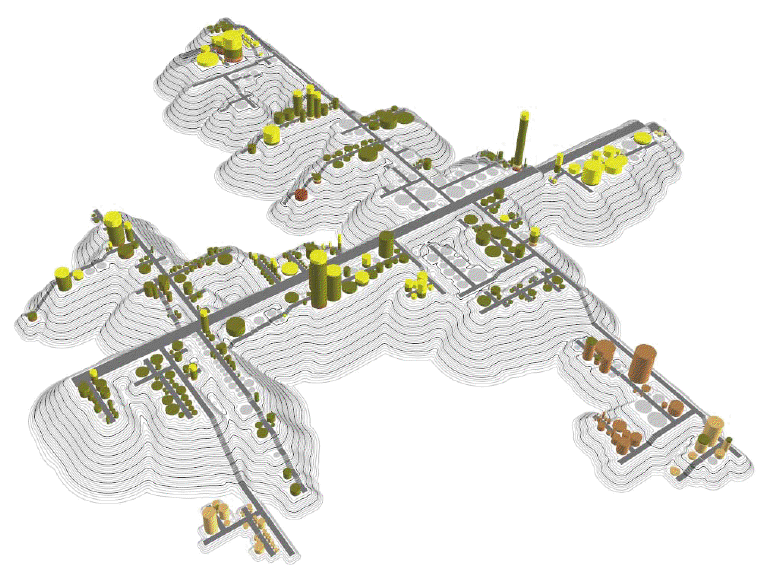

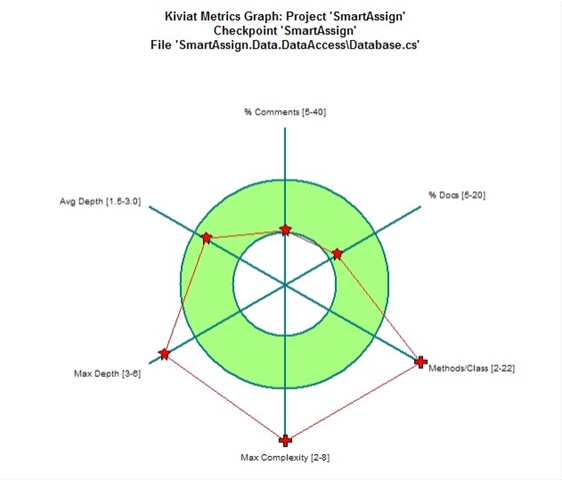

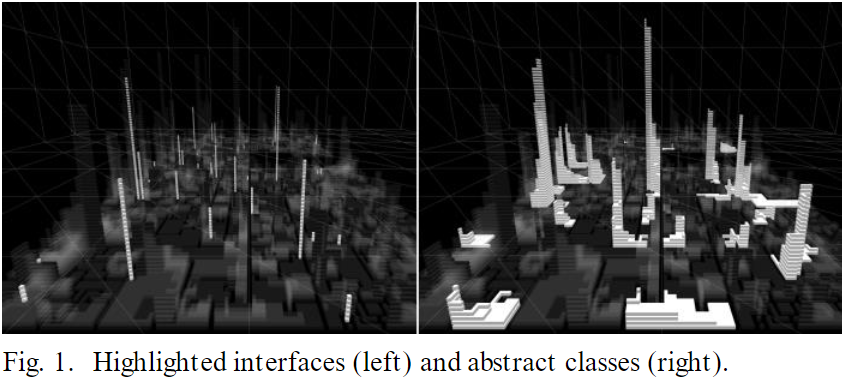

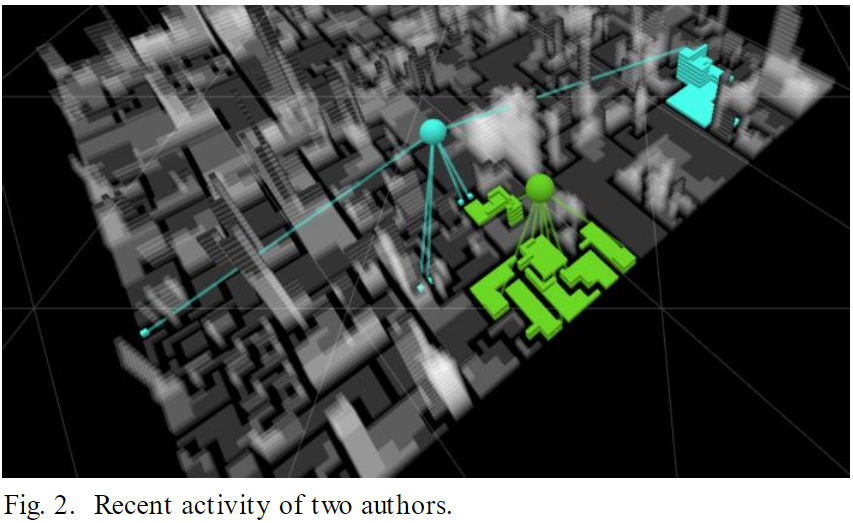

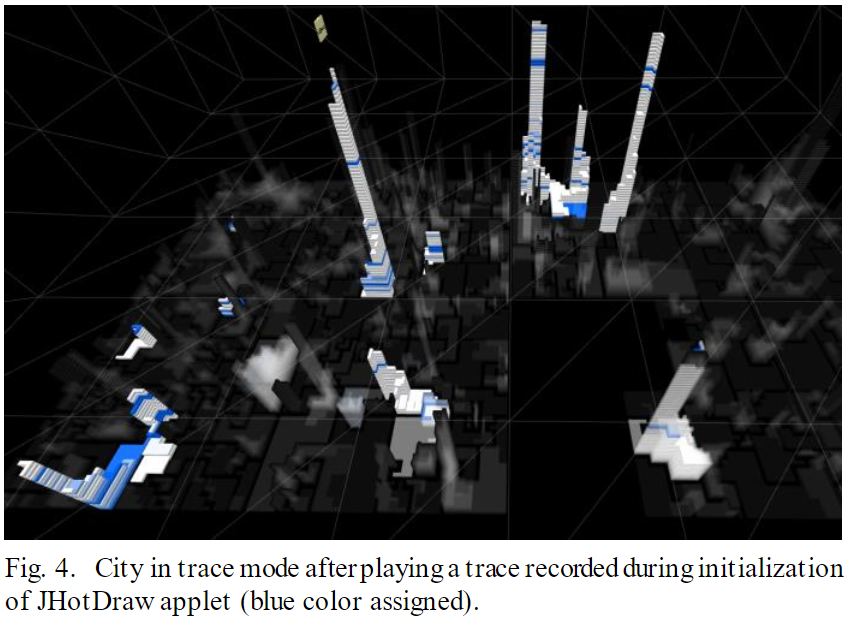

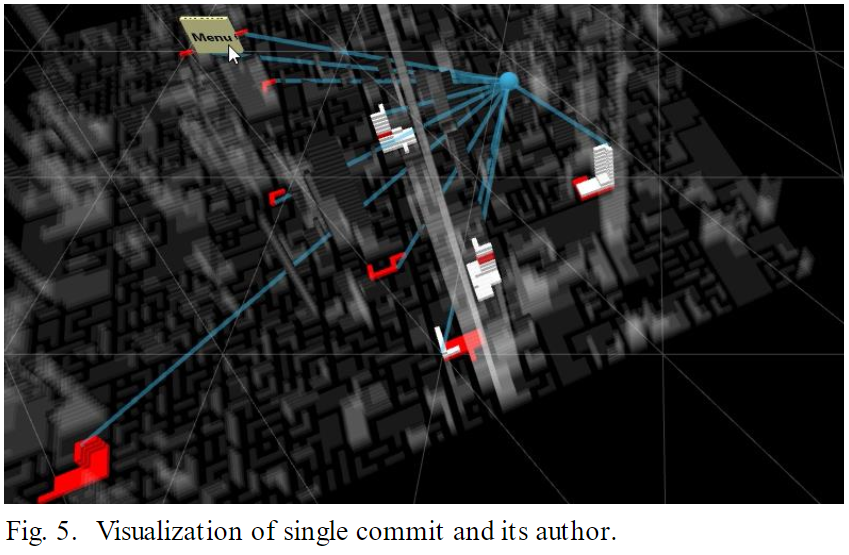

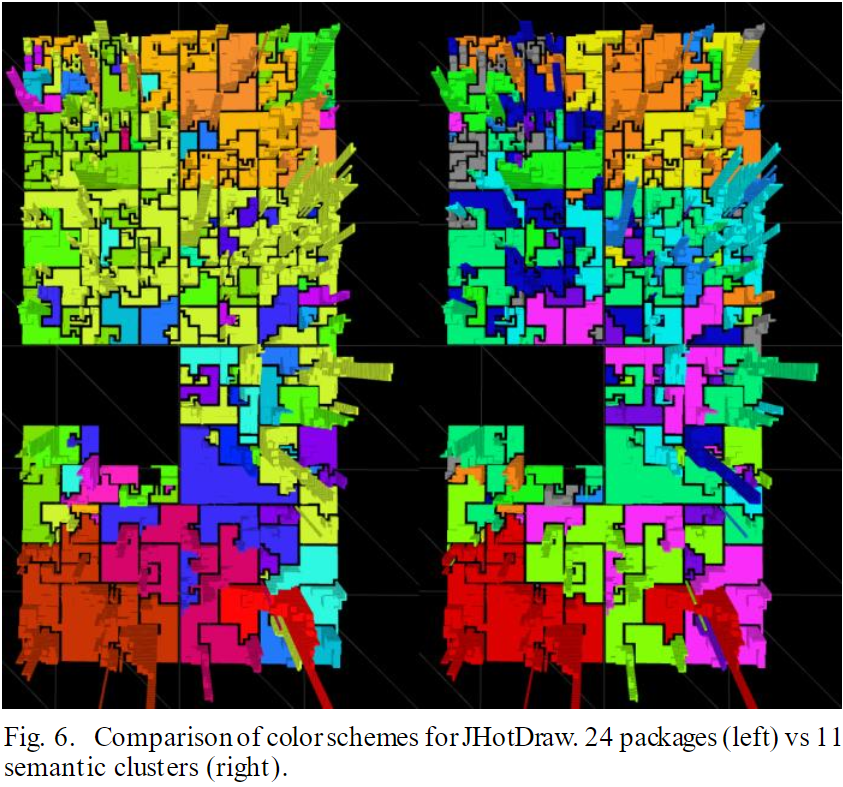

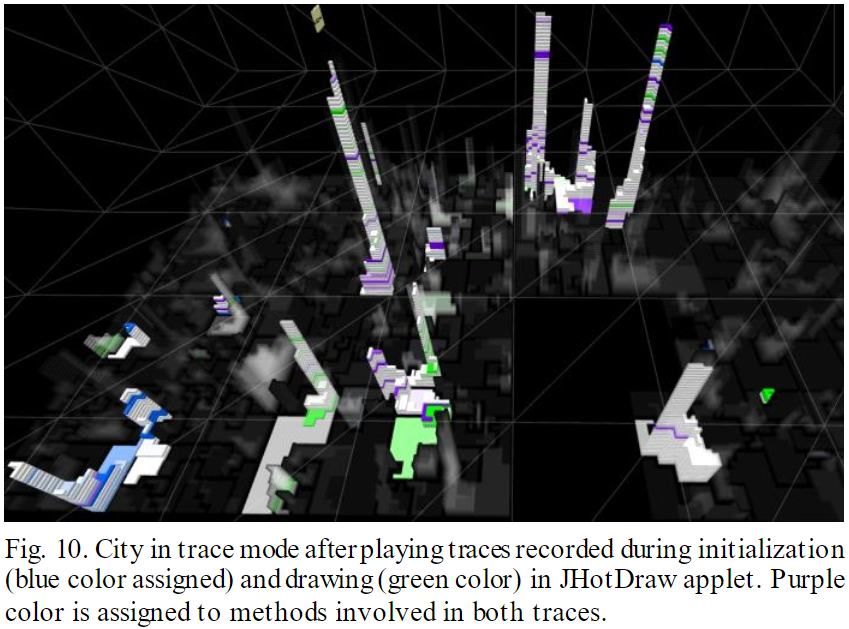

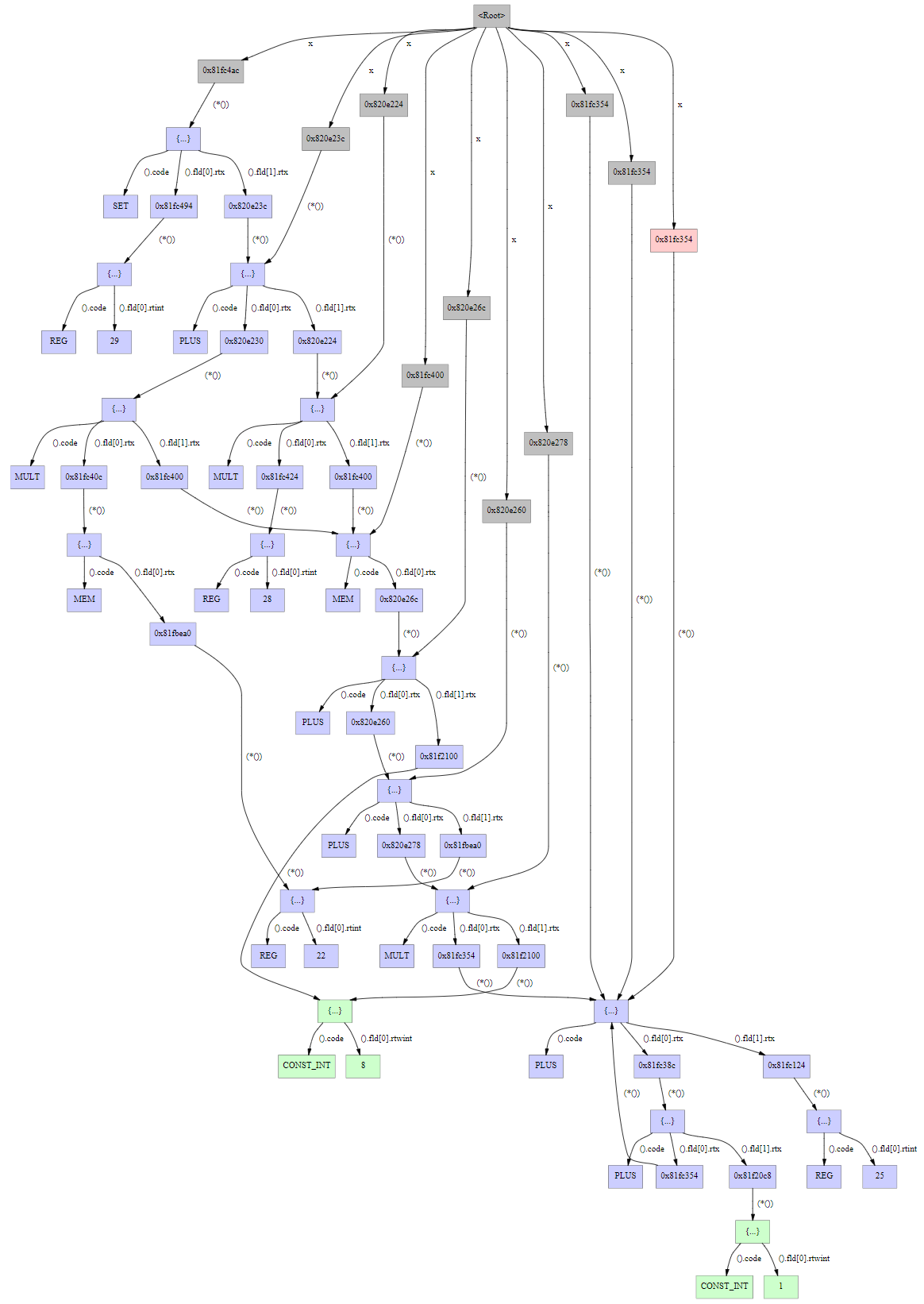

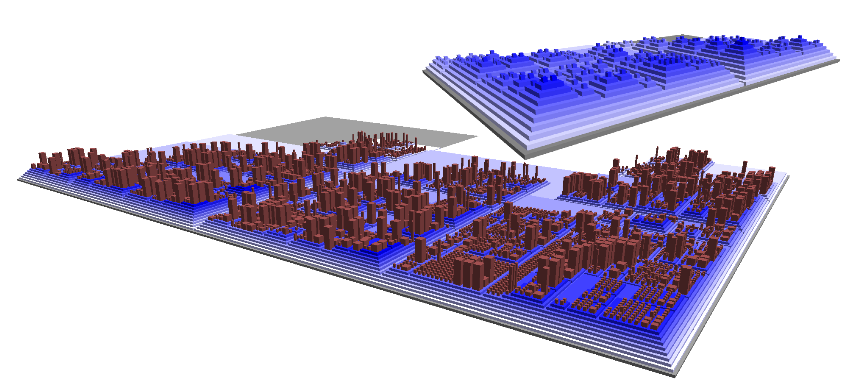

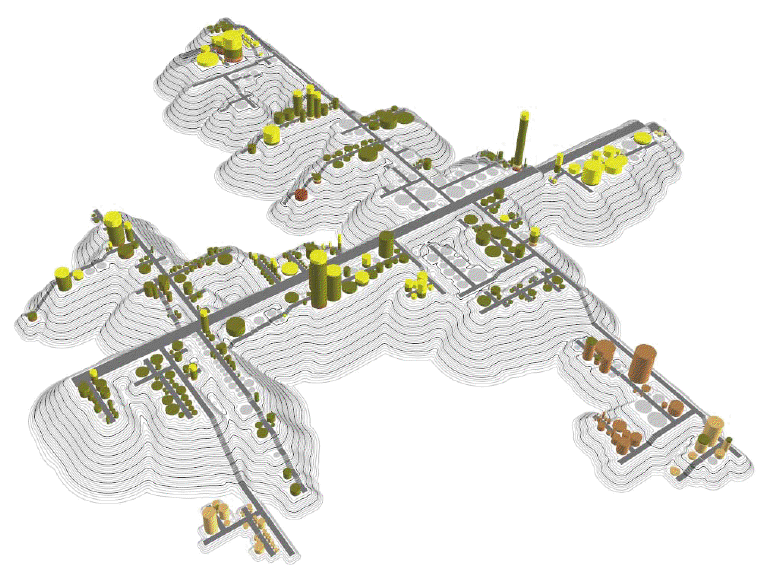

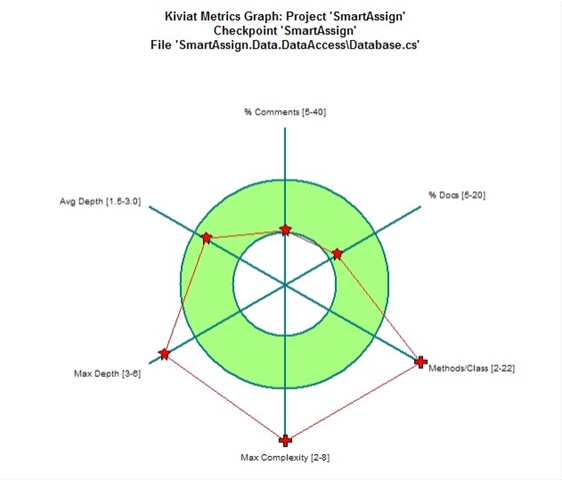

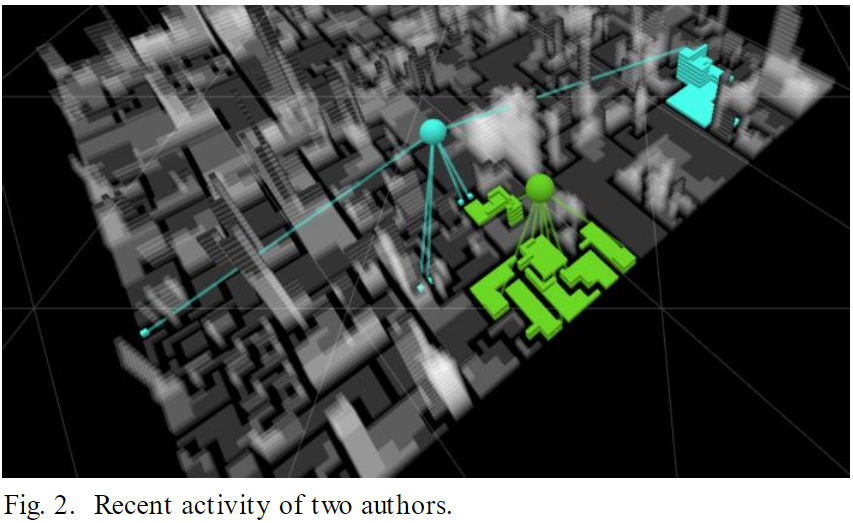

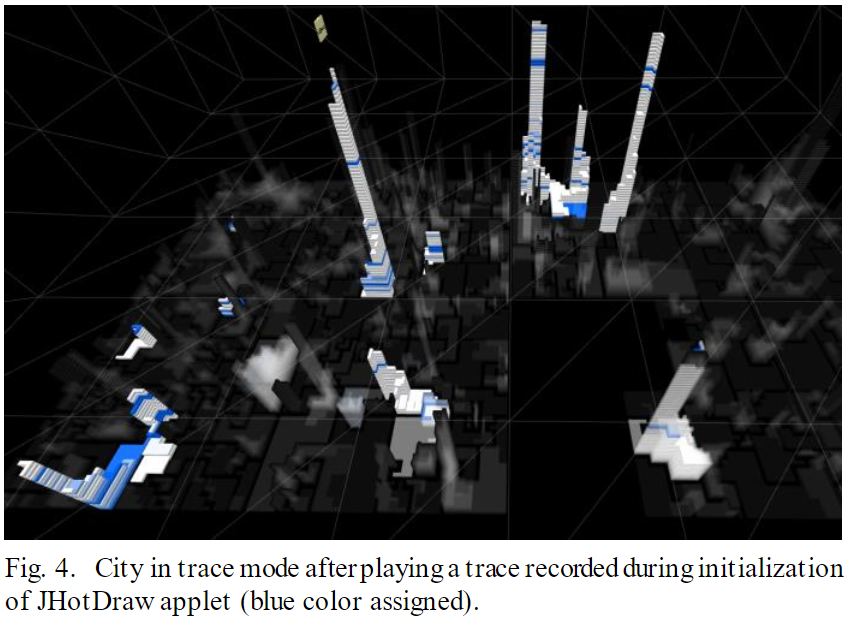

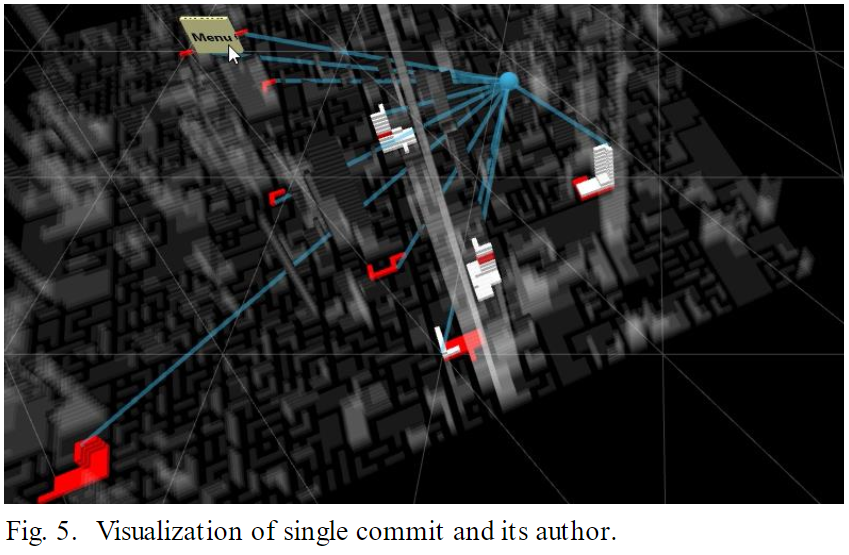

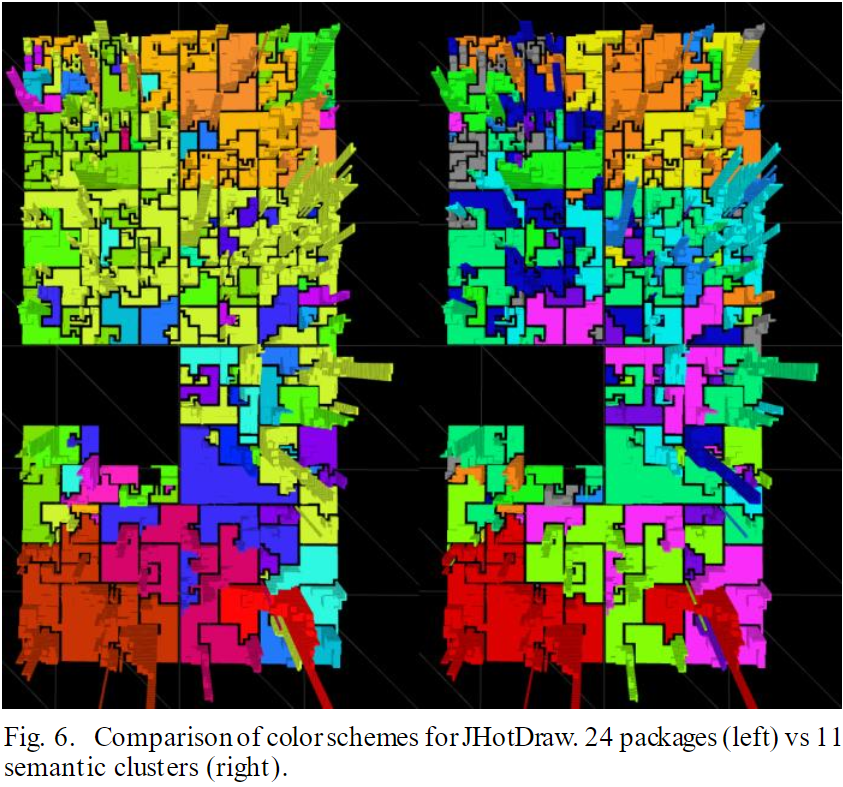

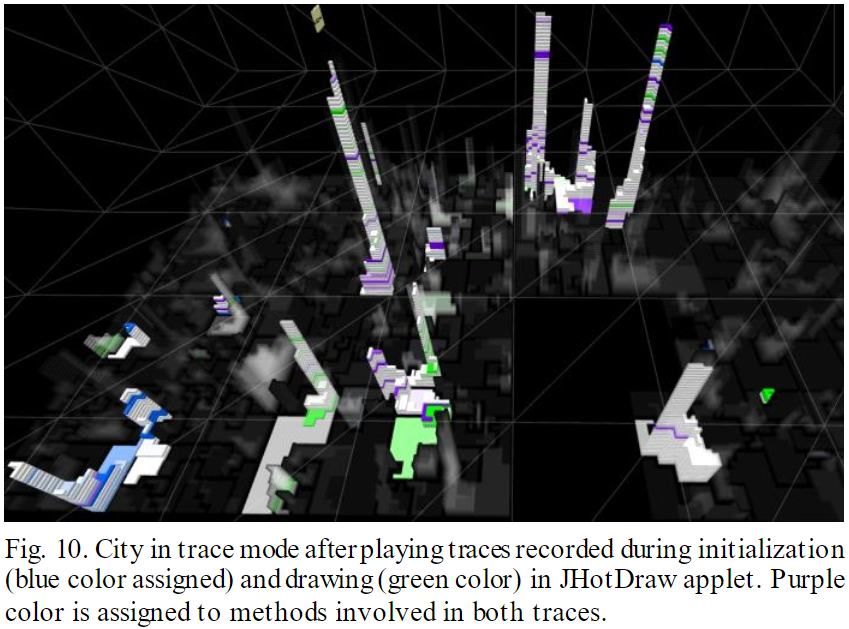

Highlights from [Wettel and Lanza]

- CodeCity

- classes and interfaces are buildings

- building height == # of methods

- width and length proportional to # of attributes (all square)

- position hue, saturation, and transparency are all available

to depict more information

- land topography/elevation depicts package structure

- Visualized on real, large systems, e.g. 8,000 classes

- Layout: largest first, splitting rectangles into pieces, treemap algorithm

- Studied software evolution in a repository

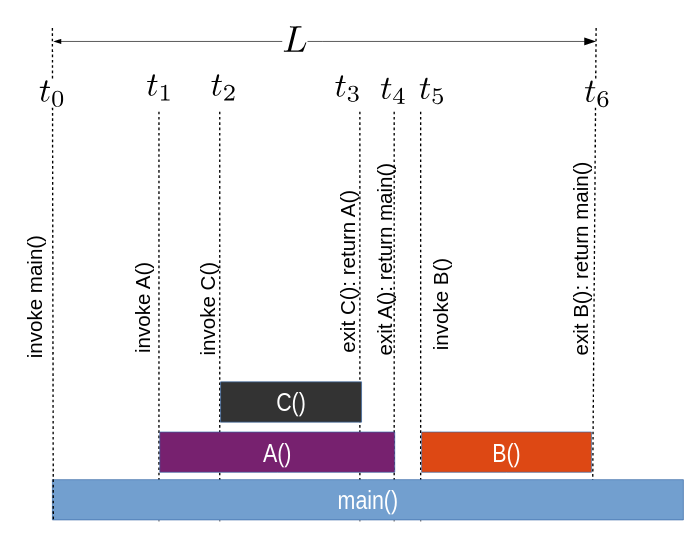

Monitoring Procedure Activity

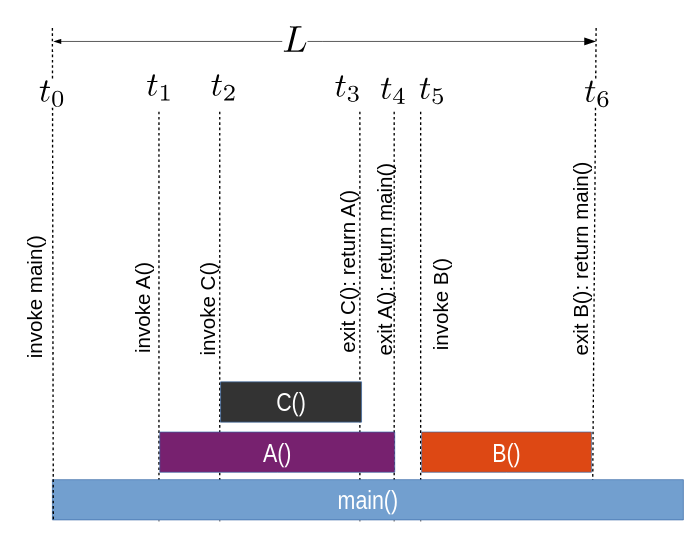

- Procedure activity is a subset of the control flow behavior of

expression evaluation.

- It is related to but far coarser-grained than monitoring line#/col#

- In a normal language monitoring procedure activity

would mean monitoring the stack of procedure activation records

- In a multi-threaded context, there would be a set of stacks of

procedure activation records.

- Just looking at the stack, one might note how deep the stack

gets (not a problem unless it overflows) and look for patterns

that suggest bugs

- Can anyone think of a call-return sequence that suggests a bug?

- Besides correctness, you might imagine looking for performance

problems or tuning opportunities.

Monitoring Icon and Unicon is a little more complicated because procedures

can suspend and be resumed. The events for this behavior are given below.

The include file evdefs.icn defines an event mask named

ProcMask that will select all six of these events.

| Event | Value | Description

|

|---|

E_Pcall | procedure called | Procedure call

|

E_Psusp | value produced | Procedure suspended to caller

|

E_Presum | procedure resumed | Resume a previous suspension

|

E_Pret | value produced | Procedure returned to caller

|

E_Pfail | failing procedure | Procedure failed

|

E_Prem | removed procedure | Procedure removed

|

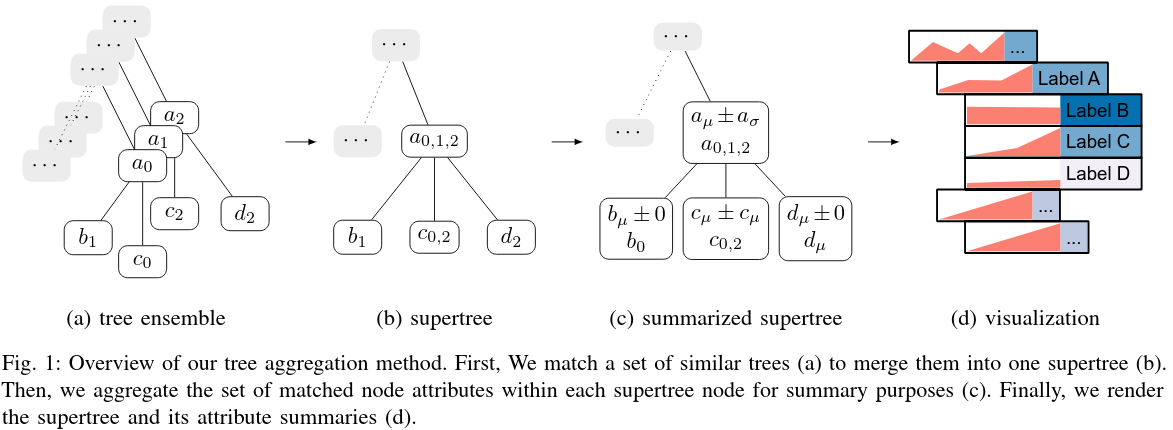

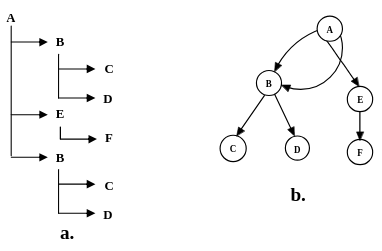

In the presence of suspend/resume, the "call stack" becomes a "call tree",

a.k.a. an activation tree (a better term since

procedures can be activated by more than just calls).

You can just ask for all the procedure activity events, but if your monitor

is doing more than just counting them then it potentially will need to do

more. One way to monitor the activation tree is to build a model of the

tree itself.

- a library procedure named

evaltree(), does this for you.

- you can

link evaltree to link this module into your program.

- study in detail the implementation of

evaltree.icn.

We will look at examples that use evaltree, but first a word on timing.

- Monitoring costs time.

- If it costs too much, folks won't want to do it even

if your successful program visualizations do make pretty moving pictures.

- The instrumentation of all events costs time even if you don't ask for the

event reports

- when you do ask for an event, the event report (co-expression switch)

costs a lot more time.

- It is difficult to even measure the timings of different parts of the

monitoring process.

- You may be able to do a good job by going into the VM C

code and using your own expertise, or using specialty tools for doing

timing, such as gprof.

- This discussion is just based on casual observation.

Example. In the suspects/ directory are many candidates (which one runs the

longest?). We will consider the poetry scrambler for this example.

time ./scramble <scramble.dat

uses the UNIX time(1) command to

measure the runtime externally. It reports something like:

| Sun Sparc, ~9/2007 | Threadripper, 2/2019

|

|---|

1.0u 0.0s 0:03 32% 0+0k 0+0io 0pf+0w

|

0.019u 0.025s 0:00.15 20.0% 0+0k 0+0io 2pf+0w

|

Over a decade ago, that program took 1.0 seconds of user time, 0.0 seconds of

system time, 3 seconds of wall-clock observed time. Out of curiosity,

since it writes out a lot to standard out, I re-timed it directing output

to /dev/null, and it still took a second of user time, but the wall clock

is down to 1 second.

Now I take an almost-empty monitor, timer.icn,

and time it using the UNIX utility.

time timer ./scramble <scramble.dat

and it writes out

| Sun Sparc, ~9/2007 | Threadripper, 2/2019

|

|---|

tp time: 1830 - 0 = 1830

em time: 0 - 0 = 0

1.0u 0.0s 0:03 30% 0+0k 0+0io 0pf+0w

|

tp time: 35 - -5 = 40

em time: 5 - 5 = 0

0.025u 0.018s 0:00.15 20.0% 0+0k 0+0io 2pf+0w

|

Given that timer.icn is the "empty monitor",

what do these numbers tell us?

- The time command doesn't see any appreciable extra time spent

due to the act of monitoring (in 1993 this would have reported

~50% slowdown for the privilege of having the instrumentation present)

- The "monitor" thread thinks it has spent no time at all (almost true)

- 2007 Sparc monitoring facilities reported the program is spending 1.8

seconds, versus system time's 1.0u CPU time, or 3sec wall clock.

- System time program in 2019 reports same overall time (0.15sec)

but fluctuates as to whether user or system time is more to blame...

[clock tick fluctuation?]

- Are threadrippers more like 6x faster than Sparcs, or is it more like 40x?

- CPU (user+system) is pretty routinely a small fraction of wall clock time

- Which timers are more accurate/relevant?

- Is there time spent that is unaccounted for?

- Timing facilities on PCs have resolution/accuracy limits and you will

have to run something for a long time in order to get any useful accuracy.

Time measurement accuracy is limited by tools of

observation and hardware/OS limitations. Another problem with measurement

is that external evironmental considerations (load average, user activity)

change results to some extent. The 2007 measurements were done long ago on

mars.cs.uidaho.edu, a sparc Solaris machine. The "who" command

reported 5 different

people logged in at the time, although the load average was apparently low

(inactive terminal sessions). The 2019 Threadripper numbers were for the

machine in my office running Fedora. Lots of processes, only 1 user.

lecture 15

No Class on Monday

Monday is President's Day.

Mailbag

- How do I draw arrowheads?

- The arrow is to be drawn from the last point

to the point given with the "e,x,y" at the beginning of the pos

attribute. Possible implementations:

- no arrow head, just a line from one to the other

- compute midpoint, draw a circle there

- calculate points on each side of the last point on the line segment

orthogonal to the last line segment, form triangle between them and

the point listed in the "e,x,y"

- Draw using decreasing line widths along the final line segment

- ... what did you-all end up doing?

Upcoming Conferences

Some of you should consider doing a semester project worthy of a research

paper. Some of you might even want to target one of these venues.

- WSCG 2019, papers due March 10, conference in Pilsen Cz 5/27-31

- CGI 2019, papers due March 25, conference in Calgary 6/17-20, (notification Apr 21)

- VISSOFT 2019, papers due Apr 26, conference in Cleveland 9/30-10/1. (notification May 26)

A Brief on Windows Unicon

- I did some debugging recently, related to spaces in pathnames, and

unbreaking an event monitoring bug that was Windows-specific.

- I have put up a .zip with my current .exe's and libraries at

http://www2.cs.uidaho.edu/~jeffery/unicon.zip.

It might or might not be usable, or less broken, than the public

Windows Unicon at the moment.

- I will work testing it, and on a proper Windows installer

at my earliest convenience

- I expect to put out some more improvements, related to drawing

text in the 3D facilities, in time for you to use them in homeworks

Timing, Part 2

- Last time we saw that the timing under a monitor that isn't asking for

any events costs very little.

- I think the times I reported were on cs-445 instead of the threadripper

in my office. Mea culpa. Threadripper is about 4x faster.

- Now let's look at how much it costs to monitor every single location

change, a very frequent event. Check out

timerloc.icn

- Remember: every event report, you are doing a context switch

to a different program, and back! This timer is mostly measuring that

context switch time.

time ../tools/timerloc ./scramble < scramble.dat > /dev/null

tp time: 366 - -6 = 372ms

em time: 394 - 6 = 388ms

0.490u 0.881s 0:01.46 93.8% 0+0k 0+0io 2pf+0w

Wow! Is that a factor of 100x? BTW, a pthreads context switch, where the

OS gets involved because you want to support true multicore or whatever,

costs maybe easily another 100x.

- You don't want

E_Loc events unless you really want them.

- We will see for other types of events, different costs

proportional to event frequency

- The most frequent event of all is probably

E_Opcode,

E_Loc pales in comparison.

- The high cost of event reports is an open area of research.

To work on it we'd go inside the VM runtime system (C code; outside

the scope of this class).

- Suppose you only want E_Loc for certain locations (e.g. breakpoints),

or E_Opcode only for certain instructions?

- Besides event masks, there are also value masks.

- installable, one per event code, thusfar rarely used

Now, I wonder how much evaltree costs? A past student once claimed it was

"slow". I wonder why that would be...

- procedure activity events are frequent.

- Not as frequent as line number changes...but then again

there can be several/many calls on a line of code...

- far more so if you ask for built-ins as well as user-level

- each procedure activity event report costs two co-expression switches

- evaltree itself uses time building and maintaining the tree

- the evaltree callback procedures (monitor application code) uses time

It would be useful to know whether the co-expression switch totally

dominates the time spent in the monitor. Although our intuition says

it does, intuition is not always correct. Evaltree costs: a big case

statement (not very efficient in Icon/Unicon), whose labels are generators

(not very efficient), whose code bodies do allocations and list operations

(pretty darned fast), and call the monitor callback procedure. One way

to do our experiment is to measure &time before and after each EvGet(),

and instead of measuring time spent in the target program, measure the

the other time, time spent in the monitor. Another way to do the experiment

is to rewrite the evaltree() functionality for speed instead of clarity, and

see if it is measurably different or not.

Compare evaltime.icn,

evaltime2.icn,

evaltime3.icn, showing an

attempt to do this experiment.

time evaltime ./scramble <scramble.dat

shows

| Sun Sparc, ~9/2007 | Threadripper, 2/2019

|

|---|

tp time: 2760--10=2770

em time: 6670-0=6670

10.0u 0.0s 0:18 55% 0+0k 0+0io 0pf+0w

|

tp time: 56--7=63

em time: 207-7=200

0.212u 0.094s 0:00.30 100.0% 0+0k 0+0io 0pf+0w

|

Using evaltree, the monitor is accounting for the vast majority of the

time, and the time reported for the target program is much slower than

for the unmonitored or empty monitored cases. evaltime2, which skips

the evaltree mechanism but uses a big case statement, gives:

| Sun Sparc, ~9/2007 | Threadripper, 2/2019

|

|---|

tp time: 2490-0=2490

em time: 2660-0=2660

5.0u 0.0s 0:08 61% 0+0k 0+0io 0pf+0w

|

tp time: 55--7=62

em time: 90-7=83

0.113u 0.085s 0:00.19 100.0% 0+0k 0+0io 0pf+0w

|

Cost of monitoring is substantially lower, although the particular

details may be affected by machine load fluctuation. One would have

to run several times and take averages for the numbers to be meaningful.

Using evaltime3, which avoids the large case statement, we get

| Sun Sparc, ~9/2007 | Threadripper, 2/2019

|

|---|

tp time: 2580-0=2580

em time: 2050-0=2050

5.0u 0.0s 0:07 70% 0+0k 0+0io 0pf+0w

|

tp time: 60--8=68

em time: 76-8=68

0.088u 0.103s 0:00.19 94.7% 0+0k 0+0io 0pf+0w

|

At this point, monitoring procedure activity is seen to impact

execution time substantially, but at least the monitor is taking

no more time than the target program.

Many Morals of the story:

- the UNIX

time(1) command is not very fine-grained

or precise.

- The monitoring of

&time gives times in milliseconds

which might or might not be reliable, they report what the C

millisec() function returns.

- The monitoring facilities attempt to explicitly separate the

&time reported by the TP from that of the EM.

My best guess is that this is imperfect, and TP is being charged

for part of the co-expression time.

- The coding of the EM has a (surprisingly?)

large impact on the practicality of the EM. Mastering

the language and coding elegantly actually matters for EM authors.

- Co-expression switch time may dominate but not totally dominate timings.

Griswold was fond of saying that on at least one old CPU where it was

measured, the co-expression switch cost less than a procedure call

in Icon. This is probably not true for us, but co-expression costs

are not the only factor in performance and not always the primary factor.

- The evaltree.icn module might be rewritable for

much better speed. It begs to have Hani's clever case tag applied to it,

and timings measured.

- Icon and Unicon VM compilers need a decent case expression optimization.

iconc might already do one, I am not sure.

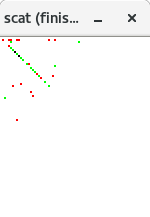

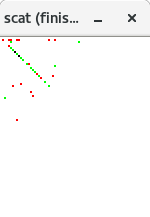

scat

The scat program is a simple application of evaltree. You kind of have

to see this one running to appreciate it, so let's try and demo it.

It links in a scatterplot library which

might or might not be useful to you; scatlib implements the log scaling

that scat uses.

$include "evdefs.icn"

link evinit

link evaltree

link scatlib

Scat uses several global variables, three tables to remember what

has been plotted, and three clones set with different colors.

global at, # table: sets of procedures at various locations

call, # table: call counts

rslt, # table: result counts

red,

green,

black

Scat uses a generic evaltree-compatible record type for modeling;

no extra payload added.

record activation (node, parent, children)

The initialization is straightforward.

procedure main(av)

local mask, current_proc, L, max, i, k, child, e

EvInit(av) | stop("can't monitor")

scat_init()

red := Clone(&window, "fg=red")

green := Clone(&window, "fg=green")

black := Clone(&window, "fg=black")

current_proc := activation(,activation(,,,,[]),[])

Control is handed over to evaltree, which calls scat_callback

with events

evaltree(ProcMask ++ FncMask ++ E_MXevent,

scat_callback, activation)

WAttrib("label=scat (finished)")

EvTerm(&window)

end

scat_callback mostly calls scat_plot, which calls colorfor to decide

what color to plot with.

procedure scat_callback(new, old)

case &eventcode of {

E_Pcall:

scat_plot(new.node, 1, 0, , colorfor)

E_Psusp | E_Pret:

scat_plot(old.node, 0, 1, , colorfor)

E_Fcall:

scat_plot(new.node, 1, 0, , colorfor)

E_Fsusp | E_Fret:

scat_plot(old.node, 0, 1, , colorfor)

E_MXevent: {

case &eventvalue of {

"q" | "\033": stop("terminated")

&lpress : {

repeat {

scat_click(proced_name)

if Event() === &lrelease then

break

}

}

}

}

}

end

Procedure proced_name returns the name of a procedure, taken from its image.

procedure proced_name(p)

return image(p) ? {

[ =("procedure "|"function "), tab(0) ]

}

stop(image(p), " is not a procedure")

end

Procedure colorofone distinguishes procedures from functions.

procedure colorofone(p)

return if match("procedure ", image(p))

then red else green

end

Procedure colorfor uses a list (of procedures/functions) to select

what color to plot. If it is not the first color choice and the

subsequent value should be a different color, resort to black.

Return a red or green if all values say to be red or all say to be green.

procedure colorfor(L)

if *L = 0 then return &window

every x := !L do {

if not (/c := colorofone(x)) then

if colorofone(x) ~=== c then

return black

}

return c

end

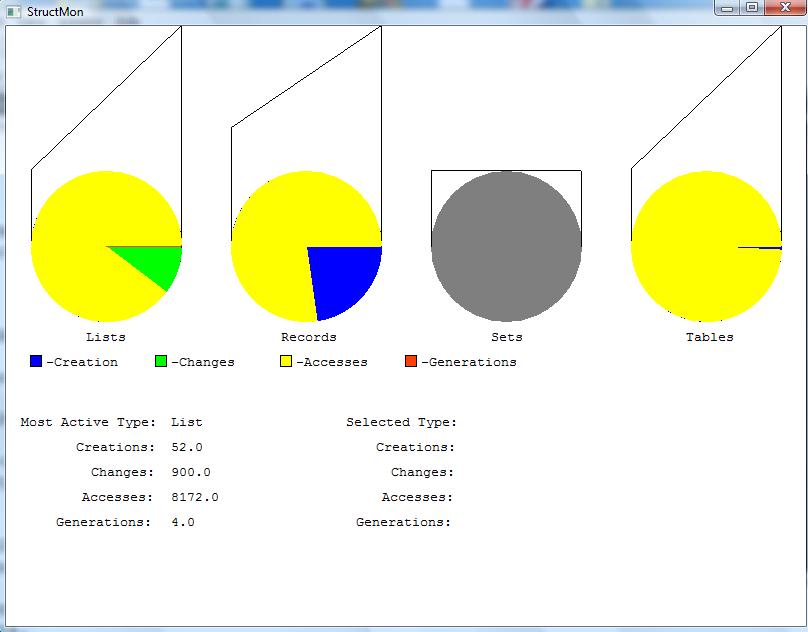

What is scat good for?

scat is cooler than you think. It shows not just who the hot procedures

are, it also shows what procedures always fail, what procedures generate

lots of results per call, and what procedures (predicates) generate

between 0 and 1 result per call.

lecture 16

Office Hours Pushback

My office hours today will start at 3pm due to my boss requesting the

half hour from 2:30-3.

More Class Cancellations

I am going to ACM SIGCSE in Minneapolis February 26-March 3. We will miss a

Wednesday and a Friday class that week, sorry! I will be reachable by e-mail

and will try to accommodate office appointment requests via Zoom.

HW#3 Discussion

- As a reminder, if you did HW#1 for C, you get to re-tool a bit

for HW#3 and beyond, as we will be monitoring Unicon programs. If any

of you are interested in working on building/updating my monitoring

framework for C/C++ or Java after the semester, please come visit.

- I tweaked one of your HW#1 solutions a bit and ran it on all the

suspects, so you should have no shortage

of json files to play with. Try out your HW#3 on all of them, and

look for interesting images/animations.

- I recommend you do as much HW#3 between now and Monday as possible,

so that if you need to consult me in person, you can do it before

Minneapolis.

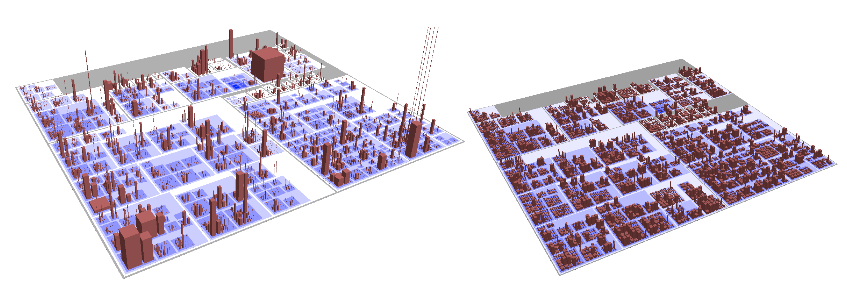

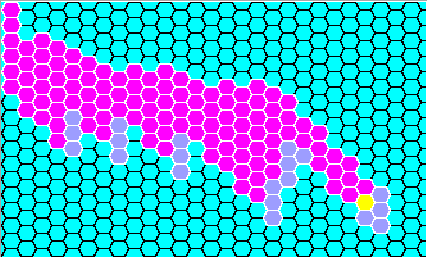

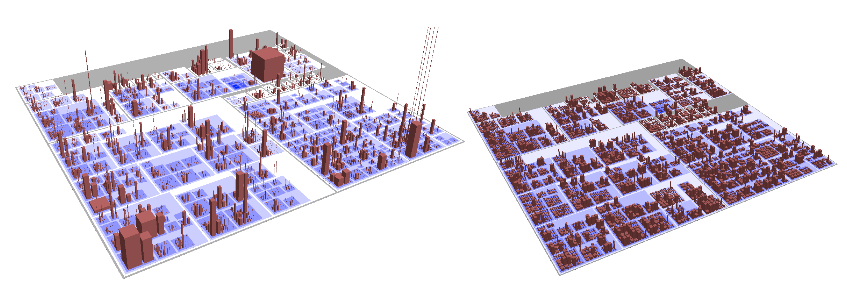

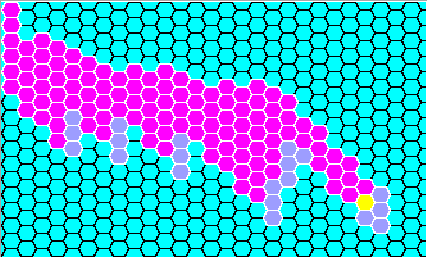

algae

The flagship demonstration of the evaltree framework is a fairly literal

visualization of the activation tree.

EvInit(av) | stop("Can't EvInit ",av[1])

codes := algae_init(algaeoptions)

evaltree(codes, algae_callback, algae_activation)

WAttrib("windowlabel=Algae: finished")

EvTerm(&window)

Algae takes command line options to say how much to monitor, how to

graphically depict the tree, etc. It deliberately chooses a simple-minded

incremental graphic, coming from a time that graphic performance was deemed

to be a likely monitor bottleneck. By default it uses hexagons for

activation records (compare hexagons with a square grid). A real but still

INCREMENTAL tree layout algorithm would be better.

procedure algae_init(algaeoptions)

local t, position, geo, codes, i, cb, coord, e, s, x, y, m, row, column

t := options(algaeoptions,

winoptions() || "P:-S+-geo:-square!-func!-scan!-op!-noproc!-step!")

/t["L"] := "Algae"

/t["B"] := "cyan"

scale := \t["S"] | 12

delete(t, "S")

if \t["square"] then {

spot := square_spot

mouse := square_mouse

}

else {

scale /:= 4

spot := hex_spot

mouse := hex_mouse

}

codes := cset(E_MXevent)

if /t["noproc"] then codes ++:= ProcMask

if \t["scan"] then codes ++:= ScanMask

if \t["func"] then codes ++:= FncMask

if \t["op"] then codes ++:= OperMask

if \t["step"] then step := 1

hotspots := table()

&window := Visualization := optwindow(t) | stop("no window")

numrows := (WHeight() / (scale * 4))

numcols := (WWidth() / (scale * 4))

wHexOutline := Color("white") # used by the hexagon library

if /t["square"] then starthex(Color("black"))

return codes

end

The real work happens in algae_callback()

procedure algae_callback(new, old)

local coord, e

initial {

old.row := old.parent.row := 0; old.column := old.parent.column := 1

}

case &eventcode of {

!CallCodes: {

new.column := (old.children[-2].column + 1 | computeCol(old)) | stop("eh?")

new.row := old.row + 1

new.color := Color(&eventcode)

spot(\old.color, old.row, old.column)

}

!ReturnCodes |

!FailCodes: spot(Color("light blue"), old.row, old.column)

!SuspendCodes |

!ResumeCodes: spot(old.color, old.row, old.column)

!RemoveCodes: {

spot(Color("black"), old.row, old.column)

WFlush(Color("black"))

delay(100)

spot(Color("light blue"), old.row, old.column)

}

E_MXevent: do1event(&eventvalue, new)

}

spot(Color("yellow"), new.row, new.column)

coord := location(new.column, new.row)

if \step | (\breadthbound <= new.column) | (\depthbound <= new.row) |

\ hotspots[coord] then {

step := &null

WAttrib("windowlabel=Algae stopped: (s)tep (c)ont ( )clear ")

while e := Event() do

if do1event(e, new) then break

WAttrib("windowlabel=Algae")

if \ hotspots[coord] then spot(Color("light blue"), new.row, new.column)

}

end

Boring square graphics:

procedure square_spot(w, row, column)

FillRectangle(w, (column - 1) * scale, (row - 1) * scale, scale, scale)

end

# encode a location value (base 1) for a given x and y pixel

procedure square_mouse(y, x)

return location(x / scale + 1, y / scale + 1)

end

A whole new meaning for the term "graphical breakpoints":

#

# setspot() sets a breakpoint at (x,y) and marks it orange

#

procedure setspot(loc)

hotspots[loc] := loc

y := vertical(loc)

x := horizontal(loc)

spot(Color("orange"), y, x)

end

#

# clearspot() removes a "breakpoint" at (x,y)

#

procedure clearspot(spot)

local s2, x2, y2

hotspots[spot] := &null

y := vertical(spot)

x := horizontal(spot)

every s2 := \!hotspots do {

x2 := horizontal(s2)

y2 := vertical(s2)

}

spot(Visualization, y, x)

end

User input handling:

#

# do1event() processes a single user input event.

#

procedure do1event(e, new)

local m, xbound, ybound, row, column, x, y, s

case e of {

"q" |

"\e": stop("Program execution terminated by user request")

"s": { # execute a single step

step := 1

return

}

"C": { # clear a single break point

clearspot(location(new.column, new.row))

return

}

" ": { # space character: clear all break points

if \depthbound then {

every y := 1 to numcols do {

if not who_is_at(depthbound, y, new) then

spot(Visualization, depthbound, y)

}

}

if \breadthbound then {

every x := 1 to numrows do {

if not who_is_at(x, breadthbound, new) then

spot(Visualization, x, breadthbound)

}

}

every s := \!hotspots do {

x := horizontal(s)

y := vertical(s)

spot(Visualization, y, x)

}

hotspots := table()

depthbound := breadthbound := &null

return

}

&mpress | &mdrag: { # middle button: set bound box break lines

if m := mouse(&y, &x) then {

row := vertical(m)

column := horizontal(m)

if \depthbound then { # erase previous bounding box, if any

every spot(Visualization, depthbound, 1 to breadthbound)

every spot(Visualization, 1 to depthbound, breadthbound)

}

depthbound := row

breadthbound := column

#

# draw new bounding box

#

every x := 1 to breadthbound do {

if not who_is_at(depthbound, x, new) then

spot(Color("orange"), depthbound, x)

}

every y := 1 to depthbound - 1 do {

if not who_is_at(y, breadthbound, new) then

spot(Color("orange"), y, breadthbound)

}

}

}

&lpress | &ldrag: { # left button: toggle single cell breakpoint

if m := mouse(&y, &x) then {

xbound := horizontal(m)

ybound := vertical(m)

if hotspots[m] === m then

clearspot(m)

else

setspot(m)

}

}

&rpress | &rdrag: { # right button: report node at mouse loc.

if m := mouse(&y, &x) then {

column := horizontal(m)

row := vertical(m)

if p := who_is_at(row, column, new) then

WAttrib("windowlabel=Algae " || image(p.node))

}

}

}

end

Calculating which activation a given click refers to:

#

# who_is_at() - find the activation tree node at a given (row, column) location

#

procedure who_is_at(row, col, node)

while node.row > 1 & \node.parent do

node := node.parent

return sub_who(row, col, node) # search children

end

#

# sub_who() - recursive search for the tree node at (row, column)

#

procedure sub_who(row, column, p)

local k

if p.column === column & p.row === row then return p

else {

every k := !p.children do

if q := sub_who(row, column, k) then return q

}

end

A similar calculation for placing new nodes

#

# computeCol() - determine the correct column for a new child of a node.

#

procedure computeCol(parent)

local col, x, node

node := parent

while \node.row > 1 do # find root

node := \node.parent

if node === parent then return parent.column

if col := subcompute(node, parent.row + 1) then {

return max(col, parent.column)

}

else return parent.column

end

#

# subcompute() - recursive search for the leftmost tree node at depth row

#

procedure subcompute(node, row)

# check this level for correct depth

if \node.row = row then return node.column + 1

# search children from right to left

return subcompute(node.children[*node.children to 1 by -1], row)

end

How to use Clone()

#

# Color(s) - return a binding of &window with foreground color s;

# allocate at most one binding per color.

#

procedure Color(s)

static t, magenta

initial {

magenta := Clone(&window, "fg=magenta") | stop("no magenta")

t := table()

/t[E_Fcall] := Clone(&window, "fg=red") | stop("no red")

/t[E_Ocall] := Clone(&window, "fg=chocolate") | stop("no chocolate")

/t[E_Snew] := Clone(&window, "fg=purple") | stop("no purple")

}

if *s > 1 then

/ t[s] := Clone(&window, "fg=" || s) | stop("no ",image(s))

else

/ t[s] := magenta

return t[s]

end

Graphic Design(s) of the Day

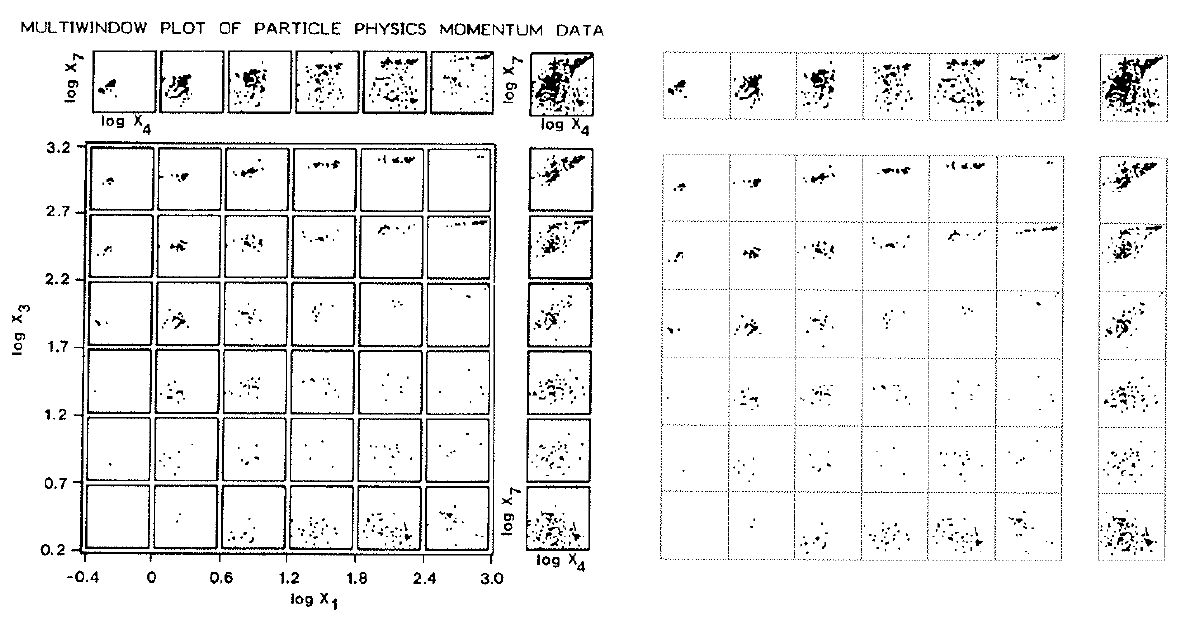

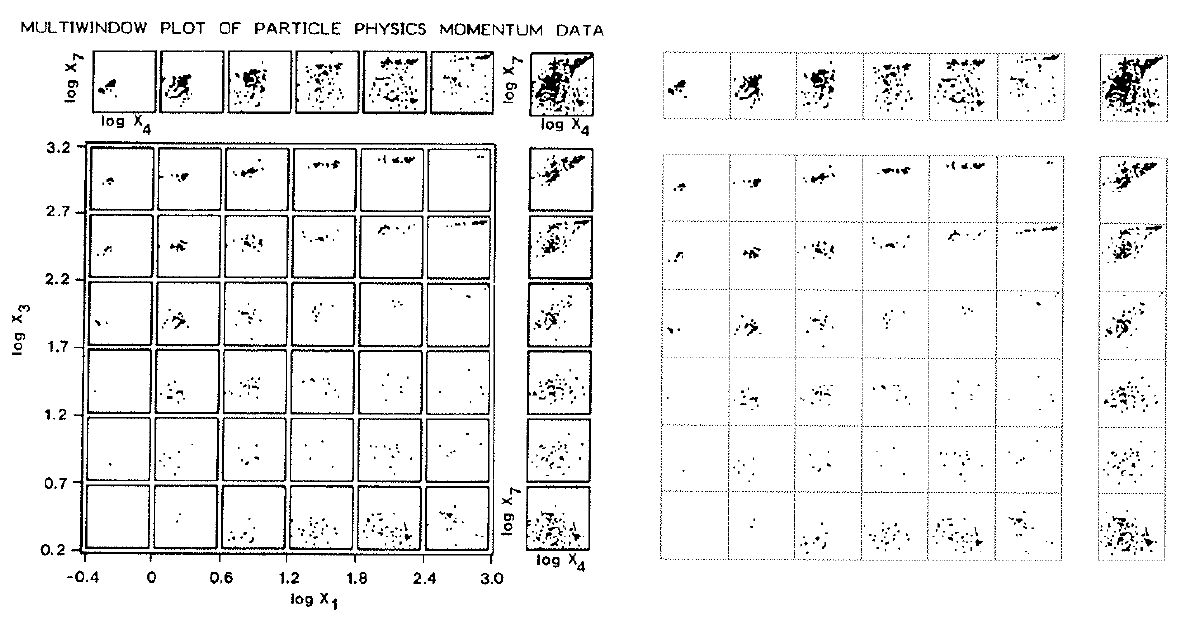

Consider the Tukeys' Multiwindow- and Box-Plots on the left,

and Tufte's Data-ink maximization on the right.

Consider the Tukeys' Multiwindow- and Box-Plots on the left,

and Tufte's Data-ink maximization on the right.

lecture 17

HW#2 Feedback

- vague HW spec? That is on Dr. J

- bugs in Unicon? That is on Dr. J

- Still, to give your program proper credit

I want to see it work on a variety of inputs.

- I tested with: hirose.json,

poem.json, and

typeinfer.json.

More broadly I might want to run it on any suspects/*.json

- Found a bug thanks to typeinfer.json: .dot format has reserved words that

we have to avoid! Like "node" for example. What all should we mangle

in translating .json to .dot format?

- Found a bug with open(cmd, "p"), when "dot.exe" was on the path in

a directory with spaces in its names, things did not go well. Fixed.

- I will accept one fixed/improved resubmit of HW#2 for

regrading purposes.

Windows Users' Notes

Reading

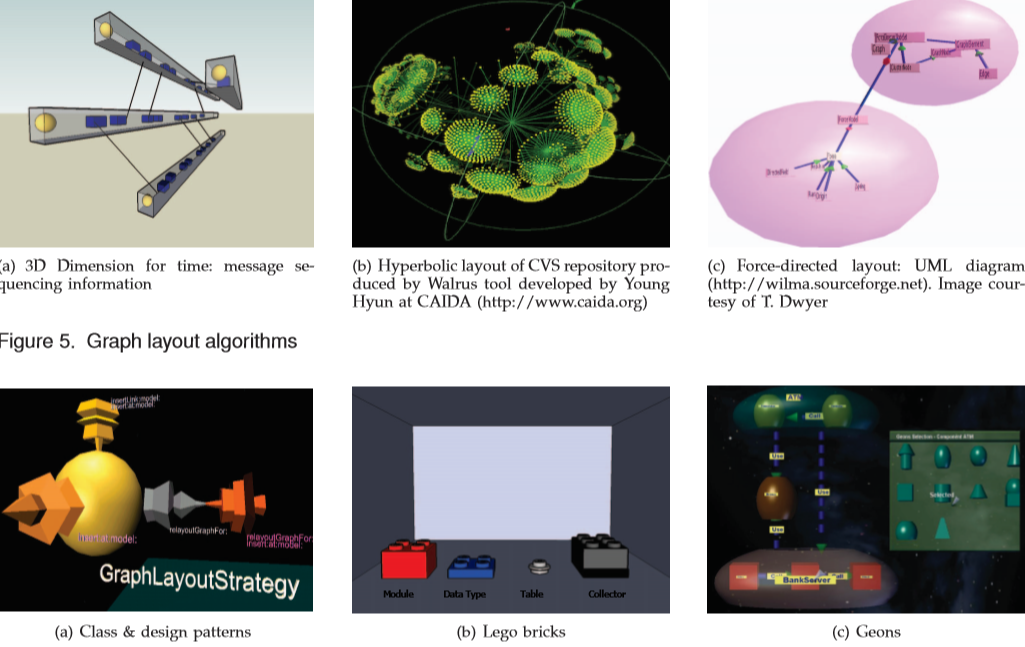

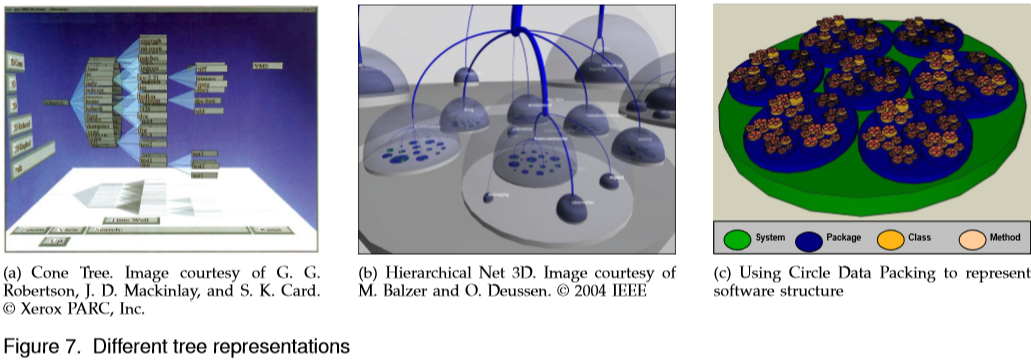

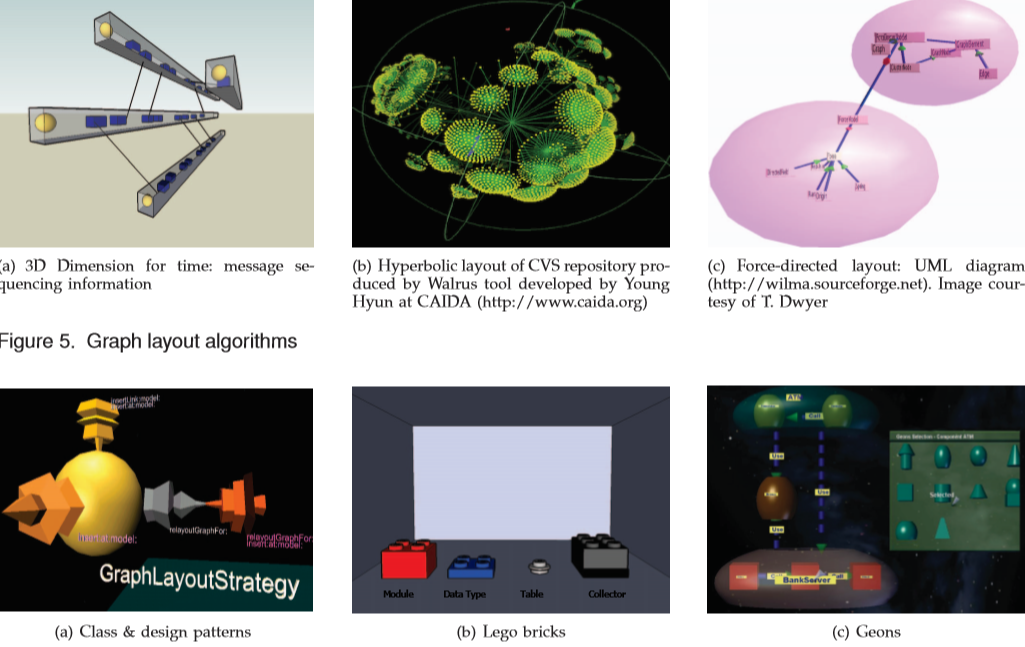

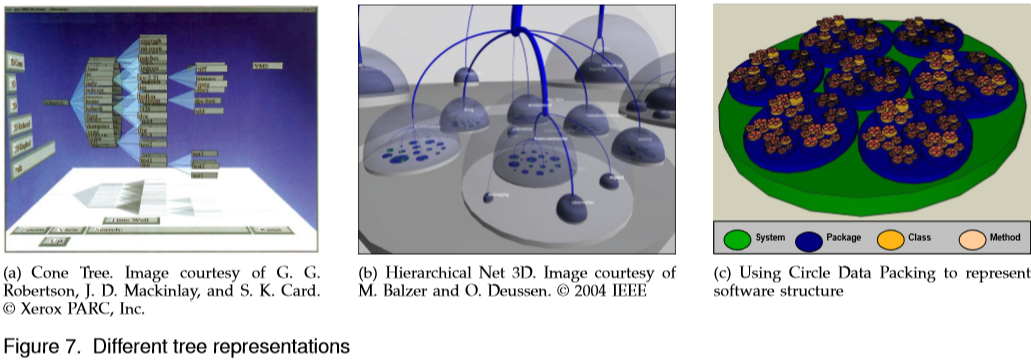

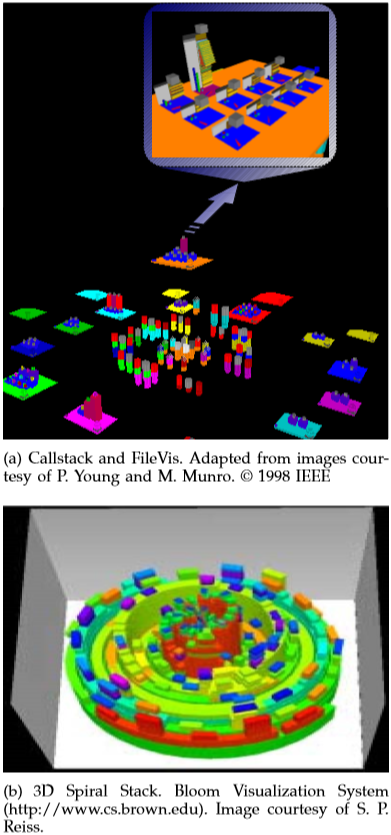

Discussion of "Overview of 3D Software Visualization"

- What was your favorite visualization idea from this paper?

- Define the three types of cognitive processes that visualization supports:

- Exploratory/Discovery

- Analytical/Decision-making

- Descriptive/Explanation

- What is the difference between scientific visualization and information

visualization?

- What are the main arguments as to whether to use 2D vs. 3D?

| 2D | 3D

|

|---|

| Pro | - fast

- low CPU

- cheap

- ubiquitous

- trained from infancy

| - density

- composition

- perception

- familiarity

|

|---|

| Con |

| - compute intensive

- hard to program

- hard to do 3DUI

- occlusion

|

|---|

- I dismiss "Augmented 2D" views out of hand. What about Adapted 2D views,

are they useful? What about combined 2D+3D presentations?

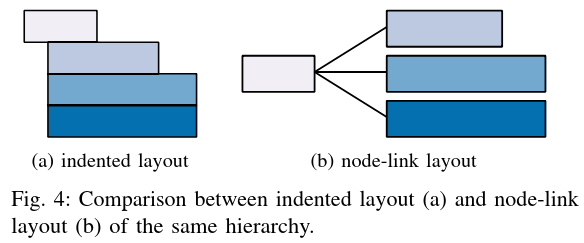

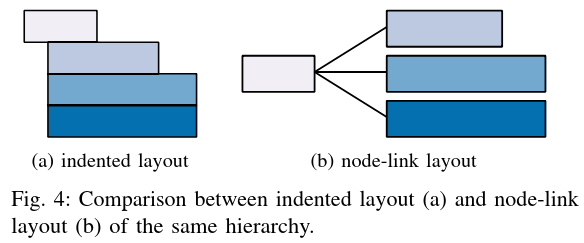

- Space metaphor: HUGE potential. See Figure 4.

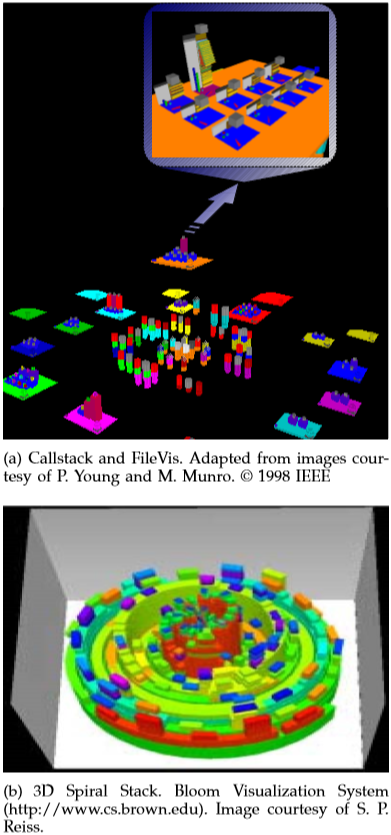

- Which was your favorite 3D layout? Which ones pose questions?

- Which was your favorite 3D tree layout? Which ones pose questions?

- What did you think of the circular/spiral depictions of call stacks?

GUI Monitors

- Unicon has a GUI class library, written by Robert Parlett

- big, feature rich, inspired-by-Java

- GUI programming in Unicon has a learning

curve comparable to GUI programming in other languages.

- Homework #4 probably will include adding a GUI to your work

Step #1 in GUI exploration is usually to get familiar with the interface

builder program; in our case that is IVIB. (Demo of IVIB goes here).

IVIB generates code that looks like this.

- The 70-line application creates a dialog and calls

show_modal()

- For a normal GUI app you then fill in the method bodies for

whatever events you've requested.

- For most applications, it is not necessary to understand much of the

scaffolding and large classes you inherit behavior from.

- Unicon Technical Report UTR#6 teaches the IVIB basics.

IVIB let's you draw a GUI and generates the code for you. For a program

execution monitor the main question will be: how to merge the event streams,

or how to merge the event processing loops, from the GUI and from the

monitored program's events. To accomplish this, you need to know more about

the underlying GUI classes.

There are 3 classes that most Unicon GUI programmers

need to become semi-comfortable with:

- Component

- Component is superclass of all basic visible GUI elements in an

application: buttons, sliders, lists, editable text boxes, and so

on. Components are generally organized hierarchically -- they form a tree in

Venn diagram style, with larger background components containing smaller

more active components.

- Dialog

-

A Dialog is a component that constitutes the root of some window -- it owns

a window and therefor can receive input events, which it then needs to route

down the tree to the correct leaf.

- Dispatcher

- The Dispatcher class handles the actual

event-processing loop, allowing for multiple dialogs, and wall-clock time

events in addition to GUI events.

In order to merge the Monitor and GUI event streams, we might do one of

the following:

- keep the monitor event loop primary, and poll for GUI events (!)

- keep the GUI event loop primary, and peridically read monitor events (!)

There is no way to select() from between GUI and monitor or

poll both, because to ask for an EvGet() is to transfer control

to the target program (freezing the GUI of the monitor until an event

occurs). However, you can call EvGet() with an

E_Tick along with your other events if you want to be sure to

regain control periodically even if the other monitored events do not occur

for long periods... then your only danger is: what if the target program

that you are monitoring chooses to block on some input it wants to read?

Additional notes on GUI-monitors:

- "piano.icn" had been doing its own input event processing, with