CS 210: Programming Languages Lecture Notes

|

|

|

Spring break

Covid-19

etc.

|

|

|

|

lecture #1

Welcome to CS210, here is our Syllabus

The Computer Science Assistance Center (CSAC), located in the JEB floor "2R"

area, has tutors available during most of "business hours" Monday through

Friday.

Most likely you will need help in this course; get to know who works

the CSAC and which ones know which languages.

- The main textbook in this class is by Adam Webber. Either the first or

the current (2nd) edition will be adequate. The textbook for the class

will be supplemented by a large number of language references as well as

this set of lecture notes,

which are revised substantially each time the course is offered.

Printing up front at start-of-semester is inadvisable, since I will

typically be re-ordering material and adding a new language to the mix.

It is OK to read ahead, but plan to re-read after the lecture is given.

Plan to spend quality time here in the class lecture notes.

- all homeworks in this class should be turned in in .zip compressed

format with no binary or executable files included. Submission is on

bblearn.uidaho.edu, which I will occasionally call "blackboard", which

is the commercial name of that product. From time to time

some class clown turns in a homework that is a gzip-compressed tar file,

a .rar, or some other archive format.

- We will discuss, and you are tasked to write homeworks as "literate"

programs. Your submitted .zip files must include your source code and a

makefile that has a "make pdf" rule.

Produce code as a document that you want me to be able to read

and understand. Points will be assigned for readability.

Reading

Read Webber Chapters 1-2.

Slides for Chapter 1

We went through slides #1-21 from Webber Ch. 1.

You should scan through the rest of them and see what questions they raise.

lecture #2

Picking up with Programming Languages

- Reminder: no class on Monday (Martin Luther Day)

- Have you read Webber Ch. 1-2 yet? Might be a quiz next Wednesday

- Webber Chapter 2: programming language syntax

- First Big Homework Unit: programming language syntax

- First Language Paradigm: Declarative

- First Language for you to Learn: Flex (and then Bison)

- I am gonna give you some of my own thoughts on Programming Languages before

we go to Webber Chapter 2.

- Lecture notes (this page) getting moved around a lot to accommodate the

Webber order of topic delivery; if you see a bug please report it. Otherwise,

apologies for the dust.

Why Programming Languages

This course is central to most of computer science.

- Definition of "programming language"

- a human-readable textual or graphic means of specifying the behavior

of a computer.

-

Programming languages have a short history

- ~60 years

-

The purpose of a programming language

- allow a human and a computer to communicate

- Humans are bad at machine language:

- Computers are bad at natural language:

Time flies like an arrow.

- So we use a language human and computer can both handle:

procedure main()

w := open("binary","g", "fg=green", "bg=black")

every i := 1 to 12 do {

GotoRC(w,i,1); writes(w, randbits(80))

}

WriteImage(w, "binary.gif")

Event(w)

end

procedure randbits(n)

if n = 0 then return ""

else return ((?2)-1) || randbits(n-1)

end

Even if humans could do machine language very well, it is still better

to write programs in a programming language.

Auxiliary reasons to use a programming language:

- portability

- so that the program can be moved to new computers easily

- natural (human) language ambiguity

- Computers would either guess, or take us too literally

and do the wrong thing, or be asking us constantly to restate the

instructions more precisely.

At any rate, programming of computers started with machine language, and

programming languages are characterized by how close, or how far, they are

from the computers' hardware capabilities and instructions. Higher level

languages can be more concise, more readable, more portable, less subject to

human error, and easier to debug then lower languages. As computers get

faster and software demands increase, the push for languages to become ever

higher level is slow but inevitable.

Turing vs. Sapir

The first thing you learn in studying the formal mathematics of computational

machines is that all computer languages are equivalent, because they all

express computations that can be mapped down onto a

Turing Machine,

and from

there, into any of the other languages. So who cares what language we use,

right? This is from the point of view of the computer, and it should be

taken with a grain of salt, but I believe it is true that the computer does

not in fact care which language you use to write applications.

On the other hand,

the Sapir-Whorf hypothesis

suggests to us that improving the programming

language notation in use will not cause just a first-order difference in

programming productivity; it causes a second-order difference in allowing

new types of applications to be envisioned and undertaken. This is from

the human side of the human-computer relationship.

From a practical standpoint, we study programming languages in order to

learn more tools that are good for different types of jobs. An expert

programmer knows and uses many different programming languages, and can

learn new languages easily when new programming tasks create the need. The

kinds of solutions offered in some programming languages suggest approaches

to problem solving that are usable in any language, but might not occur to

you if you only know one language.

The Ideal programming language is an executable pseudocode that perfectly

captures the desired program behavior in terms of software designs and

requirements. The two nearly insurmountable problems with this goal are

that (a) attempts to create such a language may be notoriously inefficient,

and (b) no design notation fits all different types of programs.

A Brief History of Programming Languages

There have been a few major conferences on the History of Programming

Languages. By the second one, the consensus was that "the field of

programming languages is dead", because "all the important ideas in

languages have been discovered". Shortly after this report from the

2nd History of Programming Languages (HOPL II) conference, Java swept

the computing world

clean, and major languages have been invented since then. It is conceivable

that the opposite is true, and the field of programming languages is still

in its infancy.

There are way over 1000 major (i.e. publically available and at one

point used for real applications) programming languages. Much less

than half are still "alive" by most standards. Programming languages

mostly have lifespans like pet cats and small dogs. Any language

can expect to be obsoleted by advances in technology within a decade

or at most two, and requires some justification for its continued

existence after that. Nevertheless some dead languages

are still in wide use and might be considered "undead", so long as

people have businesses or governments that are depending on them.

Languages evolved very approximately thus:

machine code, assembler

instruction sets vary enormously in size, complexity, and capabilities.

Difficult for humans.

Basic unit of computation is the machine word, often used as a number.

|

|

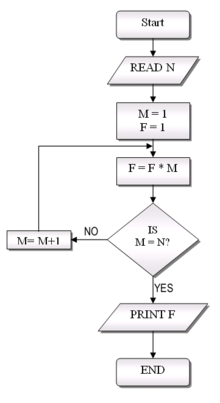

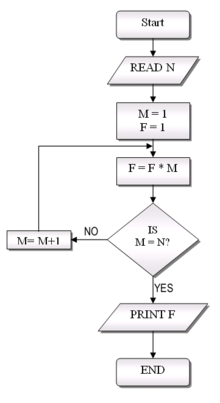

FORTRAN, COBOL

"high-level" languages. imperative paradigm.

Entire human-readable arithmetic expressions can be written on a single line.

Flowcharts widely used to assuage the chaos entailed by

"goto"-based program control flow.

|

|

Lisp, SNOBOL, APL, BASIC

functional paradigm and alternatives. interpretive. user-friendlier. slow.

Entire functions, or other complex computations, can be written in a line or

two in some of these languages. More important are advances such as automatic

recycling of memory, and the ability to modify or construct new code while

the program is running. But for some folks, they may have fatal flaws.

|

|

Algol, C, Pascal, PL/1

"structured" languages solve/eliminate the "goto" control flow problem.

Imperative paradigm; "goto"s considered harmful.

The mainstream of the 1970's. Emphasis on fast execution, and protecting

programmers from themselves and each other. Programs tend to become

unmaintainable as they grow bigger.

|

|

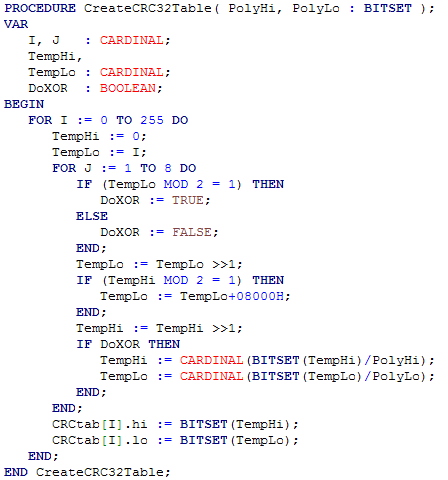

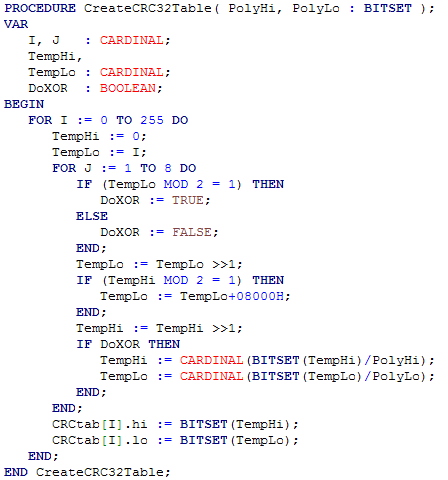

Ada, Modula-2, C++

"modular" systems programming languages. data abstraction.

Improvements in scalability to go along with the fact that you have to

write a zillion lines to do anything.

|

|

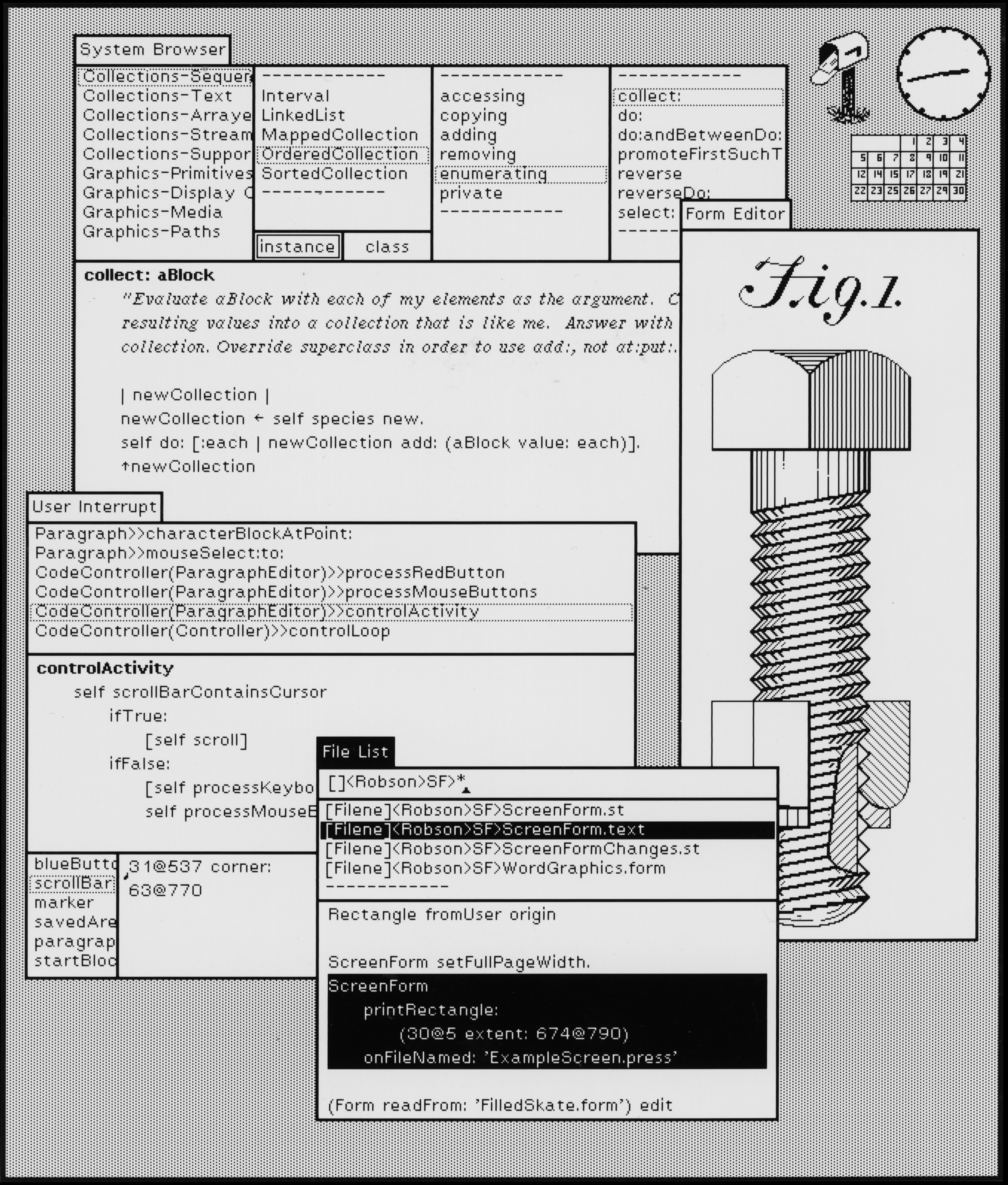

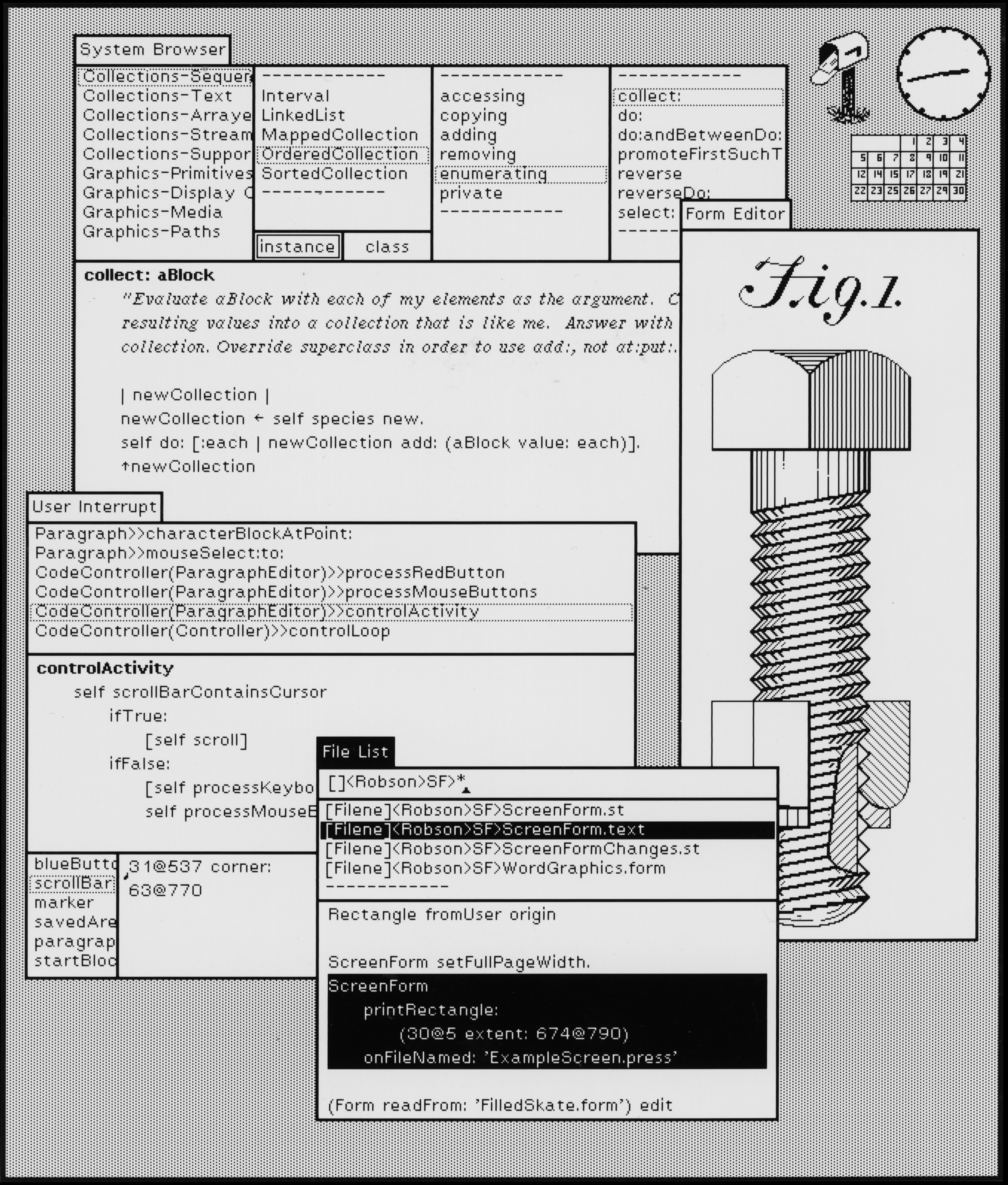

SmallTalk, Prolog; Icon, Perl

"Pure" versions of object-oriented, functional, and declarative paradigms;

rapid-prototyping and scripting languages.

Extreme power, often within specific problem domains.

|

|

Visual Basic, Python, Java, C#, Ruby, PHP, ...

GUI-oriented and web languages. mix-friendly languages.

The learning curve may be more in the programming environment.

|

|

Go, Swift, Rust...

New languages keep on coming. Improvements are perhaps becoming more gradual

over time. How many times must someone build "a better C" language? They are

still doing it.

What languages should be on this list?

What new languages are "hot"?

|

|

Programming Language Buzzwords

-

"low level", "high level", and "very high level"

-

"low" (machine code level) vs. "high" (anything above machine level)

is ubiquitous but inadequate

- machine readable vs. human readable

- certainly humans have difficulty reading binary codes, but

machines find reading human language text vexing as well

- data abstraction vs. control abstraction

- really, I might prefer data vs. code as my counterpoints

- kinds of data abstractions

- basic/atomic/scalar vs. structural/composite

- "first class" value

- an entity in a programming language that can be computed/constructed

at runtime, assigned to a variable, passed in or returned out of a

subroutine.

- kinds of control abstractions

- many variants on selection, looping, subroutines

- syntax and semantics

- meat and potatoes of language comparison and use

- translation models

- compilation, interpretation, source/target/implementation languages

Googling for History

Here are some highlights from the history of programming languages;

google them and see if they give clean answers or raise more questions

(for exam purposes):

- Programming predated the electronic computer. The first programmer

is widely claimed to be Lady Ada Lovelace. What language was

Ada Lovelace writing in?

- Who was Grace Murray Hopper and what is she famous for?

(her appearance on Letterman only gives part of the answer)

- What does APL, a language from the 1950's/60's have to do with

Google's Map/Reduce paradigm, upon which a decent chunk of modern

cloud computing is built?

- How has the rise of graphical user interfaces affected language design?

- What is the most successful visual programming language to date?

Paradigms and Languages

Several paradigms, or "schools of thought", have been promulgated regarding

how best to program computers.

The dominant imperative paradigm has been gradually refined over

time. It basically states that to program a computer, you give it

instructions in terms it understands. a.k.a. "procedural" paradigm: a

program is a set of procedures/functions. You write new "instructions" by

defining procedures. Since the underlying machine works this way, this is

the default paradigm and the one that all other paradigms reduce themselves

to in order to execute.

Functional and object-oriented paradigms are arguably special

cases of imperative programming. In functional programming you give the

computer instructions in clean, mathematical formulas that it understands.

In object-oriented programming, you give the computer instructions by

defining new data types and instructions that operate on those types.

Declarative programming is a polar opposite of

imperative programming, introduced in many different application contexts.

In declarative programming, you specify what computation is required, without

specifying how the computer is to perform that computation. The

logic programming paradigm is arguably a special case of

declarative programming.

Languages are implemented by compilers or interpreters. There are many

implementation techniques that fall somewhere in between.

Pure vs. Impure; Multi-paradigm

Really, when we say a programming language embodies a particular paradigm,

we are usually saying what it "mainly" does. Languages can be characterized

by evaluating how "pure" is their adherence to their dominant paradigm.

Impurity usually means:

falling back on imperative paradigm when expedient or necessary.

Purity is elegant but often comes at the price of idiocy.

| Pure Language Examples

|

|---|

| Language | Example | Commentary

| | SmallTalk |

quadMultiply: i1 and: i2

"This method multiplies the given numbers by each other and the result by 4."

| mul |

mul := i1 * i2.

^mul * 4

| Pure OO. Even ints are objects.

| | classic Lisp |

(defun fibonacci (N)

"Compute the N'th Fibonacci number."

(if (or (zerop N) (= N 1))

1

(+ (fibonacci (- N 1)) (fibonacci (- N 2)))))

| Pure functional.

No I/O, no assignment statements, etc.

| | Prolog |

perfect(N) :-

between(1, inf, N), U is N // 2,

findall(D, (between(1,U,D), N mod D =:= 0), Ds),

sumlist(Ds, N).

| Pure logic. Surprise failures, wild backtracking, nontermination

|

|

Different programming paradigms seem ideal for different application

domains. What is great for business data processing may be terrible for

rocket scientists. A computer scientist should know all the major paradigms

well enough to know which paradigm is best for each new project that they

come across. One option is to become proficient in several diverse languages.

Another option, sometimes, is to use a language that supports

multiple paradigms. These run the risk of being Frankenlanguages.

They are more likely to succeed when designed by a genius, and

when pragmatic, viewing multi-paradigm as an extension of impurity

rather than a theoretical ideal to aspire to.

| Example Multi-Paradigm Languages

|

|---|

| language | example | commentary

|

|---|

| LEDA |

relation grandChild(var X, Y : names);

var Z : names;

begin

begin writeln('test father-father descent'); end;

grandChild(X,Y) :- father(X,Z), father(Z,Y).

begin writeln('test father-mother descent'); end;

grandChild(X,Y) :- father(X,Z), mother(Z,Y).

begin writeln('test mother-father descent'); end;

grandChild(X,Y) :- mother(X,Z), father(Z,Y).

begin writeln('test mother-mother descent'); end;

grandChild(X,Y) :- mother(X,Z), mother(Z,Y).

end;

| Logic paradigm default; imperative when needed

| | Oz |

proc {Insert Key Value TreeIn ?TreeOut}

case TreeIn

of nil then TreeOut = tree(Key Value nil nil)

[] tree(K1 V1 T1 T2) then

if Key == K1 then TreeOut = tree(Key Value T1 T2)

elseif Key < K1 then T in

TreeOut = tree(K1 V1 T T2)

{Insert Key Value T1 T}

else T in

TreeOut = tree(K1 V1 T1 T)

{Insert Key Value T2 T}

end

end

end

| Pattern matching seems inspired by FORMAN, which is under-credited.

| | Icon |

# Generate words

#

procedure words()

while line := read() do {

lineno +:= 1

write(right(lineno, 6), " ", line)

map(line) ? while tab(upto(&letters)) do {

s := tab(many(&letters))

if *s >= 3 then suspend s# skip short words

}

}

end

|

Imperative default, but logic-style programming when the programmer uses certain constructs.

Unicon adds OO (along with a lot of I/O capabilities).

|

|

lecture #3

Today we did:

We got through about slide 8 or so of the chapter 2 slides.

Syntax

At first glance the syntax of a language is its most defining

characteristic. Languages differ in terms of how they form expressions

(prefix, postfix, infix), what kinds of control structures govern the

evaluation of expressions, and how the programmer composes complex

operations from built-ins and simpler operations.

Syntax is described formally using a lexicon and a grammar. A lexicon

describes the categories of words in the language. A grammar describes

how words may be combined to make programs. We use regular expressions

and context free grammars to describe these components in formal

mathematical terms. We will define these notations in the coming weeks.

| Example Regular Expressions | Example Context Free Grammar

|

|---|

ident [a-z][a-z0-9]*

intlit [0-9]+

|

E : ident

E : intlit

E : E + E

E : E - E

|

Many excellent languages have died (or, been severely hampered)

simply because their syntax was poorly designed, or too weird.

Introducing new syntax is becoming less and less popular.

Recent languages such as Java demonstrate that it is possible

to add more power to programming languages without turning their

syntax inside out.

Syntax starts with lexicon, then expression syntax, and grammar.

We are going to study these ideas in some detail in this course; expect

to revisit this topic.

A context free grammar notation is sufficient to completely

describe many programming languages, but most popular languages

are described using a context free grammar plus a small set of

cheat rules where surrounding context or semantic rules affect

the legal syntax of the language.

Lexical syntax defines the individual words of the language.

Often there are a set of "reserved words", a set of operators,

a definition of legal variable names, and a definition of legal

literal values for numeric and string types.

Expression syntax may be infix, prefix, or postfix, and may

include precedence and associativity rules. Some languages

"expression-based", meaning that everything in the language

is an expression. This might or might not mean the language

is simple to parse without needing a grammar.

Context free grammars are a notion introduced by Chomsky and

heavily used in programming languages. It is common to see

a variant of BNF notation used to formally specify a grammar

as part of a language definition. Context free grammars have

terminals, nonterminals, and rewriting rules.

CFG's cannot describe all languages, and some grammars are

inherently ambiguous. Consider

1 - 0 - 1

and

if E1 then if E2 then S1 else S2

Semantics

However much we love to study syntax, it is semantics that really defines

the paradigms. Semantics generally includes type system details and an

evaluation model. We will come back to it again and again this semester.

For now, note that there can be axiomatic semantics, operational

semantics, and denotational semantics.

Runtime Systems

Programming Languages' semantics are partly defined by the compiler or

interpreter, and partly by the runtime system. A runtime system consists

of libraries that implement the language semantics. They range from tiny

to gigantic. The may be linked into generated code, or linked into an

interpreter, or sometimes embedded directly in generated code. They include

things ranging from implementing language built-ins that aren't supported

directly by hardware, to memory managers and garbage collectors, to thread

schedulers, to input/output.

Memory: the Most Important Problem Solved by (the field of)

Programming Languages

You can argue that the biggest thing languages have done for is us

solve the control flow problem, by eliminating goto statements and

all the spaghetti coding that made early programs difficult to debug.

But Dr. J's Conjecture #1 is that memory management is a dominant aspect

of modern computing. If it is not solved by the language, it will dominate

the effort required to develop most programs. Example: memory debugging in

C and C++ may occupy 60%+ of time spent getting a working solution. Many

C/C++ programs ship with memory bugs.

- Early languages laid out all data statically, as global variables

- About the time we started using functions for everything, we

had discovered that most data was short-lived and could be

re-used effectively if we allocated it on a stack (i.e. local variables).

Machine hardware evolved to dedicate 1-2 registers for this.

- About the time we started using objects for everything, we had

discovered that longer-lived data tended to be associated with

application domain concepts, and that such data had highly variable

lifetimes best served by an (automatically managed) heap.

OO systems typically dedicate another register ("self" or "this")

for this.

I/O: the Key to All Power in the (Computing) Universe

Almost all programming languages tend to consider I/O an afterthought.

Dr. J's Conjecture #2: I/O is a dominant aspect of modern computing and of

the effort required to develop most programs.

Evidence: dominance of graphics, networking, and storage in modern hardware

advances; necessity of I/O in communication of results to humans;

proliferation of different computing devices with different I/O capabilities.

Implications: programming language syntax and semantics should promote

extensible I/O abstractions as central to their language definitions.

Ubiquitous I/O harware should be supported by language built-ins.

Expansion on the whole "Compilers" vs. "Interpreters" thing

Remind me of your definitions of "compiler" and "interpreter" in the

domain of programming languages. What's the difference? Are they

mutually exclusive?

Variants on the Compiler

- classic

- source code to machine code

- preprocessor

- source code to...simpler source code (Cfront, Unicon)

- JIT

- compiles at runtime, VM-to-native or otherwise

- special-purpose / misc

- translate source code to hardware, to network messages, ...

Variants on the Interpreter

- classic

- executes human-readable text, possibly a statement or line at a time

- tokenizing

- executes "tokenized" source code (array of array of tokens)

- tree

- executes via tree traversal

- VM

- executes via software interpretation of a virtual machine instruction set

Enscript

enscript(1) is a program that converts ASCII text files

into postscript. It has some basic options for readable formatting.

enscript --color=1 -C -Ejava -1 -o hello.ps hello.java && ps2pdf hello.ps

produces a PDF like this.

Flex and Bison

Our next "language" in this course is really two languages that were designed

to work together.

- Flex and Bison are free GNU implementations of two classic

languages (lex and yacc) designed by the team at AT&T that brought you

C/C++ and UNIX.

- They are examples of the declarative programming

paradigm.

- Declarative languages take a (mathematically precise) specification

of what is to be computed, and compute it, without the programmer

having to specify the sequence of instructions used to compute the

result.

Reading Assignment: Flex

Read Sections 3-6 of the Flex manual,

Lexical Analysis With

Flex. This manual describes a slightly different version than that

installed on our Linux boxes, but you are unlikely to encounter any

differences that matter in a CS 210 homework.

Regular Expressions

The notation we use to precisely capture all the variations that a given

category of token may take are called "regular expressions" (or, less

formally, "patterns". The word "pattern" is really vague and there are

lots of other notations for patterns besides regular expressions).

Regular expressions are a shorthand notation

for sets of strings. In order to even talk about "strings" you have

to first define an alphabet, the set of characters which can

appear.

- Epsilon (ε) is a regular expression denoting the set

containing the empty string

- Any letter in the alphabet is also a regular expression denoting

the set containing a one-letter string consisting of that letter.

- For regular expressions r and s,

r | s

is a regular expression denoting the union of r and s

- For regular expressions r and s,

r s

is a regular expression denoting the set of strings consisting of

a member of r followed by a member of s

- For regular expression r,

r*

is a regular expression denoting the set of strings consisting of

zero or more occurrences of r.

- You can parenthesize a regular expression to specify operator

precedence (otherwise, alternation is like plus, concatenation

is like times, and closure is like exponentiation)

Although these operators are sufficient to describe all regular languages,

in practice everybody uses extensions:

- For regular expression r,

r+

is a regular expression denoting the set of strings consisting of

one or more occurrences of r. Equivalent to rr*

- For regular expression r,

r?

is a regular expression denoting the set of strings consisting of

zero or one occurrence of r. Equivalent to r|ε

- The notation [abc] is short for a|b|c. [a-z] is short for a|b|...|z.

[^abc] is short for: any character other than a, b, or c.

Some Regular Expression Examples

In a previous lecture we saw regular expressions, the preferred notation for

specifying patterns of characters that define token categories. The best

way to get a feel for regular expressions is to see examples. Note that

regular expressions form the basis for pattern matching in many UNIX tools

such as grep, awk, perl, etc.

What is the regular expression for each of the different lexical items that

appear in C programs? How does this compare with another, possibly simpler

programming language such as BASIC?

| lexical category | BASIC | C |

| operators | the characters themselves | For operators that are regular expression operators we need mark them

with double quotes or backslashes to indicate you mean the character,

not the regular expression operator. Note several operators have a

common prefix. The lexical analyzer needs to look ahead to tell

whether an = is an assignment, or is followed by another = for example.

|

| reserved words | the concatenation of characters; case insensitive |

Reserved words are also matched by the regular expression for identifiers,

so a disambiguating rule is needed.

|

| identifiers | no _; $ at ends of some; 2 significant letters!?; case insensitive | [a-zA-Z_][a-zA-Z_0-9]*

|

| numbers | ints and reals, starting with [0-9]+ | 0x[0-9a-fA-F]+ etc.

|

| comments | REM.* | C's comments are tricky regexp's

|

| strings | almost ".*"; no escapes | escaped quotes

|

| what else?

|

lex(1) and flex(1)

These programs generally take a lexical specification given in a .l file

and create a corresponding C language lexical analyzer in a file named

lex.yy.c. The lexical analyzer is then linked with the rest of your compiler.

The C code generated by lex has the following public interface. Note the

use of global variables instead of parameters, and the use of the prefix

yy to distinguish scanner names from your program names. This prefix is

also used in the YACC parser generator.

FILE *yyin; /* set this variable prior to calling yylex() */

int yylex(); /* call this function once for each token */

char yytext[]; /* yylex() writes the token's lexeme to an array */

/* note: with flex, I believe extern declarations must read

extern char *yytext;

*/

int yywrap(); /* called by lex when it hits end-of-file; see below */

The .l file format consists of a mixture of lex syntax and C code fragments.

The percent sign (%) is used to signify lex elements. The whole file is

divided into three sections separated by %%:

header

%%

body

%%

helper functions

lecture #4

Lecture 4 was spent on student questions about HW#1, particularly, how Flex

worked with C code. The following mailbag question was also answered:

Mailbag

Sometimes if you ask a good question by e-mail that the whole class needs

to hear the answer to, I will answer it in class. Sometimes I will give the

same answer you got by e-mail, and sometimes I will add to it after I think

about it some more.

- Do I have to develop on the cs course server or can I use my own

personal development environment on my laptop? If so, what version of Flex

should I be using. The latest version is 2.6.4

- Develop on any machine you want...but the test scripts will be

run and your grade will be based on how your program runs

on cs-210.cs.uidaho.edu. In practice, different versions of Flex

probably work the same for the purposes of this course, but it is

recommended that you allow time to TEST and FIX on cs-210.cs.uidaho.edu

even if you developed on another machine.

lecture #5

Flex Header Section

The header consists of C code fragments enclosed in %{ and %} as well as

macro definitions consisting of a name and a regular expression denoted

by that name. lex macros are invoked explicitly by enclosing the

macro name in curly braces. Following are some example lex macros.

letter [a-zA-Z]

digit [0-9]

ident {letter}({letter}|{digit})*

Flex also has a bunch of options, such as

%option yylineno

Read the Flex Manual and/or the Flex Man Page!!!

Flex Body Section

The body consists of of a sequence of regular expressions for different

token categories and other lexical entities. Each regular expression can

have a C code fragment enclosed in curly braces that executes when that

regular expression is matched. For most of the regular expressions this

code fragment (also called a semantic action consists of returning

an integer that identifies the token category to the rest of the compiler,

particularly for use by the parser to check syntax. Some typical regular

expressions and semantic actions might include:

" " { /* no-op, discard whitespace */ }

{ident} { return IDENTIFIER; }

"*" { return ASTERISK; }

"." { return PERIOD; }

You also need regular expressions for lexical errors such as unterminated

character constants, or illegal characters.

The helper functions in a lex file typically compute lexical attributes,

such as the actual integer or string values denoted by literals. One

helper function you have to write is yywrap(), which is called when lex

hits end of file. If you just want lex to quit, have yywrap() return 1.

If your yywrap() switches yyin to a different file and you want lex to continue

processing, have yywrap() return 0. The lex or flex library (-ll or -lfl)

have default yywrap() function which return a 1, and flex has the directive

%option noyywrap which allows you to skip writing this function.

A Short Comment on Lexing C Reals

C float and double constants have to have at least one digit, either

before or after the required decimal. This is a pain:

([0-9]+"."[0-9]* | [0-9]*"."[0-9]+) ...

You may be happier with something like:

([0-9]*"."[0-9]*) { return (strcmp(yytext,".")) ? REAL : PERIOD; }

or

([0-9]*"."[0-9]*) { return (strlen(yytext)>1) ? REAL : PERIOD; }

You-all know and love C/C++'s ternary e1 ? e2 : e3 operator, don't ya?

It's an if-then-else

expression, very slick. Since flex allows more than one

regular expression to match, and breaks ties by using the regular expression

that appears first in the specification, perhaps the following is best:

"." { return PERIOD; }

([0-9]*"."[0-9]*) { return REAL; }

This is still not complete.

-

After you add in optional "e" scientific exponent notation, what

should it look like?

- If present, it is an E followed by an integer with an optional

minus sign.

- Remember that

there are optional suffixes F and L.

- E, F, and L are case

insensitive (either upper or lower case) in real constants if present.

Cheesey Flex Example

On the fly, we wrote an example that

recognizes some basic English words, and punctuation.

lecture #6 began here

Reading json.org with my grader, I realized

- there is no character type in JSON, only strings

- there are a lot of things that are not legal JSON!

Accordingly, HW#1 has been tweaked. Beware, and refresh your browser.

Doing Homework on Windows

Yesterday in office hours, a student presented me with a view of their

Windows machine.

- it is possible to get Windows-native versions of flex, gcc etc.

that would run very similarly as cs-210.cs.uidaho.edu.

Mingw32 and Mingw64 are Windows-native compilations of UNIX tools.

-

We found a working flex.exe but missed a GCC on our first try.

- I should have brought up Windows Subsystem for Linux.

It is very capable of giving you all you need for CS 210, but you

have to know enough Linux to install packages after you enable it.

- There are also multiple similar 3rd party packages that provide

a linux-like command environment: cygwin and MSYS2 are like that.

- There is also Oracle virtual box, and installing a Linux in a virtual

machine there.

Lex extended regular expressions

Lex further extends the regular expressions with several helpful operators.

Lex's regular expressions include:

- c

- normal characters mean themselves

- \c

- backslash escapes remove the meaning from most operator characters.

Inside character sets and quotes, backslash performs C-style escapes.

- "s"

- Double quotes mean to match the C string given as itself.

This is particularly useful for multi-byte operators and may be

more readable than using backslash multiple times.

- [s]

- This character set operator matches any one character among those in s.

- [^s]

- A negated-set matches any one character not among those in s.

- .

- The dot operator matches any one character except newline: [^\n]

- r*

- match r 0 or more times.

- r+

- match r 1 or more times.

- r?

- match r 0 or 1 time.

- r{m,n}

- match r between m and n times.

- r1r2

- concatenation. match r1 followed by r2

- r1|r2

- alternation. match r1 or r2

- (r)

- simple parentheses specify precedence but do not match anything

- (?o:r), (?-o:r), (?o1-o2:r)

- parentheses followed by a question mark trigger (or if preceded

by a hyphen, suppress) various options

when interpreting the regular expression

| i | case-insensitivity

|

|---|

| s | interpret dot (.) to mean any character including \n

|

|---|

| x | ignore whitespace and (C) comments

|

|---|

| # | a real Flex comment. Looks like (?# ... )

|

|---|

This is some of the most awful and embarrassing language design

I have ever seen in a production tool. Enjoy.

- r1/r2

- lookahead. match r1 when r2 follows, without

consuming r2

- ^r

- match r only when it occurs at the beginning of a line

- r$

- match r only when it occurs at the end of a line

This example comes from the Flex manual page.

What is similar here to your HW assignment? What must be different?

/* scanner for a toy Pascal-like language */

%{

/* need this for the call to atof() below */

#include <math.h>

%}

DIGIT [0-9]

ID [a-z][a-z0-9]*

%%

{DIGIT}+ {

printf("An integer: %s (%d)\n", yytext,

atoi( yytext ) );

}

{DIGIT}+"."{DIGIT}* {

printf( "A float: %s (%g)\n", yytext,

atof( yytext ) );

}

if|then|begin|end|procedure|function {

printf( "A keyword: %s\n", yytext );

}

{ID} printf( "An identifier: %s\n", yytext );

"+"|"-"|"*"|"/" printf( "An operator: %s\n", yytext );

\{[^}\n]*\} /* eat up one-line comments */

[ \t\n]+ /* eat up whitespace */

. printf( "Unrecognized character: %s\n", yytext );

%%

int main(int argc, char **argv )

{

++argv, --argc; /* skip over program name */

if ( argc > 0 )

yyin = fopen( argv[0], "r" );

else

yyin = stdin;

yylex();

return 0;

}

yyin

Consider how yyin is used in the preceding toy compiler example,

if you have not already done so. You may need to do something similar.

Warning: Flex is Idiosyncratic!

Flex is a declarative language. The declarative paradigm is the highest-level

paradigm, so why is it so difficult to debug?

Examples of past student consultations:

- Doctor J, my program is sick:

...

IDENT [a-zA-Z_]+ /* this is an ident */

...

- C comments are allowed some places in Lex/Flex, but I guess not all.

This one causes a cryptic error message where the macro is used.

|

- Doctor J, my program won't do the regular expression I wrote:

...

[ \t\n]+ { /* skip whitespace*/ }

...

^[ ]*[a-zA-Z_]+ { return IDENT; }

...

- If the newline and whitespace are consumed by one big grab,

the newline won't still be sitting around in the input buffer to match

against

^ in this ident rule.

|

Point: a language can be declarative, but if it is cryptic and/or

gives poor error diagnostics, much of the claimed benefits of declarative

paradigm are lost.

Warning: Flex can be Arbitrary and Capricious!

Perhaps because of a desire for brevity, the lex family of tools makes

one the same, fatal and idiotic mistakes as Python and FORTRAN: using

whitespace as a significant part of the syntax! Consider when are %{

and %} needed in

- test1.l

- No errors, but fails to declare num_lines and num_chars unless you

add whitespace to the front or use %{ ... %}

- test2.l

- Gives cryptic flex syntax errors unless you

add whitespace to the front or use %{ ... %}

- test3.l

- The proper way to include C code in a Flex header.

Matching C-style Comments

Will the following work for matching C comments? A student e-mail proposed:

[ \t]*"/*".*"*/"[ \t]*\n

What parts of this are good? Are there any flaws that you can identify?

The use of square-bracket character sets in Flex

A student once sent me an example regular expression for comments that read:

COMMENT [/*][[^*/]*[*]*]]*[*/]

This is actually trying to be much smarter that the previous example. One

problem here is that square brackets are not parentheses, they do not nest,

they do not support concatenation or other regular expression

operators. They mean exactly: "match any one of these characters" or for ^:

"match any one character that is not one of these characters". Note also

that you can't use ^ as a "not" operator outside of square

brackets: you can't write the expression for "stuff that isn't */" by saying

(^ "*/")

Does your assignment this semester need to detect anything similar to

C style comments? If so, you should find or invent a working regular

expression that is better than the "easy, wrong" one.

Many different solutions are available around the Internet and

in books on lex and yacc, but let's see what we can do. On a midterm exam,

I am likely to ask you not for this regular expression, but for a regular

expression that matches some pattern of comparable complexity.

Danger Will Robinson:

/\* ... \*/

legal in classic regular expressions, not so in Flex which uses / as a

lookahead operator! Feel free to try

\/\* ... \*\/

But I prefer double-quoting over all those slashes. A famous non-solution:

"/*".*"*/"

and another, pathologically bad attempt:

"/*"(.|"\n")*"*/"

Flex End-of-file semantics

yylex() returns integers. From the Flex manual, it returns 0 at end of file.

HW#1 NOTE: originally the HW#1 spec said to return -1 on end of file. To do

that, you would write a regular expression like

<<EOF>> { return -1; }

This would be compatible with C language tradition of using -1 to indicate

EOF in functions such as fgetc(). However, I changed the main.c spec to say

it would continue to ask for words/tokens as long as it is getting positive

values returned, and it will not matter whether your yylex() function

returns 0 or -1 to indicate end of file. Still, you should know about this

EOF thing in case I make you do multiple files (and use yywrap()) later on.

Flex "States" (Start Conditions)

Section 10 of the Flex Manual discusses start conditions, which allow you

to specify a set of states and apply different regular expressions in

those different states. State names are declared in the header section

on lines beginning with %s or %x. %s states will also allow generic regular

expressions while in that state. %x states will only fire regular expressions

that are explicitly designated as being for that state.

There is effectively an implicit global variable

that remembers what state you are in. That variable is set using a

macro named BEGIN(); in the C code body in response to seeing some regular

expression that you want to indicate the start of a state.

ALL your regular expressions in the main section may optionally

specify via <sc> what start condition(s) they belong to.

Extended Flex Demo

Let's pretend we are doing HW#4 for a bit. In particular, let's try doing

as much as is needed for this program: wh.icn.

procedure main()

i := 1

while i <= 3 do

write(i)

end

Lexical Structure of Languages

A vast majority of languages can be studied lexically and found

to have the following kinds of token categories:

- reserved words

- literals

- punctuation

- operators

- identifiers

In addition, almost all languages will have separators/whitespace

that occur between tokens, and comments.

As you may have seen from homeworks 1-2, regular expressions can't

always handle real world lexical specifications. FORTRAN, for example,

has lexical challenges such as having no reserved words. Consider the

line

DO 99 I = 1.10

FORTRAN doesn't use spaces as separators.

The keyword DO isn't a keyword, unless you change the period

to a comma, in which case we can't be doing an assignment to a variable

named "DO99I" any more...

How many of you used

"states" (a.k.a. "start conditions")? What online resources for flex

have you found? Googling "lex manual" or "flex manual" gives great

results.

Chomsky Hierarchy

- A "language" in formal language theory is a mathematical entity: a set

of strings. It can be finite or infinite.

- Any particular regular expression will match some set of strings,

i.e. some "language".

- The set of all languages matchable by any regular expression

is an interesting class of languages, called the regular languages.

- The regular languages are incapable of matching balanced marks such

as parentheses: no regular language can do 0n1n

- The coming weeks of class will introduce a more powerful notation,

with a corresponding, broader class of languages, the context free

languages, which are described using context free grammars.

- There is a more powerful class than context free languages, called

context sensitive languages.

- The levels of increasing power among categories of languages are

called the Chomsky hierarchy.

Back to Textbook Ch. 2 slides

we got through about slide 26

lecture #7 began here

Syntax Analysis

Lexical analysis was about what words occur in a given language.

Syntax analysis is about how words combine. In natural language

this would be about "phrases" and "sentences"; in a programming

language it is how to express meaningful computations. If you

could make up any three improvements to C++ syntax, what would

they be? Some syntax is a lot more powerful or more

readable for humans than others, so syntax design actually matters.

And some syntax is a lot harder for the machine to parse.

Some Comments on Language Design

- success or failure of a language due to complicated factors

including its design (what else?)

- human-oriented vs. machine oriented

- purist vs. pragmatic

- general vs. special-purpose

Language Design Criteria

"(programming) language design is compiler construction" - Wirth

- efficiency of execution

- writability (efficiency of construction)

- readability (efficiency of maintenance)

- scalability (really Big programs?)

- extensibility (calling out, or adding new built-ins?)

- portability (where-all will it run?)

- stability/reliability (can you count on it?)

- implementability (if not, who cares?)

- consistency

- simplicity

- expressiveness

Context Free Grammars

A context free grammar G has:

- A set of terminal symbols, T

- A set of nonterminal symbols, N

- A start symbol, s, which is a member of N

- A set of production rules of the form A -> ω,

where A is a nonterminal and w is a string of terminal and

nonterminal symbols.

A context free grammar can be used to generate strings in the

corresponding language as follows:

let X = the start symbol s

while there is some nonterminal Y in X do

apply any one production rule using Y, e.g. Y -> ω

When X consists only of terminal symbols, it is a string of the language

denoted by the grammar. Each iteration of the loop is a

derivation step. If an iteration has several nonterminals

to choose from at some point, the rules of derivation would allow any of these

to be applied. In practice, parsing algorithms tend to always choose the

leftmost nonterminal, or the rightmost nonterminal, resulting in strings

that are leftmost derivations or rightmost derivations.

Context Free Grammar Examples

OK, so how much of the C language grammar can we come up

with in class today? Start with expressions, work on up to statements, and

work there up to entire functions, and programs.

Back to Textbook Ch. 2 slides

We started from slide 27 or so. We finished the slide deck.

lecture#8

Announcements

- HW#1 is due Wednesday! I will probably post a HW#2 between now and then.

- No CS 210 class, this Friday, February 7.

YACC (and Bison)

- YACC ("yet another compiler compiler") is a popular tool which originated at AT&T Bell Labs.

- The folks that gave us C, UNIX, and the transistor.

- YACC takes a context free grammar as input, and generates a

parser as output.

- Writes out C code. Handles a subset of all possible CFG's

- YACC's success spawned a whole family of tools

-

Many independent implementations (AT&T

yacc, Berkeley yacc, GNU Bison) for C and most other popular languages.

YACC files end in .y and take the form

declarations

%%

grammar

%%

subroutines

The declarations section defines the terminal symbols (tokens) and

nonterminal symbols. The most useful declarations are:

- %token a

- declares terminal symbol a; YACC can generate a set of #define's

that map these symbols onto integers, in a y.tab.h file. Note: don't

#include your y.tab.h file from your grammar .y file, YACC generates the

same definitions and declarations directly in the .c file, and including

the .tab.h file will cause duplication errors.

- %start A

- specifies the start symbol for the grammar (defaults to nonterminal

on left side of the first production rule).

The grammar gives the production rules, interspersed with program code

fragments called semantic actions that let the programmer do what's

desired when the grammar productions are reduced. They follow the

syntax

A : body ;

Where body is a sequence of 0 or more terminals, nonterminals, or semantic

actions (code, in curly braces) separated by spaces. As a notational

convenience, multiple production rules may be grouped together using the

vertical bar (|).

A Little Peek Behind Lex and Yacc Magic

Why? Because you should never trust a declarative language

unless you trust its underlying math.

-

Lex and Yacc (i.e. Flex and Bison) generate out C code

implementations of a state machine (a.k.a. automaton)

which remembers/encodes (in an integer "state")

what-all the pattern recognizer has seen at a given point.

- The difference between Lex and Yacc is that Lex's state

machine has no "memory", just the single state ("register").

Yacc's state machine has a "memory" consisting of a stack.

The memory (called the parse stack) is what allows Yacc to

manage

- The act of grabbing the next terminal symbol and placing it on the

parse stack (marking it as "seen" and moving to the next symbol) is

called a "shift".

- The act of replacing symbols on the parse stack that match the

righthand side of a grammar rule, with the nonterminal on its lefthand

side, is called a "reduce".

- See CS 385 for more info on the mathematics of state machines

- See CS 445 for more details on parsing algorithms.

Reading Assignment

Read Bison Manual chapter 1-4, 6, and skim chapter 5.

Ambiguity

In normal English, ambiguity refers to a situation where the meaning is

unclear, but in context free grammars, ambiguity refers to an unfortunate

property of some grammars that there is more than one way to

derive some input, starting from the start symbol. Often it is necessary

or desirable to modify the grammar rules to eliminate the ambiguity.

The simplest possible ambiguous CFG:

S -> x

S -> x

Maybe you wouldn't write that, but it is pretty easy to do it accidentally:

S -> A | B

A -> w | x

B -> x | y

In this grammar, if the input is "x", the grammar says it is legal. But

what is it, an A or a B?

Conflicts in Shift-Reduce Parsing

"Conflicts" occur when an ambiguity in the grammar creates a situation

where the parser does not know which step to perform at a given point

during parsing. There are two kinds of conflicts that occur.

- shift-reduce

- a shift reduce conflict occurs when the grammar indicates that

different successful parses might occur with either a shift or a reduce

at a given point during parsing. The vast majority of situations where

this conflict occurs can be correctly resolved by shifting.

- reduce-reduce

- a reduce reduce conflict occurs when the parser has two or more

handles at the same time on the top of the stack. Whatever choice

the parser makes is just as likely to be wrong as not. In this case

it is usually best to rewrite the grammar to eliminate the conflict,

possibly by factoring.

Example shift reduce conflict:

S->if E then S

S->if E then S else S

Consider the sample input

if E then if E then S1 else S2

In many languages, nested "if" statements produce a situation where

an "else" clause could legally belong to either "if". The usual rule

attaches the else to the nearest (i.e. inner) if statement. This

corresponds to choosing to shift the "else" on as part of the current

(inner) if-statement being parsed, instead of finishing up that "if"

with a reduce, and using the else for the earlier if which was

unfinished and saved previously on the stack.

Example reduce reduce conflict:

(1) S -> id LP plist RP

(2) S -> E GETS E

(3) plist -> plist, p

(4) plist -> p

(5) p -> id

(6) E -> id LP elist RP

(7) E -> id

(8) elist -> elist, E

(9) elist -> E

By the point the stack holds ...id LP id

the parser will not know which rule to use to reduce the id: (5) or (7).

YACC error handling and recovery

- Use special predefined token

error where errors expected

- On an error, the parser pops states until it enters one that has an

action on the error token.

- For example: statement: error ';' ;

- The parser must see 3 good tokens before it decides it has recovered.

- yyerrok tells parser to skip the 3 token recovery rule

- yyclearin throws away the current (error-causing?) token

- yyerror(s) is called when a syntax error occurs (s is the error message)

lecture 9

Announcement

- Reminder: no class Friday February 7, sorry!

- HW#1 is due. HW#2 is posted on the class webpage.

Improving YACC's Error Reporting

yyerror(s) overrides the default error message, which usually just says either

"syntax error" or "parse error", or "stack overflow".

You can easily add information in your own yyerror() function, for example

GCC emits messages that look like:

goof.c:1: parse error before '}' token

using a yyerror function that looks like

void yyerror(char *s)

{

fprintf(stderr, "%s:%d: %s before '%s' token\n",

yyfilename, yylineno, s, yytext);

}

Yacc/Bison syntax error reporting, cont'd

Instead of just saying "syntax error", you can use the error recovery

mechanism to produce better messages. For example:

lbrace : LBRACE | { error_code=MISSING_LBRACE; } error ;

Where LBRACE is an expected token '{'.

This assigns a global variable error_code

to pass parse information to yyerror().

Another related option is to call yyerror()

explicitly with a better message

string, and tell the parser to recover explicitly:

package_declaration: PACKAGE_TK error

{ yyerror("Missing name"); yyerrok; } ;

Using error recovery to perform better error reporting runs against

conventional wisdom that you should use error tokens very sparingly.

What information from the parser determined we had an error in the first

place? Can we use that information to produce a better error message?

Getting Flex and Bison to Talk

The main way that Flex and Bison communicate is by the parser

calling yylex() once for each terminal symbol in

the input sequence. The terminal symbol is indicated by the integer

values returned by function yylex().

An extended example of this functioning can be

built by expanding

the earlier Toy compiler example

Flex file for a subset of Pascal so that it talks to a similar

toy Bison grammar.

This was a nice lecture on Flex and Bison with a hands-on end-to-end

example consisting of a lexer and parser for a subset of English language

dates. The main difference between this and your homework, structurally,

was the placement of main() in dates.y instead of a separate .c file. The

example is incomplete; what refinements are needed?

Getting Lex and Yacc to Talk ... More

In addition, YACC uses a global variable named yylval, of type YYSTYPE,

to collect lexical information from the scanner. Whatever is in this variable

each time yylex() returns to the parser is copied over onto the

top of a parser data structure called the "value stack" when the token

is shifted onto the parse stack.

The YACC Value Stack

- YACC's parse stack contains only states

- YACC maintains a parallel set of values

- $ in semantic actions names elements on the value stack:

- $$ denotes the value associated with the LHS (nonterminal) symbol

- $n denotes the value associated with RHS symbol at position n.

- Value stack typically used to construct a parse tree

- The default value stack is an array of integers

- The value stack can hold arbitrary values in an array of unions

- The union type is declared with %union and is named YYSTYPE

- Typical rule with semantic action: A : b C d { $$ = tree(R,3,$1,$2,$3); }

lecture 10

There was no class on Friday February 7.

lecture 11

Using the Value Stack for More Than Just Integers

You can either declare that struct token may appear in the %union,

and put a mixture of struct node and struct token on the value stack,

or you can allocate a "leaf" tree node, and point it at your struct

token. Or you can use a tree type that allows tokens to include

their lexical information directly in the tree nodes. If you have

more than one %union type possible, be prepared to see type conflicts

and to declare the types of all your nonterminals.

Getting all this straight takes some time; you can plan on it. Your best

bet is to draw pictures of how you want the trees to look, and then make the

code match the pictures. No pictures == "Dr. J will ask to see your

pictures and not be able to help if you can't describe your trees."

Declaring value stack types for terminal and nonterminal symbols

Unless you are going to use the default (integer) value stack, you will

have to declare the types of the elements on the value stack. Actually,

you do this by declaring which

union member is to be used for each terminal and nonterminal in the

grammar.

Example: in a .y file we could add a %union declaration to the header

section with a union member named treenode:

%union {

nodeptr treenode;

}

This will produce a compile error if you haven't declared a nodeptr type

using a typedef, but that is another story. To declare that a nonterminal

uses this union member, write something like:

%type < treenode > function_definition

Terminal symbols use %token to perform the corresponding declaration.

If you had a second %union member (say struct token *tokenptr) you

might write:

%token < tokenptr > SEMICOL

Comments from (Old) Student Office-Hour Visits

- lots of productive learning occurs when doing the homeworks

- troubles with syntax error on first token? Bison's integer tokens

for its terminal symbols must match what your yylex is giving it.

- End of file can cause problems. It is

entirely possible to accidentally be returning an end of file code

multiple times or forever, if flex and bison are not handling EOF

the same.

- In debugging, printing out each token (like in the last homework)

inside yylex() can be handy. Or just define YYDEBUG and turn on yydebug.

- Need help with bugfinding? (A) learn difference between syntax and parse

trees and how to use $$=$1 and (B) view each bug in terms of what parent

treenode to look for it in, and what child node shape(s) exhibit the bug.

Debugging a Bison Program

The power of lex and yacc (flex and bison) is that they are declarative:

you don't have to supply the algorithm by which they work, you can treat

it as if it is magic. Good luck debugging magic. Good luck using gdb to

try and step through the generated parser. If "bison --verbose" generates

enough information for you to debug your problem, great.

If not, your best hope is to go into the .tab.c file that Bison

generates, and

turn on YYDEBUG and then assign yydebug=1. If you do, you will get a runtime

trace of the shifts and the reduces. Between that and a trace of every token

returned by yylex(), you can figure out what is going on, or get help with it.

An Inconvenient Truth about YACC and Bison

Did we mention that the parsing algorithm used by YACC and Bison (LALR)

can only handle a subset of all legal context free grammars?

- Full context

free parsers exist, but use so much time and space such that they

were prohibitive back in the 1970's.

- YACC runs in linear time --- proportional to the

input size (# of tokens), a very desirable property for tools that must handle

large inputs all the time, like compilers.

- YACC's space requirements are worse than linear, but it uses tricks

(such as noticing that many of the rows in its

tables are identical) to keep its parse tables reasonable in size.

Hand-simulating an LR parser

Suppose we simulate the "calc" parser on an example input.

It uses the following algorithm. The details are sort of

beyond the scope of this class; what you

are supposed to get out of this is some intuition.

ip = first symbol of input

repeat {

s = state on top of parse stack

a = *ip

case action[s,a] of {

SHIFT s': { push(a); push(s') }

REDUCE A -> β: {

pop 2*|β| symbols; s' = new state on top

push A

push goto(s', A)

}

ACCEPT: return 0 /* success */

ERROR: { error("syntax error", s, a); halt }

}

}

LR Parsing Cliffhanger.

OK, here comes a sample input data! The grammar is:

E : E '+' T | E '-' T | T ;

T : T '*' G | T '/' G | G ;

G : F '^' G | F ;

F : NUM | '(' E ')' ;

What we are really missing in order to actually simulate a

shift-reduce parse of this are the parse tables and how they

are calculated -- this

is covered thoroughly in a number of compiler writing

textbooks. By the way LR parsing (the magic that YACC does) is

not the only or most human-friendly of parsing methods.

lecture #12 began here

Discussion of parsing "(213*11^5)-8"

- the lexical analysis and the parsing are interleaved.

- the whole array of tokens is not constructed before parsing (usually).

-

yyparse() calls yylex() once each time it

does a shift operation

- lexical analysis is thus gradually performed.

This could mix CPU operations and I/O operations

in an attractive balance, but in practice, the I/O has to be heavily

buffered to get good performance at it. You can at least figure that

you are starting with an array of characters

Now, let's see that parse again. The array of char looks like.

The parse stack is empty, yyparse() calls yylex() to read the first

token

| Parse stack | current token | remaining input

|

| empty | '(' |

|

Shift or reduce ? -- shift. Note that you could reduce, even in this empty

stack case, if the grammar had a production rule where there was some

optional thing at the start.

| Parse stack | current token | remaining input

|

| '(' | NUM213 |

|

Shift or reduce ? -- shift. Can't reduce '('.

| Parse stack | current token | remaining input

|

NUM213

'(' | '*' |

|

Shift or reduce ?? Before we can shift a '*' onto the stack, we

have to have an T. We don't have one, we have to reduce. What can

we reduce? We can reduce NUM to an F.

| Parse stack | current token | remaining input

|

F

'(' | '*' |

|

Shift or reduce ?? We still have to have a T and don't, so reduce again.

| Parse stack | current token | remaining input

|

T

'(' | '*' |

|

Shift or reduce ?? Shift the '*'

| Parse stack | current token | remaining input

|

'*'

T

'(' | NUM11 |

|

Shift or reduce ??

(The lecture went on to finish, on the whiteboard)

lecture #13 began here

Announcement

- No class Monday February 17, it is a UI holiday (President's Day)

YYDEBUG and yydebug demo

Let's use Bison to do the previous example.

Extended Discussion of Parse Trees and Tree Traversals

lecture #14 began here

How is HW#2 Going?

- It will be due soon. It is a challenging assignment.

- I have been getting a lot of requests for an extension.

- Extension merit kinda depends on whether you've procrastinated;

also slightly: whether the grader finishes grading HW#1 soon or not.

- What questions do you have for me today?

Reflections on Recent Office Visits

- Folks need to actually read Flex and Bison manuals and try to learn

those tools.

- If you didn't really understand or complete a correct HW#1, HW#2 starts

with: do HW#1.

- json.org, your specification document,

doesn't tell you where the line

between Flex and Bison is, it just presents both in one seamless form.

- HW#2 line between Flex and Bison is: Flex does the 10 categories defined

in HW#1, plus discarding whitespace. Bison does everything else.

- Bison does: objects, arrays, and the elements within them, such as

comma-separated lists of things.

lecture #15 began here

ML Lecture #1

Announcements

- No office hours today 2/21, sorry. But you might catch me between 10:30

and 12:30 today if you are not in class then.

- If you didn't finish your HW#2, keep working on it, seek help by e-mail

or next week. In the absence of an excused lateness, late fees will be

"modest" (5%/day) until our grader finishes

grading HW#1. After that: 10%/day.

- It is time to set the date of our midterm. How about Friday March 13?

Functional Programming and ML

You must unlearn what you have learned. -- Master Yoda

The language ML ("Meta Language"), is from the functional programming

paradigm.

- Function programming tries to view the entirety of computing in

terms of mathematical functions.

- ML was invented by Robin Milner and

colleagues at the University of Edinburgh in the 1970's.

- ML is

influenced by Lisp, the mother of all functional programming languages,

from the 1950s.

To be honest, I like Lisp and am new to ML. Our textbook

author Dr. Webber is an ML nerd, and that is the least of his...

eccentricities. ML is grossly overrepresented in our book. I expect to

march through it fast, and learn however much we can. Webber would like

us to spend half the course on it. I am thinking more like 1/4.

Functional programming in a nutshell

Reading

Read the Webber textbook chapters 5/7/9/11. Originally the intent was to

cover one chapter per class period, but that seems to be impossible.

We will do however much ML we have time for before spring break, and you

should read as fast as we manage to cover material.

ML Slides from Webber

lecture #19 began here

Discussion of HW #1 and HW #2

- If you rocked them both, kudos to you

- If you didn't rock them, you kinda get to choose your future.

- What I can do, and what I can't do.

| Can Do | Can't Do

|

|---|

- help you every which way to learn Flex, Bison, ML

- accept resubmits for partial credit

- ... ??

|

- write your homeworks for you

- extend the academic calendar

- change the past

|

- you must ask the right questions

- HW#2 grading methodology substantially revised from HW#1

- HW#2 was graded similar to how CS 445 homeworks are graded.

- Misgraded, or other complaints with your grade/feedback? Take it up with me.

- Uncanny resemblance between your code and a classmate's? Take it up with me.

- Haven't really learned flex/regex'es/bison/grammars yet? Learn before the midterm.

- Need to fix your homework? Get help as needed, fix, and resubmit.

A Second Look at ML (slides 8-26)

lecture #20 began here

A Second Look at ML (slides 27+)

Polymorphism (slides 1-17)

lecture #21 began here

Polymorphism (slides 18-)

A Third Look at ML (slides 1-11)

lecture #22 began here

Midterm on Friday this week

The midterm will cover what we have seen up to now: Flex, Bison, and ML.

Wednesday will be a Midterm Review.

Thoughts on ML TextIO.inputLine

A Third Look at ML (slides 12-)

We will not cover, and the exams will not include:

A Fourth Look at ML (43 slides)

We will probably discuss, during the 2nd half of the semester:

Scope (48 slides)

Binding (53 slides)

lecture 23

CoronaVirus Update

- Campus authorities have instructed us to not

physically hold class for rest of the semester.

- Class will be online via Zoom.

- You can attend from home, or from your dorm or house on campus, so

long as you have good internet.

- You should have your microphone muted except when

you are asking a question.

- Our CS 210 Zoom ID is 625-323-868. You may wish to connect to zoom.uidaho.edu and

then join our session.

- I will run class from my office (or home office) our classroom appears

to not have a camera setup.

CDAR Testing?

If you have accommodations, feel free to work with CDAR regarding your

exam scheduling. Several students are eligible for this.

Random numbers in ML

From stackoverflow:

val r = Random.rand(1,1);

- returns a random number generator object. The tuple is used to generate

a random seed; almost any two integers would work.

val nextInt = Random.randRange(1,100);

- returns a function that takes a random number generator and returns

an integer between 1 and 100.

nextInt r;

- fetches a random number in the range nextInt was setup for (1..100)

Random.randReal r;

- fetches a random number between 0.0 and 1.0

There are also other functions; see

the manual.

Midterm Review

- Friday's exam will be on Flex and Bison and ML

- Emphasis will be on Flex and Bison because you have had more time to

learn them. ML may feature more than Flex/Bison on the final.

Programming Languages Big Picture Stuff

You should know what are the major programming paradigms, their main ideas,

and which ones have been covered in our class thus far.

Flex Review Materials

You should know...

- flex's basic syntax, major sections, etc.

- basic regular expressions and regex operators

- flex's extended regular expressions -- at least most of them

- sample.l was done in class to illustrate previous

three bullets

- how flex is called from C code, how it communicates with the caller

Bison Review Materials

You should know...

- bison's basic syntax, major sections, etc.

- basic context free grammar notation

A : b A c ;

- what is a shift and what is a reduce

- how a bison generated parser is called from C code (yyparse()),

how it communicates with the caller,

how it communicates with flex

- basic idea of how to build a parse tree

ML Review Materials

What can you tell me, or what can I tell you, about the following:

- ML language

- syntax and semantics

- ML runtime system

- garbage collection, symbol table

- Using ML

- common ML built-in functions and control structures

- ML execution behavior

- be able to diagram memory

What to study in ML

- Functional programming paradigm

- clean, mathematical thinking about computation

- exploratory and experimental programming

- How is ML different than C++?

- Practice with recursion

lecture 24

Welcome to Virtual CS 210

HW#3 Extension

Per student request Homework #3 is now due Wednesday, 11:59pm.

Midterm Exam Results

grade distribution:

157 157

140 143 145 147 149

---------------------- A

132 137

124 129

---------------------- B

111 113 116 117 117

102 103 105

---------------------- C

96

81

---------------------- D

65

34

Midterm Examination Solutions

As an experiment, the midterm exam solutions presentation has been

recorded in 8 separate videos available at this link.

These videos comprise 40+ minutes of the lecture for March 23, which will

consist of reading questions from e-mail, and taking them live at 9:30 on

3/23.

Mailbag

- How do I print multiple lines at one time in ML?

-

That depends on what you mean by multiple lines I guess. To print out

multiple lines at one time, you may want to concatenate those lines into one

big string

s, putting "\n" characters in between

each line. Then call TextIO.output(s) on it. Alternatively,

you could use a loop or recursion to output several lines with several calls

to TextIO.output.

- How can I clear the screen?

-

Clearing the screen might be tricky. ML is not exactly designed to be doing

advanced terminal stuff, and advanced terminal stuff tends to be not

portable -- what works on Linux might be different than what works on Windows

or MacOS for example. My first thought was to call

TextIO.output("\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n");

with enough newline characters to clear the screen. On cs-210.cs.uidaho.edu

you could probably also use OS.Process.system("clear"). More

advanced interactive programs might want to be able to move the cursor to a

particular row and column, or go into "raw" input mode to read characters

one key at a time, but that is beyond the scope of this class.

- I wrote an

exit() function, but it gives a warning message.

How do I get rid of that?

- fun exit () = OS.Process.exit OS.Process.success;

...

- exit();

stdIn:2.1-2.7 Warning: type vars not generalized because of

value restriction are instantiated to dummy types (X1,X2,...)

- The warning is harmless and has to do with the SML type inferencer not

knowing what to do with the return type of function

exit().

Perhaps the

simplest way to shut it up is to return something else. The following

example discards the return value of exit and just returns false.

- fun exit () = (OS.Process.exit OS.Process.success; false);

...

- exit();

- My ML global variables aren't working, what do I do?

- They work alright, but they are immutable. In pure functional

programming, you don't modify existing values, you construct new ones.

ML feels strong enough about this that variables are generally immutable.

Dr. Webber feels strongly enough about it that at the end of

the slides for his 4th Look at ML chapter, he mentions that he explicitly

omitted a discussion of "reference types" which are ML's way of having

mutable values.

Brief Primer on ML Reference Types

Because although you should do everything with recursion and immutable

variables, you eventually must do whatever it takes to get your program

to meet its requirements. Some ideas here came from

Cornell

- A value of type "int ref" is a pointer to an int.

- The

! reads/dereferences the value pointed to.

- The

:= operator writes/modifies what a ref points to.

Examples:

- val Health : int ref = ref 30;

val Health = ref 30 : int ref

- Health;

val it = ref 30 : int ref

- !Health;

val it = 30 : int

- Health := (!Health) - 1;

val it = () : unit

- !Health;

val it = 29 : int

lecture 25

Practice Raising your Hand

If you text chat me enough, and are patient, I will probably respond to that,

but if you click on the Attendees (Participants) button, that window also has