COPYRIGHT NOTICE. COPYRIGHT 2007-2015 by Clinton Jeffery.

For use only by the University of Idaho CS 383 class.

Lecture Notes for CS 383 Software Engineering

Reading

Please obtain your copy of the text book (Sommerville, 10e) as soon

as possible.

Introduction to Software Engineering

- What does "software engineering" really mean?

- Many expert computer scientists argue that this term is a misnomer.

This course is really about: tools and methods that

are useful for larger-scale software development projects.

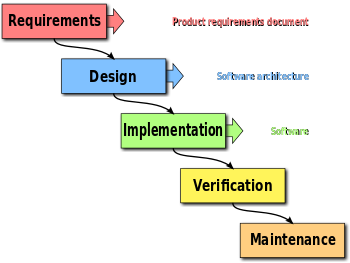

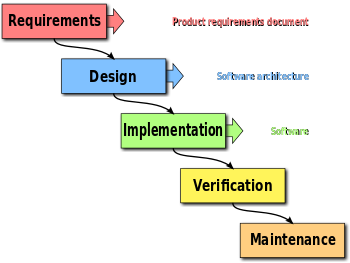

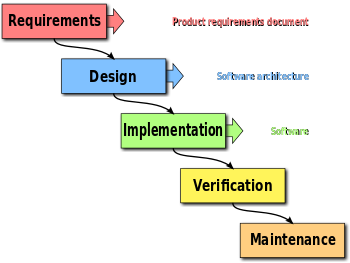

Software Lifecycles

- waterfall model

- The original proposed lifecycle for engineered software.

Sequential. Requirements analysis,

design, implementation, testing, and maintenance phases.

(Wikipedia)

(Wikipedia)

- spiral model

- An iterative waterfall model, proposed by Boehm. The waterfall is

repeated in sector 3. The graphic suggestion 75% of the spiral is now

in non-waterfall activities is probably misleading.

(Wikipedia)

(Wikipedia)

- agile model

- A priority-driven iterative model. Waterfall phases are freely mixed

into a work schedule organized aroound a sequence of "sprints" and daily

"scrums".

(LinkedIn/Hamzeh AbuZaid)

(LinkedIn/Hamzeh AbuZaid)

Expectations Management

A brief discussion on expectations management: this class' primary goal is

to teach and gain experience with software engineering concepts, tools, and

methods. The goal is not a finished working product at the end of the

semester, it is more like: a properly engineered (i.e. documented) working

prototype.

Course Perspective

I believe that you learn by doing, and that you

learn more and better by doing a larger project than you could do by

yourself or in a small group.

Ideally this course would cover the entire software lifecycle, and you

would learn hard truths about coming into a project during the middle

of development. You would have to read and understand others' documents,

ask questions until it makes sense, and make an important contribution

in a short span. In practice, it is hard to achieve this in a university

setting. Don't expect this knowledge to come without a price, whether

you learn it here or on the job someday.

Recent (Dr. J Dynasty) History of this Course

In the past few years, CS 383 has undertaken with varying degrees of

success the following projects.

- TBDCRPG

- A tile-based dungeon-crawling role playing game.

- L33t

- An educational software framework which was to teach pre-college students

basic principles of computer and information science.

- Wellspring

- A collaborative software engineering diagram editor.

- Arkham Horror

- A complex, cooperative board game.

- The Table

- A (hardware+software) platform for computer-assisted board games.

- Gus

- A Management System for Campus Groups & Clubs.

- Freedom in the Galaxy

- An adaptation of a Star Wars-like science fiction simulation game.

- Swords & Sorcery

- An adaptation of a fantasy simulation game parodying D&D and others

Discussion of Project Topics

CS 383 has contradictory requirements

- Project needs to be the "right size and shape".

- Bigger than you can do on your own. Small enough we can complete it,

at least somewhat.

- Project should be Interesting and Fun

- By definition, that means it uses networking and graphics a lot

- Project can require no prior expertise on networking and graphics

- Those classes are not prerequisites. Consider this a warmup.

- Accreditation Requirements

- We must spend most class time on lecture material, not just the project

Constraints on the Project

Size and shape constraints will exclude many

fine potential software projects you might wish you could do. The constraints include:

- Application should have many distinct user tasks, such that each

student on team can design several of them (55, or maybe 110 things

you can do with the software).

- Application domain already familiar to students or easily learnable by

the whole class.

- Requisite API's (database, network, graphics, etc) already familiar or

easily learnable by the whole class.

- Can develop for target platform on CS lab machines (additional student

machine platform support strongly desirable)

What I Learned from (Previous Iterations of) CS 383

- Python is poorly suited to software engineering

- A brilliant team with expert Python programmers can

experience a lack of optimal success of they don't design

or communicate well.

- Need to start using agile methods from the beginning

- We will do homeworks this semester in terms of lists of assigned tasks

Weekly Activity Reports.

Starting with this (next) week.

- Instructor needs to be (semi) dictatorial

- We need both instructor- and student-leadership.

Leaving it all up to you is unwise. Students need to

recognize the burden that comes with leadership.

- "Smart" does not imply (good leader | productive student | team player)

- There are different kinds of "smart"

- "Bossy" does not imply (good leader)

- Many teams let whoever is loudest win. This is not always good.

- Cliques are hard to avoid, and damaging

- Hidden costs associated with sticking close with your buddies

- Language familiarity seems to be important

- Especially when time is of the utmost

- Design is more difficult than coding

- Counterintuitive. Also: bad design precludes good code.

- Early rounds of coding needed to feed design process

- "rapid prototyping" is usually where C++ and java stink it up.

- We need competition

- Although I would prefer a single class-wide effort, it doesn't

always work optimally.

- We need large teams and shared assets

- Small teams do not let you learn some course topics.

- Communicating and committing are more difficult than technical issues

- Counterintuitive.

- Integration is more difficult than designing and coding your own stuff

- Therefore it takes time.

- All modern OO languages have ugly "warts".

- There is a "semantic

gap" between clean/abstract OO as seen in designs, and ugly OO

provided by programming languages.

- Don't run two independent projects

- We cannot split the class time like that.

- Don't elect a new rotating boss man

- Nobody will know where the buck stops. Dr. J suggests instead

that your team adopt the rules of pirate captaincy. (Elect a

boss, then live with that person's management style. Respect

authority. Mutiny only when your project success or grade is at stake.)

- Enact some system to force teammates to meet weekly goals.

- Can't be all carrots, must have some sticks.

- Sundays are a poor day for team meetings

- They work fine for some, fine for awhile, hard to keep attendance up.

- Need 1+ group technical sessions each week, not just group/mgt. meetings.

- Same-time tech sessions, maybe same place not always necessary.

- Group meetings need to start on time and stay on task

- Many of your teammates do not have time to goof around

Lecture 3

Discussion and Vote on Project Candidates

Notes:

- Unicon Portable Help System removed due to it being in Unicon.

Our preferred languages for this class are Java or C++.

Languages

There is the language we will use for our software design (UML),

the language we will use for our documentation (LaTeX), and the

language we will implement with (probably Java). There are some

considerations and trade-offs involved in our selection of languages.

- UML is an industry standard and CS major should be familiar with it

- LaTeX is ASCII-human readable and thus amenable to use with

revision control systems such as SVN or Git.

- Java is valuable on resumes, similar but easier than C++, and has

a good track record. C++'s main advantage would be: staying fresh

for CS 445 prep

Lecture 4

Basic Concepts for the HW

- use cases and their descriptions

- the first step in requirements is to know what tasks the user

will perform using the software

- agile methods #1: sprint

- as defined here, a sprint consists of a interval of time,

usually 1-2 weeks, with a concrete set of goals to which

each team member commits. In between each sprint is a sprint

planning phase, including individual progress reports and

a planning meeting.

Use Cases and Class Extraction

You can identify classes from a software specification document by looking

for "interesting" nouns, where interesting implies there are some pieces

of information to represent in your application, and operations to perform

on them. You can also identify classes by developing use cases from the

specification document.

Lethbridge defines a use case as:

A use case is a typical sequence of actions that an actor performs in

order to complete a given task.

I would say: use cases are formatted descriptions of "discrete"

tasks. By "discrete", we mean an individual standalone thing a user does

while using the system.

If you look through the tasks mentioned in a specification document, you

can identify a set of candidates.

Example candidate tasks for a "wargame":

- Combat

- Roll dice

- Move pieces

- Perform the Missions Phase

Example candidate tasks for the Parker Brothers game called Monopoly:

- Buy property

- Roll dice

- Move piece

- Count money

Example candidate tasks for an online collaborative IDE:

Entire books have been written about use cases.

Use cases are also described

in Chapter 11 of the

Unicon book; some of today's examples may be found there.

Use Cases: Terminology

- actor

- role that an external entity plays in a system

- use case (or just "case")

- depiction of some aspect of system functionality that is visible

to one or more actors.

- extension

- a use case that illustrates a different or deeper perspective on another use case

- use

- a use cases that re-uses another use case.

Now we will expand on the discussion of use cases, use case diagrams, and

look at examples.

Use Case Descriptions

Drawing an oval and putting the name of a task in it is not very helpful

by itself, for each use case you need to add a detailed use case

description. Your first homework assignment is to "go and do this" for

your semester project.

Section 7.3 of the text explains the format of use case descriptions. Each

use case has many or all of the following pieces of information. The items in

bold would be found in any reasonable use case description.

- Name

- The name of the use case.

- Actors

- What participants are involved in this task.

- Goals

- What those people are trying to accomplish.

- Preconditions

- The initial state or event that triggers this task.

- Summary

- Short paragraph stating what this task is all about.

- Related use cases

- What use cases does this use case use or extend? What uses/extends this use case?

- Steps

- The most common sequence of actions that are performed for this task.

Lethbridge divides actions into two columns: user input

is given in the left column, while system response is

given in the right column. The two column format is

optional, but saves on paper and may improve clarity.

The steps are numbered, so there is no ambiguity in using

both columns on each line.

- Alternatives

- Some use cases may vary the normal sequence of steps.

- Postconditions

- what does this task produce?

Use case descriptions, examples

A simple generic use case for a "file open" operation might look like:

Open File

Summary: A user performs this task in order to view a document.

The user specifies a filename and the document is opened in a new window.

Steps:

- Choose "Open" from the menu bar.

- System displays a File Open dialog.

- User selects a filename and clicks "OK".

- System closes the dialog and opens the file in a new window.

Alternative: If the user clicks Cancel in step 3, no file is opened.

|

Lethbridge-style two column format is nicely motivated in the following

example, which has enough steps to where two columns saves enough

space to matter. When you start having trouble fitting the whole use case

description on a page, there are substantial benefits to a compact format.

Exit parking lot, paying cash

Actor: car driver

Goal: to leave the parking lot

Precondition: driver previously entered the parking lot, picked up a ticket,

and has stayed in the lot long enough that they must pay to leave.

Summary: driver brings their vehicle to an exit lane, inserts their ticket

into a machine, and pays the amount shown on the machine.

Related use case: exit parking lot, paying via credit card.

Steps:

| 1. Drive to exit lane, triggering a sensor.

| 2. System prompts driver to insert their ticket.

|

| 3. Insert ticket.

| 4. System displays amount due.

|

| 5. Insert money into slot until cash in exceeds amount due.

| 6. System returns change (if any) and raises exit barrier

|

| 7. Drive through exit, triggering a sensor.

| 8. Lower exit barrier

|

Alternative: User crashes through exit barrier with rambars on front of truck

in step 1. (just kidding)

|

The following example (by Lethbridge et al) gives you one more look at use

case descriptions. This one is for a library management application.

Check out item for a borrower

Actor: Checkout clerk (regularly), chief librarian (occasionally)

Goal: Help the borrower borrow the item, and record the loan

Precondition: The borrower wants to borrow a book, and must have a library

card and not owe any fines. The item must be allowed for checkout (not on

reserve, not from reference section, not a new periodical, etc.)

Steps:

| 1. Scan item's bar code and borrower's library card.

| 2. Display confirmation that the loan is allowed, give due date.

|

| 3. Stamp item with the due date.

|

| 4. Click "OK" to check out item to borrower.

| 5. Record the loan and display confirmation that record has been made.

|

Alternative: the loan may be denied for any number of interesting reasons

in step 2 (see preconditions).

|

Lecture 5

(most of class spent discussing HW#1)

(How to Estimate) What's Feasible?

After we have a set of use cases (with descriptions) for our project, we

can use it to form a "version 1.0" estimate of our system's function

points. We can, from that, estimate time and cost of developing the

system.

Perhaps this might be the 2nd type of thing you measure about a forthcoming

or under-construction software project (after "# of use cases").

- # user-input activities

- # user-output views

- # user "queries" (for database apps)

- # of data files

- # of external interfaces

Weight each of these; perhaps just designate as "simple", "average", or "complex".

Sum of weights = "function points" of program.

We wil have to come back to this, because we have more urgent content to

work on.

Agile Methods and Scrum

- Intro to scrum and sprints.

- Note: how many do not have the text yet?

- If you do not have the text, it is not an emergency (yet).

- Please obtain the text as soon as you can, follow the

class presentation, and ask your teammates questions as needed.

Lecture 6

Use Case Description Tip

Maybe I need to move this comment earlier, to deliver in time

for use on HW#1. In the meantime, consider it a thing to check and

fix if need be, based on past CS 383 experience.

- Something can't be both a precondition, and a step

- If it was a precondition, it was already true before the use case.

What else did you find confusing or tricky about use case descriptions?

Some Project Considerations

- Should we stick with 4 teams? Do more? Fewer?

- Rationale for more: common "wisdom" that too large a team will allow

folks to freeload.

Rationale for 4 teams: scrum book recommendation as to maximum

scrum team size.

Rationale for fewer (like 2 or 3): larger team experience gives unique

insight into communication and coordination challenges in software

engineering.

- Shared functional requirements, not team-separate.

- Rationale:

common requirements means time spent working on them in class will be of

equal interest to all parties.

- Team composition

- Instead of randomizing, should I in future distribute

the GPA's equally among the three teams. Do you have a better suggestion?

A buncha famous software engineering snake-oil salesgurus all signed the

following inarguable statement:

We are uncovering better ways of developing

software by doing it and helping others do it.

Through this work we have come to value:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

That is, while there is value in the items on

the right, we value the items on the left more.

Agile Methods Tips

Gamedev.net once posted (associated apparently with gdc2010) an interesting

article on agile methods, which has since disappeared into the ether. What

we have left are the following observations about doing agile methods well.

See if any will help in your sprints this semester.

- Instead of "completion of developer tasks", focus on

delivery of features.

- Agile teams spend more time on planning than

traditional teams. Expect and budget time for that.

- Allowing too much uncertainty into a project can reduce velocity.

This is our key challenge right now

- Developers are responsible for what they achieve each sprint.

- Do things that add value.

- Get things done in a constrained time-box.

- Know your capabilities...and your limitations.

- Communications is essential! Keep 'em frequent, keep 'em short.

Since different teammates have different styles,

parties need to agree on a medium and frequency of communication.

- Have a central shared place (e.g. wiki) for project information

Scrum

The term scrum derives from a part of the game of rugby in which teams

lock arms and put heads together. As an agile method it refers to a way

of conducting planning meetings. There are typically two kinds of meetings:

- "daily scrum"

- a short meeting, held frequently, amongst the team

- "sprint"

- a longer meeting, held every 1-2 weeks, to deliver features and set

goals for the next sprint

Our implementation:

- daily=15 minutes every other day, MWF

- sprint=25 minutes, every 2 weeks

Scrum Roles

These roles are primarily evident during the planning meetings for each

two week sprint.

Adapted from Scrum Alliance.

- product owner

- for our class purposes, this is me. decide what work will be done each

sprint. maintain the backlog.

- development team member

- deliver increments of functionality each sprint. "they have the

responsibility to self-organize to accomplish each sprint goal".

product owner says what is to be done, devteam members forecast what

can be done in one sprint, and decide how/who are going to do what.

- scrum master

- "servant leader" who helps the team follow the process. Scrum masters:

- "evolve the definition of Done".

- help find and implement whatever technology and methods are needed

to get to Done each sprint.

- remove impediments

- facilitate meetings, help team members

Creating an Initial Backlog

Adapted from

Fear No Project

- "Product Backlog" is almost: list of (not-finished-yet) Functional Requirements

- Acknowledges Changing Requirements throughout project

- SSRS Functional Requirements Section === backlog ++ completed items.

Hopefully, backlog shrinks as completed items list grows long

- The first backlog is "vision, analysis, and marketing promises"

- Oh by the way, everything needs to be prioritized.

- Responsibility of the product owner (darn!). But as an educational

exercise, you should expect to build and maintain this document (huzzah!).

Scrum "Daily" Meetings

We start these next week.

- 15 minutes

- Each person on the team reports:

- What I did yesterday

- What I will do today

- What impedes me?

- "yesterday" is really: since the last meeting, i.e. 2-3 hours of work

Sprint Meetings

For the purposes of this class, a sprint meeting consists of three parts.

Per the feedback from last time, it is recommended that this semester we

try spreading the sprint meeting over two classes every two weeks.

Sprint Day 1

- Review (N*5 minutes)

- Except on the first one, review the outcome of the past

sprint. <= 5 minutes per team, in front of class.

Show/Demo finished backlog items (deliverables).

Graded on technical content and communication/relevance/value.

- Reflection (2 minutes)

- Answer two questions: What went well? What could be improved?

Process improvement is all extra credit.

Sprint Day 2

- Planning (25 minutes)

- 23 minutes in your team, updating requirements and backlog. 2 minutes

per team to report and/or negotiate with your instructor on items

committed to complete for the next sprint.

The instructor will often provide one or more Required elements for the

following week's sprints, to go along with whatever team-specific goals

you formulate.

Lessons from past class' mis-application of scrums and sprints

- Its bad to not hear reports from all members in a scrum.

- Scrum should not be just an informal chance for the loud people to talk.

- sprint planning needs more time!

- Possible solutions: switch to 75 minute class periods (bad, fewer scrums)

or spread sprints across two classes instead of trying to get them done

in one 50 minute session.

- scribe/recorder should place decisions/work assignments/attendance records

in a public place the whole team can see.

- fact of life: some times folks have to miss

lecture#7 began here

Comments on HW#1

- Overall, good work

- I (almost always) want PDF. Don't resubmit if your .tex is adequately

included in group's PDF. Do resubmit if your PDF was a depiction of

your .tex source code instead of the output pdflatex for that document.

- Grades on HW#1 will be somewhat gentle. Future homeworks will be harsher.

- Latex tips: ``...'' not "..."

- .docx is OK for individual work, but I do actually want you to learn

some LaTeX, it is more git-friendly.

- .zip is OK for .tex+image files, but leave PDF as a separate submission

Yeah, due in a week.

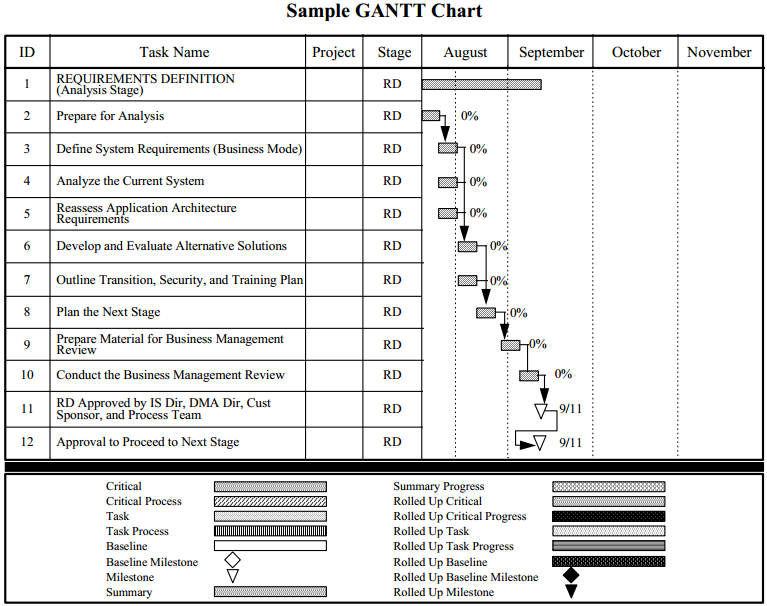

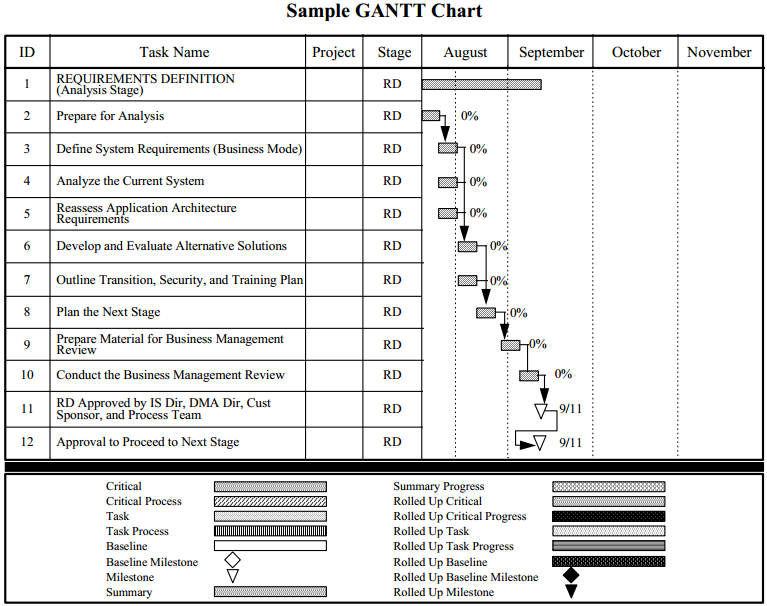

Comments on Project Planning Tools

- Many softwares to run projects

- Some softwares may have specific support for Agile

- One student: "Can we Jira? Because...SEL", or maybe Phabricator

- Spring Backlogs might be managed with list-oriented tools like

Trello, or Emacs "Org Mode"

- Then there are Microsoft Project and its clones, e.g. OpenProj, ProjectLibre, Gantter

- It is not my intention to mandate which tool(s) your team uses,

but I recommend that teams evaluate and select one.

- Lots of other tools and notations in this class are higher priority.

- Will matter more when we have permanent teams.

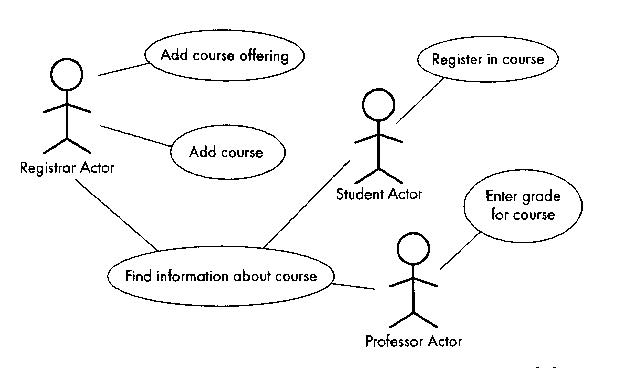

Use Case Diagrams

One reason to do a use case diagram is to summarize or catalog

what tasks are part of the system; a sort of table of contents for the

beautiful set of use case descriptions that you should write.

But the main reason

use case diagrams exist is in order to show who does what, when different

users (actors) participate in different (overlapping) tasks. If you only

have one actor, or there are no tasks in which multiple actors interact,

there may be no reason that you have to do a use case dialog.

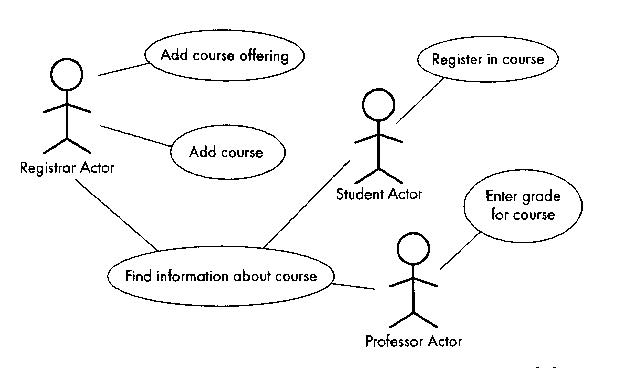

Consider the following figure from a book by Lethbridge.

There are three actors (Registrar, Student, Professor), and there are

five use cases. The "Find information about course" use case is vague

and probably the three actor types can find out different information

from each other. They are not typically involved in the same

instance of finding out information about a class, so the example could

be better.

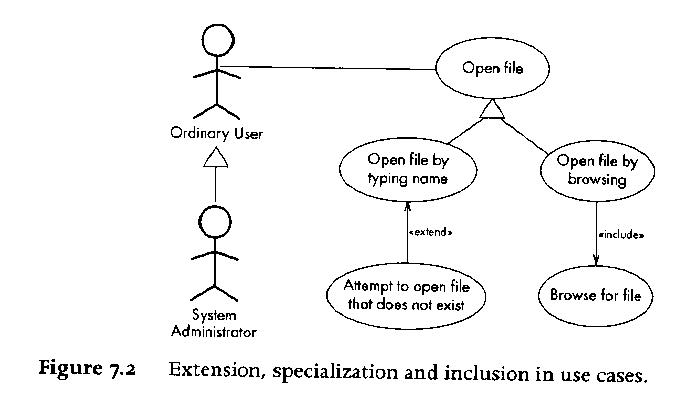

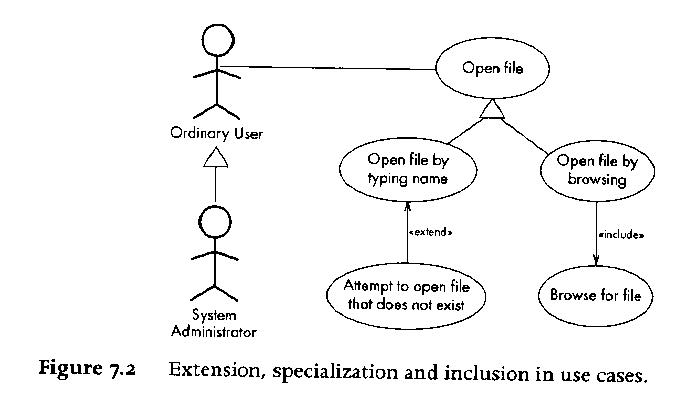

The next figure illustrates a bunch of more exotic use case diagram items,

namely actors and use cases that use or extend other actors and use cases.

Given that UML is a diagramming notation, its ironic that the main thing

about use cases are the use case descriptions.

It is easy to omit one

interesting category of actor in use case diagrams, namely: external system

actors. A computer program may interact with external entities that are not

humans; they may be remote database servers, for example.

Figures 11-1 and 11-2 of the

Unicon book give some more examples of use

cases.

lecture#8 began here

Reading Assignment

Sommerville Chapters 1-4. You are particularly responsible for:

- SE Code of Ethics and Professional Practice

- Waterfall and Spiral models, and Process Activities

- Pretty much all of Chapter 3.

Project Steering and HW#1 Feedback

- name: sQuire

- Less "gamey". Dropping explicit MUD and roguelike features from requirements

- More: evaluation-based. Peer review. Up/down votes on projects/people.

- user roles: admin, project owner, user

- minecraft-like server/peer structure

- "up" technologies: Docker, containers...if they actually solve a

problem for us

- "down" technologies: javaScript and its ecosystem

- Several kinds of rooms:

- world=everyone connected to sQuire, everywhere

- project=top level "conference room"

- directory within project=project membership, file manipulations, build rules

- project file=collaborative editing room

- Subscription-based subject rooms

- Users auto-enter chats from all subscribed/relevant rooms

Use Case Description Tips

- If-statements don't happen in use case steps.

- The more common (then-part or else-part) belongs as the main sequence.

- The other identifies an Alternative.

- If too many or alternatives are

non-trivial: split into multiple use cases

- While-loops don't happen in use case steps.

- Not a rule cast in reinforced concrete, but:

- Use cases are not pseudocode, they are user-eye view of system function

- Time is linear, for humans.

Use Case Diagram Tips

- Actors interact with your app

- The only way a part of the software or an object being modeled by the

system is an Actor, is if it has some agency, i.e. a mind

of its own. AND it lives in a separate process, or minimally, a separate

thread.

Lecture 9.

Requirements Elicitation Techniques

Purpose: produce a requirements specification (i.e. software specification)

document.

-

Identify functional and non-functional requirements (F+URPS) (Completeness,

Consistency, Correctness).

- Focus on the

users' view of the system, NOT the internals.

- Identify actors, scenarios,

use cases. Refine and relate use cases.

Scenarios

- Before there were use cases, there were scenarios.

- A scenario is a narrative description of what people do and

experience as they try to make use of computer systems and applications

[Carroll].

- The word is overloaded. Scenarios may describe:

- a current way things are done

- a proposed way that a future software system should do them

- a method of evaluating a system

- a method of training a user on a system.

Tying Scenarios to Use Cases

A set of scenarios may include many different instances in which the

user is really performing the same task; these get merged into a use case.

Use cases typically contain a primary sequence of steps performed in

common by any scenario in which the user is doing that task, plus a

number of exceptions or alternatives.

Use Case Writing Guide (adapted from Bruegge)

- describe one complete user transaction

- use cases are named with verb phrases that indicate what the user is doing

- actors are named with noun phrases that indicate their role

- boundary between actors and system (i.e. who does what) should be clear

- use case steps are phrased in the active voice

- 7 +- 2 is usually the maximum number of steps

- causal relation between successive steps should be clear

- exceptions are described separately

- do not describe the user interface; UI design is separate

- do not exceed 2-3 pages. Probably not even 1 page.

Scrum

- last 15 minutes of class today you get to do a scrum standup meeting

- do you remember what that consists of?

- have you elected a scrum master and a product owner?

(you will have to have one starting next week)

- elect a scrum reporter, who sends me short 1-2 paragraph scrummary

of each scrum meeting. I want: absentee list, decisions made,

any unresolved obstacles, short progress summary. Today's scrummary

should include: who is your github boss, scrum master, product owner,

and scrum reporter.

The Core Problem of Software Engineering: Complexity

Over time, this means: change. What complexity? This refers not to the

asymptotic time and space complexity of a code, although algorithmic

complexity is a crucial part of it. But software's complexity, to the

humans trying to build or maintain it, goes beyond the algorithms. There

are dimensions to it: static vs. dynamic, and control vs. data, and

more.

What ones do you know of?

- Denver airport baggage handler

- Patriot missile defense system

- Ariane 5 rocket

- Therac-25 radiation treatment machine

- Mars probe

- Power grid rolling blackouts

- The FBI's Sentinel caseload management system

- Healthcare.gov

- FAA flight controller system

- DOD payroll system.

- Toyota, and more recently Dodge, with multi-billion dollar vehicle recalls

due to software bugs that cause crashes/deaths.

What do we do about complexity? Anticipate it. Minimize it. Mitigate it.

Manage it. More on this topic later; for now, you are supposed to be aware

that it is your chief opponent --- your adversary.

Why is Software Engineering Crucial?

Because the larger a program gets, and the more features you add, the

more bugs you get. Why? Because things get too complex for us to handle.

Until we can solve this unsolvable puzzle, Moore's Law is limited or

revoked by our inability to utilize hardware, just as we are

unable to utilize our own brain (wetware).

Belady-Lehman observed:

[D. Berry, The Inevitable Pain of Software Development,

Monterey Workshop 2002]

So, Software Engineering is All About Pain

Software Engineering, it turns out, is mainly about pain.

Dan Berry, one of software engineering's luminary founding fathers,

had this to say about software engineering methods:

Each method, if followed religiously, works. Each method provides the

programmer a way to manage complexity and change so as to delay and

moderate the B-L upswing. However, each method has a catch, a fatal

flaw, at least one step that is a real pain to do, that people put off.

People put off this painful step in their haste to get the software

done and shipped out or to do more interesting things, like write more

new code. Consequently, the software tends to decay no matter what.

The B-L upswing is inevitable.

Dr. Berry goes on to give the following examples:

| Software Method | Pain

|

|---|

| Build-and-fix | doesn't scale up

|

| Waterfall Model | it is impossible to fully understand and document complex software up front

|

| Structured Programming | Change is a nightmare: patch or redesign from scratch

|

| Requirements Engineering | Haggling over requirements is a royal pain.

|

| Extreme Programming | Writing adequate test cases is a pain

|

| Rapid Prototyping | We can't bear to throw away the prototype!

|

| Formal Methods | Writing formal specification, and verifying it, may be a pain. Changing requirements is definitely a pain.

|

| Code inspections | Documentation prep for inspection is a pain; nobody wants to be inspected.

|

| "Daily Builds" | Testing, regression testing, and possibly reworking

your latest change to not break someone else's latest change is a pain.

|

My goal for this course is to maximize your learning while minimizing your

pain.

Lecture 10

HW#3: first Sprint

Weekly Activity Reports

- Due weekly on Sunday 10pm, starting a week from this Sunday

- Keep a casual log of your project time

- Spend 5 minutes/week giving an individual report on what you did

- Send to jeffery@uidaho.edu, not my gmail

- Format described in the CroftSoft WAR template

- Subject line must say exactly:

[CS383 WAR] name, date with date in mm?/dd?/yyyy format so I can file them. I promise to

delete them otherwise.

- Send text in-line, not in an attachment.

- You may (and should) include links that point at your work

(usually, in the github repository).

- purpose

- Establish a record of your contributions to the team each week.

Do you know what your personal commitments are in each sprint,

and are you doing something about them?

- graded

- from 1-4, 4 being an "A"

- format

- I need to be able to read these really easily. Plain text in the

body of the e-mail is better than an attachment.

- granularity

-

The point of weekly reports is to tell me what you

are doing; probably an upper bound ought to be 2-3 subteam members can submit

a joint report on their activities.

- redundancy

- If you do submit joint work,

I only want one copy, so if you are sharing report text, I want a

single submission with all contributors' names on it.

- persistence

-

There needs to be a better mechanism for keeping these reports, besides me

just shoving them underneath my pillow. Like: put them in a subdirectory

under your doc/ in your repository.

- sharing

- some reports contain information that would be useful if it

were visible to the entire team

- privacy

- Some reports might contain information that should be for my eyes only.

Upshot: comments and suggestions are welcome; I am going to tweak/improve

what we are doing with weekly reports.

Lecture 11

Github

My github id is cjeffery. Please add me to your teams.

Feedback on HW#2

- Tip for groups: document assembly burden needs to be shared

- Group submission assemblers report the time burden is too large,

figure out how to streamline and spread out the load.

- Time to unpack/print has to be minimized

-

- one PDF per person per assignment default

- images into LaTeX documents into PDF

- Turn in one attempt...or document the difference

- If I can't get grading burden down enough, I will revert future

assignments to single submission.

- Avoid blank lines connecting UML entities.

- use case diagrams have blank lines connecting actors to use cases,

but the lines connecting use cases to other use cases should generally

have <<uses>> or <<extends>> on them.

- Avoid mixing UML diagram types

- Rectangles/classes/major subsystems/components do not belong in

use case diagrams. Just because plantUML will do it does not make it OK.

- Put your images into latex documents

- You will have more control over what I see, and whether it is legible and

well-formatted, if your images are embedded within a PDF and preferably,

interated with supporting text that expands/explains the image content.

Some Big Concepts

- In software engineering, modeling is the art of constructing a

simplified representation of a domain, which portrays those aspects

of its essence and behavior that are needed for a given application.

- Big complex systems get modeled as a set of subsystems

- Big complex designs get drawn using multiple views

- UML relies heavily on object-oriented principles. If you have

programmed in C++ does that mean you understand OOP?

Let's expand the discussion of use cases to a discussion of software

specifications.

Per Wikipedia, what I might informally and casually call a software

specification is typically and more formally called a

Software Requirements Specification, or SRS for those of you who like

TLA's. Dr. Oman, our department's reigning software engineering expert,

calls them SSRS (Software and Systems Requirements Specification),

potentially including hardware or other aspects of the system besides

just the software.

It can be argued

that developing the initial natural language prose document is not the

software engineer's job, but instead the customer's; in practice, however,

the software engineer frequently has to help, or do the customer's job to

some extent. In any case, from an existential point of view, unless we

were to choose a project with an extant specification, we must develop one.

There are IEEE standards for requirements specifications. Wikipedia's

definition says the requirements specifications includes a set of use

cases and in this class you can say that they are a prominent part of

the requirements specifications development. Based on the IEEE standards,

we have the infamous-yet-improved

LaTeX edition of the CS 383 SRS Template.

Introduction to UML

Spend 5-10 minutes surfing

http://www.uml.org/#UML2.0

and then read the

Crag Systems UML Tutorial Chapters 1 and 2.

A supplemental (non-required) reading resource for the diagram types covered

in this class can be found in the middle chapters of "Programming with Unicon",

where object-oriented features are being presented.

UML stands for Unified Modeling Language. A "modeling language" is not

a programming language, although some efforts have been made to "compile"

UML diagrams down into code.

UML was created when 3 very successful software engineering diagramming

gurus banded together to wipe out the other 12 software engineering gurus.

Actually, there was a serious need to create a common notation; prior to

that, software engineers that worked with one guru's diagrams might not

easily be able to read or understand software designs drawn by another

software engineer who had been trained using another guru's diagrams.

In CS 383,

we care about ~4 common kinds of diagrams, starting with use case diagrams.

Most other UML diagram types would be used in specialized domains.

- use case diagrams

- document how human users and other "external entities" perform tasks

using the software system that is to be built.

- class diagrams

- document major application domain entities whose representation in the

system will include state and behavior. These diagrams document the

associations, or relationships, between classes. At implementation time,

there may be many implementation classes in addition to whatever classes

are written to correspond to domain classes and domain class relationships.

- interaction diagrams

- depict dynamic behavior and communication between objects. Generally

more detailed elaborations and special cases of the "relationships" from

class diagrams.

- statecharts

- These are finite automata, with software engineering semantics added.

There are states, events, and behavior that goes on during states or events.

Interpersonal Communications: Some Rules of Engagement

- 0. Behave Professionally

- If you intend to have a career as a computer scientist, this starts with

behaving like a professional: use no profanity, work

hard, behave ethically, be honest, and do what you say you will do.

If you can't behave professionally, you can't become a decent software

engineer: please drop the course.

- 1. Respect your classmates, even when you disagree or they are wrong.

- "Treat others the way you would like to be treated" - Jesus. This starts

with being polite and/or courteous to teammates, but goes farther. No

one should disrespect your teammate(s) publically; group leaders

should be especially careful about this. If you have a problem

with one of your team member's contributions, discuss it with them

privately. If you cannot resolve it through polite discussion with

the individual, discuss it RESPECTFULLY within your group, and if

there is a problem that can't be resolved internally, see me. Part

of your grade will be based on whether I determine that you respected

your classmates or not.

- 2. Accept group decisions even when you disagree.

- "The Needs of the Many Outweigh the Needs of the Few...or the One" - Spock.

There has to be some mechanism for making decisions, whether it is

democracy, dictatorship, or whatever. Those decisions should be made

based on what's best for the group, not what makes an individual look good.

- 3. You must include all group members in decisions.

- I want to hear no more team members who are surprised about something

that affects them.

- 4. You should do your best to contribute to your team.

- "From each according to his abilities" - Marx.

The easiest way to fail this course is to not contribute to your team.

If you do your best, make your contribution, and the team discards it,

that is not your problem or fault. If you don't do your best

to help your team succeed, don't be surprised at the grade you get.

- 5. E-mail is arguably the best medium for most asynchronous team

communications.

- See Greg Donohoe's guidelines.

Some of you millenials are more into texting or whatever, but e-mail

has a lot going for it. It is portable and multi-platform. It is

reliable and takes attachments. It lends itself to recordkeeping.

- 6. E-mail is not a good medium for resolving problems.

- I have found through many long years that e-mail does not work well

at conveying emotions. Using e-mail to try to resolve problems can

easily make them worse. Of course, sometimes you have no choice, but

basically e-mail is easily misinterpreted. Human faces and intonation

are lost, and people do not type as well as they talk. When there is

a problem, your best bet is to e-mail to setup a meeting to discuss it.

Your next best bet is to think, and rethink, what you are planning to

send by e-mail. Ask: how will this person react to this e-mail? Have

I respected them? Will they understand my situation? Will they feel

I am attacking them, or trying to help?

Example of how not to use e-mail for interpersonal communications:

From: ralph

To: cjeffery

Date: Wed, Apr 22

Subject: Carping

I'm more than a bit tired of beating you about the ears in hopes that you'll

rearrange your priorities, work habits, or whatever it takes to get your

research on track.

I'll assess the situation in a couple of weeks. If I'm still not

satisfied with your progress, I'll put it in writing.

This e-mail may have accomplished a certain motivational goal, but it did

not improve the working relationship between sender and recipient.

How to Approach Dr. J with Concerns

If you are happy with what we've been doing up to now, feel free to just

file this section in a "just in case" folder, for future reference.

My goal is to make software engineering happen.

All the requirements are negotiable. All the tools edicts are negotiable.

All you have to do is come up with a better plan, and sell me on it.

- you can speak with Dr. J privately

- you might find he is reasonable at times

- you can speak with Dr. J as a group

- Dr. J is more likely to hear a group

- you can elect a leader

- Dr. J will listen to duly appointed team leaders

Revision Control Systems

We have a lot of UML to learn, but we need to get settled on and using a

revision control system.

- Revision control systems (RCSs) are programs which track

changes to collections of files (for example, the

files that are part of a software project) over time

- you can tell who did what, and revert

to an earlier version if you get broken.

- a related genre of tool are Software Configuration Management Systems.

Configuration Management is when you integrate

Revision Control, Testing, Feature Auditing, and (OS + hardware) Platform

Adaptation and Porting.

- While SCMs are a bit too much for CS 383, revision control is life.

A Brief History of Revision Control

- SCCS

- "Source Code Control System", one of the early, proprietary revision

control systems from AT&T,

inventors of C and UNIX. Library model (check out in order to write, then

check back in) makes it difficult to overwrite someone else's code, but

does not scale very well. Cool but dangerous idea: system integration with

"make" on some Sun platforms kept you up to date automatically.

- RCS

- Early open source revision control system aptly named "Revision Control

System". Library model. Set of many separate cryptic commands

("ci", "co", etc.)

- CVS

- "Concurrent Versioning System" is the open source RCS that

defined the 2nd generation. Everyone can edit files at once, it is when

you try to check in changes that things get exciting.

- SVN

- Subversion is almost just a better CVS.

- Git

- A third generation of these systems has emerged. Git was written by

Linus, the inventor of Linux, giving it an unfair marketing advantage.

- Mercurial

- Another third generation revision control system is Mercurial (Hg).

It is said to be much faster and simpler than Git.

- Bazaar

- Another third generation RCS, haven't used it, but it looks interesting.

Handwaving at SVN

Everyone in 383 should know a bit about SVN.

Compared with earlier tools, they have these properties:

- let programmers to edit any file at any time. Earlier tools "lock"

files, allowing only one programmer to edit a file at a time.

- semi-automatically merges changes by multiple programmers; if the

edits do not conflict it is fully automatic, and if the edits are to

the same place in the program, it notes the conflict, shows both

versions, and requires the programmer(s) to resolve

the conflicts manually. Note: occasionally, automatic merging has

a spectacular problem. Do sanity checks and develop system tests

to avoid surprises.

- works on multiple platforms (e.g. UNIX and Windows) and since they are

open source, everyone can use them. Previous systems were not very

portable (RCS) or proprietary and commercial (SCCS, PVCS, etc).

- works over the internet, making it awesome for coordinating the

development of public open source projects with personnel scattered

around the world.

Major SVN Commands

SVN works using a "repository" which is a database for source files.

Unless you are creating your own repository, the first command you need

is

svn checkout projectname

which grabs a copy of a named project from the repository.

The various svn commands that manipulate the repository have the syntax

svn command [filenames...]

The other commands you need immediately for SVN include:

- svn diff [filenames...]

- Show any differences between your file and the version in the repository

- svn update [filenames...]

- Merge in any changes others' have committed to the repository.

If you have changed lines that others have changed, the conflict

is reported and both copies of the changed lines are left in for

you to merge by hand.

- svn commit [filenames...]

- Merge your changes into the repository.

- svn log [filenames...]

- Show history of changes that were made to a file or files.

There are many other SVN commands, and command-line options, that

you may find useful; read the manuals! One option of special interest

is -r tag which let's you ask for older versions from

the repository instead of the current version. This may help if the

current repository gets broken. :-) Use it with care, however; when

you go back to an earlier version, the repository doesn't think any

changes you make apply to the current version.

Similarly, there are "gotchas" to avoid if you have to move a directory

around in the SVN repository. One student just did a "mv" and then was

stuck in a "eternal SVN conflicts from hell" mode, until he found out

he needed to do new "svn add" commands for the directories at their new locations.

His GUI client interface (Eclipse) allowed him to get into this

mess and failed to warn / prevent it...

So be careful: you have been warned.

It is possible to study Git relative to the SVN commands, starting with the

GitHub Help,

git - the simple guide

and

Everyday GIT pages. I am still learning more git myself.

Here is Git's user manual.

Obvious differences between Git and SVN:

Pithy Software Engineering Quote of the Day

Design without Code is just a Daydream. Code without Design is a Nightmare."

-- attributed to Assaad Chalhoub, adapting it from a Japanese proverb.

Brief Discussion of Requirements

Dr. J agreed to drop the unlockable graphics requirement.

Dr. J agreed to drop the player==thread requirement.

Analysis - What Else Besides Use Cases

Having studied the application domain

it is time to produce an analysis model. "Structure and formalize

the requirements".

The analysis model is sometimes viewed as a three-part chorus: "functional

model", "object model", "dynamic model".

At this phase, we start talking about objects in more detail, still focusing

on the application domain, not the implementation. Domain objects can be

classified into three general categories: entity, boundary, and control.

You can use «stereotypes» enclosed in angle quotes or crudely

approximated with less-than and greater than (<<stereotypes>>)

to identify a class' category.

Alternatively, you could color code them or group them physically, maybe

separating the categories using dashed lines or some such.

Identifying Entities

Red flags:

- Real-world entities that the system tracks

- Real world activities that the system tracks

- Terms developers/users clarify/explain in order to understand the use case

- Recurring nouns in the use cases

- Data sources or sinks

| POS | Model | Example

|

|---|

| Proper noun | instance | Alice

|

| Common noun | class | Field officer

|

| "Doing" verb | operation (method) | create, submit, select

|

| "Being" verb | inheritance | is a kind of, is one of either...

|

| "Having" verb | Aggregation | has, consists of, includes

|

| Modal verb | constraints | must be

|

| Adjective | attribute | incident description

|

Boundary Objects

Red flags:

- gui controls needed for the use case

- forms the users need to enter data into

- notices and messages the system will use to inform the user

- different actors' terminals (windows/connections...)

- do NOT UML-model the actual screenshots (sketch or use an interface builder)

- use end-user terms for describing interfaces, not implementation terms

Control Objects

Coordinate boundary and entity objects. "Manage" the forward progress

through a use case. One control object per use case, or maybe per actor

in the use case.

Lecture 12

Quick Peek at Functional & Non-functional Requirements

Easy to count, hard to really evaluate.

- Team 1, 12 functional, 6 non-functional

- Knight Writers, 23 functional, 5 non-functional

- Team 3~62 functional, 34 non-functional

- I.C.Y.17 functional, ~30 non-functional

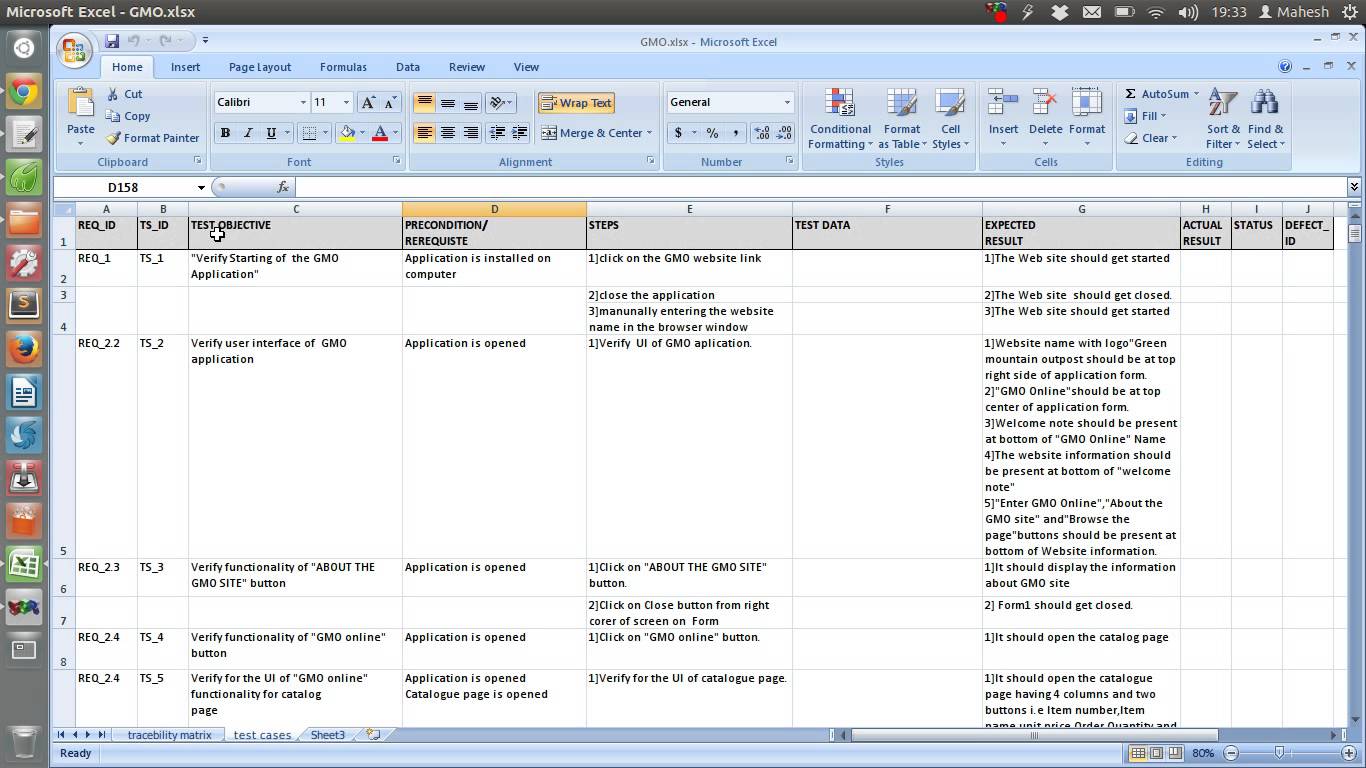

Summary of Functional and Non-Functional Requirements

New idea for today's lecture: requirements traceability. For every

requirement, be able (eventually) to point to where it is reflected

in subsequent UML diagrams and thence to code. Implies a need to

name or number (location of) corresponding chunks of design and code.

Functional.

- Capable of supporting editing, compilation, and execution of Java

programs.

- Programs will be viewed as projects and include multiple directories

and files within an overall directory. Traces to: ?.

Instructor comment: break into three requirements?

- Ability to import/export projects from User's local file system.

- Projects can be brought in from common sources such as Github, local

computer, and other IDEs. Traces to: ?.

Instructor comment: break into two requirements?

- Shared Sessions with easily controlled viewports.

- User will be able to independently control their own window and snap to

the view of other contributors. Traces to: ?.

Instructor comment: break into two requirements?

- User Chat

- User can chat with and view messages from file group, project group,

and individuals. Traces to: ?. Instructor comment: may point to additional

requirements, or user interface design that will be needed.

- User Profiles

- User will have persistent profiles including email, a profile picture,

and project ownership/membership which are viewable by all other users. User

profiles will also keep track of how many reviews the user has been involved

in via achievements. Traces to: ?. Instructor comment: "how many reviews"

needs elaboration. Users' track records and activity do indeed need pieces of

information to be stored, probably multiple pieces of information.

- User Awareness

- User will be able to see what other users are working on in a

file/project, or who are online in the case of friends. Traces to: ?.

Instructor comment: may point to additional

requirements, or user interface design that will be needed.

- Ability to Rate Comments.

- Users reviewing code will be able to up or down-vote comments, which help

make the code better. This will help to ensure code reviews to be as

constructive and useful as possible. Traces to: ?. Instructor comment:

unclear. Do you mean they are rating code with their own comments, or

rating the comments that the developer put in their code, or both?

- Project File Structure Pane

- The users will be able to see the project file structure. Also the user

will be able to move to different files by clicking on icons within the

structure. Traces to: ?. Instructor comment: probably should be titled

Project View or similar. Points to user interface design that will be

needed. (other-)User awareness requirement also applies to structure view.

- Project Forum

- Public projects will be organized in a forum that facilitates

project browsing, joining, and rating. Traces to: ?. Instructor comment:

needs elaboration. "Directory", "Catalog" and other terms seem like a

better fit than Forum. How do you find projects on Github? How do you

find projects on Source Forge?

- File Management

- Users will be able to have complete file management through a “File” menu.

File management operations will include “Saving a file”, “Loading a file”, etc.

Traces to: ?. Instructor comment: needs elaboration, we have to know what

file management operations need to be supported and what they mean. We don't

have to know whether it is a file menu, or toolbar buttons, or what.

- Chat Widget

- The Chat widget will be located off to the side of the IDE, and the Chat

widget will show people who are currently online as well as those working on

a different file for the project. Traces to: ?. Instructor comment: seems

redundant with User Chat and User Awareness requirements. Refactor/merge.

- Multiuser Support

- Projects allow up to 10 users. Rationale: 32 "could lead to a large

amount of errors". Traces to: ?. Instructor comment: discarding the ability

to serve the common case of a demonstrator in front of a room full of reviewers

or learners needs a better rationale. There may exist such a rationale.

Non-functional.

- Resource Protection

- System resists resource hogging, denial of service, tampering.

Traces to: ?. Instructor comment: Need to be as specific as possible

about e.g. what tampering resistance would constitute. Might be multiple

requirements.

- Voice Chat.

- One team argues for deletion, on the grounds that it is unnecessary

feature creep. Another team at one point may have argued for video chat.

How do we all feel?

- User Achievements

- User profile will track lines of code written, number of reviews

performed (as reviewer and reviewee), amount of time logged (per project?),

etc. Traces to: ?. Instructor comment: Too vague. No "etc." or "such as"

allowed. More broadly, the peer review of users and of code has to be

logged somewhere and visible somehow.

- Contributor History

- Project will display history of which user(s) contributed to which

code, and when.

- Syntax Coloring

- Code browser/editor will provide visual indication of syntax and

give clues on how to correct errors.

Traces to: ?.

- Highlighting of user edits

- Users will be able to easily highlight other users' edits.

In this scenario, edits by other users will be grayed out.

Traces to: ?.

Don't Blame Scrum

Article from a dude on Gamasutra

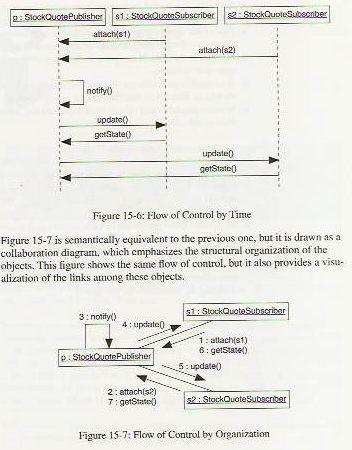

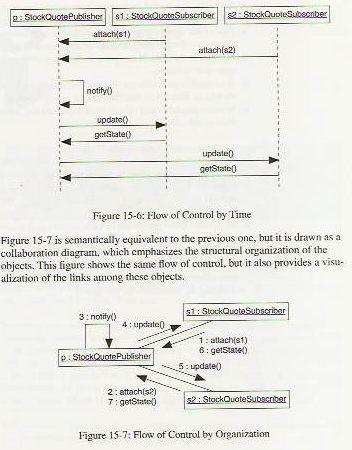

From Use Case Descriptions to UML Design Diagram Types

Former UI 383 student David Klingenberg has suggested the natural bridge to

take us from requirements to design is the Collaboration Diagram. This has

pros and cons, but let's consider learning them now, in preparation for

Class Diagrams.

| Class Diagram First | Collaboration Diagram First

|

|---|

pro:

traditional

static view of system

con:

takes potentially many iterations to flesh out details

|

pro:

draw a diagram to directly enact each use case's steps

subsequent class diagram will have lots of relationships and operations

specified for free

con:

implies collaboration diagrams are about documenting user-system interactions (they aren't)

|

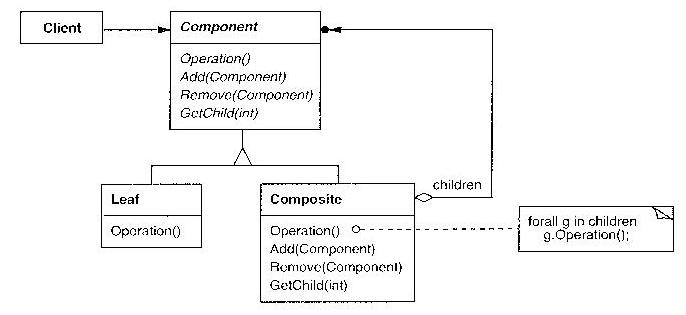

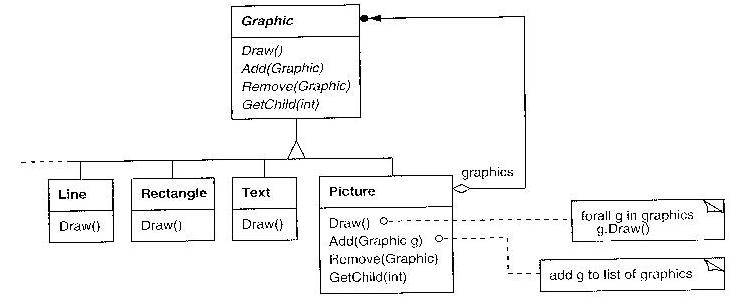

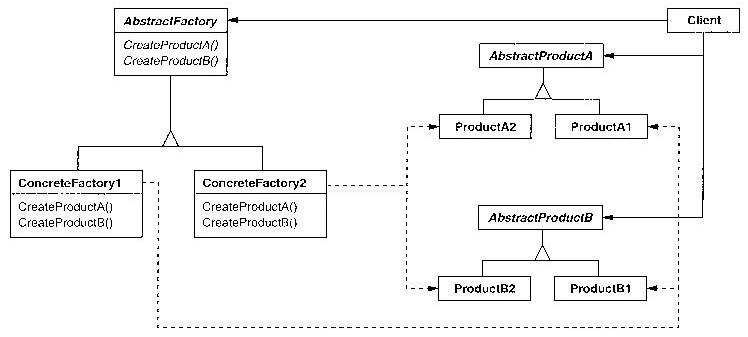

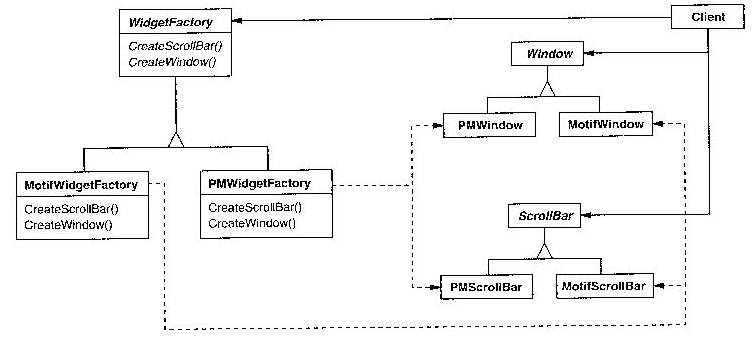

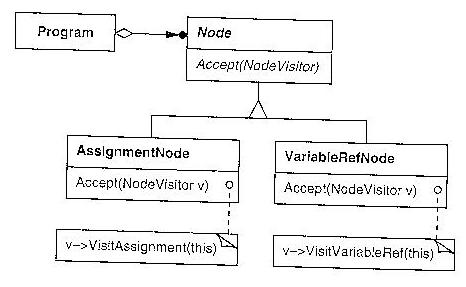

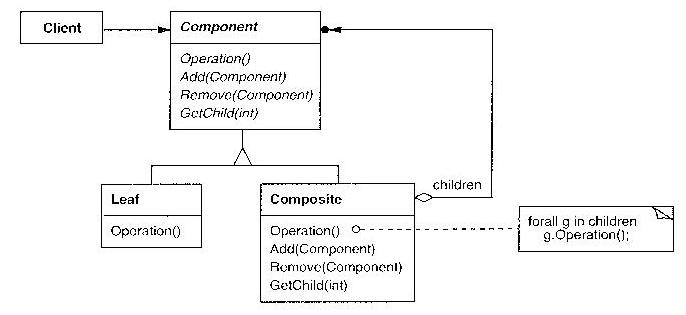

Class Diagrams

Class diagrams are the "meat and potatoes" of object-oriented analysis and

design. Class diagrams describe more detailed, more implementation-oriented

things than use case diagrams.

Class diagrams can present varying levels of detail about the classes in

them. Some class diagrams may have nothing more than the class name for

each class; others may hold the full list of fields and methods. When more

space is taken by class details, there is room for fewer classes per diagram,

so you often have "overview diagrams" that show many classes and their

connections, supplemented by "detail diagrams" that show more information

about closely related classes.

Lecture 13

No class Monday February 15, it is President's Day.

Relationships

Perhaps the main purpose for class diagrams is to identify and depict

relationships between objects that will be needed in the running system.

An association is the word we use for the most common kind

of relationship between classes.

We draw a line between the rectangles for classes to depict an assocation.

There are three major types of relationships:

- association

- includes aggregation and user defined. a run-time, extended-duration relationship. Depicted by a solid line,

with either a name in the middle or a diamond (aggregation) at the

aggregator/whole end. Typically involves

pointers among the objects' member variables.

Bidirectional by default, although pretty commonly directional.

- inheritance

- when one class is a specialization of another class. Compile-time

"is-a" relationship. Depicted by solid line with triangle at the

superclass end.

- dependency

- when one class uses another class.

modifying that other class might mean updating the dependent's code

that uses it.

Depicted by a dotted line, typically with an arrowhead.

Typically involves the temporary/transitory use of

an instance of another class within the dependent's methods,

the instance having been passed as a parameter.

It is directional (by default, although no rule precludes

mutual dependence).

Inheritance: the Un-Association

We have discussed how inheritance is not really an association, it is

a relationship between kinds of things, in the design and maybe in the

programming language type system, whereas associations are relationships

between instances (objects) at run-time. Inheritance is so vital that

many class diagrams focus specifically on a large inheritance class

hierarchy, similar to a biological taxonomy of species. Inheritance is

usually a static feature of a design, although there exist

languages in which instances can change who they inherit from at runtime.

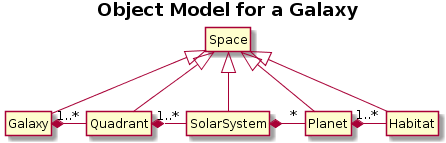

Here is an

example class hierarchy from the Lethbridge book (chapter 2):

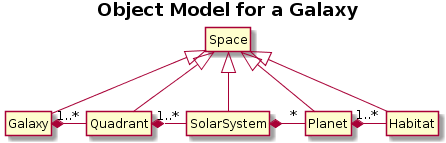

Aggregation: the Simplest Association

Aggregation, the parts-whole relationship, is perhaps the most useful

association of all of them. Many many complex things are made up of

an assembly of simpler items. There are at least two flavors of aggregation,

static and dynamic. Static aggregation is lifelong aggregation; the parts

cannot exist apart from the whole, or enter or leave the whole. Dynamic

aggregation is more like a team whose members can come and go. Here is an

example of a chain of aggregations with a galactic theme:

PlantUML:

hide circle

hide empty methods

hide empty fields

title <b>Object Model for a Galaxy</b>

Space <|-- Galaxy

Space <|-- Quadrant

Galaxy *-right- "1..*" Quadrant

Space <|-- SolarSystem

Quadrant *-right- "1..*" SolarSystem

Space <|-- Planet

SolarSystem *-right- "*" Planet

Space <|-- Habitat

Planet *-right- "1..*" Habitat

Comments:

- in well-drawn UML there would be only one inheritance triangle

- in coarse-grained classes, omit/hide empty field/method sections

- PlantUML does not always read like English intuition suggests,

vis a vis *-right- syntax

Association Details

There are many details added to associations to show more information about

the relationship. Some of these details are discussed in Chapter 5 in your

text.

- link

- just as classes have instances at runtime called objects, associations have instances at runtime

called links. Links occasionally are so important and complicated that they need

their own attributes. The main information about them is usually their lifetime, and what

instances they are connecting.

- multiplicity

- a.k.a. cardinality, it is the number of object instances per link instance in a given relationship

- qualifier

- some many-to-one relationships have a unique key used to traverse the association.

- roles

- the different ends of an association may have differing roles associated with them.

Especially useful if both ends of an association connect the same class.

- composition

- there is a special kind of aggregation called composition, which denotes aggregations

in which the component parts have no existence apart from the whole thing. The relationship

is hardwired, static, or constant. Composition

is marked using a filled diamond; hollow diamond means a regular (transitory, or dynamic)

aggregation.

Lecture 14

Scroll back a bit and talk about roles and composition.

Big Issues with UML Class Diagrams

- The associations may be lame.

- Give extra thought to them.

- Avoid missing associations.

- Avoid faux aggregation.

- Identify/define user-defined relationships from the app domain.

- The diagram may be ignored or becomes obsolete

- Cowboy coders ignore design entirely

- Change is inevitable

- It is contrary to human nature to keep diagrams up to date

during a coding binge.

- Reverse engineering tools might help.

- class2uml

- UmlGraph+

Graphviz --

not just a reverse engineering tool, but it can be used that way.

Resulting diagrams can be placed in javadocs which is cool.

- doxygraph+

Doxygen

- The mapping of diagram to subsequent code may be unnavigable.

In order to map diagram elements to code elements or vice versa,

you generally have to label elements within one phase, and refer

to those elements by name or number within the other phase. This

is broadly refered to as traceability and can apply across all

phases of software engineering.

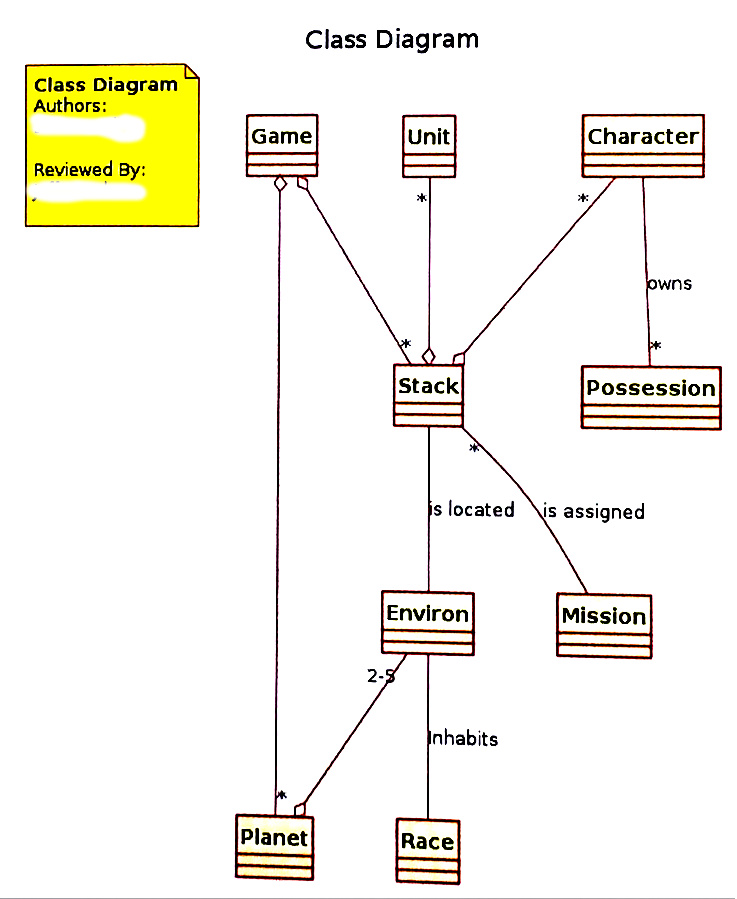

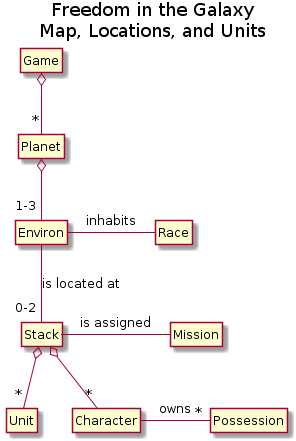

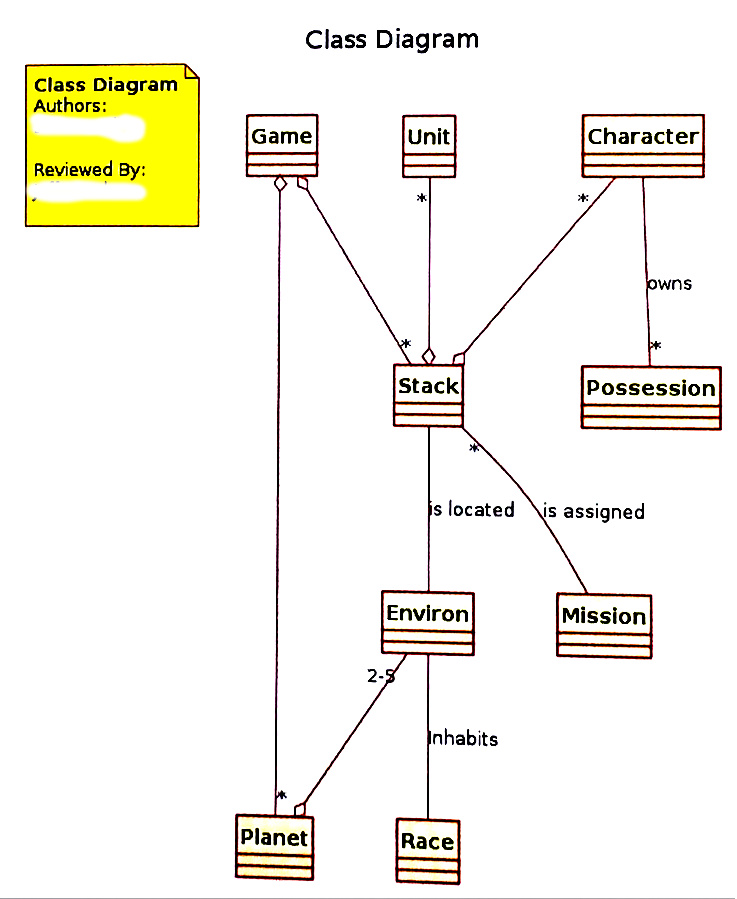

Class Diagram Example(s) from the Past

The following past CS383 student homework submission let's us talk about

many good and bad things you can do in your class diagrams.

Things to aspire to:

- have your work peer reviewed

- waste no ink

- primary axis (horizontal or vertical)

- primary association (aggregation or inheritance)

- balance, minimize distance, avoid edge crossings...

Created by:

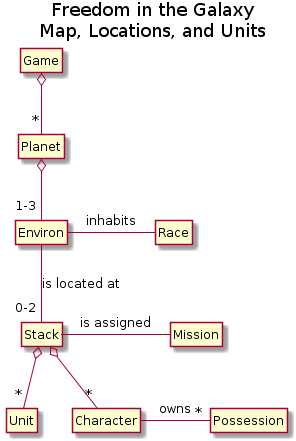

@startuml

title Freedom in the Galaxy\nMap, Locations, and Units

hide circles

hide members

class Game {

}

Game o-- "*" Planet

Planet o-- "1-3" Environ

Environ -- "0-2" Stack : "is located at"

Stack -right- Mission : "is assigned"

Stack o-- "*" Unit

Stack o-- "*" Character

Character -right- "*" Possession : owns

Environ -right- Race : inhabits

But more important than all this:

- focus on your relationships (associations)

- write down (what you understand is) the meaning of those relationships

in a supporting document.

Lecture 15

Midterm Exam

We decided on Wednesday March 9

What is Due Tonight for HW#3

10pm due date for what?

- individual's practice w/ class diagrams

- Group-coordinated assembly of useful class diagrams

- Old/past sprint backlog, results, and artifacts thereof

- Plan for the next sprint (who's doing what)

HW#4

Sprint Planning Meeting

Three parts

- Show your past sprint's backlog, and what you accomplished

- What went well, and what needs improvement

- Develop new sprint's backlog, each team member commits to

one or more items.

Lecture 16

WAR impressions

- Your WAR is to tell me what you did and how it went. You should

be specific.

- "To: Client or Project Manager" can just read "To: Dr. J" or some such

- Your activities planned list does not have to include "attend class";

I do expect that, but it is implicit in every WAR.

- Some teams seemed to think that because class diagrams were the

deliverable, that was all you had to work on last week. Really,

you need to be working off a Backlog task list, and giving me and

your teammates about 9 hours/week, either in meetings or on

task list items or on specific deliverable requirements such as UML

diagrams.

- Some of you still need to figure out how to submit WAR in a direct

"Submission Text", not Comments and not an attached .txt submission.

Ask teammates or submit .pdf or .docx if you can't find the right

button to submit a formatted WAR directly on Blackboard.

Adding Detail to Class Diagrams:

From Requirements to Software Design

We have more examples, and more detailed notation for class diagrams to learn,

but first:

- One of your big picture items right now is to work out the details of

what your project consists of. Application Domain Content.

- A second big issue is to figure out a software design that will deliver

that content.

- To produce a software design, we need more detail. How to get it?

- Asking the customer more questions

- Thinking hard and studying.

- From use case descriptions.

Although we will also be adding more details to our class diagrams, and

seeing more examples, it is also time to learn a new UML diagram type:

Sequence Diagrams.

This UML diagram type:

- illustrates the timing relationships and communications

between objects during a computation.

- can be used to elaborate on use case descriptions, taking the

sequence of steps and working out each step's details.

(coarse grained sequence diagram)

- can show interactions between objects

that are needed for any complex operation, i.e. non-trivial method

whose implementation will involve multiple objects.

Shift in perspective often identifies additional classes and methods.

Sequence diagrams are classically used to show parallel threads or processes

and their communication, but they can certainly depict control flow bouncing

around between objects within a single thread. To create a sequence diagram,

you line up all the involved objects as columns along the x axis, and use

the y axis to depict time (or vice versa).

For coarse-grained sequence diagrams, one way to organize is to use columns

like this:

- First column = actor/object who initiated use case/operation

- 2nd column = boundary object used to initiate

- 3rd column = control object in charge of use case

- <<create>> 3rd from 2nd; additional boundaries from 3rd;

entities probably do not get created except in specific situations

(they are usually "persistent" from some prior use case)

- entity objects get accessed by others, they do not access non-entities

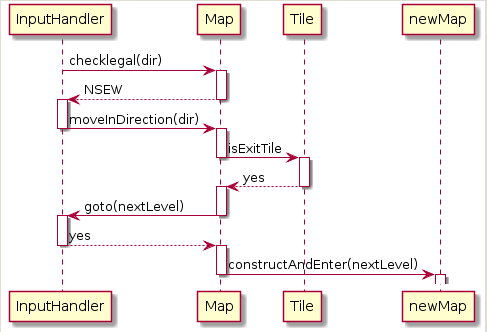

Here is an example from a previous semester's class.

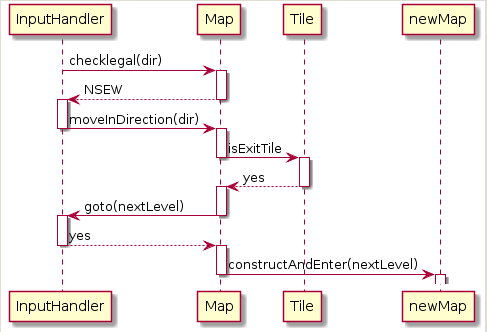

constructed from:

@startuml

hide circle

InputHandler -> Map : checklegal(dir)

activate Map

Map --> InputHandler : NSEW

deactivate Map

activate InputHandler

InputHandler -> Map : moveInDirection(dir)

deactivate InputHandler

activate Map

Map -> Tile : isExitTile

deactivate Map

activate Tile

Tile --> Map : yes

deactivate Tile

activate Map

Map -> InputHandler : goto(nextLevel)

deactivate Map

activate InputHandler

InputHandler --> Map : yes

deactivate InputHandler

activate Map

Map -> newMap : constructAndEnter(nextLevel)

deactivate Map

activate newMap

Lecture 17

Announcements

- Midterm coming up on Wednesday 3/9, right? Review on Monday 3/7.

Sequence Diagrams Resources

Discussion of Sequence Diagrams

Other than: because it is required preparation for a midterm exam,

under what circumstances would you want to do a sequence diagram?

- Look for pieces of functionality where your design has not

broken out things a fine-enough granularity yet.

- Has your team been too conservative in its interpretation of

what some requirements or use cases entail?

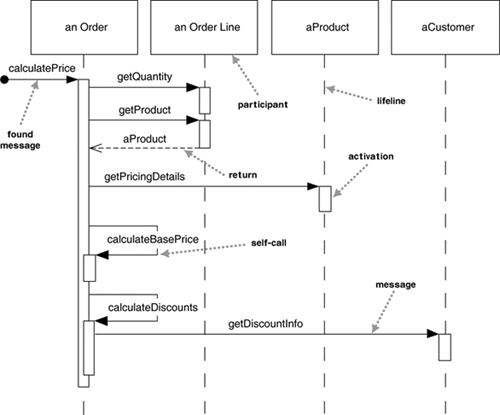

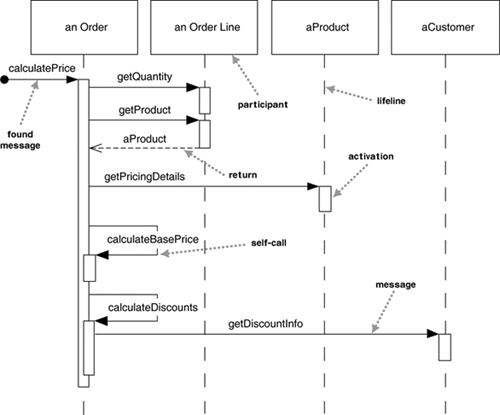

In the following example from c-jump.com, what variations on the

sequence diagram notation do you detect? Are they understandable?

Do they seem like improvements or bugs?

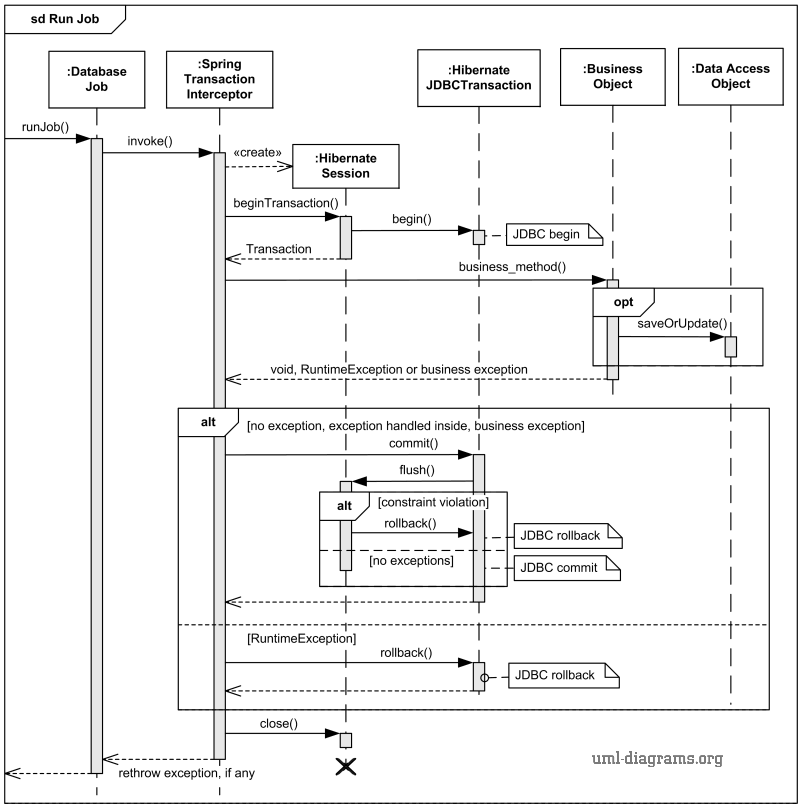

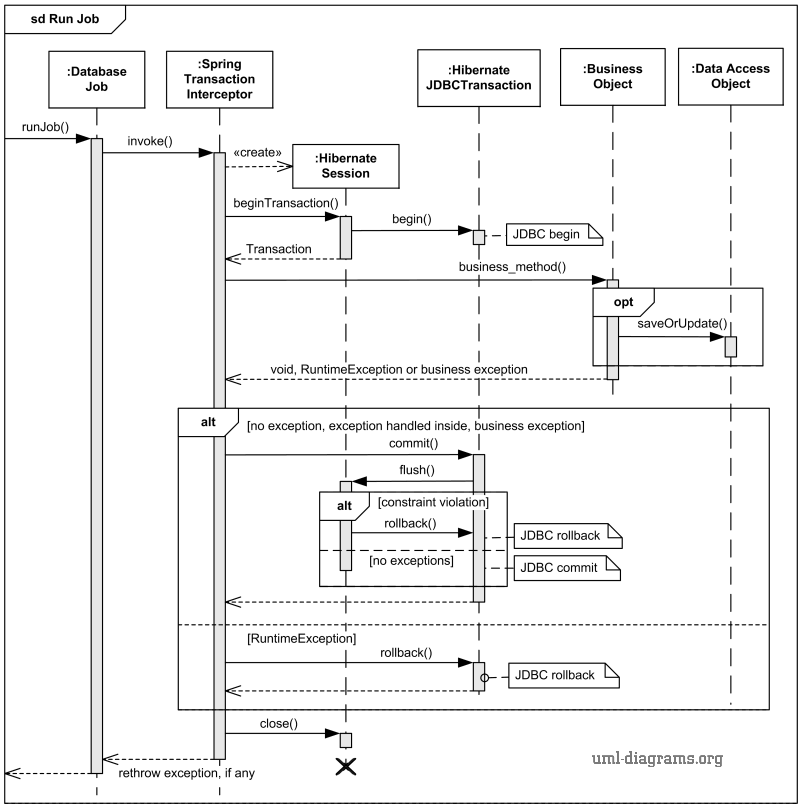

OK, what about the following example from uml-diagrams.org?

User-defined Association Examples

Here is an association you might see in a human resources application:

|

|

employee employer

Works-for

|

|

What are some example instances of this association?

Here is a more detailed version of that association:

| Person | name

SSN

address

salary

job title |

|

employee employer

*

Works-for

|

|

There is a multiplicity, since many people may work for the same company.

But what if a given person works for more than one company?

Here is an association you might need for a geography application:

Now, what are some examples of this association? Give me some instances --

and their "links". To include more information in this association, we need

to know:

- How many capitals can a country have?

- How many countries can a city be capital of?

- Does every country have a capital? Vice-versa?

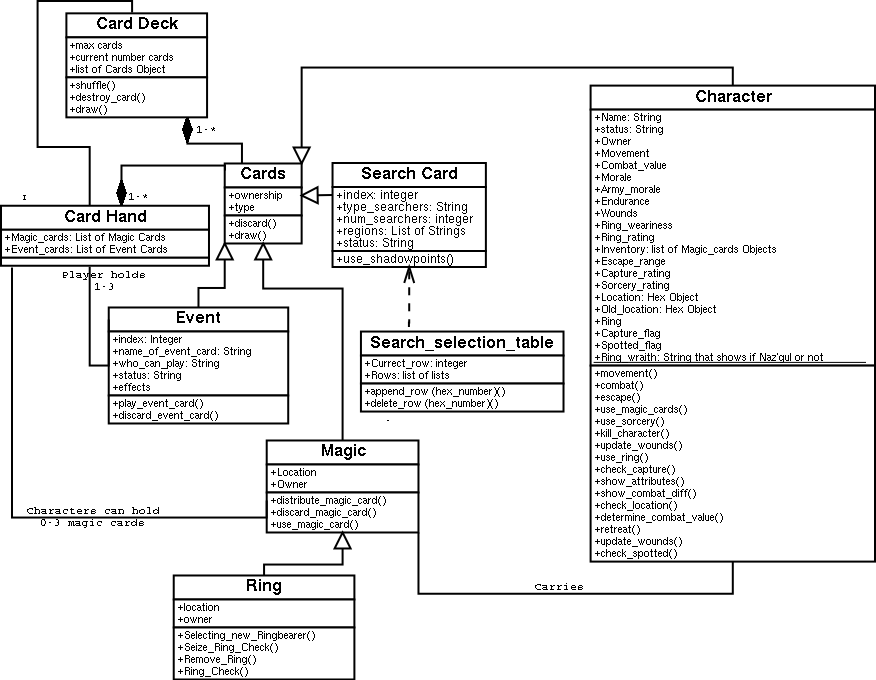

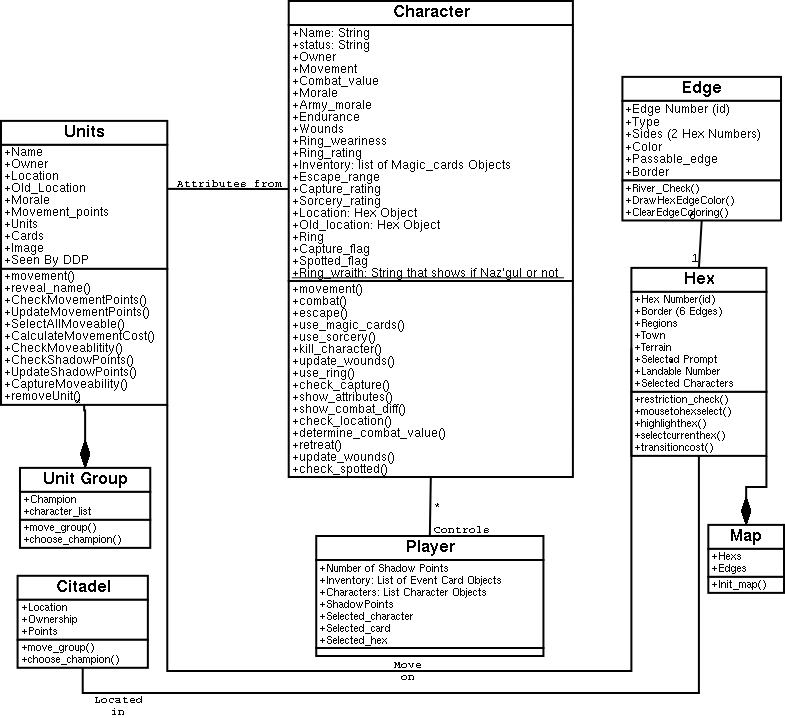

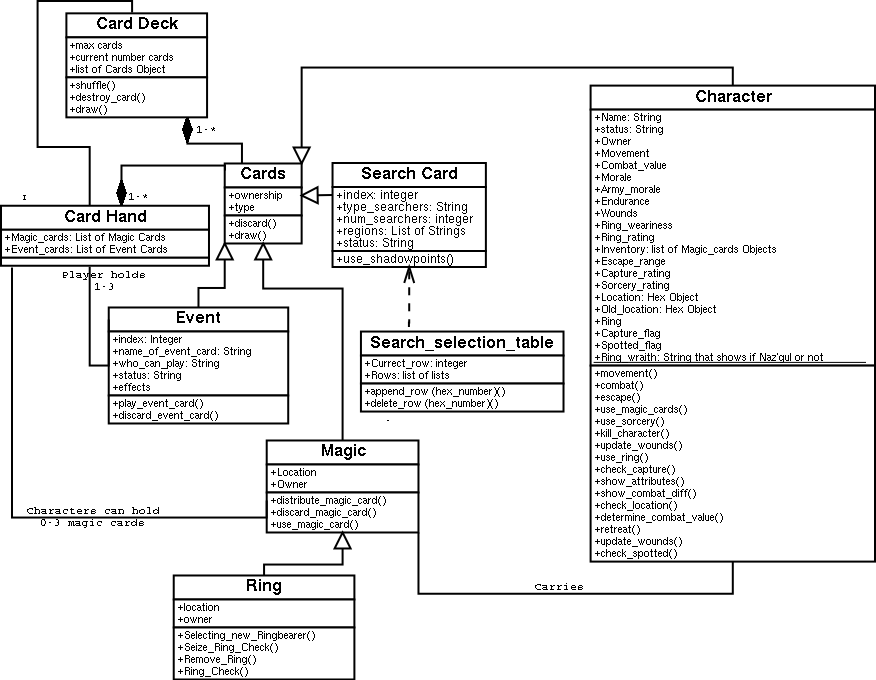

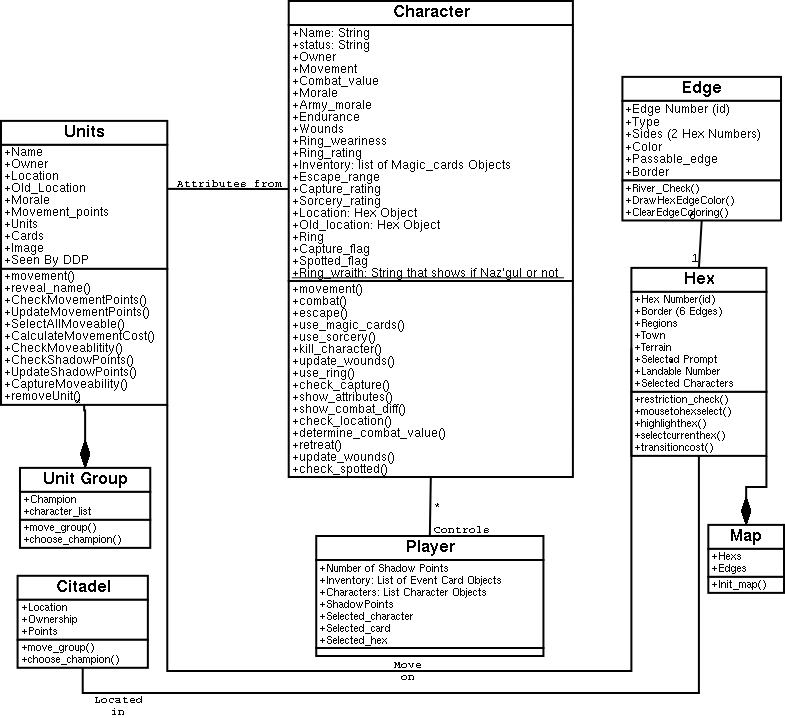

Class Diagrams Examples -- closer to home

As a larger example of class diagrams and associations, consider a previous

semester's project. They produced two, overlapping class diagrams, one

focusing mainly on

cards and card decks

and one focusing on

characters, units, and the map.

We can look at these two diagrams and

consider what previous students did right, and what needs to be changed.

We can also work, as an example, some of the classes and

relationships for our projects.

A "Good" Class Diagram...

- Has more two classes

- If you only have 1-2 classes, you don't need a diagram.

- Has meaningful associations, adequately defined

- No blank lines. No faux aggregation or inheritance. One or more

sentences of supporting English prose to define classes and user-defined

associations.

- Notationally, triangles, diamonds and so on in the correct locations

- Nothing missing, nothing backwards

- Has an appropriate focus on application domain

- Showable to a customer domain expert, not encumbered with

implementation artifacts such as standard library classes.

- Read:

http://www.agilemodeling.com/artifacts/classDiagram.htm

- Figure 9 towards the end of this article looks fishy to me. Why? How would

you fix it?

Lecture 18

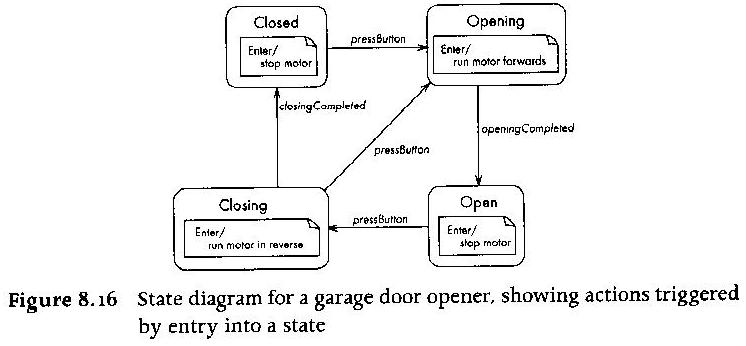

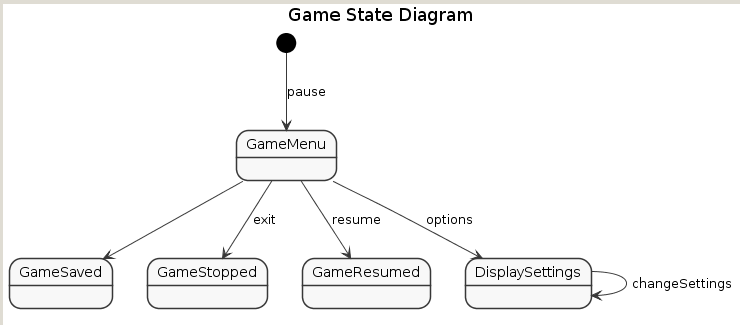

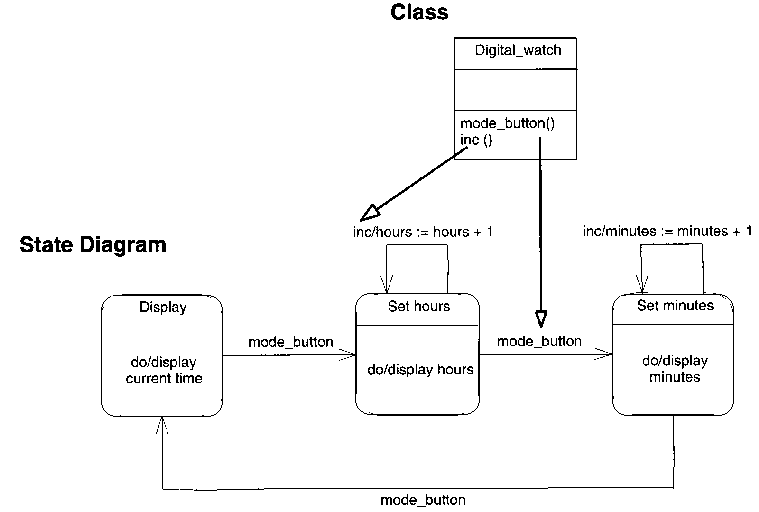

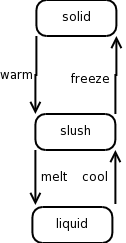

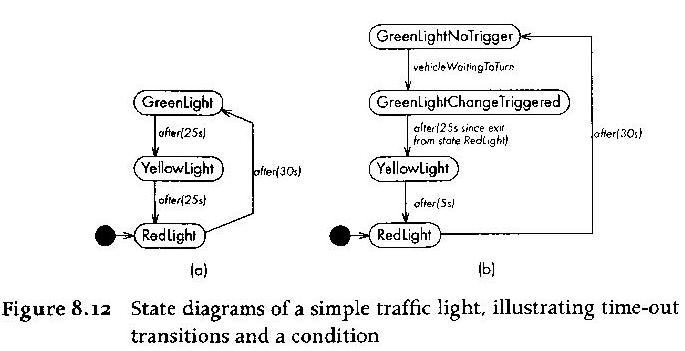

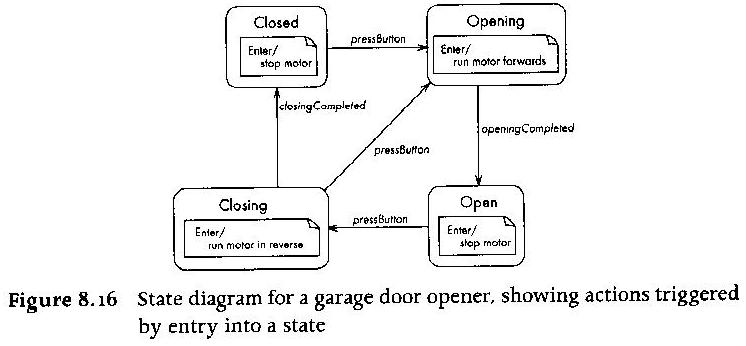

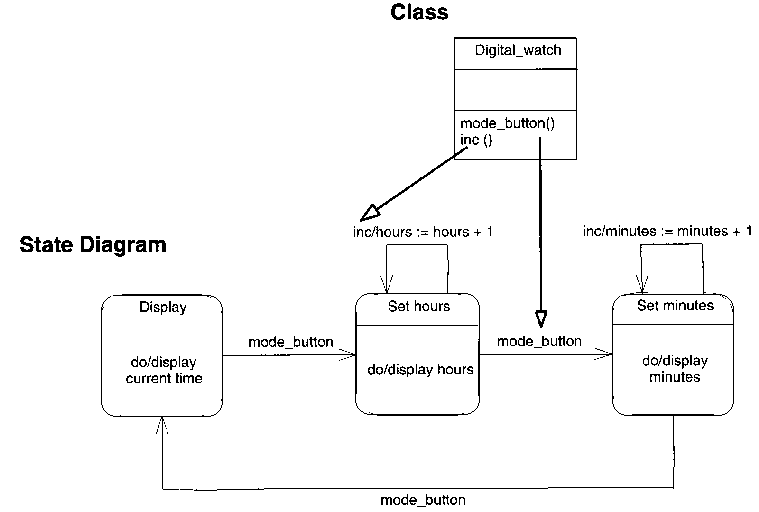

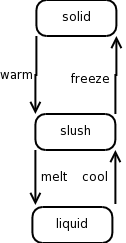

Statecharts

A statechart, or state diagram, depicts dynamic properties of a system.

A statechart consists of

- a set of states

- drawn as circles, ovals, or rectangles, with a usefully semantic

name/label inside.

- a set of transitions

- drawn as arrows from one state to another.

- a start state, and a set of final states

Statecharts are a non-trivial extension of finite automata, because:

- states may have activities associated with entry, exit, a finite task,

or ongoing while in the state.

- instead of "input symbols", transitions have trigger events

and conditions, drawn inside square brackets

- you can have a triggerless transition so long as it either has a

condition, or the state as a (completable) activity

- events may have associated actions

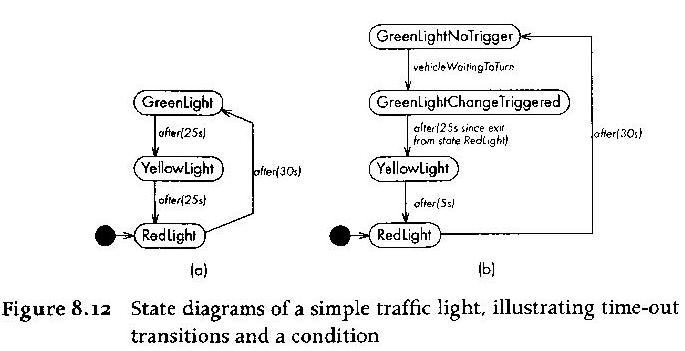

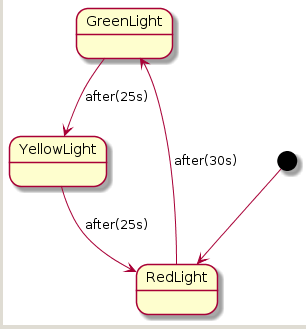

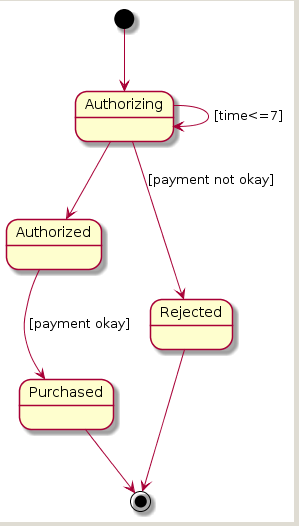

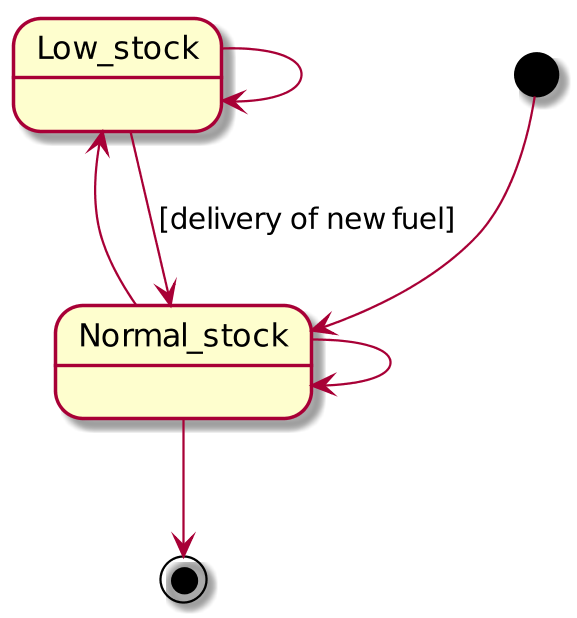

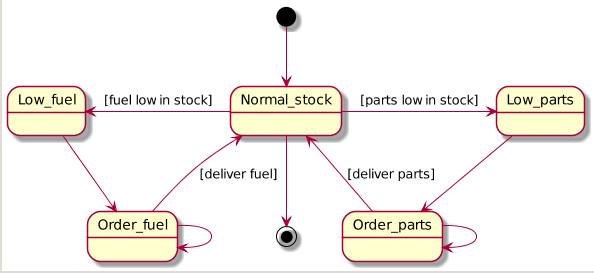

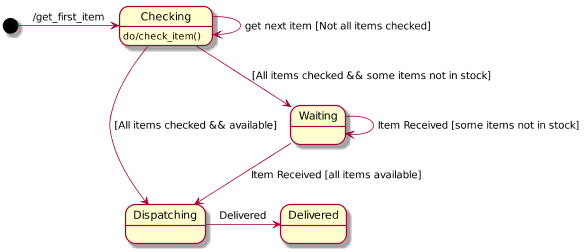

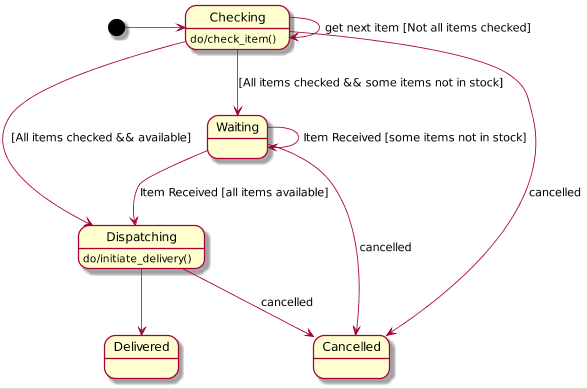

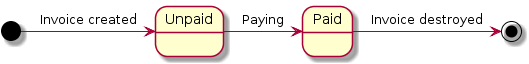

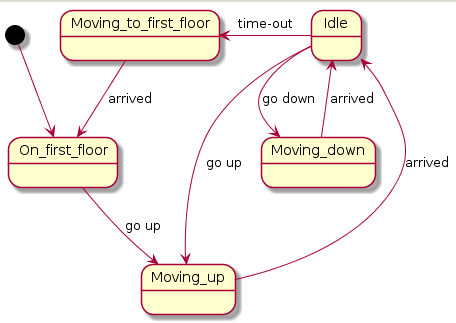

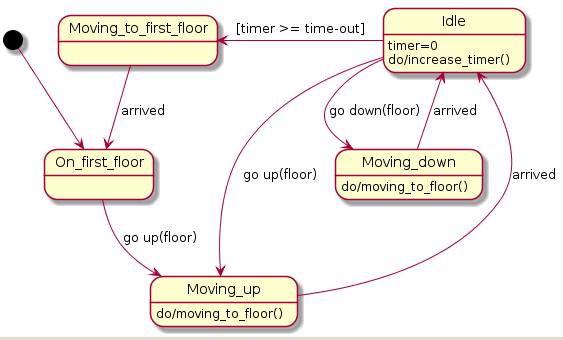

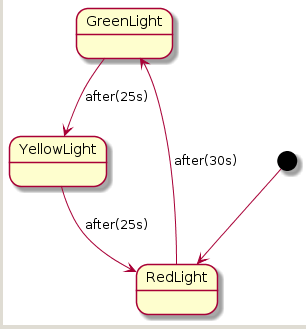

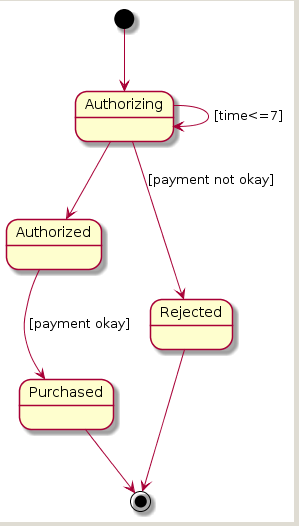

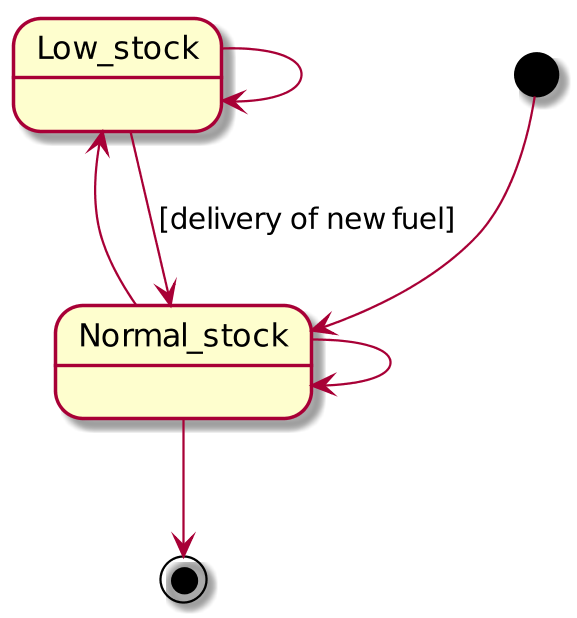

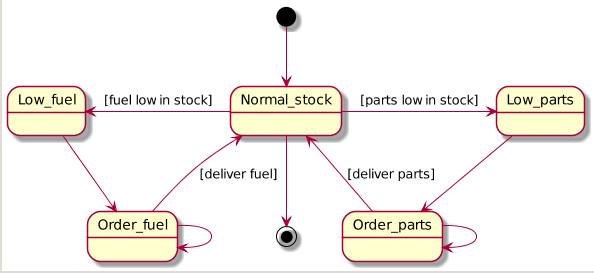

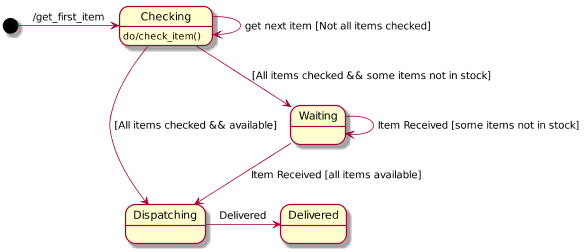

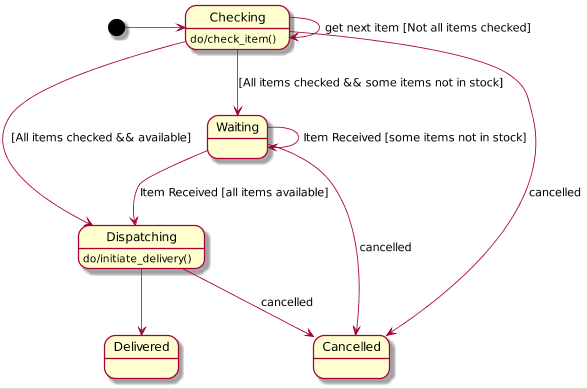

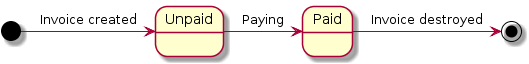

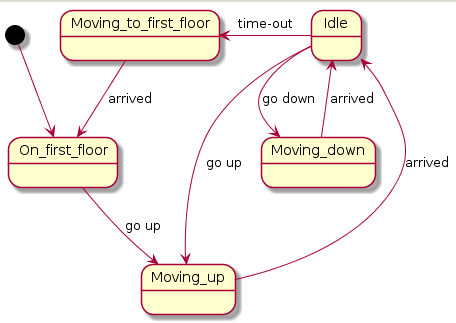

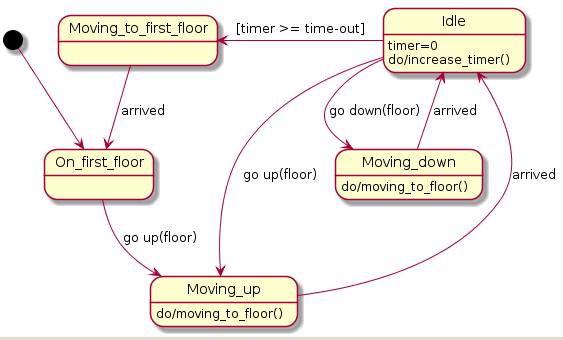

Statechart Diagram Examples

Compare these with the plantuml:

from:

@startuml

GreenLight --> YellowLight : after(25s)

YellowLight --> RedLight : after(25s)

RedLight --> GreenLight : after(30s)

[*] --> RedLight

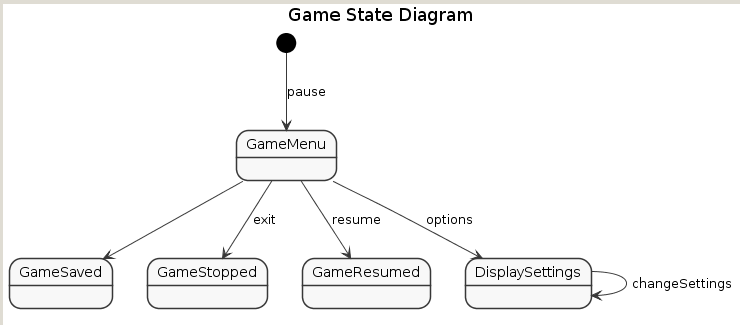

A "Good" Statechart Example

- Has more than a couple states

- Typically, transitions include cycles, or have enough transition

complexity to warrant a diagram. Not usually a simple linear

sequence or tree.

- Has a meaningful, well-defined scope -- the class(es) whose behavior

it governs are specified

- the representation of the states is

explicit (which fields, and which value ranges, denote the states).