Evolution of Cooperative Teams

Many real-world problems are too large and too complex to expect a single, monolithic intelligent agent to solve them successfully. Most accomplishments arise from team efforts. This is true not only of human accomplishments, but of the natural and artificial worlds as well. Just as we humans rely on cooperation and teamwork to achieve our most monumental accomplishments, so too do most animal species rely on team efforts for their survival, from wolf packs, to ant colonies, even down to the symbiotic relationships in the bacterial world. Similarly, our technology depends on cooperative solutions - what is a car but many parts working in tandem? And any software project beyond a few lines of code is written not as a monolithic unit, but as a set of cooperating modules, each with its own specialized role. Even the human body can be viewed as a set of interdependent and cooperating organs.

Thus, if we expect evolutionary techniques to succeed on such problems, we must give them the means to evolve cooperative solutions (commonly called teams or ensembles). As programs are expected to solve problems from progressively larger and more complex problem domains, the need for cooperative solutions will become increasingly critical. What is the most effective and efficient way to evolve this necessary cooperation?

Before addressing this question, it is worth considering the form the cooperative solutions are likely to take. My research typically considers two types of problems: problems in which there is an explicit cooperation mechanism - for example, all of the members return an answer and the majority answer is used (the cooperation mechanism is a vote); and problems in which cooperation is implicit - for example, agents exploring an unknown space (the agents explore independently, but to be effective they must cooperatively cover the entire search area).
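The two kinds of cooperation can be illustrated with a minimal Python sketch. The names `majority_vote`, `team_answer`, and `coverage` are hypothetical and chosen only for illustration; the grid-cell representation of the search space is likewise an assumption, not a description of the actual experiments.

```python
from collections import Counter

def majority_vote(answers):
    """Explicit cooperation: return the most common answer.
    Ties are broken in favour of the answer seen first."""
    return Counter(answers).most_common(1)[0][0]

def team_answer(members, x):
    """Ask every member (any callable) for an answer, then take the vote."""
    return majority_vote([member(x) for member in members])

def coverage(paths, cells):
    """Implicit cooperation: fraction of the search space visited by
    at least one agent. `paths` holds one iterable of visited cells
    per agent; `cells` is the set of all cells in the space."""
    visited = set()
    for path in paths:
        visited.update(path)
    return len(visited & cells) / len(cells)

# Three toy classifiers that disagree on where the threshold lies.
members = [lambda x: x > 0, lambda x: x > 1, lambda x: x > 2]
vote = team_answer(members, 1.5)

# Two agents exploring a 2x2 grid, with one cell visited by both.
grid = {(0, 0), (0, 1), (1, 0), (1, 1)}
covered = coverage([[(0, 0), (0, 1)], [(0, 1), (1, 1)]], grid)
```

In the voting case a single member's error can be outvoted by the others, whereas in the coverage case overlapping paths are wasted effort, so members are only useful to the team if they behave differently from one another.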


Two basic approaches to evolving cooperative teams have been used in the past. In island models, members are evolved independently and the best of the evolved members are combined into a team. In team models, evolution is applied to whole teams at once. A third common approach is boosting, a technique for adding team members that compensate for specific skill areas not present in the team. AdaBoost is one of the most commonly used boosting algorithms.
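The difference between the island and team models comes down to what evolution selects on: individual members or whole teams. The following Python sketch makes that concrete on a toy problem. Everything in it is an assumption made for illustration: a member is a list of 0/1 flags saying which test cases it answers correctly, teams combine by majority vote, and evolution is simple truncation selection with mutation only. It is not the setup used in the research described here.

```python
import random

N_CASES = 20    # hypothetical number of test cases
TEAM_SIZE = 3   # hypothetical team size

def member_fitness(member):
    # member[i] == 1 means the member answers case i correctly.
    return sum(member)

def team_fitness(team):
    # A case counts as solved when a strict majority of members solve it.
    return sum(1 for votes in zip(*team) if 2 * sum(votes) > len(team))

def random_member(rng):
    return [rng.randint(0, 1) for _ in range(N_CASES)]

def mutate(member, rng, rate=0.05):
    return [1 - bit if rng.random() < rate else bit for bit in member]

def mutate_team(team, rng):
    return [mutate(member, rng) for member in team]

def evolve(population, fitness, mutate_fn, rng, generations=50):
    """Keep the better half each generation and refill with mutants."""
    for _ in range(generations):
        population = sorted(population, key=fitness, reverse=True)
        survivors = population[: len(population) // 2]
        children = [mutate_fn(rng.choice(survivors), rng)
                    for _ in range(len(population) - len(survivors))]
        population = survivors + children
    return max(population, key=fitness)

def island_model(rng, pop_size=20):
    # One independent population per team slot, selected on member fitness.
    islands = [[random_member(rng) for _ in range(pop_size)]
               for _ in range(TEAM_SIZE)]
    return [evolve(island, member_fitness, mutate, rng) for island in islands]

def team_model(rng, pop_size=20):
    # A single population of whole teams, selected on team fitness only.
    teams = [[random_member(rng) for _ in range(TEAM_SIZE)]
             for _ in range(pop_size)]
    return evolve(teams, team_fitness, mutate_team, rng)

rng = random.Random(0)
island_team = island_model(rng)
evolved_team = team_model(rng)
```

Note that `island_model` never calls `team_fitness` and `team_model` never calls `member_fitness`: in the first case nothing rewards members for complementing one another, and in the second nothing rewards a member for being good on its own.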

In contrast, in team approaches the members tend to cooperate very well - you get a specialist for each of the problem domains. Unfortunately, the individual members tend to perform relatively poorly. And it's not uncommon to get 'hitchhikers' who contribute nothing to the solution, but just take advantage of the team's overall success.

We have developed an approach we call Orthogonal Evolution of Teams (OET) that generally overcomes these weaknesses. It produces members whose individual performance is better than the members generated via team approaches and that cooperate better than individuals produced via island approaches.

In addition, we have shown that for multi-agent systems, OET teams scale better and are more robust with respect to changes in the teams' composition than either island- or team-generated sets. This has two important implications. First, it means that with OET it's possible to train relatively small teams, then increase their size and still have reasonable performance (scalable). Second, it means that if the composition of a team changes, due to loss, upgrades, etc., OET teams will continue to perform well (robust with respect to team composition).

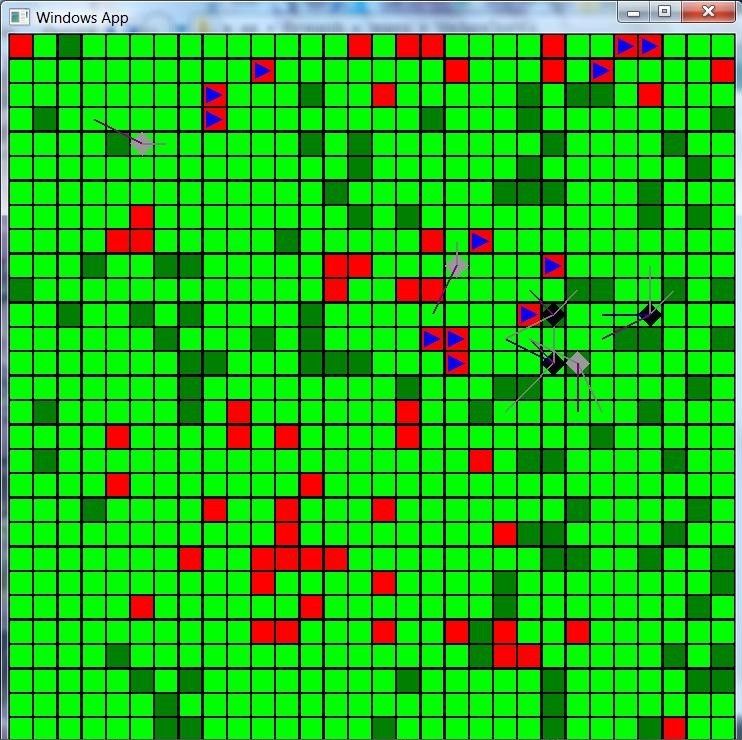

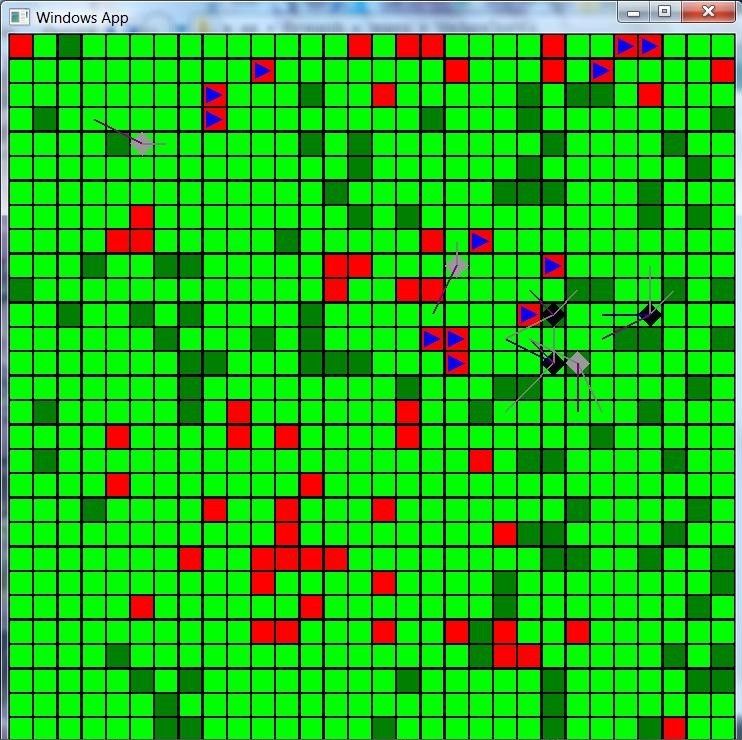

Using cooperative evolution we have evolved hyena behaviors that allow them to successfully coordinate to drive away a pair of lions. The lions' behavior is fixed: they don't move unless they are outnumbered by hyenas by more than 3:1. Hyenas are 'injured', i.e. lose fitness, if they get too close to the lions and are rewarded for approaching the zebra. But the penalty for approaching the lions is much larger than the reward for approaching the zebra, so maximum fitness requires coordinating to drive the lions away.
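The incentive structure described above can be sketched in a few lines of Python. All of the numbers and names here (`LION_RADIUS`, `ZEBRA_RADIUS`, `INJURY_PENALTY`, `ZEBRA_REWARD`, and both functions) are hypothetical; the only things taken from the description are the 3:1 rule, the penalty near lions, the reward near the zebra, and the fact that the penalty is much larger than the reward. Whether the simulation counts all hyenas or only nearby ones when applying the 3:1 rule is not stated, so the count is simply passed in as an argument.

```python
import math

# Hypothetical constants, chosen only so that penalty >> reward.
LION_RADIUS = 5.0       # distance within which a hyena is 'injured'
ZEBRA_RADIUS = 2.0      # distance within which a hyena is rewarded
INJURY_PENALTY = 10.0
ZEBRA_REWARD = 1.0

def lions_retreat(n_hyenas, n_lions):
    """Fixed lion behavior: move only when outnumbered by more than 3:1."""
    return n_hyenas > 3 * n_lions

def hyena_step_fitness(hyena, lions, zebra):
    """Fitness change for one hyena in one time step.
    Positions are (x, y) tuples."""
    score = 0.0
    if any(math.dist(hyena, lion) < LION_RADIUS for lion in lions):
        score -= INJURY_PENALTY   # too close to a lion
    if math.dist(hyena, zebra) < ZEBRA_RADIUS:
        score += ZEBRA_REWARD     # close to the zebra
    return score

# A lone hyena at the zebra while a lion is still there loses fitness overall.
guarded = hyena_step_fitness((0.0, 0.0), [(1.0, 0.0)], (0.0, 1.0))
# Once the lions have been driven off, the same position pays a reward.
unguarded = hyena_step_fitness((0.0, 0.0), [(100.0, 0.0)], (0.0, 1.0))
```

Under these assumed numbers, approaching the zebra alone is a net loss, so the reward only becomes reachable once enough hyenas act together to make the lions move.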

Video of sample results is available here. There are two lions (yellow circles; hyenas that enter the larger, fainter yellow circles around them are 'injured') and 14 hyenas (smaller brownish circles). In the early generations the hyenas evolve behaviors that keep them from approaching the lions too closely. After ~1400 generations the hyenas have evolved to make a coordinated rush at the lions.

Return to Terry Soule's Homepage.